- minor fixes and improvements for Llama 3.2 standalone nb

d-kleine closed this 1 month ago

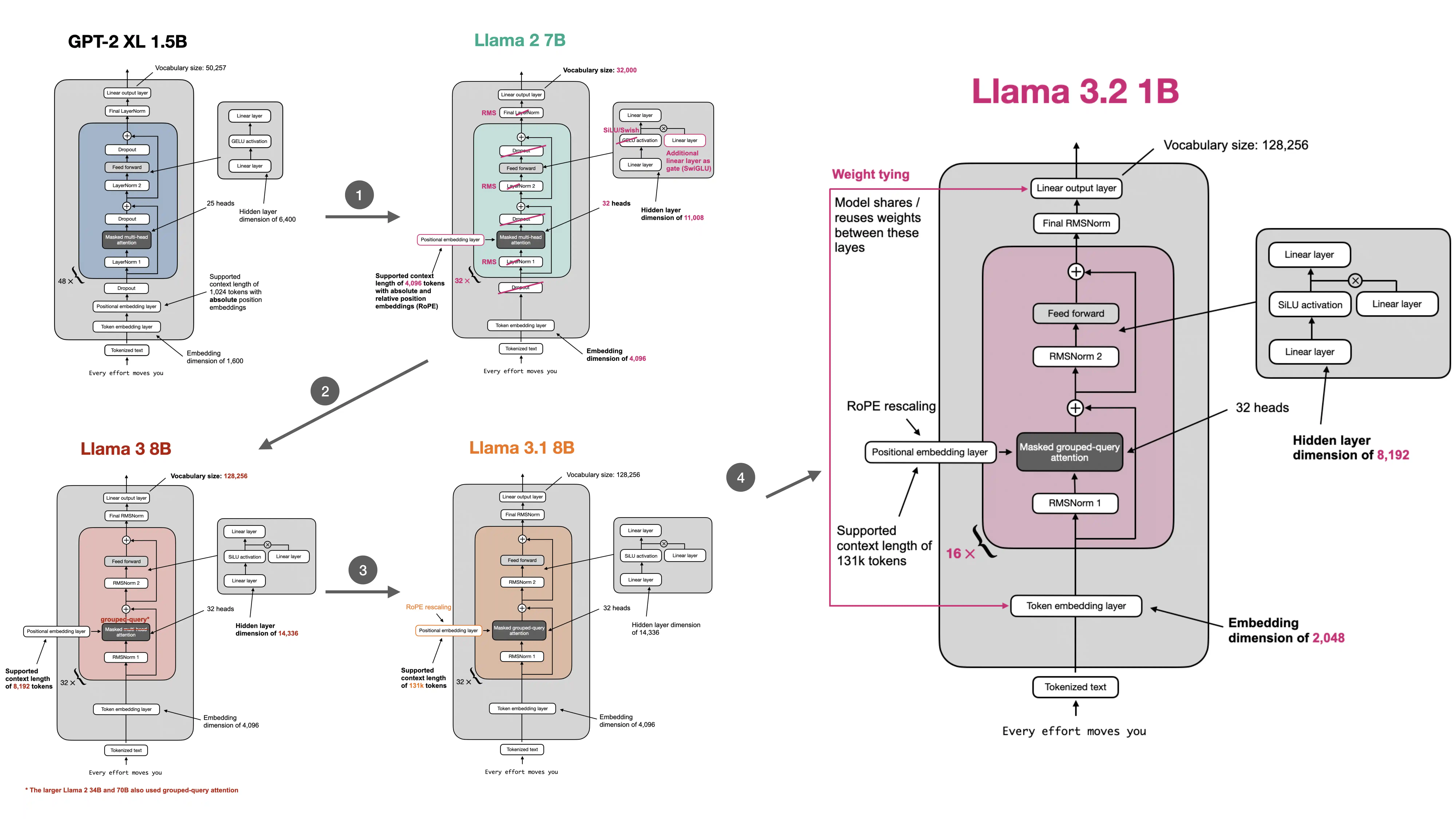

If you find some spare time, it would be great if you could implement these formatting changes to this figure:

Bold print seems to indicate what has been changed from before, so

I really enjoy these notebooks and figures around GPT-2 and the Llama 2/3 models here. It's a great round-up of the contents of the book both technically (code) and visually (figures)!

Good catch regarding the 72. I also reformatted the RoPE base as float to make it consistent with the other RoPE float settings. (I synced the figure, but it may be a few hours until the change takes effect due to GitHub's caching.)

@rasbt Thanks! I just took a look at the updated figure; the "32 heads" of Llama 3 8B are still in bold print.

Also, I noticed another piece of information in the figure that might need an update:

You could also add the information that Llama 2 already used GQA for the larger models (34B and 70B) for improved inference scalability. I think this is interesting information for the figure.
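To illustrate why GQA helps inference scalability, here is a minimal sketch of the per-token KV-cache size in one layer with and without grouping. The helper name `kv_cache_elements_per_token` is hypothetical (it is not from the notebook), and the dimensions are Llama 2 70B-style values assumed for illustration:

```python
def kv_cache_elements_per_token(emb_dim, n_heads, n_kv_groups):
    """Count the key + value elements cached per token in a single layer.

    Hypothetical helper for illustration only; not part of the notebook.
    """
    head_dim = emb_dim // n_heads
    # One key vector and one value vector per KV group
    return 2 * n_kv_groups * head_dim


# Assumed Llama 2 70B-style dimensions: 8192-dim embeddings, 64 query heads
mha = kv_cache_elements_per_token(emb_dim=8192, n_heads=64, n_kv_groups=64)  # every head has its own K/V
gqa = kv_cache_elements_per_token(emb_dim=8192, n_heads=64, n_kv_groups=8)   # 8 query heads share one K/V

print(f"MHA: {mha} elements, GQA: {gqa} elements, reduction: {mha // gqa}x")
```

Under these assumptions, sharing each key/value pair across 8 query heads shrinks the cache by the same factor of 8, which is where the inference benefit comes from.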

Thanks, I will try to update it in the next few days!

Looks like I had fixed the "heads" in the Llama figure but then forgot to apply it to some of the figures where it's used as a subfigure. Good call regarding the RoPE, by the way. Should be taken care of now!

Looks great, thanks! Superb comprehensive overview btw!