This is an interesting example. But have you made sure that your OPPONENT_OBS in your postprocessing fn is built for the correct number of opponent agents? I'm only seeing you do this (loss not initialized yet):

sample_batch[OPPONENT_OBS] = np.zeros_like(

sample_batch[SampleBatch.CUR_OBS])which is only 0s for one opponent. That's why you get the shape error: You are passing in data for 1 opponent, instead of 3.

What is the problem?

ray 1.0.1, Python 3.7, TensorFlow 2.3.1, Windows 10

Hi!

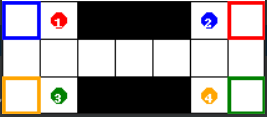

I am trying to solve the following environment with the MAPPO (PPO with a centralized critic)

Reward For each time step a agents is not in its final position, it receives a reward of -1 For each time step a agents is in its final position, it receives a reward of 0

Actions

Observation For each agent an obs consists of:

Resulting in the following observation and action spaces of the environment:

_action_space = spaces.Discrete(5) observationspace = spaces.Box(np.array([0., 0., 0., 0.]), np.array([1., 1., 1., 1.]))

One episode lasts for 50 time steps. The goal for all agents is to get into their final position (cell which has the same colour as the corresponding agent) as fast as possibe and stay in there until the episode ends.

I was able to solve this environment with 2 agents, following rllibs’s centralized critic example.

In order to handle a increased number of agents, I made following changes to the example code (see section "centralized critic model", I am only using the TF version):

This results in the following error message:

Did anyone manage to adjust the number of agents in the centralized_critic.py example or has an idea what else I have to change?

Thank you in advance!

Cheers, Korbi :)

Reproduction (REQUIRED)