@sven1977 Adding a little more detail.

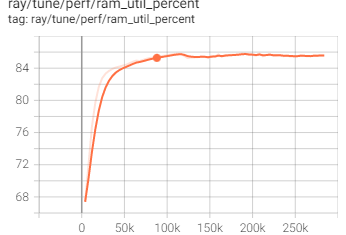

The following image show single champion:

- As can be seen there is an initial growth and plateau when starting league from scratch related to the None (default) policies used in training when no past selves can be used for matches.

- The second spike in training is when we move from the None Policies to past selves

- (Example has 8 Challengers Polices - 8 None (default) Policies - 1 champion policy) --- Optimizations can be done here but really do not address the limitation.

Each Worker of which there is 60 has a RLLIB PolicyMap which is configured to cache 10 Policies in memory. Once capacity is reached the oldest polices are mapped to disk and loaded next time used.

Note: In a case of cache capacity for policy of 10 we will transition items to and from disk lowering throughput but preserving memory at reasonable values,

- If the cache was 20 then each map would hold all possible policies for run

Going back to the PolicyMap - this is at the worker level as noted above and not at the node level leaving us with multiple duplications of the above noted policies per worker.

- Example above with 60 workers would then have a total of ~600 policies in memory on a single node (60 workers with 4 cpus per env). You can see a memory growth here for items not using gpu for inference

Manageable for single champion and can increase past 60 but limits will clearly be reached... Multi Agent Training has similar constraints but are less likely to experience in simple agent on agent setups. NvN agent training would assume to hit similar limits as champion vs multiple challenger policies

Let's show an example for small league where there is multiple champions.

This setup extends and run six champions which has a mix of Main-Agents and Main-Exploiters (with various selection stratigies).

- uses 6 128 core/256 thread, 250GB mem, 1V100

- There are 8 possible challenger slots

So the possible combination of policies in a given worker are:

- 1 champion policy

- 8 Challenger policies for Champion 1

- 8 Challenger policies for Champion 2

- 8 Challenger policies for Champion 3

- 8 Challenger policies for Champion 4

- 8 Challenger policies for Champion 5

- 8 Challenger policies for Champion 6

- 8 Challenger policies for None (default)

- Note: similar to the above there are optimizations that can be performed but again do not address growing differences. Additionally, this is intended for HLP setups.

Now in any given Match there will be only 1 champion + 8 Challenger policies used... Which can be handled by the policy map. So we can cache items which helps at cost of the throughput but sure that allows us to run. However, in the example above we get to less than 10% memory free on nodes which can cause stability concerns in ray/rllib. (Note: Ray will post a warning at every report print to console for this)

- So four of the six arenas (RLLIB Trials) in setup fail eventually with unknown work faults.

- Reducing the challengers to 5 allows to run longer in this case... though we could also set lower cache capacities

looking to see if there is an implementation detail that I am missing here for how to implement large groups of varying policies (act, obs, model - optionally include rewards and dones) for setup.

Search before asking

Ray Component

RLlib

What happened + What you expected to happen

Currently exploring heterogenous league-based training with RLLIB and running into scalability issues/implementation issues which are noted bellow:

League Terminology Used In this Post:

env_runnerin sampler is implemented, The resetting/initializing running environments for challenger policy between training iterations/matches (train step/callback for training results) must occur at the end of the first episode of the next training iteration/match. This leaves the next training iteration/match as it were with samples from previous tournament match. Work around in provided example script.https://github.com/ray-project/ray/blob/596c8e27726075623d51428c549304bc0f141f8d/rllib/evaluation/sampler.py#L963-L969

policy_map_capacitysettings which in example is set to 2 to reduce to min policies in memory. However, when scaled in the CartPole-v0 and MountainCarContinuopus-v0 examples across 250 workers this can take 250GB of memory. Question is there a recommended way to accomplish the multiple policies in each worker such that it supports Heterogenous league like runs where challengers are constantly loaded in without blowing through memory.128 cores, 256 threads, v100, 256GB Memory

Note: I am willing to provide more involved examples and answer questions as needed to resolve mapping issue.

Question is it possible to have PolicyMap per node vs per worker? It is almost like the policy map should be a shared resource on a node vs being duplicated per worker on the node.

Versions / Dependencies

Ray Version:

Tensor Flow

Python

OS

Reproduction script

Anything else

No response

Are you willing to submit a PR?