Open selected-pixel-jameson opened 2 years ago

Upon further investigation, it appears this error is thrown when calling

return rgb2gray(image_or_path) on line 257 of goldberg.py

Thanks again!

I've got my environment set up using scikit-image==0.17.2, and I'm now able to run the code as-is from the master branch. However, the search results are still not correct.

I'm using Elasticsearch version 7.16 with version 8.0.0 of the elasticsearch Python library.

I'm simply trying to get the indexed image returned when I search with the same image or a similar one, but the images returned are neither similar nor exact matches.

FYI, if there is someone out there who would be interested in helping me with this, I'd be more than willing to tip them or arrange some other sort of payment.

I changed the elasticsearch version to 7.0.0 and reindexed all the images after I got the scikit-image version to 0.17.2 and it is now working! 🎆

Upon further testing, this seems to be good at finding the exact same image and images with extremely slight variations, but it seems to run into issues once color variations come into play. Here is an example.

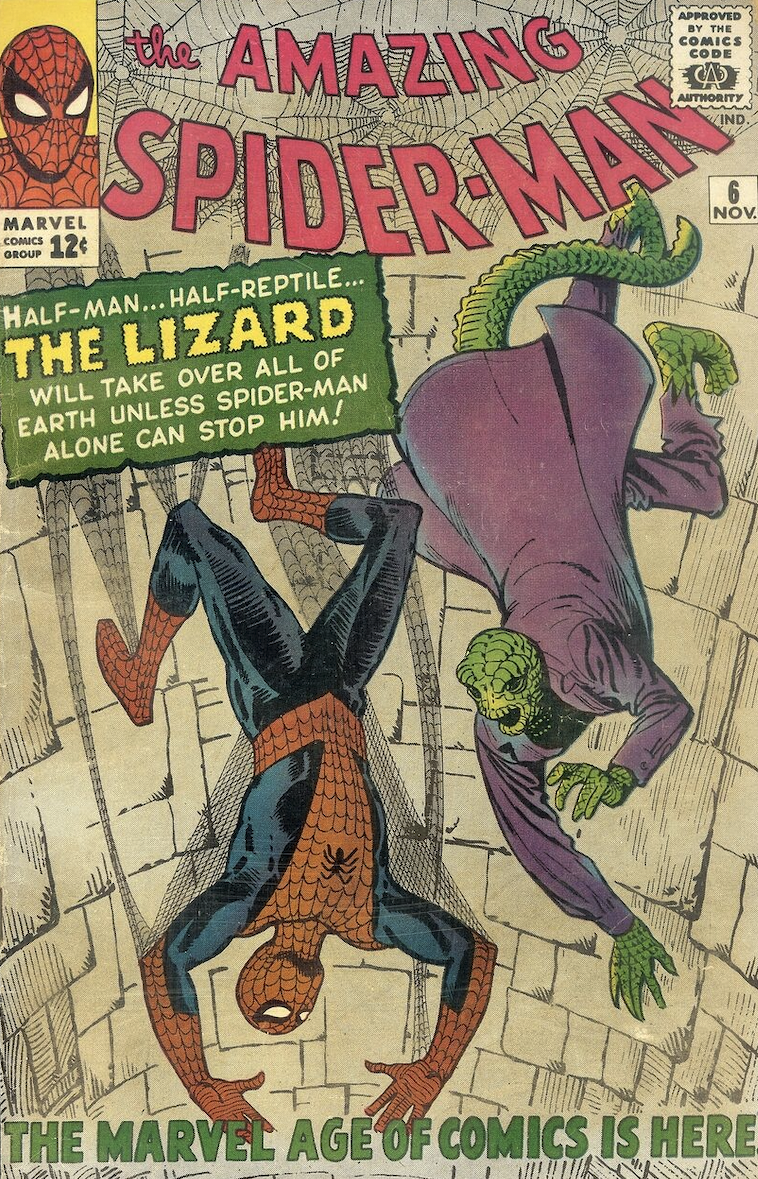

This is the indexed image.

This is the image I'm using to search with.

While results come back they don't seem accurate at all. This is the first result that comes back.

0.5466048990253396 (Dist)

0.5466048990253396 (Dist)

As I stated before, if anyone has some insight into how to adjust these, I'd be willing to compensate them for their time. I really need a solid solution for this.

Interestingly enough, this seems to be the same problem reported in https://github.com/ProvenanceLabs/image-match/issues/64.

I'm unable to determine if it was actually resolved or not though.

It seems now that the issue is the score that is being associated with the results. If I change the result size to 10,000 the correct images come back. They have the best dist value out of all the images, but many of the other images have a higher score.

Not sure how to resolve this one yet.
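One way to picture the workaround described above (pull a large candidate set, then trust dist rather than the Elasticsearch score) is the sketch below. It is only an illustration: the helper name `rerank_by_dist`, the sample hits, and the 0.45 cutoff are assumptions, not code from image-match.

```python
from operator import itemgetter


def rerank_by_dist(hits, distance_cutoff=0.45, limit=10):
    """Keep hits under the cutoff and order them by ascending 'dist'.

    `hits` is a list of dicts shaped like the formatted results in this
    thread (each has at least 'id', 'score' and 'dist').
    """
    close = [h for h in hits if h["dist"] < distance_cutoff]
    return sorted(close, key=itemgetter("dist"))[:limit]


# Made-up candidates: "b" is the true match, with the best dist,
# yet it has the lowest Elasticsearch score of the three.
candidates = [
    {"id": "a", "score": 63.0, "dist": 0.61},
    {"id": "b", "score": 41.0, "dist": 0.12},
    {"id": "c", "score": 58.0, "dist": 0.40},
]

top = rerank_by_dist(candidates)
print([h["id"] for h in top])  # ['b', 'c']
```

The catch is the one already noted in the thread: this only helps if the correct image is among the candidates Elasticsearch returns in the first place, which is why the result size matters so much.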

I do not fully understand the vector / word storage in elasticsearch yet, but I'm starting to think that the logic behind this entire project is flawed unless the score is not accurately being calculated for some reason on my end of things.

I've implemented a dense_vector field on the elasticsearch index at this point and have changed the query for searching to

    body = {
        "query": {
            "script_score": {
                "query": {
                    "bool": {
                        "filter": {
                            "term": {
                                "image.metadata.isKey": "true"
                            }
                        }
                    }
                },
                "script": {
                    "source": "1 / (1 + l2norm(params.queryVector, 'image_dense_vector'))",
                    "params": {
                        "queryVector": dense_vector
                    }
                }
            }
        }
    }

Unfortunately this is still not returning an accurate result for the example provided above.
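For readers following along, a dense_vector field has to be declared in the index mapping with a fixed number of dimensions before a script like the one above can use it. The sketch below shows roughly what that could look like. The field name comes from the query above; the value 648 for `SIGNATURE_DIMS` and the helper `check_vector` are assumptions for illustration, so use the actual length of your signature vectors.

```python
# Assumption: every signature has the same length; replace 648 with
# the real length of the vectors you index.
SIGNATURE_DIMS = 648

# Index mapping declaring the vector field used by the script_score query.
mapping = {
    "mappings": {
        "properties": {
            "image_dense_vector": {
                "type": "dense_vector",
                "dims": SIGNATURE_DIMS,
            }
        }
    }
}


def check_vector(vec, dims=SIGNATURE_DIMS):
    """Hypothetical helper: fail early if a vector would not fit the field."""
    if len(vec) != dims:
        raise ValueError(f"expected {dims} values, got {len(vec)}")
    return [float(v) for v in vec]


query_vector = check_vector([0] * SIGNATURE_DIMS)
print(len(query_vector))
```

A length mismatch between the mapping and the indexed or queried vectors is one of the easier things to rule out when a dense_vector query misbehaves.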

At this point I'm unable to figure this out. Ultimately the issue boils down to the fact that the score that is being generated is inaccurate when compared to the distance and the results are being ordered by the distance after the query has run.
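One possible reason the score and the distance disagree is that they may not measure the same thing. The sketch below assumes the dist value is a normalized distance of the form ||a - b|| / (||a|| + ||b||), while the script score uses the plain L2 norm; that assumption should be checked against the installed image-match code. The vectors are made up to show that the two orderings can differ.

```python
import math


def l2(a, b):
    """Plain Euclidean distance, as used by l2norm in the script."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def norm(a):
    return math.sqrt(sum(x * x for x in a))


def normalized_distance(a, b):
    # Assumption: dist is ||a - b|| / (||a|| + ||b||).
    return l2(a, b) / (norm(a) + norm(b))


def script_score(a, b):
    """Mirror of the script source: 1 / (1 + l2norm(...))."""
    return 1 / (1 + l2(a, b))


query = [1, 0, 0, 0]
cand_a = [0, 0, 0, 0]
cand_b = [2, 2, 2, 0]

# The script score prefers cand_a (higher score is better) ...
print(script_score(query, cand_a) > script_score(query, cand_b))
# ... while the normalized distance prefers cand_b (lower is better).
print(normalized_distance(query, cand_b) < normalized_distance(query, cand_a))
```

If that assumption holds, ordering by the script score and then filtering by dist would not be expected to agree in general, which matches the behaviour described above.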

At this point I think the only option is to literally pull all 500,000 results that will ultimately be in the database as the elasticsearch word query seems inaccurate and I'm unable to get the dense vector object to work.

@selected-pixel-jameson I got the same error while performing an image search, with Elasticsearch running in Docker as the database.

Changing the first argument from "transformed_img" to "path" on line 273 solves the issue, i.e. going from

    l = self.search_single_record(transformed_record, pre_filter=pre_filter)

to

    l = self.search_single_record(path, pre_filter=pre_filter)

As you can see from the make_record signature, the first argument is expected to be "path":

    def make_record(path, gis, k, N, img=None, bytestream=False, metadata=None):

Test Dataset -

Results -

After solving the issue the results are pretty awesome!

But it is still not able to match an image in a different orientation. What are your views on this?
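On the orientation question, image-match's README mentions an all_orientations option on search_image, which is worth checking against the installed version. Independently of that, the general idea can be sketched as: generate the rotated and mirrored variants of the query, compare each one, and keep the best distance. Everything below (function names, the toy grids, the toy distance) is hypothetical and is not image-match's implementation.

```python
def rotate90(grid):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def mirror(grid):
    """Flip a 2D list left to right."""
    return [row[::-1] for row in grid]


def orientations(grid):
    """Return the 8 variants: 4 rotations, each with and without mirroring."""
    out = []
    current = grid
    for _ in range(4):
        out.append(current)
        out.append(mirror(current))
        current = rotate90(current)
    return out


def best_distance(query, indexed, distance):
    """Smallest distance between any orientation of `query` and `indexed`."""
    return min(distance(variant, indexed) for variant in orientations(query))


def count_mismatches(a, b):
    """Toy distance: number of cells that differ."""
    return sum(x != y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


indexed = [[1, 2], [3, 4]]
rotated_query = rotate90(indexed)
print(count_mismatches(rotated_query, indexed))                # all 4 cells differ
print(best_distance(rotated_query, indexed, count_mismatches))  # 0
```

This multiplies the number of comparisons by eight, which may matter on a large index.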

@A7-4real I'll have to investigate your solution further. Would require me to revert some changes I've made. Did you ever get the orientation piece working? That part is something I really need to get working.

However, I would point out that the issue I'm having is not something you are going to see with a small dataset.

I ended up reworking the search_single_record function to this.

    def search_single_record(self, rec, pre_filter=None):
        path = rec.pop('path')
        signature = rec.pop('signature')
        if 'metadata' in rec:
            rec.pop('metadata')

        dense_vector = rec['image_dense_vector']
        rec.pop('image_dense_vector')

        # build the 'should' list
        should = [{'term': {'{}.{}'.format(self.doc_type, word): rec[word]}} for word in rec]

        # original word-based query; it is overwritten by the
        # script_score query below and no longer used
        body = {
            'query': {
                'bool': {'should': should}
            },
            '_source': {'excludes': ['{}.simple_word_*'.format(self.doc_type)]}
        }

        # rank by L2 distance between the query vector and the stored vector
        body = {
            "query": {
                "script_score": {
                    "query": {
                        "exists": {
                            "field": "image.metadata"
                        }
                    },
                    "script": {
                        "source": "1 / (1 + l2norm(params.queryVector, 'image_dense_vector'))",
                        "params": {
                            "queryVector": dense_vector
                        }
                    }
                }
            }
        }

        if pre_filter is not None:
            # the script_score body has no top-level 'bool' (the original
            # body['query']['bool'] lookup would raise KeyError), so wrap
            # the inner query and attach the filter there
            script_score = body['query']['script_score']
            script_score['query'] = {'bool': {'must': script_score['query'],
                                              'filter': pre_filter}}

        res = self.es.search(index=self.index,
                             body=body,
                             size=self.size,
                             timeout=self.timeout)['hits']['hits']

        sigs = np.array([x['_source'][self.doc_type]['signature'] for x in res])

        if sigs.size == 0:
            return []

        dists = normalized_distance(sigs, np.array(signature))

        formatted_res = [{'id': x['_id'],
                          'score': x['_score'],
                          'metadata': x['_source'][self.doc_type].get('metadata'),
                          'path': x['_source'][self.doc_type].get('url', x['_source'][self.doc_type].get('path'))}
                         for x in res]

        for i, row in enumerate(formatted_res):
            row['dist'] = dists[i]

        # keep only results closer than the configured cutoff
        formatted_res = filter(lambda y: y['dist'] < self.distance_cutoff, formatted_res)

        return formatted_res

I've been getting pretty accurate results for one of the purposes I need this for, but when the images are off, skewed, or distorted it doesn't work very well.

When performing a search on the database I'm getting this error.

I'm running Python 3.8 and had to update the packages to get them to compile. I had to use 3.8 because I'm on a MacBook Pro M1 and anything lower will not compile. With this version of Python I have to install the latest version of scikit-image, or it won't compile when I try to install the package via pip.

These are the package versions that I have

    elasticsearch==8.0.0
    scikit-image==0.19.2
    six==1.15.0
    flask==2.0.3

In order to fix this issue I had to change the following lines of code.

When I search for the exact image that is in the path it does not return accurate results.

Any guidance would be greatly appreciated! Thank you.