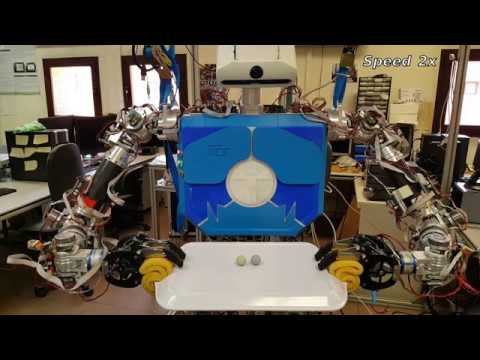

One possible idea for a demo is:

- Teo grasps an object that needs both hands to hold, for example a tray. The object will be known in advance and cannot be changed. It must always be placed at the same distance and location with respect to the robot.

- The robot lifts the tray. Once the robot is holding it in the air, you can place an object on the tray.

- At this point, I thought that Teo could do something like:

  - Say the weight of the object, using the jr3 sensors.

  - Try to move the object to the center by balancing the tray, using the jr3 sensors to know the forces in each hand. In this case, the object should be something that slides.
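
To make the weight and centering ideas a bit more concrete, here is a rough sketch of the arithmetic involved. It is not tied to any Teo or jr3 API: the function names, the sign conventions and the parameters (`tare_left`, `hand_separation`, `gain`, `max_tilt`) are all assumptions made for illustration. It also assumes the tray is held statically and that each hand only supports a vertical force, which will only be approximately true with a real grasp.

```python
G = 9.81  # gravitational acceleration, m/s^2


def estimate_load(fz_left, fz_right, tare_left, tare_right, hand_separation):
    """Estimate the mass and lateral position of an object on the tray.

    fz_left, fz_right: vertical force magnitudes (N) measured at each wrist
        with the object on the tray (assumed positive when supporting load).
    tare_left, tare_right: the same readings taken with the empty tray.
    hand_separation: distance between the two grasp points (m).

    Returns (mass_kg, offset_m). The offset is measured from the midpoint
    between the hands and is positive toward the right hand.
    """
    # Extra force carried by each hand because of the object alone.
    d_left = fz_left - tare_left
    d_right = fz_right - tare_right
    total = d_left + d_right
    if total <= 0.0:
        raise ValueError("no additional load detected on the tray")

    mass = total / G
    # Static moment balance for a beam supported at both ends.
    offset = 0.5 * hand_separation * (d_right - d_left) / total
    return mass, offset


def tilt_command(offset, gain, max_tilt):
    """Proportional tilt (rad) to slide the object back toward the center.

    Assumed convention: a positive tilt raises the right hand, so an object
    sitting toward the right (positive offset) slides back to the left.
    """
    tilt = gain * offset
    return max(-max_tilt, min(max_tilt, tilt))


if __name__ == "__main__":
    mass, offset = estimate_load(12.0, 18.0, 10.0, 10.0, hand_separation=0.4)
    print(f"mass: {mass:.2f} kg, offset: {offset:.2f} m")
    print(f"tilt: {tilt_command(offset, gain=0.5, max_tilt=0.05):.3f} rad")
```

The tare readings are there so that the weight of the tray itself (and any sensor bias) is subtracted before estimating the object. In practice the readings would probably need filtering, and the gain and tilt limit would have to be tuned on the robot.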

We are interested in creating a demonstration in which we can see Teo manipulating an object with two arms. It can be something with handles, or something that has to be grasped with both hands at the same time. The objective is to check that the robot works correctly when manipulating with two arms. It's not necessary to use path planning. We can grab waypoints (see this old issue for that), as in teo-self-presentation, and make something simple and nice to see.

UPDATED:

Next to do: