Not sure if it helps, but I had these problems when I started using yak.

- Make sure your camera matrix is correct

- Verify that the published point cloud is in the 1st octant (meaning only positive x-, y- and z coordinates)

Closed gachiemchiep closed 3 years ago

Not sure if it helps, but I had these problems when I started using yak.

Hello @LorenzBung Thank you for your comment.

I put the camera depth intrinsics into the camera matrix field so I guess that should be correct.

What do you mean about "Verify that the published point cloud is in the 1st octant (meaning only positive x-, y- and z coordinates)"? Sorry I still can't get your point.

My point cloud was centered around the y-axis. So I had values between -0.05 and 0.05 for an object of length 10cm which was centered. But if I recall correctly only the positive values were considered by yak, e.g. the object was cut off at the x-, y- and z-axes.

Hello @LorenzBung

We moved our camera into a positive position. So in this case, positive (x, y, z) points should exist.

# Before

<node pkg="tf" type="static_transform_publisher" name="camera_link_depth"

args=" 0.21577865 -0.36410704 1.02983987 -0.70355683 0.71061717 0.00165881 0.00531743 world camera_depth_optical_frame 100"/>

<node pkg="tf" type="static_transform_publisher" name="camera_link_color"

args=" 0.21577865 -0.33410704 1.02983987 -0.70355683 0.71061717 0.00165881 0.00531743 world camera_color_optical_frame 100"/>

# After

<node pkg="tf" type="static_transform_publisher" name="camera_link_depth"

args=" 0.22027305 0.42083749 1.02705574 0.70856721 -0.70556173 -0.00934054 -0.00528296 world camera_depth_optical_frame 100"/>

<node pkg="tf" type="static_transform_publisher" name="camera_link_color"

args=" 0.22027305 0.42083749 1.02705574 0.70856721 -0.70556173 -0.00934054 -0.00528296 world camera_color_optical_frame 100"/>Unfortunately, as before the GUI still didn't show anything.

If there isn't any problem, would you mind share your launch file for using yak_node?

I translate my cloud before integrating it and read the camera matrix directly in the code

@LorenzBung Thanks for your code. Did you use the point cloud data directly from 3d sensor or only from the 2 ply files?

bun_on_table.ply

sample_box_eurobox_200_100_300.plyI did use data from the sensor.

Thank you for your help. I will dig deeper into the code and keep debugging.

@gachiemchiep Can you copy and paste the terminal output from the yak_ros node? There should be some messages that are printed that indicate the different stages of the 3D integration process.

In general if the raycast preview GUI doesn't update at all (as opposed to just showing an empty volume and a blank background) it means that the reconstruction process is quitting early and the RGB-D data isn't being used. Some of the reasons this can happen are:

DEFAULT_MINIMUM_TRANSLATION meters since the last received image.header.frame_id field and the tsdf_origin frame.In the bunny demo launch file, a static transform publisher provides the transform between world and tsdf_origin. Do you have something to provide this transform in your project? Otherwise the TF lookup to find the position of the camera relative to the TSDF volume will fail, which would cause the problem you've described.

@schornakj

What is the meaning of tsdf_origin? Is this the pose of depth camera. We used the pose of depth camera as value for tsdf_origin.

# launch file

<?xml version="1.0"?>

<launch>

<!-- <include file="$(find jil_object_tracking)/launch/3d_reconstruction/rs_rgbd.launch"></include> -->

<!-- Fake tf, use for developing -->

<node pkg="tf" type="static_transform_publisher" name="camera_link_depth"

args=" 0.22027305 0.42083749 1.02705574 0.70856721 -0.70556173 -0.00934054 -0.00528296 world camera_depth_optical_frame 100"/>

<node pkg="tf" type="static_transform_publisher" name="camera_link_color"

args=" 0.22027305 0.42083749 1.02705574 0.70856721 -0.70556173 -0.00934054 -0.00528296 world camera_color_optical_frame 100"/>

<node name="tsdf_node" pkg="yak_ros" type="yak_ros_node" output="screen">

<!-- remap realsensen topic -->

<remap from="~input_depth_image" to="/camera/depth/image_rect_raw"/>

<remap from="~input_point_cloud" to="/camera/depth_registered/points"/>

<param name="tsdf_frame" value="tsdf_origin"/>

<rosparam param="camera_matrix">[411.8604736328125, 0.0, 325.3558044433594, 0.0, 411.8604736328125, 246.15582275390625, 0.0, 0.0, 1.0]</rosparam>

<param name="cols" value="640"/>

<param name="rows" value="480"/>

<param name="volume_resolution" value="0.001"/>

<param name="volume_x" value="640"/>

<param name="volume_y" value="640"/>

<param name="volume_z" value="192"/>

</node>

<node pkg="tf" type="static_transform_publisher" name="link1_broadcaster" args="0.22027305 0.42083749 1.02705574 0.70856721 -0.70556173 -0.00934054 -0.00528296 world tsdf_origin 100" />

<node type="rviz" name="rviz" pkg="rviz" args="-d $(find jil_object_tracking)/rviz/yak_demo.rviz" />

</launch>

Here's the log of yak_ros node

... logging to /home/jil/.ros/log/1fc0402e-d144-11ea-8a3f-54bf64969598/roslaunch-rtrworkstation-Precision-7920-Tower-40883.log

Checking log directory for disk usage. This may take awhile.

Press Ctrl-C to interrupt

WARNING: disk usage in log directory [/home/jil/.ros/log] is over 1GB.

It's recommended that you use the 'rosclean' command.

started roslaunch server http://rtrworkstation-Precision-7920-Tower:39217/

SUMMARY

========

PARAMETERS

* /rosdistro: kinetic

* /rosversion: 1.12.14

* /tsdf_node/camera_matrix: [411.860473632812...

* /tsdf_node/cols: 640

* /tsdf_node/rows: 480

* /tsdf_node/tsdf_frame: tsdf_origin

* /tsdf_node/volume_resolution: 0.001

* /tsdf_node/volume_x: 640

* /tsdf_node/volume_y: 640

* /tsdf_node/volume_z: 192

NODES

/

camera_link_color (tf/static_transform_publisher)

camera_link_depth (tf/static_transform_publisher)

link1_broadcaster (tf/static_transform_publisher)

rviz (rviz/rviz)

tsdf_node (yak_ros/yak_ros_node)

ROS_MASTER_URI=http://localhost:11311

process[camera_link_depth-1]: started with pid [40900]

process[camera_link_color-2]: started with pid [40901]

process[tsdf_node-3]: started with pid [40903]

process[link1_broadcaster-4]: started with pid [40919]

process[rviz-5]: started with pid [40924]

[ INFO] [1595990120.733169433]: Camera Intr Params: 411.860474 411.860474 325.355804 246.155823

[ INFO] [1595990120.739211721]: TSDF Volume Dimensions (Voxels): [640, 640, 192]

[ INFO] [1595990120.739266228]: TSDF Volume Resolution (m): 0.001

Reset Volume

[ WARN] [1595990121.295289241]: TF lookupTransform error: "tsdf_origin" passed to lookupTransform argument target_frame does not exist.

[ WARN] [1595990121.330188202]: TF lookupTransform error: Lookup would require extrapolation at time 1595990121.291812407, but only time 1595990121.409273771 is in the buffer, when looking up transform from frame [camera_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1595990121.367307757]: TF lookupTransform error: Lookup would require extrapolation at time 1595990121.291812407, but only time 1595990121.409273771 is in the buffer, when looking up transform from frame [camera_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1595990121.460183941]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1595990121.291812407 but the earliest data is at time 1595990121.409273771, when looking up transform from frame [camera_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1595990121.539577266]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1595990121.327403877 but the earliest data is at time 1595990121.409273771, when looking up transform from frame [camera_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1595990121.543879526]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1595990121.358412433 but the earliest data is at time 1595990121.409273771, when looking up transform from frame [camera_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1595990121.616006682]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1595990121.327403877 but the earliest data is at time 1595990121.409273771, when looking up transform from frame [camera_color_optical_frame] to frame [tsdf_origin]

[ERROR] [1595990122.569898079]: Robot model parameter not found! Did you remap 'robot_description'?

[ERROR] [1595990122.572993743]: Robot model not loaded

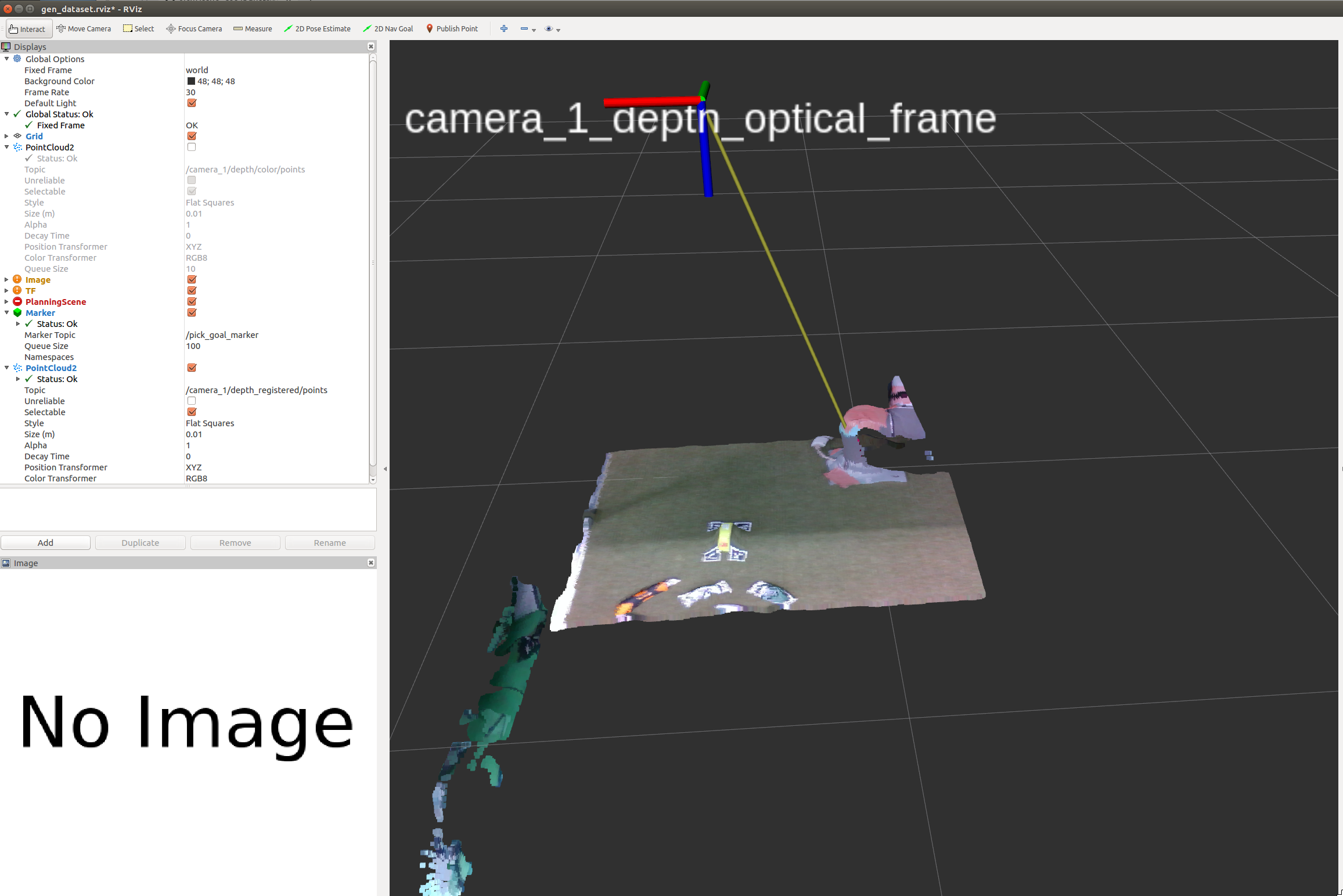

Here is the tf graph

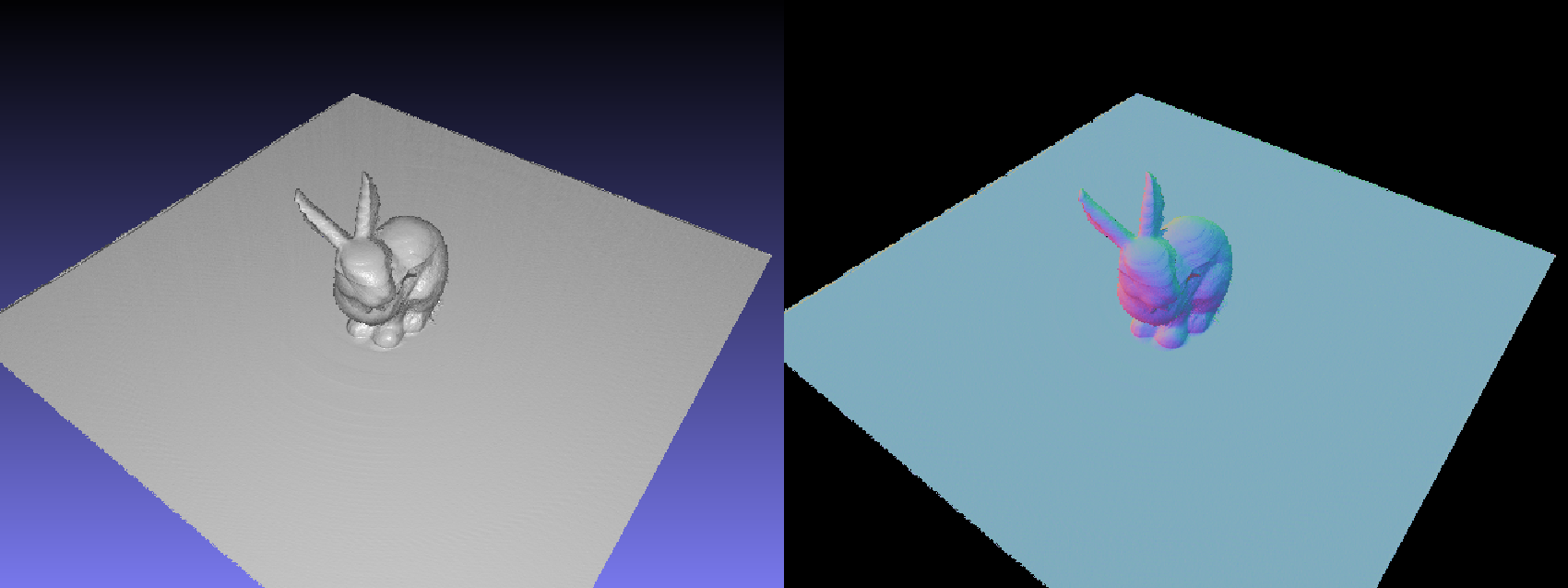

The tsdf_origin frame is at the bottom corner of the rectangular volume in which surfaces will be reconstructed. It's usually fixed somewhere in the environment, for example at one corner of a tabletop. The positive axes of this frame point along the edges of the volume. Here's a picture showing the relationships between this frame, the camera, and the world:

Here is the tf graph

This graph shows that you have two separate TF trees, and that the frame associated with the depth images is disconnected from tsdf_frame. This is the fundamental problem preventing your system from working correctly.

Fortunately there are just a few things you'll need to fix:

static_transform_publisher that publishes a transform between world and camera_link. This will connect the frames published by the camera driver to your world and tsdf_origin frames.static_transform_publisher nodes for camera_color_optical_frame and camera_depth_optical_frame: the Realsense node is already publishing TF data for the camera frames.@schornakj Thank you for your advice. We're working to fix those problems.

@schornakj We fixed the TF trees, the program does run. The result doesn't look good but we'll cut off un-related points from point cloud, and find the correct value of tsdf_origin.

This is a very noob question but between 1, 2, 3, 4 (as in the image), which one is the bottom corner (tsdf_origin)?

This is a very noob question but between 1, 2, 3, 4 (as in the image), which one is the bottom corner (tsdf_origin)?

If you enable the TF display (and enable visualisation for the tsdf_origin frame) you should be able to see it in RViz.

@gavanderhoorn I'm confused between points 1, 2, 3, 4. Which one should be used as tsdf_origin.

It's a TF frame IIRC, so you should be able to visualise it using the TF display in RViz.

Hello @gavanderhoorn Do we need to set position of tsdf_origin manually by using static_transform_publisher as in https://github.com/ros-industrial/yak_ros/blob/7784f82e4aae23a396c1403600717a4683359d5b/yak_ros/launch/demo.launch#L24 .

I use the camera_1_depth_frame as the tsdf_frame.

<node name="tsdf_node" pkg="yak_ros" type="yak_ros_node" output="screen">

<!-- remap realsensen topic -->

<remap from="~input_depth_image" to="/camera_1/depth/image_rect_raw"/>

<remap from="~input_point_cloud" to="/camera_1/depth_registered/points"/>

<param name="tsdf_frame" value="camera_1_depth_frame"/>

<rosparam param="camera_matrix">[411.8604736328125, 0.0, 325.3558044433594, 0.0, 411.8604736328125, 246.15582275390625, 0.0, 0.0, 1.0]</rosparam>

<param name="cols" value="640"/>

<param name="rows" value="480"/>

<param name="volume_resolution" value="0.001"/>

<param name="volume_x" value="640"/>

<param name="volume_y" value="640"/>

<param name="volume_z" value="192"/>

</node>But inside rviz tsdf_origin isn't exist.

Here's my tf graph. It look fine for me, but some how tsdf_origin and tsdf_frame aren't exist.

@gachiemchiep Yes, you need to have something that publishes a transform for tsdf_origin. This can be provided by a static transform publisher, a fixed joint in a URDF, or something else.

I'd suggest using a different frame for tsdf_origin than the camera optical frame. Generally the camera is moving relative to the TSDF volume, so they'll need to be represented by separate frames eventually anyway.

An additional comment for clarity: This frame doesn't strictly need to be named tsdf_origin -- that's just the default name, but in other applications I've found it helpful to keep using that name to avoid confusion. The yak_ros_node lets you set any TF frame as the origin of the TSDF voxel volume by setting the tsdf_frame parameter (as you have done in the example you provide).

In your example you've set the origin of the TSDF volume to be at camera_1_depth_frame, so here is a picture showing the approximate orientation and extent of the volume. This should help explain why your reconstructed surface looks the way it does, since only the furthest corner of the volume intersects the object you're scanning.

I'm confused between points 1, 2, 3, 4. Which one should be used as tsdf_origin.

It depends on how you orient the TF frame you set to use as the TSDF origin frame. For example, if you picked point 1 as the origin, you would want the X-axis pointing towards 2, the Y-axis pointing towards 4, and the Z-axis pointing up out of the table.

@schornakj Thank you for your explaination

Hello @schornakj Thank you for your explanation.

Sorry it took us a while to implement the code. We add some Aruco tags to cut off un-related points. But inside the yak_ros's gui the displayed mesh isn't stable at all. The shape isn't change, but the mesh keep moving up and down. It's hard to explain so we add the video here. https://www.youtube.com/watch?v=mZ1eFHsEldY&feature=youtu.be Do we need to cut off input_depth_image too?

Here's our current result. We thought we use the correct tf this time/

The left/right motion is because of some issue with your TF frames. Since the reconstructed mesh is alternating between just two positions, I think the most likely explanation is that two separate TF broadcasters are publishing slightly different and conflicting poses for some frame in the TF tree. Otherwise, something along the kinematic chain is publishing inconsistent data.

It shouldn't be necessary to crop the depth images. Since it looks like you just want to make a model of the area within the Aruco tags, you can adjust the volume_x, volume_y, and volume_z parameters to make the dimensions of the TSDF volume match the size of the region you want to capture. Since you have volume_resolution set to 0.001m, you could set volume_x to 100, volume_y to 200, and volume_z to 100. This would give you a 10cm 20cm 10cm volume, which looks close to the size of the thing you're scanning.

Hello @schornakj

Thank you for your explanation The yak_ros complaint that Failed to initialize yak_ros node: Number of voxels in each dimension must be multiples of 32. so I changed values into volume_x=224, volume_y=160, volume_z=128.

I set the TF of tsdf_origin into fixed value, but the left/right motion is still exist. https://www.youtube.com/watch?v=hNBsSWu7WMA (The TF of tsdf_origin is showed on the left side)

Do you know how to extract the mesh from /yak_ros/generate_mesh topic. I search through the document, but couldn't find any example.

The request message for the GenerateMesh service has a results_dir field where you can set the directory where the output mesh will be saved. The marching cubes results will be saved in a file named results_mesh.ply in the specified directory.

For the left/right motion, have you run Rviz with the TF display enabled? You might be able to see the frames moving there like you see in the raycast preview. I don't expect that the yak_ros node has anything to do with that motion, since it just takes the position of whichever TF frame you provide and uses that as the origin of the depth images.

Hello @schornakj Sorry for the late response. Are you talking about the tsdf_origin frame ? As you can see in the video that tsdf_origin is in fixed position and didn't move at all.

I was talking about some other transform in the TF tree leading up to the camera. I don't expect the tsdf_origin frame to be moving since you're providing it using a static transform publisher. I see in your video that you're displaying the frame for the camera depth optical frame, which doesn't look like it's moving either.

The problem you show in your video can happen if you have multiple sources publishing a pose for the TF frame listed as the frame of reference in the depth image header message. You should determine which frame is listed in the header of the images you're passing to the TSDF algorithm, make sure that there is only one entity publishing TF data for that frame, and make sure that this frame is totally stationary relative to the tsdf_origin frame when your robot is not moving.

@schornakj Thank you for your explanation. I will check our code again.

Hello @schornakj Sorry to bother you again. We check our code and couldn't find anything. The only thing suspicious is the coordinate of the camera is change like 0.0001 per frame. We don't know whether that value is large enough to make an obvious effect.

We change our program a little bit.

But the result is very weird. https://www.youtube.com/watch?v=X3l7jo3KP9E

We though one frame per scanning position is not enough then we increase into 30 frames. But the result is still the same. https://www.youtube.com/watch?v=VPID_7Oy53U

Hello guys. Is there anyone have ideas about our problem?

The only thing suspicious is the coordinate of the camera is change like 0.0001 per frame. We don't know whether that value is large enough to make an obvious effect.

I don't expect a very small change like that to have a significant effect on your result.

We change our program a little bit.

1. We manually take some pose and scanning positions. 2. The robot will move to each scanning position, at each scanning position it will read one-frame from the depth and point-cloud of the on-arm camera. Then those frames is send to /tsdf_node/input_depth, /tsdf_node/input_pointcloud

I think that this is just masking your original problem. The TSDF reconstruction process should work correctly regardless of how often you're capturing images or whether or not the camera is moving during capture. You also shouldn't need to publish to both /input_depth and /input_pointcloud at the same time, especially if you're just providing the same data in two different data formats.

But the result is very weird. https://www.youtube.com/watch?v=X3l7jo3KP9E

We though one frame per scanning position is not enough then we increase into 30 frames. But the result is still the same. https://www.youtube.com/watch?v=VPID_7Oy53U

In your videos it looks like something is wrong with the synchronization between your images and the camera TF frame. Whenever the robot moves the camera to a new position and begins scanning, the existing TSDF surface is eroded away and replaced by a new surface at a slightly different position. I've seen this kind of thing happen before, and it's generally either because the 3D position of the camera relative to the robot is incorrect, or the timestamp in the header of the depth images is incorrect. How did you determine the position of the camera relative to the robot tool flange? This is a very important aspect of generating good 3D models.

Going back to your previous video, I noticed that the robot isn't moving while performing the scan. It would be very useful to see what happens while you're continuously capturing depth images while the system is moving around the part -- the nature of reconstruction errors that appear while the system is moving can be really helpful for figuring out the root cause of the issue.

I wanted to quote myself from a few posts ago:

The problem you show in your video can happen if you have multiple sources publishing a pose for the TF frame listed as the frame of reference in the depth image header message. You should determine which frame is listed in the header of the images you're passing to the TSDF algorithm, make sure that there is only one entity publishing TF data for that frame, and make sure that this frame is totally stationary relative to the

tsdf_originframe when your robot is not moving.

To be more specific, the Realsense D435 camera driver publishes a separate TF frame for each of the 3 cameras it provides (IR left, IR right, and color). In the video where the mesh is shifting left and right, it really looks like it's shifting by the distance between the left and right IR cameras. I was thinking that those TF frames might have the same name, or that the pairing between the depth images and the TF frames was being mismatched somehow.

Hello @schornakj Thank you for your comment. We'll check everything and let's you know detail soon.

Hello @schornakj

How did you determine the position of the camera relative to the robot tool flange? : we created a URDF file that contains urdfs for our robot and d435, then we attached them together. Later we let the ROS TF get the position of the camera, so I think there isn't any problem with the camera position or the TF.

<robot name="ur5_with_realsense" xmlns:xacro="http://www.ros.org/wiki/xacro" xmlns:xi="http://www.w3.org/2001/XInclude">

<!-- myrobot urdf file -->

<xacro:include filename="$(find jil_setup)/urdf/ur5_camera_fixed_mount.urdf"/>

<!-- add any joints/links required to connect the two -->

<xacro:include filename="$(find realsense2_description)/urdf/_d435.urdf.xacro" />

<sensor_d435 parent="camera_mount" name="camera_1">

<origin xyz="-0.0176 0 0.0792" rpy="0 0.261799 3.141592"/>

</sensor_d435>

</robot>Going back to your previous video, I noticed that the robot isn't moving while performing the scan. It would be very useful to see what happens while you're continuously capturing depth images while the system is moving around the part -- the nature of reconstruction errors that appear while the system is moving can be really helpful for figuring out the root cause of the issue. : we let the robot move to scan pose, then the robot stop, then we take the depth image and point cloud, then we publish as input_depth and input_point_clouds.

Here's a list of image topics published by the D435

/camera_1/aligned_depth_to_color/image_raw

/camera_1/aligned_depth_to_infra1/image_raw

/camera_1/color/image_raw

/camera_1/depth/image_rect_raw

/camera_1/depth_registered/points

/camera_1/infra1/image_rect_raw

/camera_1/infra2/image_rect_rawWe used the following topics as input depth or point clouds. The origin tf of them are both camera_1_color_optical_frame.

/camera_1/aligned_depth_to_color/image_raw : frame_id: "camera_1_color_optical_frame"

/camera_1/depth_registered/points : frame_id: "camera_1_color_optical_frame"

/camera_1/depth/image_rect_raw : frame_id: "camera_1_depth_optical_frame"

To be more specific, the Realsense D435 camera driver publishes a separate TF frame for each of the 3 cameras it provides (IR left, IR right, and color). In the video where the mesh is shifting left and right, it really looks like it's shifting by the distance between the left and right IR cameras. : I read the code, and find out that the point cloud is converted into an depth image then is pushed into the algorithm. So instead of using both depth and point cloud, we only use the depth. As a result, the jitter when creating mesh is gone. But still, the mesh is eroded over time.

I was thinking that those TF frames might have the same name, or that the pairing between the depth images and the TF frames was being mismatched somehow. : I don't understand this point. I assume that yak will convert depth and point cloud from their origin tf into tsdf_origin tf, then merge them into point cloud.

I read the code, and find out that the point cloud is converted into an depth image then is pushed into the algorithm. So instead of using both depth and point cloud, we only use the depth. As a result, the jitter when creating mesh is gone. But still, the mesh is eroded over time.

Does the bolded part mean that you were able to solve the problem where the reconstructed mesh was shifting left and right? If so, that's great to hear!

Your mesh is being eroded as you scan because the calculated position of the camera at a given time does not match the real-world position of the camera at that time. This can happen if one of the sources of TF information within your system has a clock that is out of sync relative to the rest of your system. The last time I encountered this, it was because the system clock in my robot's controller was 5 seconds behind my PC, so when Yak was trying to match up the depth images with camera positions it would use positions that were 5 seconds in the past, and it produced an issue similar to the one in your video.

In this video that you posted it looks like there is a 2 second delay between the robot moving and the TSDF preview updating, but there should be no delay if the system is working correctly. I think this means that either your camera or your robot is publishing messages with incorrect timestamps. I have a hazy memory that the Realsense D435 ROS driver ends up using the host PC's clock to calculate the image header timestamp, so I think it's likely that the robot driver is publishing joint data with and old timestamp.

Some follow-up questions:

Do you see any warnings or errors in the console after the program starts up or while it's running? Some warnings will be printed if the TF lookup fails when a new depth image is received.

Which Universal Robots driver are you using? In case you're not already I would suggest that you use the newest version since the old ones can be somewhat buggy.

Can you provide a video of the TSDF preview when your robot is moving around while continuously scanning?

I was thinking that those TF frames might have the same name, or that the pairing between the depth images and the TF frames was being mismatched somehow. : I don't understand this point. I assume that yak will convert depth and point cloud from their origin tf into tsdf_origin tf, then merge them into point cloud.

Yak does do that transformation into the tsdf_origin frame, but it relies on all the frame_id fields in the message headers being correct, and on the TF data of your environment being accurate. I was partly just speculating about possible sources of error in case an image was mislabeled with the wrong frame_id, and I think you've shown that this is not what's happening.

How did you determine the position of the camera relative to the robot tool flange? : we created a URDF file that contains urdfs for our robot and d435, then we attached them together. Later we let the ROS TF get the position of the camera, so I think there isn't any problem with the camera position or the TF.

I should have been more clear: I was referring to the real-life position of your camera relative to your actual robot, since this is usually different from the ideal transforms defined in the URDF. It's not directly related to the issue you're having, but if you want good-quality 3D models using Yak you should perform an extrinsic calibration to determine the actual real-world relationship between your robot's tool flange and your camera.

@schornakj Thank you for your explaination. I will send the detail to you in a few days.

Hello @schornakj

In the beginning, we saw a few messages like these. But during the scanning, those messages didn't appear, so I guess it didn't create any error.

[ WARN] [1599532854.080449728]: Frame metadata isn't available! (frame_timestamp_domain = RS2_TIMESTAMP_DOMAIN_SYSTEM_TIME)

[ WARN] [1599532854.083928449]: TF lookupTransform error: Lookup would require extrapolation into the future. Requested time 1599532854.080548404 but the latest data is at time 1599532854.067743469, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ERROR] [1599532854.211213737]: Failed to fuse image.

08/09 11:40:54,386 WARNING [139732398896896] (ds5-timestamp.cpp:76) UVC metadata payloads not available. Please refer to the installation chapter for details.

[ WARN] [1599532859.592890252]: TF lookupTransform error: Lookup would require extrapolation into the future. Requested time 1599532859.557813205 but the latest data is at time 1599532859.539539878, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ INFO] [1599532982.098745003]: Beginning marching cubes meshing

[ INFO] [1599533007.203660318]: Meshing done, saving to /home/jil/Desktop/results_mesh.ply

[ WARN] [1599533007.593554005]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1599532982.046206305 but the earliest data is at time 1599532997.608627230, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1599533007.599522840]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1599532982.079140184 but the earliest data is at time 1599532997.608627230, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1599533160.787737934]: TF lookupTransform error: Lookup would require extrapolation into the future. Requested time 1599533160.757272582 but the latest data is at time 1599533160.749214432, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ INFO] [1599533430.818510140]: Beginning marching cubes meshing

[ INFO] [1599533456.243323671]: Meshing done, saving to /home/jil/Desktop/results_mesh.ply

[ WARN] [1599533456.645793275]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1599533430.720996561 but the earliest data is at time 1599533446.655006661, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1599533456.660979529]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1599533430.753489749 but the earliest data is at time 1599533446.675151028, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

[ WARN] [1599533456.694230367]: TF lookupTransform error: Lookup would require extrapolation into the past. Requested time 1599533430.786521952 but the earliest data is at time 1599533446.705285541, when looking up transform from frame [camera_1_depth_optical_frame] to frame [tsdf_origin]

We are using the newest version. UniversalRobots/Universal_Robots_ROS_Driver

Here is the link to the video: https://www.youtube.com/watch?v=zC7C9vg4iFs&feature=youtu.be&ab_channel=vugiatruong Here is the link to the generated mesh : https://drive.google.com/file/d/1WRbbfii-auE0c8i6vcemX2rs8fKnBYd-/view?usp=sharing

By the way, you're correct about the time stamp. We fixed it by letting the robot driver startup first, then start the camera. But There's still big timestamp difference between the last frame of input depth (/camera_1_depth_optical_frame) and tf (/joint_states/header).

When we use the rs_rgbd.launch file to publish both depth and point-cloud. The timestamp difference between the last frame is roughly 0.4 seconds.

# rostopic echo /camera_1/depth/image_rect_raw/header

seq: 642

stamp:

secs: 1599534754

nsecs: 207518343

frame_id: "camera_1_depth_optical_frame"

# rostopic echo /joint_states/header

seq: 2541

stamp:

secs: 1599534754

nsecs: 618653594

frame_id: ''

We tried to exclde the publishing of point-cloud topic by using this launch file rs_camera.launch, but then the timestamp difference between lst frame of input depth (/camera_1_depth_optical_frame) and tf (/joint_states/header) is still roughly 0.3 seconds.

# rostopic echo /camera_1/depth/image_rect_raw/header

seq: 1135

stamp:

secs: 1599534459

nsecs: 469069312

frame_id: "camera_1_depth_optical_frame"

# rostopic echo /joint_states/header

seq: 4602

stamp:

secs: 1599534459

nsecs: 688337916

frame_id: ''

As you can see in the above video, erosion is still available but it's slower than the previous experiment. May I ask how you overcome this problem: I have a hazy memory that the Realsense D435 ROS driver ends up using the host PC's clock to calculate the image header timestamp, so I think it's likely that the robot driver is publishing joint data with and old timestamp.

To solve that particular problem I made a little ROS node that subscribed to /joint_states, copied the contents of the incoming messages to a new message while setting the timestamp in the header to the node's current time, and publishing them to a different topic (/joint_states_restamped or similar). In that particular case the root cause was that the clock of the robot's control computer (a Kuka iiwa7) was out of sync with the computer running the rest of the ROS nodes, which isn't directly applicable to the way you have things set up (in other words: I'm not confident that the "fix" I described is actually a solution for your problem).

You should perform an extrinsic camera calibration to accurately calculate the position of your camera relative to your robot tool flange. Using an inaccurate estimate of the position of the camera relative to the robot can also cause the same kind of erosion you're seeing in your video. For example, if the actual orientation of the camera is a few degrees different than the orientation used to fuse depth images into the TSDF volume, then the geometry in the depth images will be integrated into the volume at the wrong relative positions as the robot moves the camera around the scene. The robot_cal_tools package provides some tools to do the data collection and optimization for camera calibration (though some setup will always be required for your specific robot and workcell).

You should also change the position of the TSDF origin so it's some distance underneath the tabletop. In your video it's very close to intersecting, so the surface is right on the edge of the volume and it sort of fades in and out of existence as the camera moves around.

Hello @schornakj

Thank you for your advices.

We'll try to:

Thanks for information of robot_cal_tools package. We'll try this too.

1. fix the time-shift between the robot controller and depth frame first.

Just an observation: seeing as you are using ur_robot_driver: the stamp is set by the driver using the clock of the ROS PC which is running the driver. There is no "robot controller" involved there.

@gavanderhoorn you're right. the stamp of /joint_states/ published by Universal_Robots_ROS_Driver is the stamp of ROS PC. I've corrected my comment.

We change the position of TSDF_origin to outside of scanning volume. And the erosion disappear. https://www.youtube.com/watch?v=18nOlJk0yGA&ab_channel=vugiatruong

But the final result of 3D model still terrible. https://drive.google.com/file/d/1kK6MuOou9fN1m4zHc2mm9AxVO0vEC4j4/view?usp=sharing

We'll try to fix the 1. problem and let you know soon.

Apparently the D435 has a minimum distance for depth of 11cm, but in the video it still looks awfully close to the object.

Hello @gavanderhoorn It may look close but the distance between camera and scanning object is actually more than 30 cm.

@schornakj We apply a little trick to overcome the problem 1.

Unfortunately, the result 3d model is still bumpy (surface of the model). I guess to make this work we need to do the calibration to get correct position of our camera related to ur5.

Hello @schornakj We used your suggested tool to calibrate the position of our camera. The 3d model look good now : 20200924 model .

I think this question is off topic but do you any tool or algorithm that allows us to calculate the scanning path for a known voxel volume?

It looks like I don't have access to download your model, but I think that posting a picture of it here would be a good way for you to declare victory!

I think this question is off topic but do you any tool or algorithm that allows us to calculate the scanning path for a known voxel volume?

This is a fairly broad area of research with lots of possible solutions depending on what you want to do. If you know where the volume you want to scan is positioned relative to your robot and can guarantee that the area outside of that volume will be collision-free, then it might be good enough to just move the camera in alternating lines back and forth over the volume. You can programmatically generate Cartesian waypoints along the scan path and then use a motion planning library like MoveIt's Cartesian Plan plugin or Tesseract's Descartes planner. It'll probably require some custom software on your end to coordinate planning and trajectory execution at the top level.

Here is the link to our model : 3d model

For anyone struggling with a black output screen, be aware that the camera specs are needed for the depth sensor which is normally different from the RGB. For a d435 the depth sensor has a resolution with 1280 to 720 instead of the full HD 1920 to 1080 of the RGB camera. This is really obvious and still took me a hole day to figure out.

If you are using this plugin to simulate a Realsense D435i in gazebo this launch file should do the job:

<launch>

<node name="tsdf_node" pkg="yak_ros" type="yak_ros_node" output="screen">

<remap from="~input_point_cloud" to="/point_cloud/"/>

<param name="tsdf_frame" value="tsdf_origin"/>

<param name="generate_mesh" value="$(find yak_ros)/demo/output"/>

<!--rosparam param="camera_matrix">[411.8604736328125, 0.0, 325.3558044433594, 0.0, 411.8604736328125, 246.15582275390625, 0.0, 0.0, 1.0]</rosparam-->

<rosparam param="camera_matrix">[695.9951171875, 0.0, 640.0, 0.0, 695.9951171875, 360.0, 0.0, 0.0, 1.0]</rosparam>

<param name="cols" value="1280"/>

<param name="rows" value="720"/>

<param name="volume_resolution" value="0.001"/>

<param name="volume_x" value="640"/>

<param name="volume_y" value="640"/>

<param name="volume_z" value="192"/>

</node>

</launch>@schornakj Thanks for your good explanations! :) <3

@gachiemchiep have you solved the problem, can you provide the solution link about the launch file or related code ?

Hello @schornakj We followed your guide at https://github.com/ros-industrial/yak_ros/issues/36#issuecomment-654521420 but we couldn't make yak_ros work yet. Would you mind give us some guidance.

Here're what we already finished:

ROS kinetic, create ROS workspace, compile the yak and yak_ros package. We ran the yak_ros's demo and see the output as follow

compile the realsense-ros package and use it to publish the pointcloud in structured PointCloud2 format

write launch file to start yak_ros_node. Part of the launch file is as:

But the GUI just got stuck and didn't have any output message.

Would you mind take a look and help us. Thank you/