Projection is generally quite hard, and I suspect perceptual losses will not work well for your data domain. So unless there is a bug in the projection process, I don't think it is very strange to get bad samples.
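One way to tell a projection bug apart from a data-domain problem is to project an image the generator itself produced, since a latent that reproduces it exactly is known to exist. Below is a minimal sketch of that sanity check. It makes several assumptions: a toy `tanh(A @ w)` generator stands in for the trained StyleGAN2 network, a plain pixel-space mean squared error replaces the VGG perceptual loss, and every name in it (`make_toy_generator`, `project`, `w_true`, `w_hat`) is hypothetical and not taken from projector.py.

```python
import numpy as np


def make_toy_generator(latent_dim=8, image_dim=64, seed=0):
    """Toy stand-in for a trained generator: image = tanh(A @ w)."""
    rng = np.random.default_rng(seed)
    A = rng.normal(size=(image_dim, latent_dim)) / np.sqrt(latent_dim)

    def generate(w):
        return np.tanh(A @ w)

    def backward(w, grad_image):
        # Chain rule through tanh: d tanh(u)/du = 1 - tanh(u)^2.
        return A.T @ (grad_image * (1.0 - np.tanh(A @ w) ** 2))

    return generate, backward


def project(generate, backward, target, latent_dim, steps=2000, lr=0.5):
    """Gradient descent on the latent to minimise pixel-space MSE."""
    w = np.zeros(latent_dim)
    history = []
    for _ in range(steps):
        residual = generate(w) - target
        history.append(float(np.mean(residual ** 2)))
        # Gradient of mean(residual^2) with respect to the image.
        grad_image = 2.0 * residual / residual.size
        w = w - lr * backward(w, grad_image)
    return w, history


latent_dim = 8
generate, backward = make_toy_generator(latent_dim=latent_dim)

# Sanity check: the target is synthesised by the generator itself.
w_true = np.random.default_rng(1).normal(size=latent_dim)
target = generate(w_true)

w_hat, history = project(generate, backward, target, latent_dim)
print("initial loss:", history[0])
print("final loss:  ", history[-1])
```

If the same check against the real network cannot recover one of its own samples, the projection loop (learning rate, image preprocessing, value range) is the likelier culprit. If generated samples project well and only real images fail, the loss or the data domain is the more plausible explanation.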

MHX1203 closed this issue 2 years ago.

Thanks, this issue has been resolved.

Hi, how did you solve it in the end?

I have trained StyleGAN2 with train.py on my own dataset, and training appears to work well: the intermediate images the model generates already look very realistic.

But when I use a modified projector.py to do GAN inversion, I get an awful result. The inversion has obviously failed, even though the image I project is a random sample from the training set.

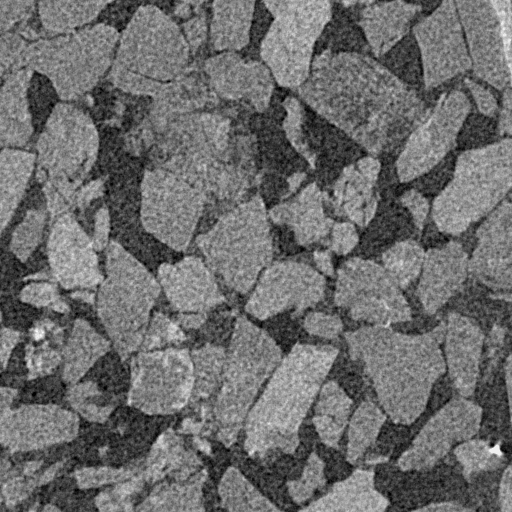

Here is the real image from the training set.

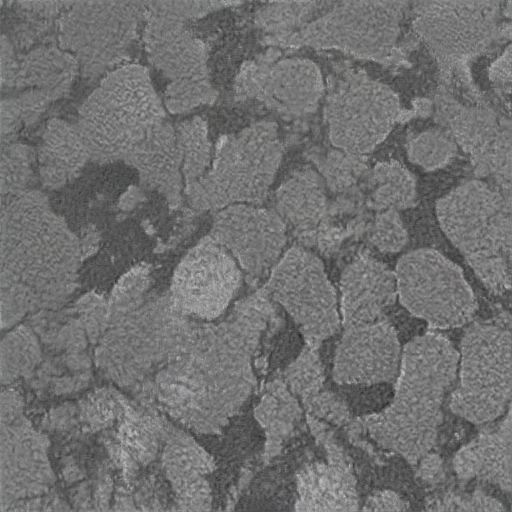

The following image is the projected image.

Here is projector.py.

And here is the command used to run the projection.

I have tried decreasing the learning rate and changing the VGG loss to L1, but the result is still awful. I'm confused and have no idea what is causing this failure.

I hope someone can give me some help. Sincere thanks.