librustc still has a lot of code that's "just implementation code" and not something infrastructural like types or traits used in the rest of the compiler.

If we look e.g. at `rustc/middle`:

- `resolve_lifetime.rs` - just a pass, can be moved to `rustc_resolve`
- `stability.rs` - just a pass, can be moved to `rustc_passes` or something

EDIT(Centril): The crate was renamed to

rustc_middlein https://github.com/rust-lang/rust/pull/70536. EDIT(jyn514): Most of rustc_middle was split intorustc_query_implin https://github.com/rust-lang/rust/pull/70951.The results from @ehuss's new Things to note.

Things to note.

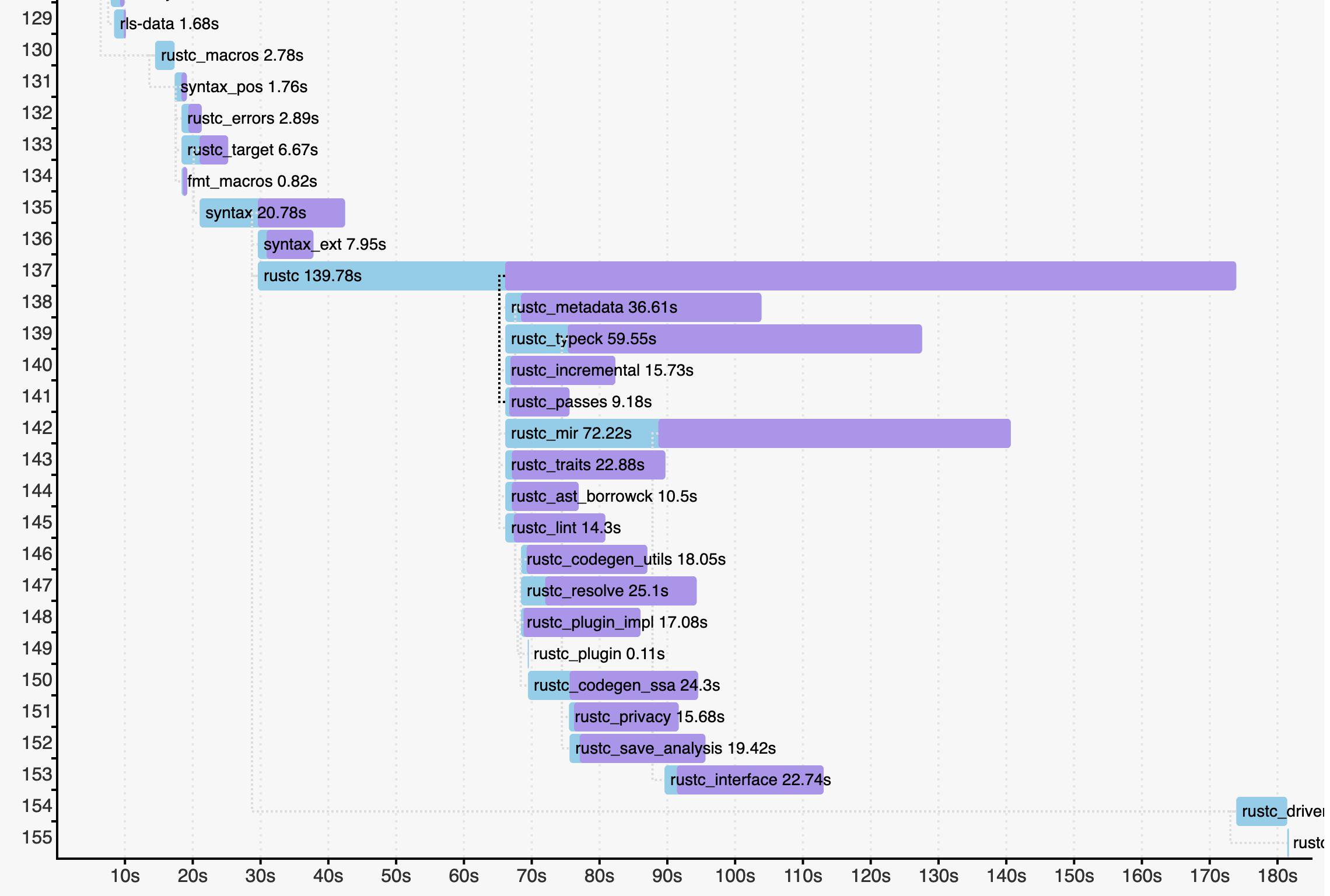

cargo -Ztimingsfeature really drive home how the size of therustccrate hurts compile times for the compiler. Here's a picture:rustccrate takes about twice as long to compile as therustc_mircrate, which is the second-biggest crate.rustccrate's codegen is happening. (Thank goodness for pipelining, it really makes a difference in this case.)rustccrate without anything else happening in parallel (from 42-67s, and from 141-174s).Also, even doing

checkbuilds onrustccode is painful. On my fast 28-core Linux box it takes 10-15 seconds even for the first compile error to come up. This is much longer than other crates. Refactorings within therustccrate that require many edit/compile cycles are painful.I've been told that

rustcis quite tangled and splitting it up could be difficult. That may well be true. The good news is that the picture shows we don't need to split it into 10 equal-sized pieces to get benefits. Even splitting off small chunks into separate crates will have an outsized effect on compilation times.
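To make the argument about small chunks concrete, here is a minimal sketch of a toy build model. It assumes unlimited parallelism and no pipelining, so a crate starts as soon as all of its dependencies finish and the total build time is the longest dependency chain. The crate names (`big`, `mir`, `passes`, `driver`) and all durations are hypothetical and are not taken from the timings picture.

```rust
use std::collections::HashMap;

/// A crate in a toy build graph: its own compile time in seconds and the
/// names of the crates it depends on. All values here are hypothetical.
struct CrateNode {
    secs: u32,
    deps: Vec<&'static str>,
}

/// Earliest finish time of `name`, assuming unlimited parallelism and no
/// pipelining: a crate starts once all of its dependencies have finished.
fn finish_time(
    name: &'static str,
    graph: &HashMap<&'static str, CrateNode>,
    memo: &mut HashMap<&'static str, u32>,
) -> u32 {
    if let Some(&t) = memo.get(name) {
        return t;
    }
    let node = &graph[name];
    let start = node
        .deps
        .iter()
        .map(|&d| finish_time(d, graph, memo))
        .max()
        .unwrap_or(0);
    let t = start + node.secs;
    memo.insert(name, t);
    t
}

/// Total build time is the latest finish time over all crates.
fn build_time(graph: &HashMap<&'static str, CrateNode>) -> u32 {
    let mut memo = HashMap::new();
    graph
        .keys()
        .map(|&k| finish_time(k, graph, &mut memo))
        .max()
        .unwrap_or(0)
}

/// One big crate that everything else waits on.
fn before_split() -> HashMap<&'static str, CrateNode> {
    let mut g = HashMap::new();
    g.insert("big", CrateNode { secs: 100, deps: vec![] });
    g.insert("mir", CrateNode { secs: 50, deps: vec!["big"] });
    g.insert("driver", CrateNode { secs: 10, deps: vec!["mir"] });
    g
}

/// The same total work, but 20s of "just a pass" code has moved out of
/// `big` into a separate `passes` crate that builds alongside `mir`.
fn after_split() -> HashMap<&'static str, CrateNode> {
    let mut g = HashMap::new();
    g.insert("big", CrateNode { secs: 80, deps: vec![] });
    g.insert("passes", CrateNode { secs: 20, deps: vec!["big"] });
    g.insert("mir", CrateNode { secs: 50, deps: vec!["big"] });
    g.insert("driver", CrateNode { secs: 10, deps: vec!["mir", "passes"] });
    g
}

fn main() {
    println!("before split: {}s", build_time(&before_split()));
    println!("after split:  {}s", build_time(&after_split()));
}
```

In this toy model the total amount of work is the same in both graphs, but the split version finishes sooner because the moved code no longer sits on the longest chain. Real builds have limited cores and pipelining, so actual savings would need to be measured with `cargo -Ztimings`.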