Hi @yisun-git, thank you for providing this additional detail on the VMM/hypervisor crates!

Some thoughts:

- I like the VMM and VM traits you've outlined and the functionality they provide. I see them working nicely with the VCPU trait, forming a clear hierarchy of functionality and solid building blocks for hypervisors.

- I see dynamic dispatch being used in the `create` functions of `Hypervisor` and `Vm`. I think these might be better served by static dispatch and trait generics, rather than boxing the references to the other trait implementations. The overhead of dynamic dispatch and virtual function calls may be unnecessary: as you said, there will only be one implementation of hypervisor <-> VM <-> VCPU (selected at the highest level through conditional compilation) for each scenario. A statically dispatched return value of a generic trait type can be achieved with the `impl Trait` syntax. For example:

  ```rust
  pub trait Vcpu {
      // …
  }

  pub trait Vm {
      // Trait methods take no `pub` qualifier; `impl Trait` in the
      // return position of a trait method requires Rust 1.75 or newer.
      fn create_vcpu(&self, id: u8) -> impl Vcpu;

      // …
  }
  ```

- I think each of these traits would be better implemented as a separate crate, so that each piece can be used modularly as a VMM building block. That would make the VM crate a natural next step to implement, as the next-highest-level crate (it would consume the VCPU crate).
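The sketch below makes the dispatch trade-off in the second point concrete. It contrasts boxed trait objects with two static-dispatch options. All names (`BoxedVm`, `StaticVm`, `AssocVm`, `MockVm`, `MockVcpu` and the helper functions) are hypothetical and are not part of the proposal. The associated-type variant is an alternative to `impl Trait` that also lets callers name the concrete vCPU type. The `impl Trait` variant needs Rust 1.75 or newer.

```rust
/// Minimal vCPU interface for the comparison.
pub trait Vcpu {
    fn id(&self) -> u8;
    fn run(&mut self) -> u32;
}

/// Dynamic dispatch: each vCPU is heap-allocated and every call on it
/// goes through a vtable.
pub trait BoxedVm {
    fn create_vcpu(&self, id: u8) -> Box<dyn Vcpu>;
}

/// Static dispatch with return-position `impl Trait` in a trait
/// (Rust 1.75+): the concrete type is fixed per implementation.
pub trait StaticVm {
    fn create_vcpu(&self, id: u8) -> impl Vcpu;
}

/// Static dispatch with an associated type, which also lets callers
/// name the concrete vCPU type.
pub trait AssocVm {
    type VcpuType: Vcpu;
    fn create_vcpu(&self, id: u8) -> Self::VcpuType;
}

/// Hypothetical mock vCPU; `run` reports its id instead of entering a guest.
pub struct MockVcpu {
    id: u8,
}

impl Vcpu for MockVcpu {
    fn id(&self) -> u8 {
        self.id
    }
    fn run(&mut self) -> u32 {
        u32::from(self.id)
    }
}

/// Hypothetical mock VM implementing all three styles.
pub struct MockVm;

impl BoxedVm for MockVm {
    fn create_vcpu(&self, id: u8) -> Box<dyn Vcpu> {
        Box::new(MockVcpu { id })
    }
}

impl StaticVm for MockVm {
    fn create_vcpu(&self, id: u8) -> impl Vcpu {
        MockVcpu { id }
    }
}

impl AssocVm for MockVm {
    type VcpuType = MockVcpu;
    fn create_vcpu(&self, id: u8) -> MockVcpu {
        MockVcpu { id }
    }
}

/// Monomorphized per `V`: calls are resolved at compile time and can be inlined.
fn run_static<V: StaticVm>(vm: &V, id: u8) -> u32 {
    let mut vcpu = vm.create_vcpu(id);
    vcpu.run()
}

/// One shared function: calls go through the vtable of `dyn Vcpu`.
fn run_dynamic(vm: &dyn BoxedVm, id: u8) -> u32 {
    let mut vcpu = vm.create_vcpu(id);
    vcpu.run()
}

/// With an associated type the caller can store or return the concrete vCPU.
fn make_vcpu<V: AssocVm>(vm: &V, id: u8) -> V::VcpuType {
    vm.create_vcpu(id)
}

fn main() {
    let vm = MockVm;
    println!("static: {}", run_static(&vm, 0));
    println!("dynamic: {}", run_dynamic(&vm, 1));
    let vcpu: MockVcpu = make_vcpu(&vm, 2);
    println!("assoc: {}", vcpu.id());
}
```

With either static variant, the compiler generates one copy of `run_static` or `make_vcpu` per backend type. That cost is small when only one backend is compiled into a given build.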

You've created the issue here because of its connection to the Vcpu crate, but feel free to also move the discussion to the rust-vmm community issues so that a larger audience sees it.

Proposal

The vmm-vcpu crate has made vCPU handling hypervisor agnostic, but there is still work to do to make the whole of rust-vmm hypervisor agnostic. This proposal therefore extends vmm-vcpu into a Hypervisor crate to achieve that.

Short Description

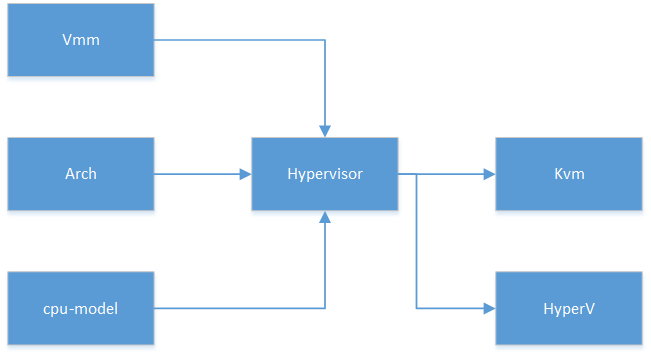

The Hypervisor crate abstracts the interfaces of different hypervisors (e.g. KVM ioctls) behind a unified interface for the upper layer. Each concrete hypervisor (e.g. KVM, Hyper-V) implements the traits to provide its hypervisor-specific functionality.

The upper layer (e.g. the Vmm) creates a Hypervisor instance that is linked to the running hypervisor. It then calls the running hypervisor's interfaces through that instance, which keeps the upper layer hypervisor agnostic.

Why is this crate relevant to the rust-vmm project?

rust-vmm should work with any hypervisor (e.g. KVM, Hyper-V). A hypervisor abstraction crate is therefore needed to encapsulate the hypervisor-specific operations, so that the upper layers can be implemented once, in a hypervisor-agnostic way.

Design

Relationships of crates

Compilation arguments

The concrete hypervisor instance for Hypervisor users (e.g. the Vmm) is selected through a compilation argument, because only one hypervisor runs in a given cloud scenario.
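One way to express this selection is a Cargo feature plus a type alias. The sketch below assumes a hypothetical `hyperv` feature (it would be declared under `[features]` in `Cargo.toml`) and hypothetical stub backends `KvmHypervisor` and `HypervHypervisor`. KVM is the default here only so that the snippet builds with no features enabled.

```rust
/// Trimmed-down Hypervisor trait for this sketch.
pub trait Hypervisor {
    fn name(&self) -> &'static str;
}

// Hypothetical KVM backend: built unless the `hyperv` feature is enabled.
#[cfg(not(feature = "hyperv"))]
#[derive(Default)]
pub struct KvmHypervisor;

#[cfg(not(feature = "hyperv"))]
impl Hypervisor for KvmHypervisor {
    fn name(&self) -> &'static str {
        "kvm"
    }
}

// Hypothetical Hyper-V backend: built only with `--features hyperv`.
#[cfg(feature = "hyperv")]
#[derive(Default)]
pub struct HypervHypervisor;

#[cfg(feature = "hyperv")]
impl Hypervisor for HypervHypervisor {
    fn name(&self) -> &'static str {
        "hyperv"
    }
}

/// The one concrete hypervisor type for this build; the Vmm only names this alias.
#[cfg(not(feature = "hyperv"))]
pub type SelectedHypervisor = KvmHypervisor;
#[cfg(feature = "hyperv")]
pub type SelectedHypervisor = HypervHypervisor;

fn main() {
    // No runtime branching or trait objects: the choice was made at compile time.
    let hypervisor = SelectedHypervisor::default();
    println!("running on {}", hypervisor.name());
}
```

Building with `cargo build --features hyperv` would swap in the other backend without changing any code that uses `SelectedHypervisor`.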

Hypervisor crate

The crate itself is simple: it exposes three public traits, Hypervisor, Vm and Vcpu. It is used by the KVM, Hyper-V, etc. backends, which implement these traits. The interfaces defined below only illustrate the mechanism. They are taken from Firecracker and are therefore fairly KVM specific; we may change them as requirements evolve.

Note: The Vcpu part is based on [1] and [2], with some changes.

[1] While the data types themselves (`VmmRegs`, `SpecialRegisters`, etc.) are exposed via the trait with generic names, under the hood they can be `kvm_bindings` data structures, which are also exposed from the same crate via public redefinitions:
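The public-redefinition idea can be sketched as follows. The sketch assumes a cut-down stand-in for `kvm_bindings::kvm_regs` (the real structure carries all general-purpose registers) and a hypothetical `MockVcpu`. Users of the crate only ever write `VmmRegs`. For a KVM build, that name refers to the binding structure itself, so no conversion is needed when the value is passed to KVM.

```rust
/// Stand-in for the real `kvm_bindings` crate so the sketch compiles on its own;
/// only a few of the real `kvm_regs` fields are kept here.
mod kvm_bindings {
    #[allow(non_camel_case_types)]
    #[repr(C)]
    #[derive(Debug, Default, Clone, Copy, PartialEq)]
    pub struct kvm_regs {
        pub rax: u64,
        pub rbx: u64,
        pub rsp: u64,
        pub rip: u64,
        pub rflags: u64,
    }
}

/// Generic name exposed by the Hypervisor crate. For a KVM build it is a plain
/// public re-export of the binding type, so no conversion is needed.
pub use kvm_bindings::kvm_regs as VmmRegs;

pub trait Vcpu {
    fn get_regs(&self) -> VmmRegs;
    fn set_regs(&mut self, regs: &VmmRegs);
}

/// Hypothetical vCPU that just stores its registers in memory.
#[derive(Default)]
pub struct MockVcpu {
    regs: VmmRegs,
}

impl Vcpu for MockVcpu {
    fn get_regs(&self) -> VmmRegs {
        self.regs
    }
    fn set_regs(&mut self, regs: &VmmRegs) {
        self.regs = *regs;
    }
}

fn main() {
    let mut vcpu = MockVcpu::default();
    // Callers only see `VmmRegs`; bit 1 of RFLAGS is reserved and always set.
    let regs = VmmRegs { rip: 0x1000, rflags: 0x2, ..Default::default() };
    vcpu.set_regs(&regs);
    println!("{:?}", vcpu.get_regs());
}
```

Printing the value shows the underlying `kvm_regs` name, because `VmmRegs` is only another name for that type.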

Sample code showing how it works

Kvm crate

Below is sample code from the Kvm crate showing how to implement the above traits.
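The following is a hypothetical sketch of what implementing the vCPU trait involves on the KVM side. `RawExit` and `KvmVcpu` are illustrative stand-ins: a real backend would wrap the vCPU file descriptor (e.g. from the kvm-ioctls crate) and issue `KVM_RUN`. The trimmed `VcpuExit` enum is also an assumption. The sketch focuses on the translation step, where the backend maps KVM exit reason codes into a hypervisor-neutral enum so that no KVM-specific value leaks to the Vmm.

```rust
/// What the Hypervisor crate would define (trimmed, hypothetical).
mod hypervisor {
    use std::io;

    /// Hypervisor-neutral exit reasons seen by the Vmm.
    #[derive(Debug, PartialEq)]
    pub enum VcpuExit {
        IoOut { port: u16, data: Vec<u8> },
        Hlt,
        Unsupported(u32),
    }

    pub trait Vcpu {
        fn run(&mut self) -> io::Result<VcpuExit>;
    }
}

/// What the Kvm crate would provide.
mod kvm {
    use super::hypervisor::{Vcpu, VcpuExit};
    use std::io;

    // Exit reason codes as defined in linux/kvm.h.
    pub const KVM_EXIT_IO: u32 = 2;
    pub const KVM_EXIT_HLT: u32 = 5;

    /// Hypothetical stand-in for the raw exit state KVM reports after KVM_RUN.
    pub struct RawExit {
        pub reason: u32,
        pub port: u16,
        pub data: Vec<u8>,
    }

    /// Hypothetical KVM vCPU that replays canned exits instead of issuing KVM_RUN.
    pub struct KvmVcpu {
        pending: Vec<RawExit>,
    }

    impl KvmVcpu {
        pub fn new(mut exits: Vec<RawExit>) -> Self {
            exits.reverse(); // so that `pop` yields the exits in order
            KvmVcpu { pending: exits }
        }
    }

    impl Vcpu for KvmVcpu {
        fn run(&mut self) -> io::Result<VcpuExit> {
            let raw = self
                .pending
                .pop()
                .ok_or_else(|| io::Error::new(io::ErrorKind::Other, "no more exits"))?;
            // Translate KVM-specific reasons into the neutral enum.
            Ok(match raw.reason {
                KVM_EXIT_IO => VcpuExit::IoOut { port: raw.port, data: raw.data },
                KVM_EXIT_HLT => VcpuExit::Hlt,
                other => VcpuExit::Unsupported(other),
            })
        }
    }
}

use hypervisor::{Vcpu, VcpuExit};
use kvm::{KvmVcpu, RawExit};

fn main() {
    let mut vcpu = KvmVcpu::new(vec![
        RawExit { reason: kvm::KVM_EXIT_IO, port: 0x3f8, data: b"ok".to_vec() },
        RawExit { reason: kvm::KVM_EXIT_HLT, port: 0, data: Vec::new() },
    ]);
    // The Vmm side only matches on `VcpuExit`, never on KVM codes.
    loop {
        match vcpu.run().expect("vCPU run failed") {
            VcpuExit::IoOut { port, data } => println!("out 0x{:x}: {:?}", port, data),
            VcpuExit::Hlt => break,
            VcpuExit::Unsupported(reason) => panic!("unhandled exit reason {}", reason),
        }
    }
}
```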

Vmm crate

Below is sample code from the Vmm crate showing how to work with the Hypervisor crate.

When the Vmm starts, it creates the concrete hypervisor instance according to the compilation argument, sets it on the Vmm, and starts the flow: create guest VM -> create guest vCPUs -> run.
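The start flow can be sketched as a `Vmm` that is generic over the `Hypervisor` trait. The trait shapes, the trimmed `VcpuExit` enum and the `Mock*` backend are hypothetical. For brevity, the sketch runs vCPUs one after another on the calling thread, whereas a real VMM would typically run each vCPU on its own thread. Serial output on port 0x3f8 (COM1) stands in for guest work.

```rust
use std::io;

/// Hypothetical, trimmed exit reasons seen by the Vmm.
#[derive(Debug)]
pub enum VcpuExit {
    IoOut { port: u16, data: Vec<u8> },
    Hlt,
}

pub trait Vcpu {
    fn run(&mut self) -> io::Result<VcpuExit>;
}

pub trait Vm {
    type VcpuType: Vcpu;
    fn create_vcpu(&self, id: u8) -> io::Result<Self::VcpuType>;
}

pub trait Hypervisor {
    type VmType: Vm;
    fn create_vm(&self) -> io::Result<Self::VmType>;
}

/// COM1, the conventional first serial port on x86.
const SERIAL_PORT: u16 = 0x3f8;

/// Vmm generic over whichever hypervisor was selected at build time.
pub struct Vmm<H: Hypervisor> {
    hypervisor: H,
}

impl<H: Hypervisor> Vmm<H> {
    pub fn new(hypervisor: H) -> Self {
        Vmm { hypervisor }
    }

    /// create guest VM -> create guest vCPUs -> run; returns each vCPU's serial output.
    pub fn start(&self, num_vcpus: u8) -> io::Result<Vec<Vec<u8>>> {
        let vm = self.hypervisor.create_vm()?;
        let mut vcpus = (0..num_vcpus)
            .map(|id| vm.create_vcpu(id))
            .collect::<io::Result<Vec<_>>>()?;
        let mut serial = Vec::new();
        // Sequential for brevity; a real Vmm would give each vCPU its own thread.
        for vcpu in vcpus.iter_mut() {
            let mut out = Vec::new();
            loop {
                match vcpu.run()? {
                    VcpuExit::IoOut { port, data } if port == SERIAL_PORT => out.extend(data),
                    VcpuExit::IoOut { .. } => {} // other ports are ignored here
                    VcpuExit::Hlt => break,
                }
            }
            serial.push(out);
        }
        Ok(serial)
    }
}

/// Hypothetical backend: each vCPU writes its id as an ASCII digit to COM1, then halts.
pub struct MockHypervisor;
pub struct MockVm;
pub struct MockVcpu {
    id: u8,
    wrote: bool,
}

impl Hypervisor for MockHypervisor {
    type VmType = MockVm;
    fn create_vm(&self) -> io::Result<MockVm> {
        Ok(MockVm)
    }
}

impl Vm for MockVm {
    type VcpuType = MockVcpu;
    fn create_vcpu(&self, id: u8) -> io::Result<MockVcpu> {
        Ok(MockVcpu { id, wrote: false })
    }
}

impl Vcpu for MockVcpu {
    fn run(&mut self) -> io::Result<VcpuExit> {
        if self.wrote {
            return Ok(VcpuExit::Hlt);
        }
        self.wrote = true;
        Ok(VcpuExit::IoOut { port: SERIAL_PORT, data: vec![b'0' + self.id] })
    }
}

fn main() -> io::Result<()> {
    let vmm = Vmm::new(MockHypervisor);
    println!("{:?}", vmm.start(2)?); // [[48], [49]]
    Ok(())
}
```

Because `Vmm<H>` depends only on the traits, swapping the backend changes the type parameter and nothing else.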

References:

[1] https://github.com/rust-vmm/community/issues/40

[2] https://github.com/rust-vmm/vmm-vcpu