This is one of the key differences between salsa and Adapton (cc @matthewhammer). We followed the Glimmer approach here (cc @wycats), in part because it is much simpler: you only need to track the inputs to any query, and don't have to be able to walk the graph the other way. In the context of Glimmer, it was also found to be more efficient: you are only doing work you know you have to do.

I think I would like to wait a bit until we see a true problem before pre-emptively making changes here. That might reveal whether indeed any changes are needed.

I would expect that in the context of an IDE, as you type into a fn, the IDE is also prioritizing re-evaluation of queries related to that function, so they need to be re-evaluated anyway. It will also be re-evaluating the set of errors in other files, of course, but that can be done at a lower priority and will be continuously getting canceled as you type. Once you reach quiescence, we'll have to revalidate the inputs to those other files, but I expect that will be fast. In particular, they will share many of the same inputs, so the idea of O(n) time to revalidate a single query isn't really correct -- don't forget that once we revalidate the work for a given function, we cache that by updating `verified_at`, so we won't revalidate it twice. Hence it's really O(n) time to revalidate the entire graph.
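To make the caching argument concrete, here is a minimal Rust sketch of pull-based revalidation. It is a toy model, not salsa's actual implementation, and the `Node`, `Db`, `verify`, and `build` names are hypothetical. Each node records the revision in which its value last changed (`changed_at`) and the revision in which it was last confirmed up to date (`verified_at`). Once a node is verified in the current revision, later checks reuse that result instead of walking its inputs again. With 100 files, one "all impls" query reading every file, and 50 function queries that all read "all impls", revalidating every function visits each node once (151 visits) rather than once per function (50 × 102).

```rust
/// Hypothetical model of pull-based revalidation; not salsa's actual types.
struct Node {
    deps: Vec<usize>, // inputs/queries read the last time this node was computed
    changed_at: u64,  // revision in which this node's value last changed
    verified_at: u64, // revision in which it was last confirmed up to date
}

struct Db {
    revision: u64,
    nodes: Vec<Node>,
    visits: usize, // how many nodes actually had to be checked
}

impl Db {
    /// Makes sure `id` is verified for the current revision and returns the
    /// revision at which its value last changed.
    fn verify(&mut self, id: usize) -> u64 {
        if self.nodes[id].verified_at == self.revision {
            return self.nodes[id].changed_at; // cached: already checked this revision
        }
        self.visits += 1;
        let old_verified_at = self.nodes[id].verified_at;
        let deps = self.nodes[id].deps.clone();
        let mut input_changed = false;
        for dep in deps {
            if self.verify(dep) > old_verified_at {
                input_changed = true;
            }
        }
        if input_changed {
            // A real system would re-execute the query here and keep the old
            // `changed_at` if the result is equal; this sketch assumes it changed.
            self.nodes[id].changed_at = self.revision;
        }
        self.nodes[id].verified_at = self.revision;
        self.nodes[id].changed_at
    }
}

fn node(deps: Vec<usize>) -> Node {
    Node { deps, changed_at: 0, verified_at: 0 }
}

/// `n_files` inputs, one "all impls" query reading every file, and `n_fns`
/// per-function queries that each read "all impls".
fn build(n_files: usize, n_fns: usize) -> Db {
    let mut nodes: Vec<Node> = (0..n_files).map(|_| node(Vec::new())).collect();
    let all_impls = nodes.len();
    nodes.push(node((0..n_files).collect()));
    for _ in 0..n_fns {
        nodes.push(node(vec![all_impls]));
    }
    Db { revision: 0, nodes, visits: 0 }
}

fn main() {
    let (n_files, n_fns) = (100, 50);
    let mut db = build(n_files, n_fns);
    db.revision += 1; // some unrelated input changed: every memo is now "maybe stale"
    for f in n_files + 1..n_files + 1 + n_fns {
        db.verify(f);
    }
    // Each node is checked once: 100 files + 1 "all impls" + 50 functions.
    assert_eq!(db.visits, n_files + 1 + n_fns);
    println!("visited {} nodes", db.visits);
}
```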

But I guess time will tell. =)

Currently, applying any change advances the global revision and "marks" all queries as potentially invalid (because their `verified_at` becomes smaller than the current revision). The "marking" process is O(1) (we only bump one atomic usize), but subsequent validation can be O(N). Validation is on-demand (pull-based) and cheap, because we don't execute queries and just compare hashes, but it still must walk large parts of the query graph.

Here are two examples where we might want something more efficient.
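The O(1) "marking" step can be sketched as follows, assuming a runtime that keeps the global revision in an `AtomicUsize`. The `Runtime` and `Memo` types and their methods are hypothetical, not salsa's API. Bumping the counter never touches any memo; it only makes every `verified_at` compare as older, which is why the real cost shows up later, during validation.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical runtime holding the single global revision counter.
struct Runtime {
    current_revision: AtomicUsize,
}

impl Runtime {
    /// Applying any change: one atomic increment, independent of how many memos exist.
    fn new_revision(&self) -> usize {
        self.current_revision.fetch_add(1, Ordering::SeqCst) + 1
    }

    fn current_revision(&self) -> usize {
        self.current_revision.load(Ordering::SeqCst)
    }
}

/// Hypothetical memoized query result.
struct Memo {
    verified_at: usize,
}

impl Memo {
    /// The memo is only *potentially* stale: it still has to be validated on demand.
    fn needs_validation(&self, rt: &Runtime) -> bool {
        self.verified_at < rt.current_revision()
    }
}

fn main() {
    let rt = Runtime { current_revision: AtomicUsize::new(1) };
    let memos: Vec<Memo> = (0..10_000).map(|_| Memo { verified_at: 1 }).collect();
    assert!(memos.iter().all(|m| !m.needs_validation(&rt)));

    rt.new_revision(); // O(1): no memo is touched
    assert!(memos.iter().all(|m| m.needs_validation(&rt)));
}
```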

function body modification

In IDEs, a lot of typing happens inside a single function body. At first sight, this seems like it could be implemented very efficiently: changing a body can't (in most cases) have any global effects, so we should be able to recompute types inside the function's body very efficiently. However, in the current setup, types inside functions indirectly depend on all source files (because, to infer types, you need to know all of the impls, which might be anywhere), so, after a change to a function, we need to check whether all inputs are up to date, and this is O(N) in the number of files in the project.

unrelated crates modification

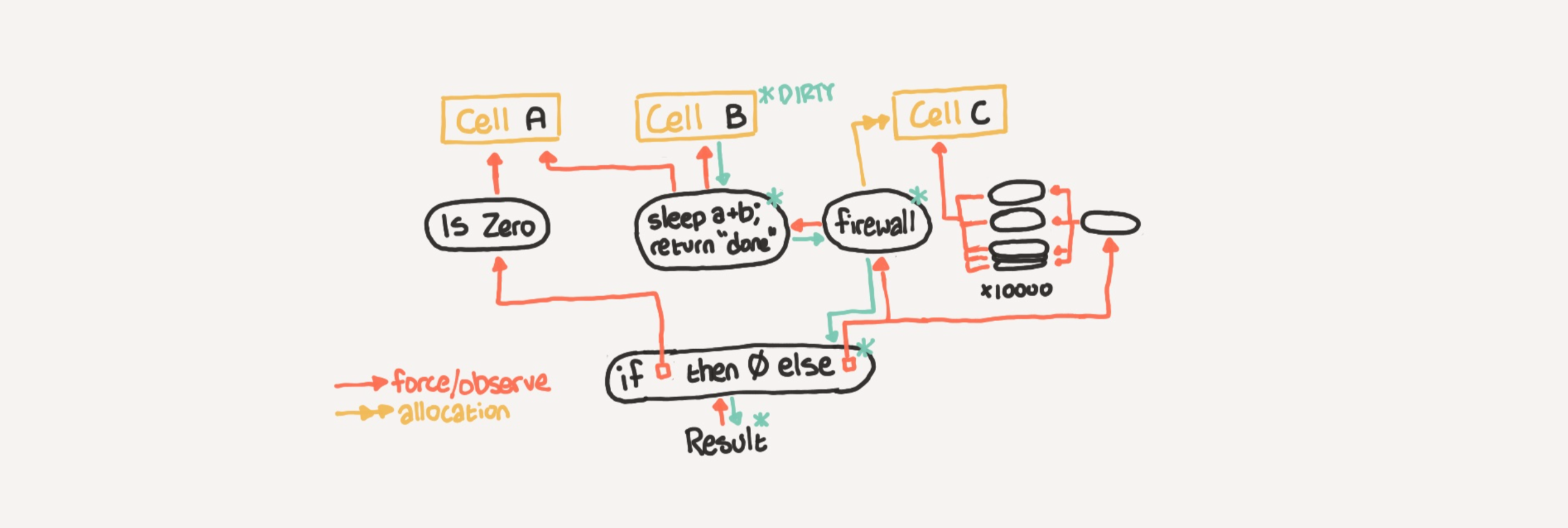

Suppose we have two independent crates, A and B, and a third crate C, which depends on both A and B. Ideally, any modification of A should invalidate queries for C, but all queries for B should remain fresh. In the current setup, because there's a single global revision counter, we will have to revalidate B's queries anyway.

It seems like we could achieve better big-O here by moving validation work from query time (pull) to modification time (push).

In the second example, we can define a per-crate revision counter, which is the sum of the revisions of the crates it depends on plus the revision of the crate's own source. During modification, we'll have to update the revision of all the reverse dependencies of the modified crate, but during a query we can skip validation altogether if the crate-local revision hasn't changed.
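A minimal sketch of the per-crate counter, under stated assumptions: crates are numbered in topological order (every dependency has a smaller index than its dependents), and `CrateGraph`, `modify`, `Memo`, and `query` are hypothetical names, not an existing salsa API. Because each crate's own revision only ever increases, the sum changes whenever anything in the crate's dependency closure changes, so comparing one number is enough to decide that a memo is fresh.

```rust
use std::collections::HashMap;

/// Hypothetical per-crate revision tracking (a sketch, not salsa's API).
/// Assumes topological numbering: every dependency of crate `i` has index < `i`.
struct CrateGraph {
    deps: Vec<Vec<usize>>,
    own_rev: Vec<u64>,       // revision of each crate's own source
    effective_rev: Vec<u64>, // own_rev + sum of dependencies' effective revisions
}

impl CrateGraph {
    fn new(deps: Vec<Vec<usize>>) -> Self {
        let n = deps.len();
        let mut g = CrateGraph { deps, own_rev: vec![0; n], effective_rev: vec![0; n] };
        for i in 0..n {
            g.recompute(i);
        }
        g
    }

    fn recompute(&mut self, i: usize) {
        let from_deps: u64 = self.deps[i].iter().map(|&d| self.effective_rev[d]).sum();
        self.effective_rev[i] = self.own_rev[i] + from_deps;
    }

    /// Push: an edit to crate `c` updates `c` and all of its transitive
    /// reverse dependencies, and nothing else.
    fn modify(&mut self, c: usize) {
        self.own_rev[c] += 1;
        let mut changed = vec![false; self.deps.len()];
        changed[c] = true;
        self.recompute(c);
        for i in c + 1..self.deps.len() {
            if self.deps[i].iter().any(|&d| changed[d]) {
                changed[i] = true;
                self.recompute(i);
            }
        }
    }
}

/// Hypothetical memo: only records the crate-local revision it was computed at.
struct Memo {
    crate_rev: u64,
}

/// Returns `true` if the (stand-in) query for crate `c` had to be recomputed.
fn query(g: &CrateGraph, memos: &mut HashMap<usize, Memo>, c: usize) -> bool {
    let rev = g.effective_rev[c];
    if memos.get(&c).map_or(false, |m| m.crate_rev == rev) {
        return false; // crate-local revision unchanged: skip validation entirely
    }
    // ... the real query would run here ...
    memos.insert(c, Memo { crate_rev: rev });
    true
}

fn main() {
    // crate 0 = A, crate 1 = B, crate 2 = C (depends on A and B)
    let mut g = CrateGraph::new(vec![vec![], vec![], vec![0, 1]]);
    let mut memos = HashMap::new();
    let first: Vec<usize> = (0..3).filter(|&c| query(&g, &mut memos, c)).collect();
    assert_eq!(first, vec![0, 1, 2]);

    g.modify(0); // edit A: new revisions are pushed to A and C only
    let second: Vec<usize> = (0..3).filter(|&c| query(&g, &mut memos, c)).collect();
    assert_eq!(second, vec![0, 2]); // B's memo is reused without any validation
}
```

The cost moves into `modify`, which touches every transitive reverse dependency of the edited crate; a query for B afterwards is answered with a single integer comparison.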

In the first example, we can define a "set of item declarations" query, whose results should not be affected by modifications of function bodies. As the "set of all items" is a union of per-file sets of items (more or less; macros make everything complicated), we should be able to update it when a single file is modified without checking whether all other files are fresh.
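A toy sketch of the incremental union, assuming a made-up `file_items` "parser" that treats unindented `fn ` / `struct ` lines as declarations and ignores everything else (real item collection, macros in particular, is far more involved). `ItemIndex` and `update_file` are hypothetical names. Per-name counts let one file's contribution be removed and re-added without reading any other file, and an edit that leaves a file's declarations unchanged, such as a function-body edit, stops early.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Toy stand-in for "item declarations in one file": unindented lines starting
/// with `fn ` or `struct `, keeping only the name. Body (indented) lines are ignored.
fn file_items(src: &str) -> BTreeSet<String> {
    src.lines()
        .filter_map(|line| {
            let rest = line.strip_prefix("fn ").or_else(|| line.strip_prefix("struct "))?;
            Some(rest.chars().take_while(|c| c.is_alphanumeric() || *c == '_').collect())
        })
        .collect()
}

/// Hypothetical index: per-file item sets plus their union, kept as counts.
#[derive(Default)]
struct ItemIndex {
    per_file: BTreeMap<String, BTreeSet<String>>,
    counts: BTreeMap<String, usize>, // item name -> number of files declaring it
}

impl ItemIndex {
    /// Re-derives the items of one file and patches the union.
    /// Returns `true` if the set of all items changed. Other files are never read.
    fn update_file(&mut self, path: &str, src: &str) -> bool {
        let new = file_items(src);
        let old = self.per_file.insert(path.to_string(), new.clone()).unwrap_or_default();
        if old == new {
            return false; // e.g. only a function body was edited
        }
        let mut changed = false;
        for name in old.difference(&new) {
            let n = self.counts.get_mut(name).unwrap();
            *n -= 1;
            if *n == 0 {
                self.counts.remove(name);
                changed = true;
            }
        }
        for name in new.difference(&old) {
            let n = self.counts.entry(name.clone()).or_insert(0);
            if *n == 0 {
                changed = true;
            }
            *n += 1;
        }
        changed
    }

    fn all_items(&self) -> Vec<&str> {
        self.counts.keys().map(|s| s.as_str()).collect()
    }
}

fn main() {
    let mut idx = ItemIndex::default();
    idx.update_file("a.rs", "fn foo() {\n    1\n}\n");
    idx.update_file("b.rs", "struct Bar;\n");

    // Editing only the body of `foo` leaves the global item set untouched.
    assert!(!idx.update_file("a.rs", "fn foo() {\n    2 + 2\n}\n"));

    // Adding and removing declarations only touches a.rs's contribution.
    assert!(idx.update_file("a.rs", "fn foo() {}\nfn baz() {}\n"));
    assert_eq!(idx.all_items(), vec!["Bar", "baz", "foo"]);
    assert!(idx.update_file("a.rs", "fn baz() {}\n"));
    assert_eq!(idx.all_items(), vec!["Bar", "baz"]);
}
```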