Hello.

If you would like to upscale JPEG images, you need to fine-tune our model on JPEG images.

This is because EDSR also super-resolves the JPEG artifacts themselves, so a model trained on clean images will end up enlarging those artifacts.

We found that training on JPEG-input / PNG-output pairs can solve this problem.
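Below is a minimal sketch of how one JPEG-input / PNG-output pair could be built with Pillow. It assumes bicubic downsampling and a JPEG quality of 75 for the input. Both are illustrative choices, not settings stated in this thread, and `make_jpeg_pair` is a hypothetical helper, not part of the EDSR code.

```python
import io

from PIL import Image


def make_jpeg_pair(hr: Image.Image, scale: int = 4, quality: int = 75):
    """Build one (LR input, HR target) training pair.

    Assumption (not from the EDSR code): the LR input is produced by
    bicubic downsampling followed by JPEG compression at `quality`,
    while the HR target stays clean and should be stored as PNG.
    """
    hr = hr.convert("RGB")
    # Crop so the HR size is divisible by the scale factor.
    w, h = hr.size
    w, h = w - w % scale, h - h % scale
    hr = hr.crop((0, 0, w, h))

    # Bicubic downsampling to the LR resolution.
    lr = hr.resize((w // scale, h // scale), Image.BICUBIC)

    # Round-trip the LR image through an in-memory JPEG encoder so the
    # network sees compression artifacts at its input.
    buf = io.BytesIO()
    lr.save(buf, format="JPEG", quality=quality)
    buf.seek(0)
    lr_jpeg = Image.open(buf).convert("RGB")

    return lr_jpeg, hr


# Usage on a synthetic image whose size is not divisible by 4.
hr_img = Image.new("RGB", (101, 67), (120, 60, 200))
lr_in, hr_target = make_jpeg_pair(hr_img, scale=4)
print(lr_in.size, hr_target.size)  # (25, 16) (100, 64)
```

The point of the design is the asymmetry: only the input carries JPEG artifacts, so the network learns to remove them rather than to reproduce them at a larger size.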

If you have enough time, you can try it.

Otherwise, I may be able to upload a model trained with the strategy above after the CVPR submission.

Thank you!

Hi, thanks for sharing.

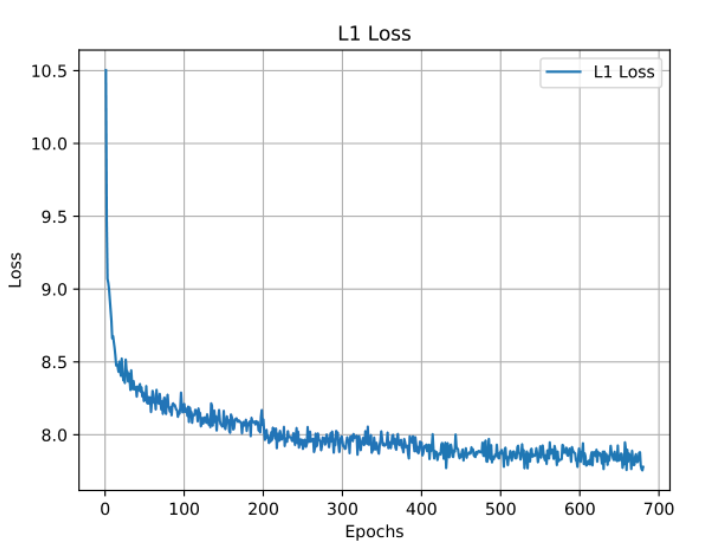

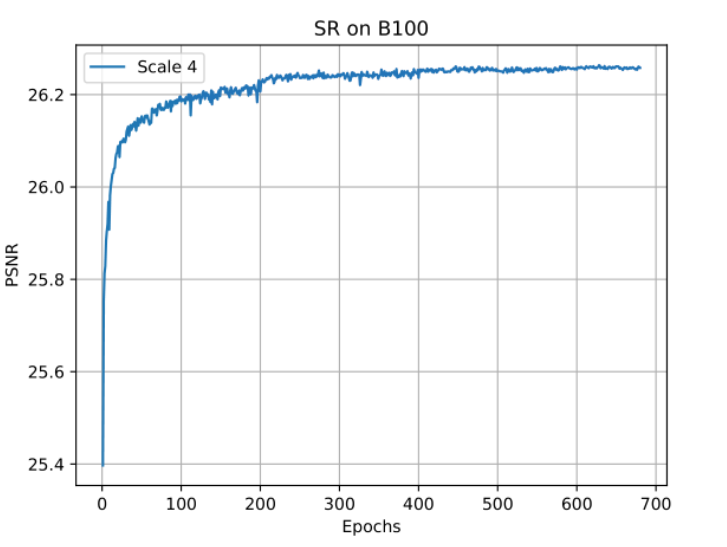

When I test on the DIV2K validation set, the results are very good. But when I test on B100, the results are very poor: the PSNR is 25.571 dB at scale 4. Both tests use the same pre-trained model, EDSR_baseline_x4.pt.

The images in the B100 dataset are in JPG format, and I obtained low-resolution JPG images by bicubic downsampling. I then used these JPG images for testing. However, the reconstructed results are poor and the PSNR is very low.
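One detail in this pipeline is worth checking. Saving the bicubic-downsampled LR images as JPG re-encodes them, which adds compression artifacts that a model trained on clean images never saw. The sketch below shows that a PNG round trip leaves the LR pixels unchanged while a JPEG round trip does not. It uses Pillow and NumPy on a random test image, and `make_lr` and `roundtrip` are hypothetical helpers. Whether this fully explains the low PSNR reported here is an assumption, not something confirmed in the thread.

```python
import io

import numpy as np
from PIL import Image


def make_lr(hr: Image.Image, scale: int = 4) -> Image.Image:
    """Bicubic-downsample an HR image by an integer scale factor."""
    w, h = hr.size
    hr = hr.crop((0, 0, w - w % scale, h - h % scale))
    return hr.resize((hr.width // scale, hr.height // scale), Image.BICUBIC)


def roundtrip(img: Image.Image, fmt: str) -> np.ndarray:
    """Encode `img` as `fmt` in memory, decode it, and return the pixels."""
    buf = io.BytesIO()
    img.save(buf, format=fmt)
    buf.seek(0)
    return np.asarray(Image.open(buf).convert("RGB"))


# Random 128x96 RGB "HR" image, seeded for reproducibility.
rng = np.random.default_rng(0)
hr = Image.fromarray(rng.integers(0, 256, (96, 128, 3), dtype=np.uint8))
lr = make_lr(hr, scale=4)
ref = np.asarray(lr).astype(int)

# Largest per-pixel change introduced by each file format.
png_err = np.abs(roundtrip(lr, "PNG").astype(int) - ref).max()
jpg_err = np.abs(roundtrip(lr, "JPEG").astype(int) - ref).max()
print(png_err, jpg_err)  # PNG error is 0; JPEG error is > 0
```

Saving the LR test inputs losslessly (for example as PNG) avoids feeding the model the JPEG artifacts described at the top of this thread.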

Could you tell me how you test on benchmark datasets such as B100? Thank you very much!
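The thread does not say how the authors compute PSNR on B100. A common convention for SR benchmark sets, assumed here and not confirmed by this thread, is to measure PSNR on the luma (Y) channel only and to crop a border of `scale` pixels before comparing. The sketch below uses the ITU-R BT.601 coefficients found in MATLAB's `rgb2ycbcr`. `rgb_to_y` and `psnr_y` are hypothetical helpers, and RGB-channel PSNR without cropping will generally give a different number.

```python
import numpy as np


def rgb_to_y(img: np.ndarray) -> np.ndarray:
    """BT.601 luma (Y) for an RGB array in [0, 255] with shape (H, W, 3)."""
    img = img.astype(np.float64)
    return 16.0 + (65.481 * img[..., 0]
                   + 128.553 * img[..., 1]
                   + 24.966 * img[..., 2]) / 255.0


def psnr_y(sr: np.ndarray, hr: np.ndarray, scale: int) -> float:
    """PSNR in dB on the Y channel, ignoring a `scale`-pixel border.

    Assumed convention: Y-channel evaluation with border cropping.
    """
    if sr.shape != hr.shape:
        raise ValueError("SR and HR images must have the same shape")
    diff = rgb_to_y(sr) - rgb_to_y(hr)
    # Crop the border, where upscaling errors are usually largest.
    diff = diff[scale:-scale, scale:-scale]
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")
    return 10.0 * float(np.log10(255.0 ** 2 / mse))
```

Usage: `psnr_y(sr_array, hr_array, scale=4)`, where both arrays are decoded 8-bit RGB images of the same size.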