Closed sg-s closed 7 years ago

demo_finite_size

shuffled prinz model

Adaptive sampling can be used to sweep a parameter space efficiently. Instead of sampling on a regular grid, adaptive sampling algorithms sample densely where the metric of interest is changing quickly and sparsely where it varies slowly or not at all. This makes them effective at identifying boundaries in parameter space. In two dimensions, Delaunay triangulation can be used to partition the space into searchable regions, which can then be explored efficiently. Figure 2 shows the results of an adaptive sampling algorithm operating over two parameters of a stomatogastric ganglion neuron model (Z. Liu et al. 1998). The burst period of the neuron depends on the slow calcium conductance (gCaS) and the hyperpolarization-activated mixed cation conductance (gH). The algorithm samples more densely where the absolute value of the gradient of the burst period with respect to the parameters is high, so that sampling effort is concentrated in regions of interest.
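The refinement idea described above can be sketched in one dimension. This is a minimal illustration and not the algorithm used to produce the figure: intervals stand in for Delaunay triangles, `toy_metric` is a hypothetical stand-in for a simulated burst period, and the scoring rule (normalized chord length across each interval) is one assumed choice among many.

```python
import math


def toy_metric(x):
    # Hypothetical stand-in for a simulated burst period:
    # nearly flat at both ends, changing steeply near x = 0.5.
    return math.tanh(20.0 * (x - 0.5))


def adaptive_sample(f, lo, hi, n_initial=5, n_total=40):
    """Refine a coarse grid by repeatedly bisecting the interval
    across which the metric changes the most."""
    xs = [lo + (hi - lo) * i / (n_initial - 1) for i in range(n_initial)]
    ys = [f(x) for x in xs]
    while len(xs) < n_total:
        # Normalize both axes so the score is scale-free.
        y_span = (max(ys) - min(ys)) or 1.0

        def score(i):
            dx = (xs[i + 1] - xs[i]) / (hi - lo)
            dy = (ys[i + 1] - ys[i]) / y_span
            # Chord length: large where the metric changes quickly,
            # but never zero, so flat regions are still revisited.
            return math.hypot(dx, dy)

        i = max(range(len(xs) - 1), key=score)
        x_new = 0.5 * (xs[i] + xs[i + 1])
        xs.insert(i + 1, x_new)
        ys.insert(i + 1, f(x_new))
    return xs, ys


xs, ys = adaptive_sample(toy_metric, 0.0, 1.0)
n_steep = sum(0.4 <= x <= 0.6 for x in xs)  # samples near the steep region
n_flat = sum(x <= 0.2 for x in xs)          # samples in a flat region
print(f"samples near the transition: {n_steep}, in the flat region: {n_flat}")
```

Including the interval width in the score is a deliberate choice here: scoring on the change in the metric alone would never revisit regions that looked flat on the initial coarse grid.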

Adaptive sampling of two parameters to characterize burst period. Red pluses indicate boundaries of the null region (no bursting in the model). Dots indicate sampled parameter values. Dots are colored based on observed burst period. Dots are clustered where the burst period covaries with the parameters most strongly.

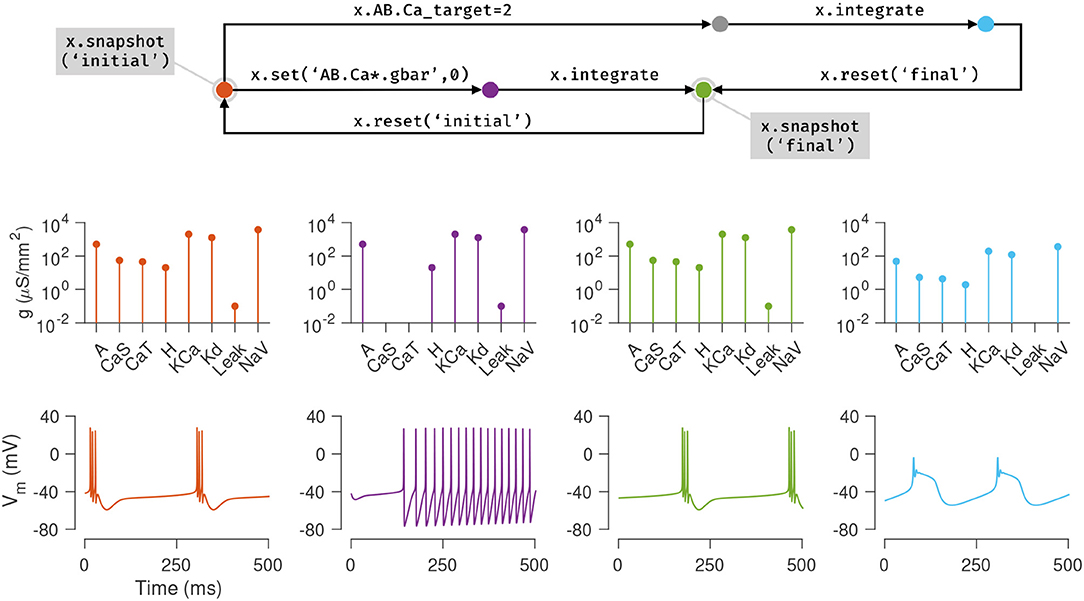

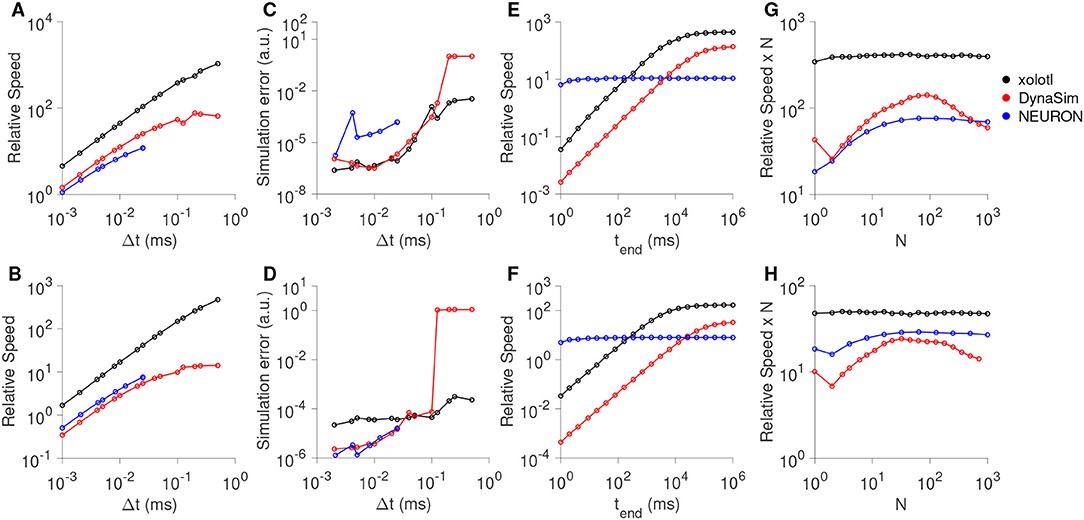

Particle swarm optimization performs well on “pockmarked” objective function landscapes, where there are many local minima corresponding to degenerate solutions (Coventry et al. 2017; Hoyland 2018). Figure 3 shows benchmarks for three optimization algorithms implemented in the simulation software xolotl (Gorur-Shandilya, Hoyland, and Eve Marder 2018). An 8-parameter model of a stomatogastric ganglion cell (Prinz, Billimoria, and Eve Marder 2003) was optimized for desired burst frequency, mean number of spikes per burst, and duty cycle using a pattern search algorithm (Hooke and Jeeves 1961), a genetic algorithm (Mitchell 2001), and particle swarm optimization (Kennedy and Eberhart 1995). A cost of 0 means that the model’s neurocomputational properties lie within the specified ranges and the objective is met. Initial parameter values were chosen randomly. Because genetic algorithms and particle swarm optimization are stochastic, these optimizations were repeated multiple times with the same initial conditions and parameter values. Because particle swarm optimization evaluates many points in parameter space at each step of the optimization, it can find a local minimum corresponding to a degenerate solution very quickly.
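To make the cost convention and the swarm update concrete, here is a minimal sketch of particle swarm optimization with a range-based cost. It is not the xolotl implementation: `toy_features`, the target ranges in `TARGETS`, the two-parameter search space, and the swarm settings (`w`, `c1`, `c2`) are all hypothetical choices made for illustration. The only property taken from the text is that the cost is zero exactly when every measured feature lies inside its specified range.

```python
import random

# Hypothetical target ranges; names and numbers are illustrative only.
TARGETS = {"burst_frequency": (1.0, 1.5), "duty_cycle": (0.2, 0.4)}


def toy_features(p):
    """Hypothetical stand-in for simulating a model and measuring its output."""
    a, b = p
    return {"burst_frequency": 2.0 * a + b, "duty_cycle": b / (1.0 + a)}


def cost(p):
    """Zero when every feature is inside its range, positive otherwise."""
    feats = toy_features(p)
    total = 0.0
    for name, (lo, hi) in TARGETS.items():
        v = feats[name]
        # Distance outside the range, scaled by the range width.
        total += max(0.0, lo - v, v - hi) / (hi - lo)
    return total


def particle_swarm(cost_fn, bounds, n_particles=30, n_steps=100,
                   w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                  # best position seen by each particle
    pbest_cost = [cost_fn(p) for p in pos]
    g = min(range(n_particles), key=pbest_cost.__getitem__)
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # best position seen by the swarm
    history = [gbest_cost]
    for _ in range(n_steps):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Inertia plus pulls toward the personal and global bests.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Clamp to the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            c = cost_fn(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
        history.append(gbest_cost)
        if gbest_cost == 0.0:  # all features are within range; objective met
            break
    return gbest, gbest_cost, history


best, best_cost, history = particle_swarm(cost, bounds=[(0.0, 2.0), (0.0, 2.0)])
print("best parameters:", best, "cost:", best_cost)
```

Because every particle is evaluated at each step, the swarm samples many points in parameter space per iteration. Repeating the run with different `seed` values is one way to mimic the repeated stochastic trials described above.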

Benchmark of parameter optimization algorithms. (a) Cost as a function of real time, for the cost function measuring burst frequency, mean number of spikes per burst, and duty cycle. A cost of zero indicates a model that satisfies all constraints. Colors indicate the algorithm used. Particle swarm and the genetic algorithm are stochastic and were run 100 times each (multiple trajectories plotted). (b) Proportion of models reaching a cost of zero as a function of optimization time. (c) Waveform of the model before optimization (black) and after particle swarm optimization to zero cost (red). (d) Conductance parameters, in base-10 logarithmic units, of the model before optimization (transparent) and after the optimization shown in (c).