- adding or removing (or crashing) gateway only nodes are also resetting recovery process. I believe there is no reason for any gateway node to affect recovery/reweight process.

Open furkanmustafa opened 6 years ago

Switched all cluster from corosync to zookeeper. That definitely made it more robust & responsive. Yet, problems still occur and I cannot get out of recovery loop.

Just a moment ago gateway node ( sheep -g ) died after a failing dog vdi list command.

[root@mizumushi sheepdog]# dog vdi list

failed to read a response

Failed to read object 800f2da500000000

Failed to read inode header

failed to read a response

Failed to read object 8011310f00000000

Failed to read inode headerand it crashed, /tmp/obj/sheep.log;

19 Segmentation fault (core dumped)and systemd-journal logged coredump, rather small;

Feb 05 22:12:33 mizumushi systemd[1]: Started Process Core Dump (PID 22254/UID 0).

-- Subject: Unit systemd-coredump@92-22254-0.service has finished start-up

-- Defined-By: systemd

-- Support: https://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit systemd-coredump@92-22254-0.service has finished starting up.

--

-- The start-up result is done.

Feb 05 22:12:34 mizumushi systemd-coredump[22255]: Process 18420 (sheep) of user 0 dumped core.

Stack trace of thread 1002:

#0 0x00007f7d6d29d1fb n/a (/lib/x86_64-linux-gnu/libpthread-2.19.so)

-- Subject: Process 18420 (sheep) dumped core

-- Defined-By: systemd

-- Support: https://lists.freedesktop.org/mailman/listinfo/systemd-devel

-- Documentation: man:core(5)

--

-- Process 18420 (sheep) crashed and dumped core.

--

-- This usually indicates a programming error in the crashing program and

-- should be reported to its vendor as a bug. Recovery finally succeeded this morning. With no crashes yet. In the process, because of high IO load, I have lost 2 disks. I have inserted 4 new 1 TB disks to some nodes. (disk full nodes and one new node). Moved existing data to the new disks (except the new node). This also helped the recovery.

Now the disks are working, but throughput has lowered down drastically. It does not saturate any of CPU/Disk/Network. Sometimes looks like does not even move, when I'm trying to read the vdi. As a result VMs are extremely slow (but working).

I had no performance problems before the outage. Using -n (nosync), but no object cache or journalling.

Is there a specific reason for losing cluster performance? and is there a way to fix it?

have you tune number of threads and throttling of recovery process?

After realizing that recovery was hard on disks, yes. I have enabled throttling. But I was late for it.

Also another update; cluster performance is back improving now.

I did not touch any thread numbers.

3 days later, sheep processes starting crashing (segfaults) randomly here and there and cluster is down again.

I've seen a new behavior this time, first time since I've started using sheepdog; I say, any dog command, for example;

dog node listIt just hangs there. (on all nodes of the cluster. None of them answers this question.)

Only one node showing a different, but not less strange behavior.

It says; "There are no active sheep daemons"

root@server:~# ps axf

PID TTY STAT TIME COMMAND

2099 ? Ss 0:00 bash

2122 ? R+ 0:00 \_ ps axf

1 ? Ss 0:00 /bin/bash /root/run.sh sheep

22 ? Sl 10:39 /usr/sbin/sheep -c zookeeper:10.0.42.202:2181,10.0.42.200:2181,10.0.42.201:2181 -R max=100 interval=1000 /store/server -p 7000 -P /var/run/sheep.pid -f

28 ? Ss 0:00 \_ /usr/sbin/sheep -c zookeeper:10.0.42.202:2181,10.0.42.200:2181,10.0.42.201:2181 -R max=100 interval=1000 /store/server -p 7000 -P /var/run/sheep.pid -f

25 ? S 0:00 tail -F /var/log/sheep*.log /var/log/corosync/corosync.log /store/server/sheep.log

2121 ? S 0:00 sleep 1

root@server:~# dog node list

There are no active sheep daemons

root@server:~# tail /store/server/sheep.log

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: f2da5 (old parent VID: f2da4, new parent VID: f2da4)

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: da4e6a (old parent VID: 9f82b2, new parent VID: 9f82b2)

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: c42b26 (old parent VID: c42b25, new parent VID: c42b25)

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: 17875d (old parent VID: bc085e, new parent VID: bc085e)

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: bc085f (old parent VID: bc085e, new parent VID: bc085e)

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: 9f82b3 (old parent VID: 9f82b2, new parent VID: 9f82b2)

Feb 10 09:27:16 WARN [main] do_add_vdi_state(379) caution, updating parent VID of VID: d4693a (old parent VID: bc085e, new parent VID: bc085e)

Feb 10 09:27:16 ERROR [main] check_host_env(571) failed to set nofile to suggested 6144000, Operation not permitted

Feb 10 09:27:16 ERROR [main] check_host_env(572) please increase nofile via sysctl fs.nr_open

Feb 10 09:27:16 INFO [main] main(1108) sheepdog daemon (version 1.0.1) started

root@server:~# Ok. This time looks like zookeeper went crazy. nodes were blocked on their init while trying to join the cluster, thus not answering cli calls.

All the troubles in the world concentrated here.

So, I have replaced zookeeper with etcd + zetcd. That (for now) gave me a robust/working/lightweight cluster and my nodes are happy for now. (recovering...)

A question at this point; Is this huge recovery process caused by automatic vnode assignment? If the vnode-assignment was done manually from the beginning, would this recovery process be only minimal, for only lost objects, etc.?

Status is the morning; I have managed to get dog client lockups again. I am suspecting this is probably due to some cluster locks being stucked in a locked state? And started thinking that was my probably problem with original zookeeper too?

After that, in etcd logs I have spotted time difference between nodes, does this have a big impact? (I have fixed it now using ntpdate)

Ok. on one of the nodes, I have this =>

Feb 11 01:41:49 ERROR [main] listen_handler(1036) failed to accept a new connection: Too many open filesI'll try to fix that and see what happens.

Yes. Looks like that problem in single node in cluster blocked the whole cluster. When I restarted that node, everything started to move again. Lost object counts increased a bit for some volumes.

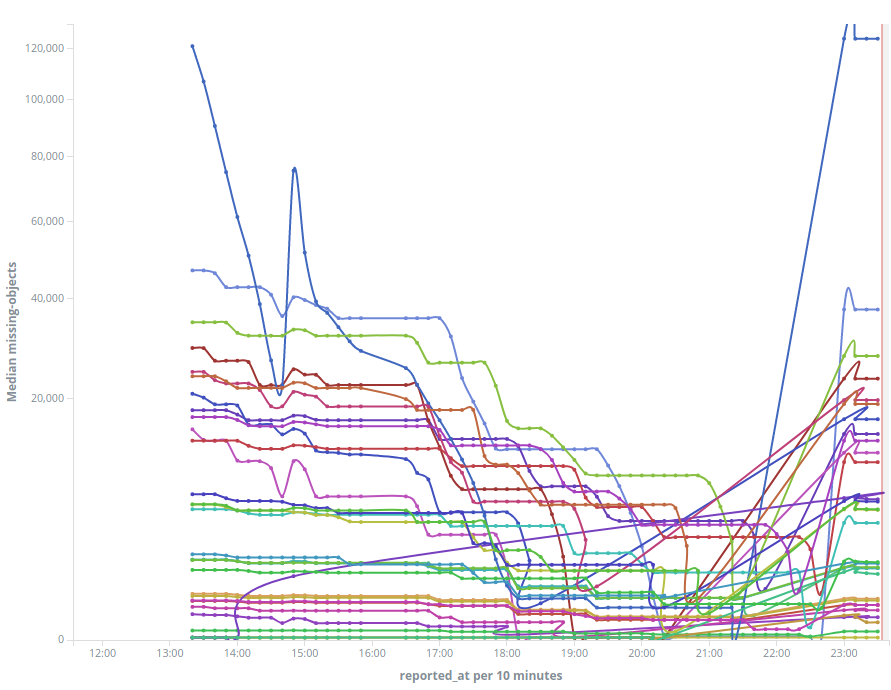

(Graph of recovery; lost object count per volume)

Another constant problem I am experiencing is;

After this last restart, recovery is almost not proceeding at all..

getting this error in some of the nodes;

Feb 11 16:15:27 ERROR [main] check_request_epoch(157) old node version 1166, 1164 (READ_PEER)after checking all, seems like some are lacking behind, like below;

Feb 11 16:17:21 ERROR [main] check_request_epoch(165) new node version 1124, 1164 (READ_PEER)Restarting the ones lacking behind...

Ok. Question; If some nodes realize that they're old (have old cluster epoch, whatever), why wouldn't they kill themselves, or just upgrade their knowledge about the cluster somehow? Or why would they get behind in the first place?

sorry, i don't think that sheepdog stable enough to handle unexpected failures and recovery. Sometimes ago i'm lost all of my data after power down two nodes with copies=2. Because recovery delete all of my data moving it into stale and removed after recovery finished.

Because recovery delete all of my data moving it into stale and removed after recovery finished.

Was it because of this? => https://github.com/sheepdog/sheepdog/pull/371

I think that this is not only one issue that can damage data. In my case i can't start sheep because it says that somethink wrong with epoch.

the amount of space that .stale folder eats is ENDLESS. 4TB in one node. 2.6TB in another node. I did not even have that much data in my cluster.

I understand the file names are in .stale folder mostly have .{epoch} at their name. like; 002e36e8000003a3_1.56

For example in this file, epoch number is 56. But my last working, completely recovered cluster epoch is higher than that. Would that be OKAY to delete that file in this case?

A bit more digging into .stale, I have found this;

[root@my-server .stale]# find . -name "00905fdd00000ec1_1*" | tail | xargs -n 1 md5sum

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.56

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.180

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.528

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.681

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.790

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.816

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.950

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.1124

b27345e0fa8ac51fac3032eeddb82e5d ./00905fdd00000ec1_1.1168The very same object with same contents, but copies for multiple epochs in .stale folder.

Reading the source code to be sure that I can delete older copies. Please confirm if you know.

I am using v1.0.1, compiled from github tag, on ubuntu14.04, with corosync. 7~10 TB total md size.

I had 7 nodes on my experimental setup. One of the nodes suddenly failed, and automatic-recovery (reweight) kicked in, which caused lots of IO on other nodes. And that caused some other nodes to crash. My nodes are set to restart after crash. But now my cluster is in recovery loop that never succeeds.

Also it continuously increases disk usage until disk is full on some nodes (in .stall folder). If the disk is full, the node cannot start again, and other nodes cannot see the objects those nodes have, and cannot properly recover.

Lot's of points could be suggested to improve at this point, like;

dog cluster recovery disabledoes not work until the cluster is healthy. An option to disable recovery on init would be helpful. Otherwise everytime a node dies/comes-back, the whole operation is restarting, which takes hours and hours to recover each node.I'm currently still trying to recover the cluster (for the last 30~40 hours).

Any suggestions would be really helpful.