Hi Shoubhik,

We have a similar requirement: for the buildpacks build strategy, we would like to use the Red Hat UBI base image to build the output image. I am not sure whether this requirement can be addressed in this issue.

I think that, as with the builder image, we should not ask the end user to set the builder image and the base runtime image explicitly. But we should be able to choose which base image is used to build the end user's output image.

Please let me know if I have misunderstood something.

Thanks!

Combining https://github.com/redhat-developer/build/issues/40 and https://github.com/redhat-developer/build/issues/39

Goal

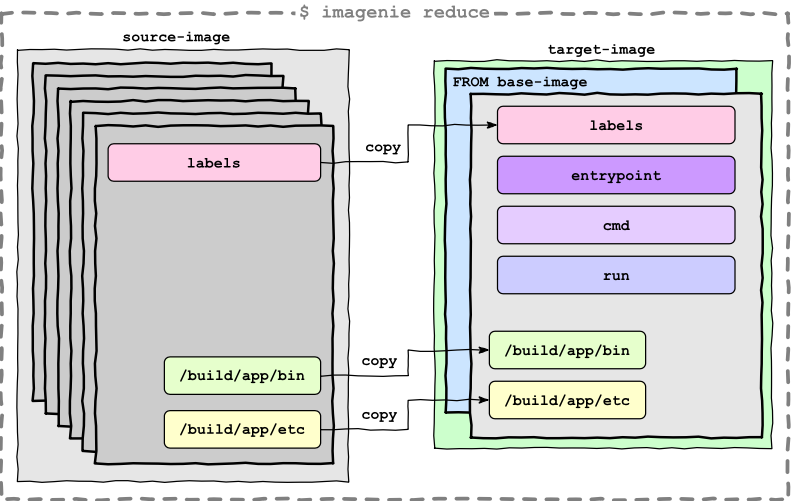

As a developer, I want to separate build tools used to build the application from runtimes, so that I can create lean application images.

A Build would require three image designations in this case:

Problem

S2I images on OpenShift contain both build tools and runtime dependencies, which produces bulky images. The build tools are needed only while building the application and its image, not when the image is deployed, yet they add unnecessary overhead to the image size.

Why is this important?

To enable developers to build lean runtime images for their applications that contain only the application and the minimal dependencies it needs at runtime.

Solution requirements

The solution needs to be generic so that it is usable by any strategy if the user provides the information on