Data and Model Parallel

https://openai.com/research/techniques-for-training-large-neural-networks


Start from here

torch-model-parallel-tutorial: Pipeline Parallel speeds up training by roughly 50%, because it solves the GPU idling problem of Naive Model Parallel (Vertical).

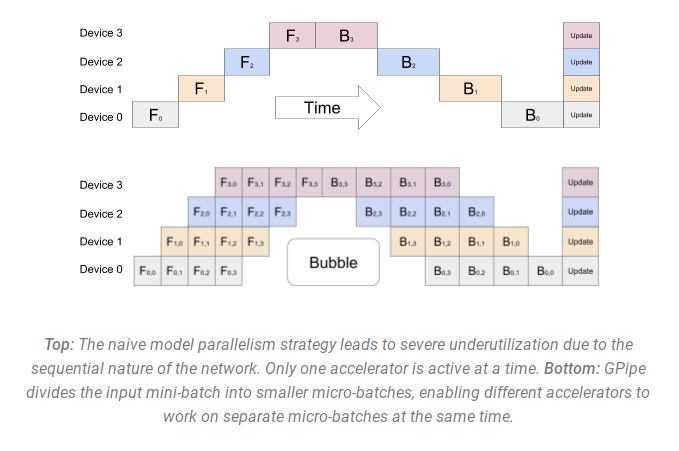

First, split the model into 2 parts on 2 GPUs. Each GPU does its own part of the work, and one GPU has to wait for the other to finish its batch, so there is a lot of idle GPU time. This is called Naive Model Parallel (Vertical).

Then, we split the whole batch into smaller batch-splits. As soon as GPU0 finishes one split, GPU1 starts working on it while GPU0 moves on to the next one. Splitting a big batch into small ones reduces the total idle GPU time and raises the training speed.

We call this upgrade Pipeline Parallel.
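The idle-time argument can be checked with a small timing model. This is an idealized simulation written for these notes, not code from the tutorial: it assumes every stage needs the same time for a full batch, that cost scales linearly with split size, and that copies between GPUs are free. `pipeline_makespan` and `bubble_fraction` are hypothetical helper names.

```python
def pipeline_makespan(num_stages: int, num_splits: int, batch_time: float = 1.0) -> float:
    """Forward-pass time for one batch cut into `num_splits` equal splits.

    Idealized model (assumption): each stage needs `batch_time` for the whole
    batch, one split costs batch_time / num_splits, inter-GPU copies are free.
    num_splits=1 is Naive Model Parallel; num_splits>1 is Pipeline Parallel.
    """
    step = batch_time / num_splits
    # finish[s][m] = time at which stage (GPU) s finishes split m
    finish = [[0.0] * num_splits for _ in range(num_stages)]
    for s in range(num_stages):
        for m in range(num_splits):
            input_ready = finish[s - 1][m] if s > 0 else 0.0  # previous GPU produced split m
            gpu_free = finish[s][m - 1] if m > 0 else 0.0     # this GPU finished split m-1
            finish[s][m] = max(input_ready, gpu_free) + step
    return finish[-1][-1]


def bubble_fraction(num_stages: int, num_splits: int) -> float:
    """Fraction of total GPU time spent idle during one forward pass."""
    makespan = pipeline_makespan(num_stages, num_splits)
    busy = num_stages * 1.0  # each stage does batch_time=1.0 of real work
    return 1.0 - busy / (num_stages * makespan)


if __name__ == "__main__":
    for splits in (1, 4, 16):
        print(splits, pipeline_makespan(2, splits), round(bubble_fraction(2, splits), 3))
```

In this model the idle share is (S - 1) / (M + S - 1) for S stages and M splits, so more splits shrink the bubble. In practice very small splits mean many tiny kernel launches and copies, so the split size is a trade-off to tune, and measured speed-ups are lower than this model suggests.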

Model Parallel Review

huggingface-model-parallelism: in modern machine learning, the various approaches to parallelism are used to:

- fit very large models onto limited hardware - e.g. t5-11b is 45GB in just model params

- significantly speed up training - finish training that would take a year in hours

Concepts:

1. DataParallel (DP) - the same setup is replicated multiple times, and each replica is fed a slice of the data. The processing is done in parallel and all setups are synchronized at the end of each training step.

2. TensorParallel (TP) - each tensor is split up into multiple chunks, so instead of having the whole tensor reside on a single GPU, each shard of the tensor resides on its designated GPU. During processing each shard gets processed separately and in parallel on different GPUs, and the results are synced at the end of the step. This is what one may call horizontal parallelism, as the splitting happens on the horizontal level.

3. PipelineParallel (PP) - the model is split up vertically (layer-level) across multiple GPUs, so that only one or several layers of the model are placed on a single GPU. Each GPU processes a different stage of the pipeline in parallel, working on a small chunk of the batch.

4. Zero Redundancy Optimizer (ZeRO) - also performs sharding of the tensors somewhat similar to TP, except the whole tensor gets reconstructed in time for a forward or backward computation, therefore the model doesn't need to be modified. It also supports various offloading techniques to compensate for limited GPU memory.

5. Sharded DDP - another name for the foundational ZeRO concept as used by various other implementations of ZeRO.

DataParallel (DP)

Built-in feature of PyTorch (rank, torchrun): torch-parallel-training, torch-ddp-youtube

DP vs DDP: https://huggingface.co/docs/transformers/v4.28.1/en/perf_train_gpu_many#dp-vs-ddp

- First, DataParallel is single-process, multi-thread, and only works on a single machine, while DistributedDataParallel is multi-process and works for both single- and multi- machine training. DataParallel is usually slower than DistributedDataParallel even on a single machine due to GIL contention across threads, per-iteration replicated model, and additional overhead introduced by scattering inputs and gathering outputs.

- Recall from the prior tutorial that if your model is too large to fit on a single GPU, you must use model parallel to split it across multiple GPUs. DistributedDataParallel works with model parallel; DataParallel does not at this time. When DDP is combined with model parallel, each DDP process would use model parallel, and all processes collectively would use data parallel.

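Sync required: all replicas synchronize (all-reduce their gradients) at the end of each training step, so every model copy stays identical.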
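To see why this sync is needed and why it is enough, here is a toy simulation in plain Python (no PyTorch): a one-weight linear model trained with mean-squared error, the global batch split evenly across `world_size` simulated replicas, and a hypothetical `all_reduce_mean` standing in for the collective DDP runs on gradients. With equal shard sizes, the averaged local gradients equal the full-batch gradient, so every replica applies the same update.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a 1-D linear model y ~ w * x


def mse_grad(w: float, batch: List[Sample]) -> float:
    """Gradient of mean((w*x - y)^2) with respect to w over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(local_grads: List[float]) -> List[float]:
    """Stand-in for an all-reduce: every rank receives the average gradient."""
    avg = sum(local_grads) / len(local_grads)
    return [avg for _ in local_grads]


def data_parallel_step(w: float, global_batch: List[Sample],
                       world_size: int, lr: float) -> List[float]:
    """One DP step; returns the weight held by each replica afterwards."""
    assert len(global_batch) % world_size == 0, "toy version needs equal shards"
    shard = len(global_batch) // world_size
    shards = [global_batch[r * shard:(r + 1) * shard] for r in range(world_size)]
    local_grads = [mse_grad(w, s) for s in shards]  # runs in parallel on real GPUs
    synced = all_reduce_mean(local_grads)           # the sync at the end of the step
    return [w - lr * g for g in synced]             # identical update on every replica


if __name__ == "__main__":
    batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
    print(data_parallel_step(0.0, batch, world_size=2, lr=0.01))
```

Skipping `all_reduce_mean` would leave each replica stepping with its own shard's gradient, and the copies would drift apart after the first step.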

Naive Model Parallel (Vertical) and Pipeline Parallel

As in the tutorial above, when a model is too big to fit on one GPU, it's easy to slice it vertically into several parts. Problems:

- Deficiency: at any moment only one GPU is busy computing while the others sit idle.

- Shared embeddings may need to be copied back and forth between GPUs.

Pipeline Parallel (PP) is almost identical to a naive MP, but it solves the GPU idling problem, by chunking the incoming batch into micro-batches and artificially creating a pipeline, which allows different GPUs to concurrently participate in the computation process.

PP introduces a new hyper-parameter to tune, chunks, which defines how many chunks of data are sent in a sequence through the same pipe stage. (PyTorch uses chunks, whereas DeepSpeed refers to the same hyper-parameter as GAS.)

Because of the chunks, PP introduces the concept of micro-batches (MBS). DP splits the global data batch size into mini-batches, so if you have a DP degree of 4, a global batch size of 1024 gets split up into 4 mini-batches of 256 each (1024/4). And if the number of chunks (or GAS) is 32 we end up with a micro-batch size of 8 (256/32). Each Pipeline stage works with a single micro-batch at a time.
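The batch-size arithmetic above as a tiny helper. `pp_batch_sizes` is a hypothetical name, and the sketch assumes everything divides evenly (it raises otherwise rather than padding).

```python
from typing import Tuple


def pp_batch_sizes(global_batch: int, dp_degree: int, chunks: int) -> Tuple[int, int]:
    """Return (mini_batch, micro_batch) for a DP degree and a chunks (GAS) value."""
    if global_batch % dp_degree:
        raise ValueError("global batch must split evenly across DP replicas")
    mini_batch = global_batch // dp_degree   # what each DP replica (one pipeline) receives
    if mini_batch % chunks:
        raise ValueError("mini-batch must split evenly into chunks")
    micro_batch = mini_batch // chunks       # what one pipe stage processes at a time
    return mini_batch, micro_batch


print(pp_batch_sizes(1024, dp_degree=4, chunks=32))  # (256, 8)
```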

Problems with traditional Pipeline API solutions:

- have to modify the model quite heavily, because Pipeline requires one to rewrite the normal flow of modules

- have to arrange each layer so that the output of one model becomes an input to the other model.

- conditional control flow at the level of pipe stages is not possible - e.g., Encoder-Decoder models like T5 require special workarounds to handle a conditional encoder stage.

Supported in PyTorch, FairScale, DeepSpeed, etc.

DeepSpeed, Varuna and SageMaker use the concept of an Interleaved Pipeline.

Tensor Parallel (TP)

In Tensor Parallelism each GPU processes only a slice of a tensor and only aggregates the full tensor for operations that require the whole thing.

The main building block of any transformer is a fully connected layer (nn.Linear) followed by a nonlinear activation such as GeLU. If we look at the computation in matrix form, it's easy to see how the matrix multiplication can be split column-wise between multiple GPUs.

Using this principle, we can update an MLP of arbitrary depth, without the need for any synchronization between GPUs until the very end, where we need to reconstruct the output vector from shards.
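A plain-Python sketch of that split for a two-layer block Z = act(X·A)·B, in the column-then-row style popularized by Megatron-LM. Assumptions: lists stand in for GPU tensors, ReLU stands in for GeLU to keep the arithmetic simple, and matrix widths divide evenly by the GPU count. A is split column-wise and B row-wise, so each simulated GPU computes act(X·A_i)·B_i without any communication; one sum at the end (an all-reduce on real hardware) rebuilds the output. All helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def relu(m: Matrix) -> Matrix:
    # Element-wise, so it can be applied to each column shard independently.
    return [[max(0.0, v) for v in row] for row in m]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split_columns(m: Matrix, n: int) -> List[Matrix]:
    assert len(m[0]) % n == 0, "toy version: width must divide evenly"
    w = len(m[0]) // n
    return [[row[p * w:(p + 1) * w] for row in m] for p in range(n)]


def split_rows(m: Matrix, n: int) -> List[Matrix]:
    assert len(m) % n == 0, "toy version: height must divide evenly"
    h = len(m) // n
    return [m[p * h:(p + 1) * h] for p in range(n)]


def mlp_single_gpu(x: Matrix, a: Matrix, b: Matrix) -> Matrix:
    return matmul(relu(matmul(x, a)), b)


def mlp_tensor_parallel(x: Matrix, a: Matrix, b: Matrix, n_gpus: int) -> Matrix:
    a_shards = split_columns(a, n_gpus)  # GPU p holds a block of A's columns
    b_shards = split_rows(b, n_gpus)     # GPU p holds the matching block of B's rows
    # Each "GPU" works only on its own shards; no data is exchanged here.
    partials = [matmul(relu(matmul(x, a_p)), b_p) for a_p, b_p in zip(a_shards, b_shards)]
    out = partials[0]
    for p in partials[1:]:               # the single sync point: sum partial outputs
        out = add(out, p)
    return out
```

The column split of A is what lets the activation run locally: each GPU owns complete columns of X·A, so no shard needs values held by another GPU until the final sum.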

Parallelizing the multi-headed attention layers is even simpler, since they are already inherently parallel, due to having multiple independent heads!

Special considerations:

- TP requires a very fast network.

- therefore it’s not advisable to do TP across more than one node. Practically, if a node has 4 GPUs, the highest TP degree is therefore 4.

DeepSpeed calls it tensor slicing

Zero Redundancy Optimizer (ZeRO) Data Parallel

ZeRO-powered data parallelism (ZeRO-DP) is illustrated in a diagram from Microsoft's ZeRO blog post.

Put simply, ZeRO is just the usual DataParallel (DP), except that instead of replicating the full model params, gradients and optimizer states, each GPU stores only a slice of them.

Then, at run time, when the full params of a given layer are needed, all GPUs synchronize to give each other the parts they are missing. That is all there is to it.
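A toy sketch of that run-time step for fully sharded params (stage 3). Plain lists stand in for GPU tensors, and every name here is hypothetical: each rank keeps one contiguous slice of every layer's flattened weights, and `all_gather` rebuilds a layer's full weights just before it runs, after which the gathered copy is dropped.

```python
from typing import List

Shard = List[float]


def shard_layer(weights: List[float], world_size: int) -> List[Shard]:
    """Split one layer's flat weight vector into equal slices, one per rank."""
    assert len(weights) % world_size == 0, "toy version: sizes must divide evenly"
    n = len(weights) // world_size
    return [weights[r * n:(r + 1) * n] for r in range(world_size)]


def all_gather(shards: List[Shard]) -> List[float]:
    """Stand-in for the collective: concatenate every rank's slice."""
    return [w for shard in shards for w in shard]


def layer_forward(x: List[float], weights: List[float]) -> List[float]:
    """Hypothetical element-wise layer y = x * w, so layers can be chained."""
    return [a * b for a, b in zip(x, weights)]


def zero3_forward(x: List[float], sharded_layers: List[List[Shard]]) -> List[float]:
    """Run layers in order, materializing each layer's full weights only while it runs."""
    for shards in sharded_layers:
        full = all_gather(shards)  # fetch the missing slices for this layer only
        x = layer_forward(x, full)
        del full                   # drop the gathered copy; only the shards persist
    return x


if __name__ == "__main__":
    layers = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 2.0, -1.0]]
    sharded = [shard_layer(w, world_size=2) for w in layers]
    print(zero3_forward([1.0, 1.0, 1.0, 1.0], sharded))
```

At any moment only one layer is held in full, which is where the memory saving comes from; the price is one all-gather per layer per pass.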

More details on Zhihu.

DeepSpeed and FairScale (FSDP) support ZeRO-DP stages 1, 2 and 3.
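What each stage saves can be estimated with the per-parameter accounting used in the ZeRO paper, assuming mixed-precision training with Adam: 2 bytes of fp16 params, 2 bytes of fp16 gradients and 12 bytes of fp32 optimizer state (params copy, momentum, variance) per parameter, with activations and buffers ignored. Stage 1 shards optimizer states, stage 2 also shards gradients, stage 3 also shards params. `zero_model_state_gb` is a hypothetical helper.

```python
def zero_model_state_gb(num_params: float, dp_degree: int, stage: int) -> float:
    """Per-GPU model-state memory in GB (1e9 bytes) under ZeRO-DP.

    Assumes mixed-precision Adam: 2 B fp16 params + 2 B fp16 grads
    + 12 B fp32 optimizer states per parameter; activations are ignored.
    stage 0 = plain DP (everything replicated on every GPU).
    """
    params, grads, optim = 2.0, 2.0, 12.0  # bytes per parameter
    if stage >= 1:
        optim /= dp_degree   # stage 1: shard optimizer states
    if stage >= 2:
        grads /= dp_degree   # stage 2: also shard gradients
    if stage >= 3:
        params /= dp_degree  # stage 3: also shard parameters
    return num_params * (params + grads + optim) / 1e9


if __name__ == "__main__":
    for stage in range(4):
        print(stage, round(zero_model_state_gb(7.5e9, dp_degree=64, stage=stage), 2))
```

For a 7.5B-parameter model on 64 GPUs this gives 120, about 31.4, about 16.6 and about 1.9 GB per GPU for stages 0 to 3, which matches the example figure in the ZeRO paper.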

ZeRO-Offload

Offload is a strategy that complements GPU VRAM with CPU RAM, because CPU RAM is cheaper.

We want minimal GPU VRAM usage with an efficient communication strategy. In offload, the forward and backward passes (FWD/BWD) run in GPU VRAM because they carry most of the computation. Parameter updates, float2half conversion and optimizer states are handled in CPU RAM because they need little computation but a lot of memory. Others, like activations, buffers and fragments, can be reduced using checkpointing.
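A back-of-the-envelope sketch of where the model states end up, assuming the ZeRO-Offload split as we understand it from the paper: fp16 params stay on the GPU, while fp16 gradients and the fp32 optimizer states (params copy, momentum, variance) live on the CPU, where the Adam update runs. Byte counts follow the same mixed-precision Adam accounting as ZeRO and ignore activations. `offload_split_gb` is a hypothetical helper.

```python
from typing import Dict


def offload_split_gb(num_params: float) -> Dict[str, float]:
    """Rough GPU/CPU model-state memory (GB, 1e9 bytes) for single-GPU ZeRO-Offload.

    Assumption: GPU keeps fp16 params (2 B/param); CPU keeps fp16 grads (2 B)
    plus fp32 params, momentum and variance (4 + 4 + 4 B). Activations ignored.
    """
    gpu_bytes = 2.0
    cpu_bytes = 2.0 + 4.0 + 4.0 + 4.0
    return {"gpu": num_params * gpu_bytes / 1e9, "cpu": num_params * cpu_bytes / 1e9}


if __name__ == "__main__":
    print(offload_split_gb(10e9))  # 10B-parameter model
```

Under these assumptions the CPU side is seven times the GPU side, which is one way to see why CPU RAM can explode.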

Problem:

- CPU RAM explosion.

- When the batch size is small, the GPU finishes its work faster than the CPU, which makes the CPU a bottleneck.

PyTorch Fully Sharded Data Parallel (FSDP)

- meta-blog, torch-blog, fsdp-tutorial-youtube

- In standard DDP training, every worker processes a separate batch and the gradients are summed across workers using an all-reduce operation. FSDP unlocks full parameter sharding by decomposing the all-reduce operations in DDP into separate reduce-scatter and all-gather operations.

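- To maximize memory efficiency, we can discard the full weights after each layer's forward pass, saving memory for subsequent layers. Each layer's full weights are all-gathered just before its forward or backward pass and discarded right after; in the backward pass the layer's gradients are then reduce-scattered so that each worker keeps only its own shard.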

- communication overhead: The maximum per-GPU throughput of 159 teraFLOP/s (51% of NVIDIA A100 peak theoretical performance 312 teraFLOP/s/GPU) is achieved with batch size 20 and sequence length 512 on 128 GPUs for the GPT 175B model; further increase of the number of GPUs leads to per-GPU throughput degradation because of growing communication between the nodes.

- An implementation of this method, ZeRO-3, has already been popularized by Microsoft. deepspeed-tutorial
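The decomposition FSDP relies on can be checked on toy data: a reduce-scatter followed by an all-gather leaves every rank with the same result as a single all-reduce. Between the two steps each rank holds only its own slice of the summed gradients, which is what lets it keep just a shard of params, gradients and optimizer state. Plain lists stand in for GPU tensors; the function names mirror the collective names but are simulations, not the torch.distributed API.

```python
from typing import List

Vector = List[float]


def all_reduce(per_rank: List[Vector]) -> List[Vector]:
    """DDP-style: every rank ends with the full element-wise sum."""
    total = [sum(vals) for vals in zip(*per_rank)]
    return [list(total) for _ in per_rank]


def reduce_scatter(per_rank: List[Vector]) -> List[Vector]:
    """Rank r ends with only the r-th slice of the element-wise sum (simulated)."""
    world = len(per_rank)
    assert len(per_rank[0]) % world == 0, "toy version: length must divide evenly"
    n = len(per_rank[0]) // world
    total = [sum(vals) for vals in zip(*per_rank)]
    return [total[r * n:(r + 1) * n] for r in range(world)]


def all_gather(shards: List[Vector]) -> List[Vector]:
    """Every rank receives the concatenation of all ranks' slices."""
    full = [v for shard in shards for v in shard]
    return [list(full) for _ in shards]


if __name__ == "__main__":
    grads = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]]  # two ranks
    print(reduce_scatter(grads))
    print(all_gather(reduce_scatter(grads)) == all_reduce(grads))
```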

use a random Gaussian initialization for A and zero for B.

