@llCurious I've added responses in order for your questions below:

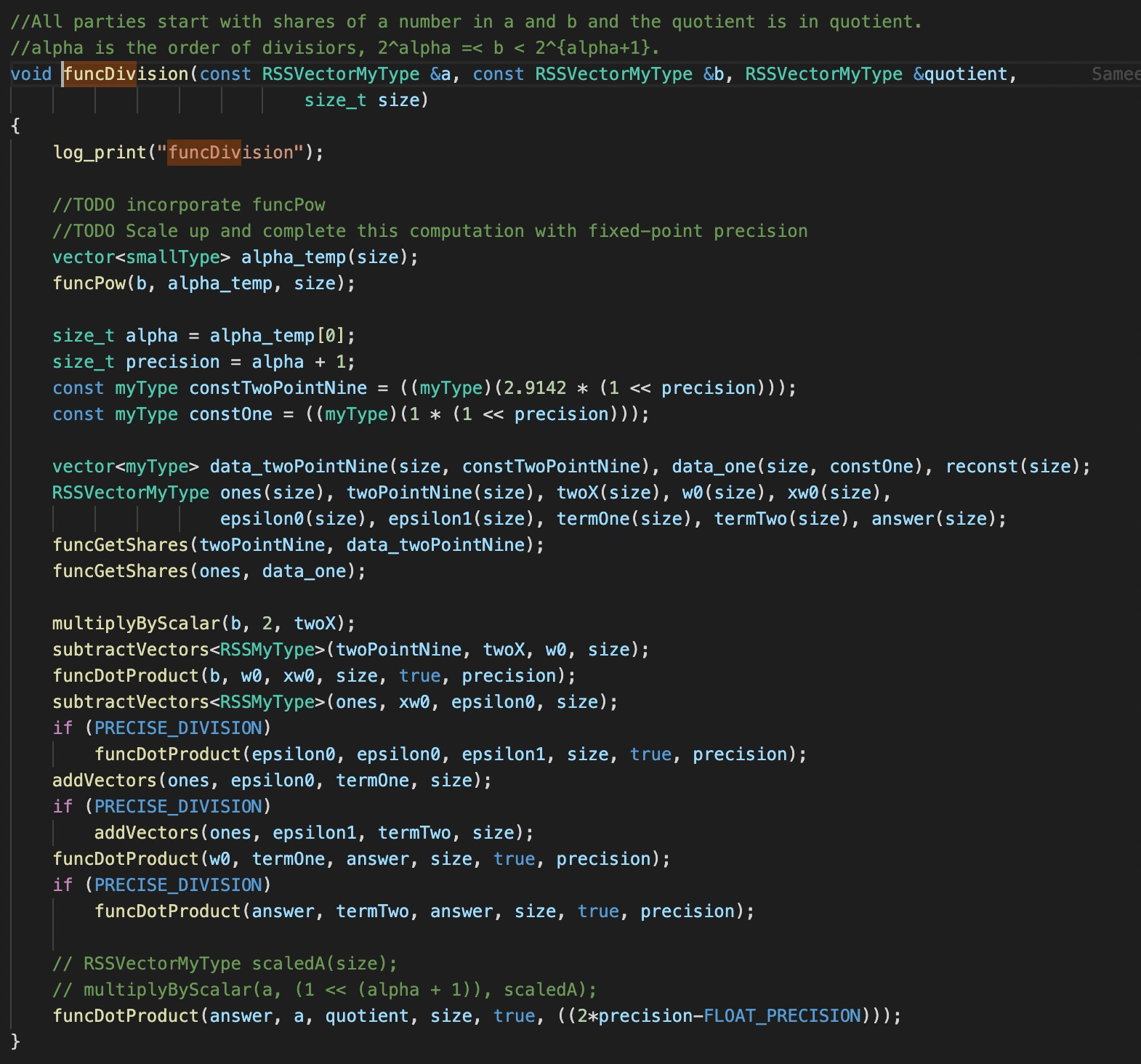

- Right, the `Pow` function needs to be vectorized. Take a look at this git issue for more details. The division protocol also needs to be modified accordingly. The current function reports the correct run-time, but effectively computes only the first component correctly.
- `Pow` does indeed reveal information about the exponent $\alpha$, and this is by design (see Fig. 8 here). It considerably simplifies the computation, and the leakage is well quantified. However, the broader implications of revealing this value (such as whether an adversary can launch an attack using that information) are not studied in the paper.
- A `BIT_SIZE` of 32 is sufficient for inference, and the code to reproduce this is given in `files/preload/`. End-to-end training in MPC was not performed (given the prohibitive time and parameter tuning), though I suspect you're right: it would require either a larger bit-width or adaptive setting of the fixed-point precision.
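To illustrate the first two points, here is a minimal plaintext sketch (no secret sharing) of a vectorized `Pow` followed by division. It assumes `Pow` returns $\alpha$ with $2^\alpha \le b < 2^{\alpha+1}$ for each element, and it uses the common initial approximation $w_0 = 2.9142 - 2b$ with Newton-Raphson refinement for the reciprocal. The function names (`pow_alpha`, `pow_alpha_vec`, `reciprocal`, `divide_vec`) are hypothetical and do not correspond to functions in the repo. Revealing $\alpha$ presumably helps because scaling by a public power of two is cheap, whereas hiding it would require computing that scaling obliviously.

```python
import math
from typing import List


def pow_alpha(b: float) -> int:
    """Return alpha with 2**alpha <= b < 2**(alpha + 1), for b > 0 (plaintext)."""
    if b <= 0:
        raise ValueError("divisor must be positive")
    _, e = math.frexp(b)  # b = m * 2**e with 0.5 <= m < 1, so alpha = e - 1
    return e - 1


def pow_alpha_vec(bs: List[float]) -> List[int]:
    # One exponent per element, instead of only the first component.
    return [pow_alpha(b) for b in bs]


def reciprocal(b: float, alpha: int, iterations: int = 3) -> float:
    # Scale b into [0.5, 1) with the (public) exponent.
    scale = 2.0 ** -(alpha + 1)
    b_norm = b * scale
    w = 2.9142 - 2.0 * b_norm  # initial approximation of 1 / b_norm
    for _ in range(iterations):
        w = w * (2.0 - b_norm * w)  # Newton-Raphson step for 1 / b_norm
    return w * scale  # 1 / b = (1 / b_norm) * 2**-(alpha + 1)


def divide_vec(numerators: List[float], bs: List[float]) -> List[float]:
    alphas = pow_alpha_vec(bs)  # each divisor needs its own alpha
    return [a * reciprocal(b, al) for a, b, al in zip(numerators, bs, alphas)]


print(pow_alpha_vec([4.0, 7.0, 0.3]))
print(divide_vec([1.0, 3.0, 10.0], [4.0, 7.0, 0.3]))
```

If all elements reused the first component's $\alpha$, the normalized divisors would fall outside $[0.5, 1)$ and the initial approximation would no longer converge reliably, which matches the "only the first component is correct" behavior described above.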

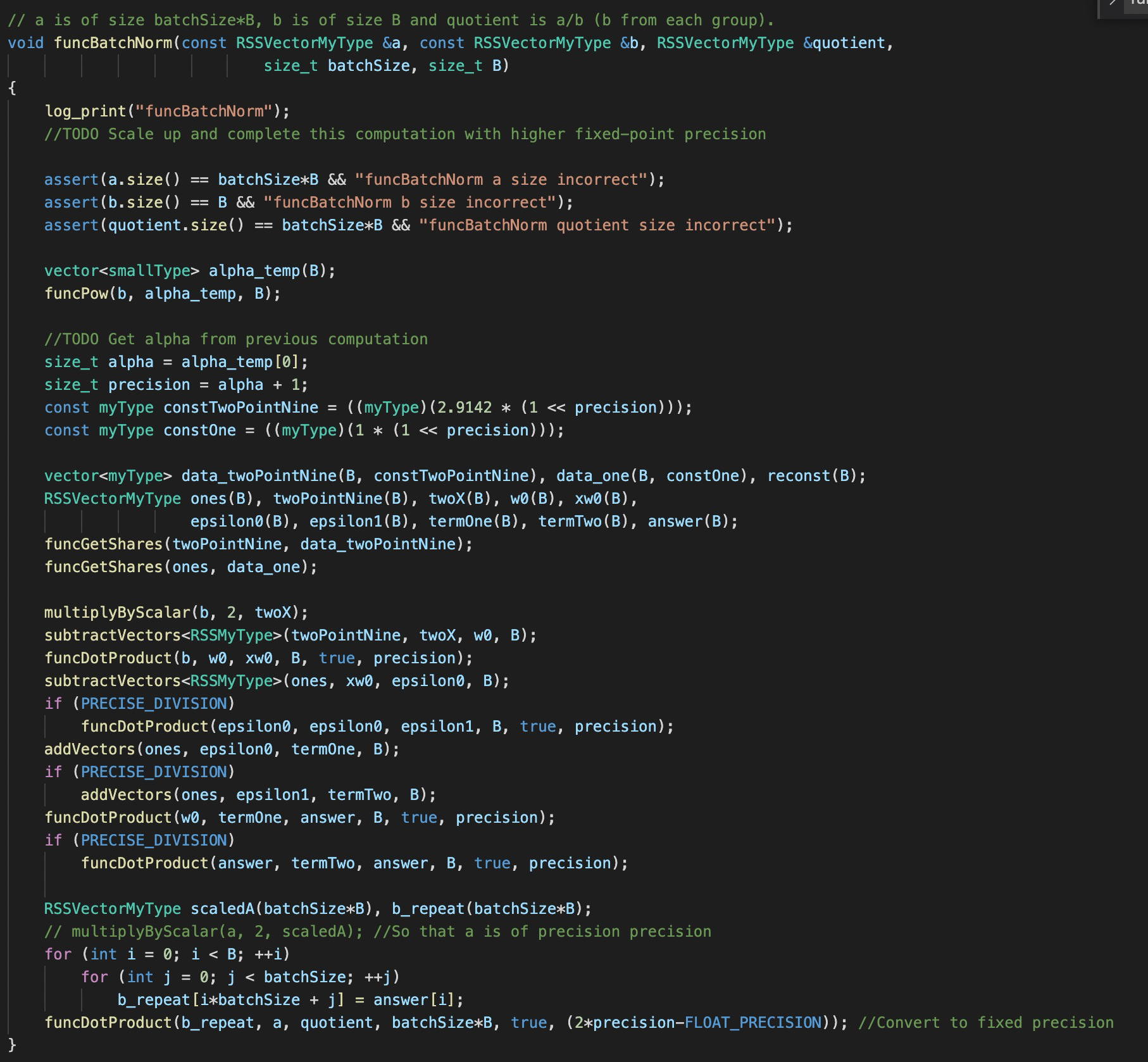

Hey, snwagh. I have been reading your Falcon paper and found this repo. I am interested in how you perform the computation of Batch Normalization.

I have the following two questions:

- The implementation of BN seems to be just a single division.
- The protocol of `Pow` seems to reveal information about the exponent, i.e., $\alpha$.
- The `BIT_SIZE` in your paper is 32, which seems too small. How do you guarantee the accuracy, or rather the precision? Is BN actually essential to your ML training and inference?
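The precision concern can be made concrete with a small plaintext sketch of fixed-point arithmetic in $\mathbb{Z}_{2^{32}}$. The number of fractional bits (`FRAC_BITS = 13`) is an assumption chosen for illustration; check the repo's own constants. With $f$ fractional bits, representable magnitudes are below $2^{31-f}$ and the resolution is $2^{-f}$, so small BN variances lose relative precision while large intermediate products wrap around. Truncation here is an exact plaintext shift; a secret-shared version would add truncation error on top.

```python
BIT_SIZE = 32
FRAC_BITS = 13  # assumed number of fractional bits (illustrative only)
MOD = 1 << BIT_SIZE


def to_signed(v: int) -> int:
    # Interpret a ring element as a two's-complement signed integer.
    return v - MOD if v >= MOD // 2 else v


def encode(x: float) -> int:
    # Scale by 2**FRAC_BITS, round, and reduce into the ring Z_{2^32}.
    return round(x * (1 << FRAC_BITS)) % MOD


def decode(v: int) -> float:
    return to_signed(v) / (1 << FRAC_BITS)


def mul(u: int, v: int) -> int:
    # Multiply, then drop FRAC_BITS with an arithmetic shift (plaintext truncation).
    return ((to_signed(u) * to_signed(v)) >> FRAC_BITS) % MOD


print(decode(encode(1e-5)))                    # below the resolution 2**-13
print(decode(encode(1e-3)))                    # small variance, coarse value
print(decode(encode(300000.0)))                # above 2**18, wraps around
print(decode(mul(encode(300.0), encode(1000.0))))  # product overflows the ring
```

For BN, a variance near $10^{-3}$ is stored as $8/8192 \approx 0.000977$, a relative error of roughly 2%, which then propagates through the division by $\sqrt{\sigma^2 + \epsilon}$.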