Seems that loss does not really converge with int4 fine tuning - 12% into data but loss remains 10.40?

Closed shreyansh26 closed 1 year ago

Seems that loss does not really converge with int4 fine tuning - 12% into data but loss remains 10.40?
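For context, a loss stuck near 10.40 is very close to what a model guessing uniformly over the vocabulary would score. The sketch below works that number out, assuming the reported loss is token-level cross-entropy in nats and that the LLaMA tokenizer has 32,000 entries; neither assumption is stated in this thread.

```python
import math


def uniform_cross_entropy(vocab_size: int) -> float:
    """Cross-entropy (in nats) of a uniform guess over vocab_size tokens."""
    return math.log(vocab_size)


# Assumption: LLaMA tokenizer vocabulary size.
LLAMA_VOCAB_SIZE = 32_000

chance_loss = uniform_cross_entropy(LLAMA_VOCAB_SIZE)
print(f"chance-level loss: {chance_loss:.2f}")  # chance-level loss: 10.37
```

If those assumptions hold, a loss that stays around 10.4 suggests the model output carries almost no information about the next token, rather than training that is merely slow.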

That's an issue as well. Had raised it here - https://github.com/stochasticai/xturing/issues/162

Hi @shreyansh26, Can you share your GPU model and system specs? We will try to resolve this issue asap

@StochasticRomanAgeev For me -

Platform: Paperspace Gradient CPU: 8 core RAM: 45G GPU: A4000 16G

I am using an n1-standard-8 VM on GCP with a single 16 GB T4.

Do you use latest version of our lib?

I think so yes. My version number is 0.1.1

Same here.

On Apr 28, 2023 at 5:03 AM, <Roman Ageev @.***)> wrote:

Do you use latest version of our lib?


The same happened to me. I tried with a single RTX 4090 GPU on vast.ai.

I'm using Google colab pro with NVIDIA A100-SXM4-40GB and I got this issue with llama_lora_int8

@shreyansh26 We just released the v0.1.2 that should fix these issues. Can you please try and let us know?

I had the same problem before that everybody complained with v0.1.1. The new version v0.1.2 solved it. Thanks!
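Since the behaviour differs between v0.1.1 and v0.1.2, it may help to confirm which version is actually installed in the environment that runs the training. This is a minimal sketch using only the standard library; the helper names are made up for illustration, and the version parsing assumes plain numeric releases such as 0.1.2.

```python
from importlib import metadata
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '0.1.2' into (0, 1, 2). Assumes purely numeric segments."""
    return tuple(int(part) for part in text.split("."))


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string, or None if not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def has_fix(package: str = "xturing", minimum: str = "0.1.2") -> bool:
    """True if the package is installed at or above the minimum version."""
    current = installed_version(package)
    return current is not None and parse_version(current) >= parse_version(minimum)


if __name__ == "__main__":
    print("installed:", installed_version("xturing"))
    print("has v0.1.2 or newer:", has_fix())
```

Running this inside the notebook or VM that does the finetuning avoids checking a different Python environment by mistake.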

Hey @sarthaklangde, thank you. After upgrading, I don't see empty strings anymore. Loss values are also no longer constant, although it is highly fluctuating, which may be due to hyperparams. That's okay. But there is a different issue I am experiencing.

I finetuned the llama_lora_int4 on Alpaca for just one epoch to test. Script is below.

from xturing.datasets.instruction_dataset import InstructionDataset

from xturing.models import BaseModel

import sys

# Initializes the model

model = BaseModel.create("llama_lora_int4")

finetuning_config = model.finetuning_config()

print(finetuning_config)

finetuning_config.batch_size = 4

finetuning_config.gradient_accumulation_steps = 2

finetuning_config.num_train_epochs = 1

finetuning_config.learning_rate = 1e-7

finetuning_config.weight_decay = 0.01

finetuning_config.optimizer_name = "adamw"

print(model.finetuning_config())

instruction_dataset = InstructionDataset("data/alpaca_data")

model.finetune(dataset=instruction_dataset)

# Save the model

model.save("./llama_weights_alpaca")

However, on testing, the original model and the finetuned model both return the same strings. I have tried for multiple inputs.

from xturing.datasets.instruction_dataset import InstructionDataset

from xturing.models import BaseModel

from datasets import load_from_disk

from tqdm import tqdm

import gc

model = BaseModel.create('llama_lora_int4')

output = model.generate(texts=["Explain Newton's laws of motion."])

print("Generated output by the model: {}".format(output))

del model

gc.collect()

model = BaseModel.load('./llama_weights_alpaca')

output = model.generate(texts=["Explain Newton's laws of motion."])

print("Generated output by the model: {}".format(output))

The output is this -

I will run another finetuning run with default learning rates to check if the issue is dependent on learning rate or not. Maybe it is a trivial issue. I'll update on this thread.

I did another run, this time with the default learning rate of 1e-4. The loss curve looked better with this. But during inference, I am still seeing the same issue. The output from the finetuned model is the same as the one from llama_lora_int4.

These were my hyperparameters.

learning_rate=0.0001

gradient_accumulation_steps=1

batch_size=8

weight_decay=0.01

warmup_steps=50

eval_steps=5000

save_steps=5000

max_length=256

num_train_epochs=3

logging_steps=10

max_grad_norm=2.0

save_total_limit=4

optimizer_name='adamw'

output_dir='saved_model'

Hi @shreyansh26, how many num_train_epochs did you set during training?

1 epoch. But the loss went down from 10.4 to 0.9 or so. So the model was learning something but probably something is wrong with the loading or saving part. Not sure.
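One way to narrow down whether the problem is in saving or loading is to compare parameter values before and after the round trip. The sketch below uses plain Python dictionaries as stand-ins for flattened weights; the parameter names are hypothetical, and nothing here is part of the xturing API.

```python
from typing import Dict, List, Sequence


def changed_parameters(
    before: Dict[str, Sequence[float]],
    after: Dict[str, Sequence[float]],
    tolerance: float = 0.0,
) -> List[str]:
    """Return names of parameters whose values differ by more than tolerance."""
    changed = []
    for name in sorted(set(before) | set(after)):
        old, new = before.get(name), after.get(name)
        if old is None or new is None or len(old) != len(new):
            changed.append(name)  # missing on one side, or a different size
            continue
        if any(abs(a - b) > tolerance for a, b in zip(old, new)):
            changed.append(name)
    return changed


# Toy stand-ins for flattened weights; the names are hypothetical.
base = {"lora_A": [0.0, 0.0], "lora_B": [0.1, 0.2], "embed": [1.0, 2.0]}
reloaded = {"lora_A": [0.0, 0.0], "lora_B": [0.1, 0.2], "embed": [1.0, 2.0]}

print(changed_parameters(base, reloaded))  # []
```

With PyTorch, such dictionaries could be built from a module's `state_dict()` by calling `.flatten().tolist()` on each tensor, ideally restricted to the adapter parameters to keep it cheap. An empty result when comparing the base model with the reloaded finetuned model would point at the adapter weights not being saved or not being loaded.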

Hi @shreyansh26, we are testing this. We need to run the finetuning loop from scratch to check whether it is a saving problem.

Hi @shreyansh26,

We released a fix for this issue.

You should also consider a smaller weight_decay to see changes in generation.

Please run pip install xturing -U

Hey @StochasticRomanAgeev, did you test the new changes at your end as well? I ask this because I am experiencing something very different now.

Reduced weight decay as you suggested, this is my training script now -

from xturing.datasets.instruction_dataset import InstructionDataset

from xturing.models import BaseModel

import sys

# Initializes the model

model = BaseModel.create("llama_lora_int4")

finetuning_config = model.finetuning_config()

print(finetuning_config)

finetuning_config.batch_size = 4

finetuning_config.gradient_accumulation_steps = 2

finetuning_config.num_train_epochs = 1

finetuning_config.weight_decay = 0.0001

finetuning_config.optimizer_name = "adamw"

print(model.finetuning_config())

instruction_dataset = InstructionDataset("../data/alpaca_data")

model.finetune(dataset=instruction_dataset)

# Save the model

model.save("./llama_weights_alpaca")

And the same test script -

from xturing.datasets.instruction_dataset import InstructionDataset

from xturing.models import BaseModel

from datasets import load_from_disk

from tqdm import tqdm

import gc

model = BaseModel.create('llama_lora_int4')

output = model.generate(texts=["Explain Newton's laws of motion."])

print("Generated output by the model: {}".format(output))

del model

gc.collect()

model = BaseModel.load('./llama_weights_alpaca')

output = model.generate(texts=["Explain Newton's laws of motion."])

print("Generated output by the model: {}".format(output))

The output from the new model is some garbage values it seems -

This is the case with other prompts I tried as well. The outputs are not the same but still a combination of '1', '\n' and some alphabets in between.

The loss did decrease similarly as last time, so some training did happen, but not sure why the outputs are like this.

Just a question: can I train a model with a Kaggle GPU? As soon as the training starts I get the error below. Can anyone take a look and guide me?

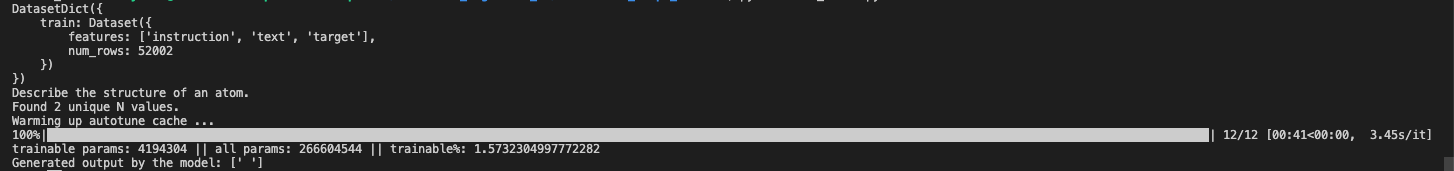

Found 2 unique N values.

Warming up autotune cache ...

0%| | 0/12 [00:00<?, ?it/s]

/usr/bin/ld: cannot find -lcuda

collect2: error: ld returned 1 exit status

0%| | 0/12 [00:00<?, ?it/s]

KeyError Traceback (most recent call last) File :21, in matmul_248_kernel(a_ptr, b_ptr, c_ptr, scales_ptr, zeros_ptr, g_ptr, M, N, K, bits, maxq, stride_am, stride_ak, stride_bk, stride_bn, stride_cm, stride_cn, stride_scales, stride_zeros, BLOCK_SIZE_M, BLOCK_SIZE_N, BLOCK_SIZE_K, GROUP_SIZE_M, grid, num_warps, num_stages, extern_libs, stream, warmup)

KeyError: ('2-.-0-.-0-ccf5c87a2f99551e7274ef3e5605df6e-d6252949da17ceb5f3a278a70250af13-3b85c7bef5f0a641282f3b73af50f599-3d2aedeb40d6d81c66a42791e268f98b-3498c340fd4b6ee7805fd54b882a04f5-e1f133f98d04093da2078dfc51c36b72-b26258bf01f839199e39d64851821f26-d7c06e3b46e708006c15224aac7a1378-f585402118c8a136948ce0a49cfe122c', (torch.float16, torch.int32, torch.float16, torch.float16, torch.int32, torch.int32, 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32', 'i32'), (256, 64, 32, 8), (True, True, True, True, True, True, (False, True), (True, False), (True, False), (False, False), (False, False), (True, False), (False, True), (True, False), (False, True), (True, False), (False, True), (True, False), (True, False)))

During handling of the above exception, another exception occurred:

CalledProcessError Traceback (most recent call last) Cell In[9], line 6 3 import sys 5 # Initializes the model ----> 6 model = BaseModel.create("llama_lora_int4") 8 finetuning_config = model.finetuning_config() 10 print(finetuning_config)

File /opt/conda/lib/python3.10/site-packages/xturing/registry.py:14, in BaseParent.create(cls, class_key, *args, kwargs) 12 @classmethod 13 def create(cls, class_key, *args, *kwargs): ---> 14 return cls.registry[class_key](args, kwargs)

File /opt/conda/lib/python3.10/site-packages/xturing/models/llama.py:72, in LlamaLoraInt4.init(self, weights_path) 71 def init(self, weights_path: Optional[str] = None): ---> 72 super().init(LlamaLoraInt4Engine.config_name, weights_path)

File /opt/conda/lib/python3.10/site-packages/xturing/models/causal.py:215, in CausalLoraInt8Model.init(self, engine, weights_path) 213 def init(self, engine: str, weights_path: Optional[str] = None): 214 assert_not_cpu_int8() --> 215 super().init(engine, weights_path)

File /opt/conda/lib/python3.10/site-packages/xturing/models/causal.py:193, in CausalLoraModel.init(self, engine, weights_path) 192 def init(self, engine: str, weights_path: Optional[str] = None): --> 193 super().init(engine, weights_path)

File /opt/conda/lib/python3.10/site-packages/xturing/models/causal.py:28, in CausalModel.init(self, engine, weights_path) 27 def init(self, engine: str, weights_path: Optional[str] = None): ---> 28 self.engine = BaseEngine.create(engine, weights_path) 30 self.model_name = engine.replace("_engine", "") 32 # Finetuning config

File /opt/conda/lib/python3.10/site-packages/xturing/registry.py:14, in BaseParent.create(cls, class_key, *args, kwargs) 12 @classmethod 13 def create(cls, class_key, *args, *kwargs): ---> 14 return cls.registry[class_key](args, kwargs)

File /opt/conda/lib/python3.10/site-packages/xturing/engines/llama_engine.py:166, in LlamaLoraInt4Engine.init(self, weights_path) 161 state_dict = torch.load( 162 weights_path / Path("pytorch_model.bin"), map_location="cpu" 163 ) 165 if warmup_autotune: --> 166 autotune_warmup(model) 168 model.seqlen = 2048 170 model.gptq = True

File /opt/conda/lib/python3.10/site-packages/xturing/engines/quant_utils/quant.py:862, in autotune_warmup(model, transpose) 860 for n, (k, qweight, scales, qzeros, g_idx, bits, maxq) in n_values.items(): 861 a = torch.randn(m, k, dtype=torch.float16, device="cuda") --> 862 matmul248(a, qweight, scales, qzeros, g_idx, bits, maxq) 863 if transpose: 864 a = torch.randn(m, n, dtype=torch.float16, device="cuda")

File /opt/conda/lib/python3.10/site-packages/xturing/engines/quant_utils/quant.py:635, in matmul248(input, qweight, scales, qzeros, g_idx, bits, maxq) 628 output = torch.empty( 629 (input.shape[0], qweight.shape[1]), device="cuda", dtype=torch.float16 630 ) 631 grid = lambda META: ( 632 triton.cdiv(input.shape[0], META["BLOCK_SIZE_M"]) 633 * triton.cdiv(qweight.shape[1], META["BLOCK_SIZE_N"]), 634 ) --> 635 matmul_248_kernel[grid]( 636 input, 637 qweight, 638 output, 639 scales, 640 qzeros, 641 g_idx, 642 input.shape[0], 643 qweight.shape[1], 644 input.shape[1], 645 bits, 646 maxq, 647 input.stride(0), 648 input.stride(1), 649 qweight.stride(0), 650 qweight.stride(1), 651 output.stride(0), 652 output.stride(1), 653 scales.stride(0), 654 qzeros.stride(0), 655 ) 656 return output

File /opt/conda/lib/python3.10/site-packages/xturing/engines/quant_utils/custom_autotune.py:89, in Autotuner.run(self, *args, *kwargs) 87 pruned_configs = self.prune_configs(kwargs) 88 bench_start = time.time() ---> 89 timings = {config: self._bench(args, config=config, **kwargs) 90 for config in pruned_configs} 91 timings = {k:v for k,v in timings.items() if v != float('inf')} 92 bench_end = time.time()

File /opt/conda/lib/python3.10/site-packages/xturing/engines/quant_utils/custom_autotune.py:89, in (.0) 87 pruned_configs = self.prune_configs(kwargs) 88 bench_start = time.time() ---> 89 timings = {config: self._bench(*args, config=config, **kwargs) 90 for config in pruned_configs} 91 timings = {k:v for k,v in timings.items() if v != float('inf')} 92 bench_end = time.time()

File /opt/conda/lib/python3.10/site-packages/xturing/engines/quant_utils/custom_autotune.py:71, in Autotuner._bench(self, config, *args, *meta) 67 self.fn.run(args, num_warps=config.num_warps, num_stages=config.num_stages, **current) 68 try: 69 # In testings using only 40 reps seems to be close enough and it appears to be what PyTorch uses 70 # PyTorch also sets fast_flush to True, but I didn't see any speedup so I'll leave the default ---> 71 return triton.testing.do_bench(kernel_call, rep=40) 72 except triton.compiler.OutOfResources: 73 return float('inf')

File /opt/conda/lib/python3.10/site-packages/triton/testing.py:143, in do_bench(fn, warmup, rep, grad_to_none, percentiles, record_clocks, fast_flush) 124 """ 125 Benchmark the runtime of the provided function. By default, return the median runtime of :code:`fn` along with 126 the 20-th and 80-th performance percentile. (...) 139 :type fast_flush: bool 140 """ 142 # Estimate the runtime of the function --> 143 fn() 144 torch.cuda.synchronize() 145 start_event = torch.cuda.Event(enable_timing=True)

File /opt/conda/lib/python3.10/site-packages/xturing/engines/quant_utils/custom_autotune.py:67, in Autotuner._bench..kernel_call() 65 config.pre_hook(self.nargs) 66 self.hook(args) ---> 67 self.fn.run(*args, num_warps=config.num_warps, num_stages=config.num_stages, **current)

File :41, in matmul_248_kernel(a_ptr, b_ptr, c_ptr, scales_ptr, zeros_ptr, g_ptr, M, N, K, bits, maxq, stride_am, stride_ak, stride_bk, stride_bn, stride_cm, stride_cn, stride_scales, stride_zeros, BLOCK_SIZE_M, BLOCK_SIZE_N, BLOCK_SIZE_K, GROUP_SIZE_M, grid, num_warps, num_stages, extern_libs, stream, warmup)

File /opt/conda/lib/python3.10/site-packages/triton/compiler.py:1588, in compile(fn, kwargs) 1585 first_stage = list(stages.keys()).index(ir) 1587 # cache manager -> 1588 so_path = make_stub(name, signature, constants) 1589 # create cache manager 1590 fn_cache_manager = CacheManager(make_hash(fn, kwargs))

File /opt/conda/lib/python3.10/site-packages/triton/compiler.py:1477, in make_stub(name, signature, constants) 1475 with open(src_path, "w") as f: 1476 f.write(src) -> 1477 so = _build(name, src_path, tmpdir) 1478 with open(so, "rb") as f: 1479 so_cache_manager.put(f.read(), so_name, binary=True)

File /opt/conda/lib/python3.10/site-packages/triton/compiler.py:1392, in _build(name, src, srcdir) 1390 cc_cmd = [cc, src, "-O3", f"-I{cu_include_dir}", f"-I{py_include_dir}", f"-I{srcdir}", "-shared", "-fPIC", "-lcuda", "-o", so] 1391 cc_cmd += [f"-L{dir}" for dir in cuda_lib_dirs] -> 1392 ret = subprocess.check_call(cc_cmd) 1394 if ret == 0: 1395 return so

File /opt/conda/lib/python3.10/subprocess.py:369, in check_call(*popenargs, **kwargs) 367 if cmd is None: 368 cmd = popenargs[0] --> 369 raise CalledProcessError(retcode, cmd) 370 return 0

CalledProcessError: Command '['/usr/bin/gcc', '/tmp/tmpk73auo4o/main.c', '-O3', '-I/usr/local/cuda/include', '-I/opt/conda/include/python3.10', '-I/tmp/tmpk73auo4o', '-shared', '-fPIC', '-lcuda', '-o', '/tmp/tmpk73auo4o/matmul_248_kernel.cpython-310-x86_64-linux-gnu.so']' returned non-zero exit status 1.

from xturing.datasets.instruction_dataset import InstructionDataset

from xturing.models import BaseModel

import sys

model = BaseModel.create("llama_lora_int4")

finetuning_config = model.finetuning_config()

print(finetuning_config)

finetuning_config.batch_size = 4

finetuning_config.gradient_accumulation_steps = 2

finetuning_config.num_train_epochs = 1

finetuning_config.learning_rate = 1e-7

finetuning_config.weight_decay = 0.01

finetuning_config.optimizer_name = "adamw"

print(model.finetuning_config())

instruction_dataset = InstructionDataset("/kaggle/working/alpaca_data")

model.finetune(dataset=instruction_dataset)

model.save("/kaggle/working/llama_weights_alpaca")
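The log for this Kaggle run contains the line /usr/bin/ld: cannot find -lcuda, which means the linker could not find an unversioned libcuda.so while Triton was compiling its kernel stub. A rough way to see where the library lives, if anywhere, is sketched below. The directory list is an assumption about typical Linux and CUDA layouts, not something confirmed for Kaggle images, and `find_libcuda` is a made-up helper name.

```python
import ctypes.util
from pathlib import Path
from typing import Iterable, List

# Assumption: common locations for the CUDA driver library or its stub.
DEFAULT_DIRS = (
    "/usr/lib/x86_64-linux-gnu",
    "/usr/lib64",
    "/usr/local/cuda/lib64",
    "/usr/local/cuda/lib64/stubs",
    "/usr/local/nvidia/lib64",
)


def find_libcuda(directories: Iterable[str] = DEFAULT_DIRS) -> List[str]:
    """List libcuda shared objects found directly inside the given directories."""
    hits: List[str] = []
    for directory in directories:
        path = Path(directory)
        if path.is_dir():
            hits.extend(str(p) for p in sorted(path.glob("libcuda.so*")))
    return hits


if __name__ == "__main__":
    # What the dynamic loader can resolve at run time (may be None).
    print("loader sees:", ctypes.util.find_library("cuda"))
    # What exists on disk in the candidate directories.
    print("files found:", find_libcuda())
```

Linking with -lcuda needs a file named exactly libcuda.so on the linker's search path, so finding only libcuda.so.1 would be consistent with this error. One possible workaround, untested in this thread, is to add the directory that holds libcuda.so to the LIBRARY_PATH environment variable before starting Python, since gcc consults it at link time.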

The output from the new model is some garbage values it seems

This is the case with other prompts I tried as well. The outputs are not the same but still a combination of '1', '\n' and some alphabets in between.

The loss did decrease similarly as last time, so some training did happen, but not sure why the outputs are like this.

I can confirm the description given by shreyansh26. Training on 10k input/output pairs (3 epochs, about two and a half hours with an RTX 4070) gives a fast loss decrease but inconsistent, weird outputs in the end. I used the latest version of xturing (by the way, I had to upgrade numpy and click to make it work).

Hi again, @gredin @cloudcoder2

We will replace the int4 version with a newer implementation soon.

For now, you can try the int8 version with LoRA.

Hi @gredin @cloudcoder2, we have added int4 with the newer implementation in the latest update.

Hope it helps!

Referencing #161 again.

I tried finetuning the model on the alpaca data. Given below is the script I used.

Now when doing inference on the saved model, using the script below -

again outputs an empty string -