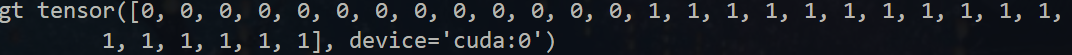

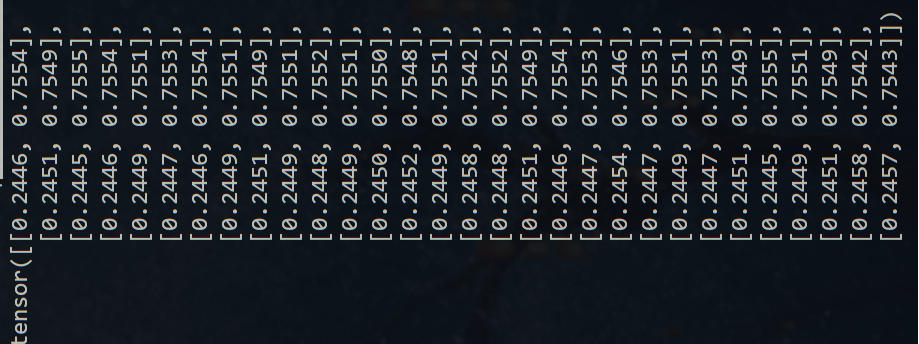

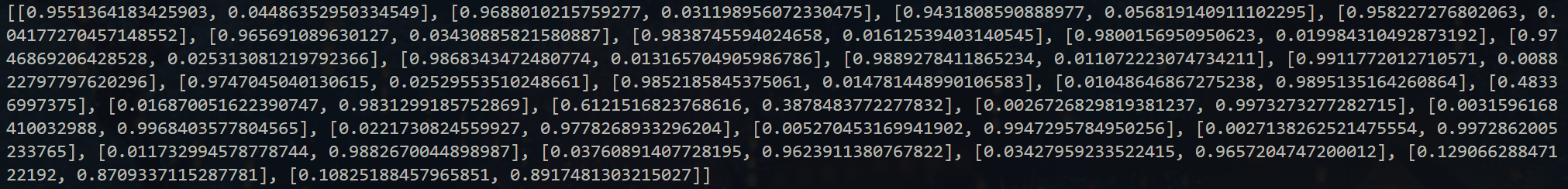

I trained on my own dataset (200 train + 30 val slides). Each slide was cut into 500~1500 tiles, and each tile was then embedded into a 2048-dim vector. Training takes about 1 min per epoch. How many slides are in your dataset (train and val), and how long does one epoch take for you? By the way, my results were pretty poor and my training was not stable, and I don't know what might be wrong.

I would like to ask how long the training step takes per epoch. I used the model you built and modified the PPGE model by adding an FFT to reduce the dimension of the convolution operation. The only issue I noticed is that the Trainer takes a long time to finish a single epoch. Is that related to the shape of the image (2154, 1024), or did I miss something?
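One way to narrow down a slow epoch is to time how long the loop waits for data versus how long it spends in the training step. This is only a minimal sketch under assumptions: `time_epoch`, `loader`, and `step_fn` are hypothetical placeholders for any iterable of batches and any per-batch function, not part of this repo's Trainer.

```python
import time


def time_epoch(loader, step_fn):
    """Return (seconds waiting on data, seconds in step_fn, batch count) for one pass.

    `loader` is any iterable of batches and `step_fn` any callable taking one
    batch; both are hypothetical stand-ins, not this repo's Trainer API.
    """
    load_s = 0.0
    step_s = 0.0
    n_batches = 0
    it = iter(loader)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # time spent waiting for the next batch
        except StopIteration:
            break
        t1 = time.perf_counter()
        step_fn(batch)  # time spent in forward/backward/optimizer
        t2 = time.perf_counter()
        load_s += t1 - t0
        step_s += t2 - t1
        n_batches += 1
    return load_s, step_s, n_batches
```

If most of the time is in loading, the input pipeline (image decoding, resizing, number of workers) is the likely bottleneck rather than the model. On a GPU the step timing is only meaningful if the device is synchronized before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch), since kernel launches are asynchronous.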