Thanks for the issue.

In general I agree that better handling of workers is desirable. Something to consider: once a worker is instantiated, it generates a uuid that it uses for locking jobs. Only a worker with the correct uuid can unlock a job, so this uuid could be used to represent a unique worker in the queue.
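
To illustrate the locking idea, here is a minimal in-memory sketch. It is not the library's actual code: `LockTable`, `makeWorkerToken`, and the job ids are hypothetical names, and the token generator is only a stand-in for a real uuid.

```typescript
// In-memory sketch of token-based job locking (illustrative only).
type JobId = string;

class LockTable {
  // Maps a job id to the token of the worker currently holding its lock.
  private locks = new Map<JobId, string>();

  // Acquire the lock if the job is free; returns true on success.
  lock(jobId: JobId, token: string): boolean {
    if (this.locks.has(jobId)) return false;
    this.locks.set(jobId, token);
    return true;
  }

  // Release the lock only when the caller presents the token that took it.
  unlock(jobId: JobId, token: string): boolean {
    if (this.locks.get(jobId) !== token) return false;
    this.locks.delete(jobId);
    return true;
  }

  // Because the token is unique per worker, it also identifies the holder.
  holder(jobId: JobId): string | undefined {
    return this.locks.get(jobId);
  }
}

// Hypothetical stand-in for the uuid a worker generates when instantiated.
let workerCounter = 0;
function makeWorkerToken(): string {
  workerCounter += 1;
  return `worker-${workerCounter}-${Math.random().toString(36).slice(2)}`;
}

const table = new LockTable();
const workerA = makeWorkerToken();
const workerB = makeWorkerToken();

const acquired = table.lock("job-1", workerA);
const holderBefore = table.holder("job-1");
const stolen = table.unlock("job-1", workerB);   // wrong token, lock stays
const released = table.unlock("job-1", workerA); // correct token, lock freed
```

Since the token already maps each locked job to exactly one worker, exposing it would also answer the question of which worker is processing which job.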

Please see my answers below:

> 3) Application is restarted and new workers take other jobs to process, however, those ACTIVE jobs will never get processed (even when the lock timer expires)

New workers will process old jobs that were active but lost their locks. However, you need at least one QueueScheduler instance for this mechanism to work.
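
Conceptually, the recovery step looks something like the sketch below. This is a simplified in-memory illustration under my own assumptions, not the actual implementation: `ActiveJob`, `recoverStalled`, and the timestamps are hypothetical.

```typescript
// Sketch: a scheduler-like pass that returns jobs with expired locks
// to the wait list so that any worker can pick them up again.
interface ActiveJob {
  id: string;
  lockExpiresAt: number; // timestamp in ms after which the lock is considered lost
}

// Moves every active job whose lock has expired into `wait`
// and returns the jobs that are still validly locked.
function recoverStalled(active: ActiveJob[], wait: string[], now: number): ActiveJob[] {
  const stillActive: ActiveJob[] = [];
  for (const job of active) {
    if (job.lockExpiresAt <= now) {
      wait.push(job.id); // lock lost: make the job available again
    } else {
      stillActive.push(job); // lock still valid: leave it with its worker
    }
  }
  return stillActive;
}

const waitList: string[] = [];
const remaining = recoverStalled(
  [
    { id: "a", lockExpiresAt: 1000 }, // owner died, lock expired
    { id: "b", lockExpiresAt: 5000 }, // owner still renewing its lock
  ],
  waitList,
  2000,
);
```

Without something running this kind of pass periodically, a job whose worker died stays in the active state even after its lock expires, which matches the symptom described in the quote.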

> Better worker creation process. First of all, it would be nice to have ability to set name for the worker

I agree. That would be a nice and useful feature.

> Right now, as I see it, every application which creates a queue with the same name will effectively become a manager of this queue. The reason I think so is that whenever I start a second application which creates an instance of Queue with the same name, both of them will eventually stop processing jobs.

This should not be the behaviour. You can have as many instances of the same Queue as you want and it should not matter. If you found a case where this does not hold, please submit it as a bug and I will look into it.

The WHAT part

It would be nice to see which jobs a worker is working on.

The WHY part

Whenever you are building a distributed service, which will be executed on multiple machines, it is nice to know how many workers and jobs this specific machine is running.

Second, when the application receives any of the SIG* signals, it would be nice to do something about the jobs that are currently being processed.
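
A rough sketch of what I mean, assuming each worker exposes an async `close()` that waits for its in-flight jobs. The `Closable` interface, the `shutdown` helper, and the timeout value are my own hypothetical names, not an existing API.

```typescript
// Hypothetical minimal interface: anything with an async close().
interface Closable {
  close(): Promise<void>;
}

// Closes all workers, but gives up after timeoutMs so shutdown cannot hang forever.
async function shutdown(
  workers: Closable[],
  timeoutMs: number,
): Promise<"closed" | "timeout"> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<"timeout">((resolve) => {
    timer = setTimeout(() => resolve("timeout"), timeoutMs);
  });
  const closed = Promise.all(workers.map((w) => w.close())).then(
    () => "closed" as const,
  );
  const result = await Promise.race([closed, timeout]);
  if (timer !== undefined) clearTimeout(timer); // do not keep the process alive
  return result;
}

// Wiring in Node.js, shown as a comment because it depends on the runtime:
// process.on("SIGTERM", () => {
//   shutdown(workers, 10_000).then(() => process.exit(0));
// });
```

The timeout matters for the docker-compose case, since a container that does not exit in time after the stop signal is killed anyway.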

Desired behavior:

`docker-compose stop && docker-compose pull && docker-compose up -d`

Current behavior:

`docker-compose stop && docker-compose pull && docker-compose up -d`

The HOW part

There are a few areas for improvement (in my opinion).

1. Better worker creation process First of all, it would be nice to have ability to set name for the worker

Name could be optional due to the fact, that

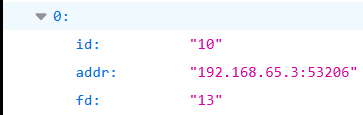

queue.getWorkers()method actually returns IDs for workers.2. Actually allow user to access Worker ID The ID for worker is actually present in the

queue.getWorkers()method, however, it is not accessible to the user3. Something like this would be nice to have in the per-worker output of

queue.getWorkers()4. Mark queues as 'master' and 'slave'

This is needed as multiple applications might need to connect to the queue (maybe with some sort of governor for the number of workers to create; for example, if there are only 5 jobs and 5 servers, each server will only create 1 worker).

Right now, as I see it, every application which creates a queue with the same name will effectively become a manager of this queue. The reason I think so is that whenever I start a second application which creates an instance of `Queue` with the same name, both of them will eventually stop processing jobs. So, `master/slave` is needed to basically disable the "manager" functionality of a particular queue.
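
The governor idea mentioned under item 4 could be as simple as the sketch below. `workersPerServer` and its parameters are hypothetical, and it assumes every server can see the pending job count and the number of servers.

```typescript
// Hypothetical governor: how many workers should one server start?
function workersPerServer(
  pendingJobs: number,
  servers: number,
  maxPerServer: number,
): number {
  if (servers <= 0) throw new RangeError("servers must be positive");
  // Split the pending jobs evenly, rounding up so no job is left without a worker.
  const fairShare = Math.ceil(pendingJobs / servers);
  // Never go below zero or above the per-server cap.
  return Math.min(Math.max(fairShare, 0), maxPerServer);
}

const example = workersPerServer(5, 5, 4); // 5 jobs on 5 servers: 1 worker each
```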