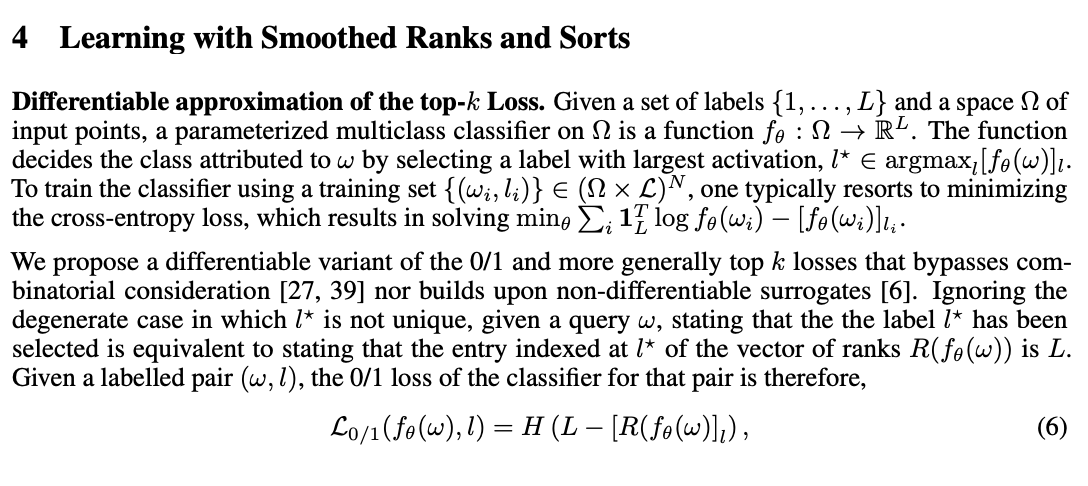

In the paper they follow the method described in Differentiable Ranking and Sorting using Optimal Transport :

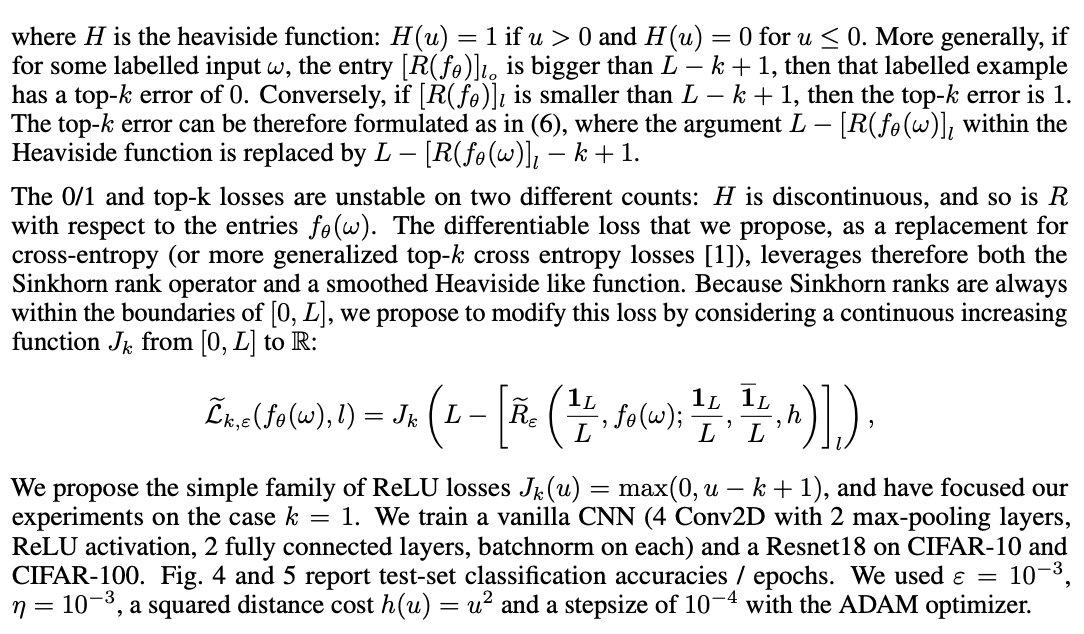

I believe this is what you would need to do:

import torch

import torchsort

def J(u, k=1):

return torch.nn.functional.relu(u - k + 1)

BATCH_SIZE = 64

L = 10 # number of classes

f_w = torch.randn(BATCH_SIZE, L) # logits of the CNN

l = torch.randint(high=L, size=(BATCH_SIZE, 1)) # integer labels

R = torchsort.soft_rank(f_w) # compute ranks of logits

R_l = R.gather(-1, l) # index ranks at label (l)

loss = J(L - R_l).mean()

...Intuitively this makes sense as we are effectively maximizing the rank of the correctly labelled logit. Once I have some more time on my hands I will work to fully reproduce their results, but this should be enough for you to get started!

Thanks a lot for this implementation. I was wondering how can I use the repo to reproduce the results on CIFAR as reported in the paper. As I understand, the target one-hot encoding will serve as top-k classification(k=1). But, after obtaining the logits and passing through the softmax(putting output [0, 1]) the objective is to make the output follow the target ordering. How can this be achieved?