Faster R-CNN works in two stages.

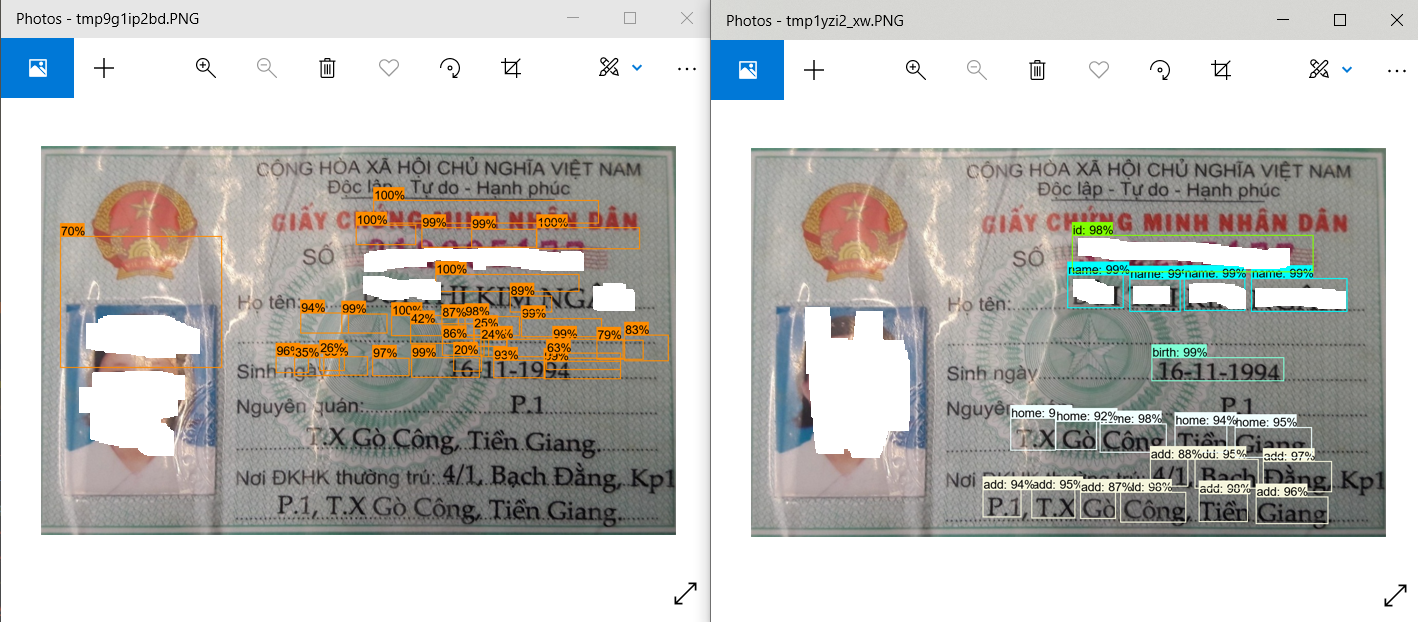

It takes the feature maps from the CNN backbone and passes them to the Region Proposal Network (RPN). The result is the n anchor boxes you see on the left side of the image above. These anchor boxes have different sizes, and for each one the RPN predicts the probability that the anchor contains an object (without a class label) plus bounding-box regression offsets that adjust the anchor to better fit the object. That is why the RPN output won't be close to the final output: it has not yet passed through the second stage.
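To make the RPN step concrete, here is a minimal sketch in plain Python. It assumes the box parameterization from the Faster R-CNN paper (center offsets scaled by anchor size, log-scale width and height) and a single objectness logit per anchor. The function names `make_anchors`, `decode_box`, and `objectness` are hypothetical and are not part of the TensorFlow Object Detection API, which also applies its own scale factors to the offsets.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return anchors (cx, cy, w, h) centered on one feature-map location.

    Each anchor has area scale**2 and aspect ratio h / w = ratio.
    """
    anchors = []
    for scale in scales:
        for ratio in ratios:
            w = scale / math.sqrt(ratio)
            h = scale * math.sqrt(ratio)
            anchors.append((cx, cy, w, h))
    return anchors


def decode_box(anchor, deltas):
    """Apply predicted offsets (tx, ty, tw, th) to an anchor.

    Returns the adjusted box as (xmin, ymin, xmax, ymax).
    """
    acx, acy, aw, ah = anchor
    tx, ty, tw, th = deltas
    cx = acx + tx * aw          # shift center by a fraction of anchor width
    cy = acy + ty * ah          # shift center by a fraction of anchor height
    w = aw * math.exp(tw)       # rescale width
    h = ah * math.exp(th)       # rescale height
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def objectness(logit):
    """Class-agnostic "is this an object?" probability (assumed sigmoid)."""
    return 1.0 / (1.0 + math.exp(-logit))


# One location yields len(scales) * len(ratios) anchors.
anchors = make_anchors(300, 200)
# Hypothetical network outputs for the first anchor.
proposal = decode_box(anchors[0], (0.1, -0.05, 0.2, 0.0))
score = objectness(1.5)
```

The proposals produced this way carry only an objectness score, which is why they look loose and unlabeled compared with the final detections.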

After the RPN, the proposals are passed to the pooling layer (RoI pooling), which crops a fixed-size feature map for each proposal. At this point the proposals still have no class assigned. Finally, these feature maps are passed to fully connected layers with a softmax classifier and a linear regression layer, which classify each object and predict the final bounding boxes. That is where the accurate bounding boxes in the right image come from.
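The second stage can be sketched the same way. This is an illustrative pure-Python version of RoI max pooling on a single-channel feature map, followed by a softmax over class logits. The helper names `roi_max_pool` and `softmax` and the example logits are assumptions for illustration. The real model works on multi-channel tensors, and its fully connected layers (omitted here) produce the logits.

```python
import math


def roi_max_pool(feature_map, box, out_size=2):
    """Max-pool the region `box` of a 2D feature map to out_size x out_size.

    box is (ymin, xmin, ymax, xmax) in integer feature-map coordinates,
    with ymax and xmax exclusive. Regions of any size map to the same
    fixed output size, which is what the classifier head needs.
    """
    ymin, xmin, ymax, xmax = box
    h, w = ymax - ymin, xmax - xmin
    pooled = []
    for i in range(out_size):
        # Row range of bin i: floor at the start, ceil at the end.
        y0 = ymin + (i * h) // out_size
        y1 = ymin + -(-((i + 1) * h) // out_size)
        row = []
        for j in range(out_size):
            x0 = xmin + (j * w) // out_size
            x1 = xmin + -(-((j + 1) * w) // out_size)
            row.append(max(feature_map[y][x]
                           for y in range(y0, y1)
                           for x in range(x0, x1)))
        pooled.append(row)
    return pooled


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 4x4 feature map with values 0..15.
fmap = [[r * 4 + c for c in range(4)] for r in range(4)]
full = roi_max_pool(fmap, (0, 0, 4, 4))      # whole map
partial = roi_max_pool(fmap, (1, 1, 4, 3))   # a 3x2 proposal
# Hypothetical logits for (background, class A, class B).
probs = softmax([0.2, 2.5, -1.0])
```

Both proposals end up as 2x2 outputs even though they cover different areas, and only after this step does each proposal receive a class probability.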

Prerequisites

Please answer the following questions for yourself before submitting an issue.

1. The entire URL of the file you are using

https://github.com/tensorflow/models/blob/master/research/object_detection/utils/visualization_utils.py

2. Describe the bug

The visualization of proposal regions for Faster R-CNN ResNet-101 isn't showing correctly. Left is the output of the RPN; right is the output of the entire network with the Fast R-CNN head.

3. Steps to reproduce

I build the model with `number_of_stages: 1` in the config file.

Then run detection on an image.

Then pass the detections to `visualization_utils.visualize_boxes_and_labels_on_image_array`.

Finally show the image with PIL.

4. Expected behavior

Correct visualization. This one is from Faster R-CNN ResNet-50.

5. Additional context

Include any logs that would be helpful to diagnose the problem.

6. System information