@jch1 can comment on the why of this, but it seems that the graph is "frozen" (i.e., all variables are converted to constant nodes in the graph), so there are no "variables" left in the computation and hence the directory is empty.

lionel92 closed this issue 7 years ago.


@asimshankar Thanks for your reply. I need the variables for serving. What should I do to avoid freezing the graph so that the variables directory is not empty?

@asimshankar @jch1 @lionel92 I am also facing this issue after following the basic tutorials and the provided "export_inference_graph.py" script. If the graph is frozen, is there a way to unfreeze it?

I can't find help anywhere; any advice is greatly appreciated!

@vitutc There is a simple way to solve this problem. Just modify the function "_write_saved_model" in "exporter.py" like this, using the default graph which is already loaded into the global environment instead of generating a frozen one. Don't forget to modify the caller's arguments.

```python
import tensorflow as tf
from tensorflow.python.client import session
from tensorflow.python.saved_model import signature_constants


def _write_saved_model(saved_model_path,
                       trained_checkpoint_prefix,
                       inputs,
                       outputs):
  """Writes SavedModel to disk.

  Args:
    saved_model_path: Path to write SavedModel.
    trained_checkpoint_prefix: path to trained_checkpoint_prefix.
    inputs: The input image tensor to use for detection.
    outputs: A tensor dictionary containing the outputs of a DetectionModel.
  """
  # Restore the trained weights into the already-built default graph instead
  # of a frozen copy, so they remain variables and are written to variables/.
  saver = tf.train.Saver()
  with session.Session() as sess:
    saver.restore(sess, trained_checkpoint_prefix)
    builder = tf.saved_model.builder.SavedModelBuilder(saved_model_path)

    tensor_info_inputs = {
        'inputs': tf.saved_model.utils.build_tensor_info(inputs)}
    tensor_info_outputs = {}
    for k, v in outputs.items():
      tensor_info_outputs[k] = tf.saved_model.utils.build_tensor_info(v)

    detection_signature = (
        tf.saved_model.signature_def_utils.build_signature_def(
            inputs=tensor_info_inputs,
            outputs=tensor_info_outputs,
            method_name=signature_constants.PREDICT_METHOD_NAME))

    builder.add_meta_graph_and_variables(
        sess, [tf.saved_model.tag_constants.SERVING],
        signature_def_map={
            signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY:
                detection_signature,
        },
    )
    builder.save()
```

@lionel92 THANK YOU SO MUCH! A little detail, but so hard for me as a beginner to piece together. I hope this helps other people as well! =D

I created a script, based on @lionel92's modification above, that is meant to replace export_inference_graph.py and export a graph that can be served by TensorFlow Serving.

https://gist.github.com/dnlglsn/c42fbe71b448a11cd72041c5fcc08092

Hello Mr. @dnlglsn, could you please explain why we need the variables folder when we save the model? Is it needed when using the model from C++, or when importing it into OpenCV (C++)?

@kerolos I wanted to use a non-frozen model in Python and serve it via TensorFlow Serving. I guess the way models are saved expects a "variables" folder where the un-frozen variables are saved.

@dnlglsn you should make a pull request to add https://gist.github.com/dnlglsn/c42fbe71b448a11cd72041c5fcc08092 as a separate file that serves unfrozen variables instead of a frozen graph, so those of us who want to serve via Google Cloud ML Engine can use that exporter instead!

Hello @dnlglsn, I used your export_inference_graph.py in place of the original one, but I got the error "TypeError: predict() missing 1 required positional argument: 'true_image_shapes'", caused by line 109 of the script, "output_tensors = detection_model.predict(preprocessed_inputs)". My Python version is 3.6. As a beginner, I don't know how to solve this problem. Could you please tell me how to fix it? Thank you.

Hi, here is the write_saved_model function in exporter.py:

```python
import tensorflow as tf
from tensorflow.python.client import session
from tensorflow.python.saved_model import signature_constants


def write_saved_model(saved_model_path, frozen_graph_def, inputs, outputs):
  """Writes SavedModel to disk.

  If checkpoint_path is not None bakes the weights into the graph thereby
  eliminating the need of checkpoint files during inference. If the model
  was trained with moving averages, setting use_moving_averages to true
  restores the moving averages, otherwise the original set of variables
  is restored.

  Args:
    saved_model_path: Path to write SavedModel.
    frozen_graph_def: tf.GraphDef holding frozen graph.
    inputs: The input image tensor to use for detection.
    outputs: A tensor dictionary containing the outputs of a DetectionModel.
  """
  with tf.Graph().as_default():
    with session.Session() as sess:
      # The frozen GraphDef holds only constants, so there are no variables
      # for the builder to save; this is why variables/ ends up empty.
      tf.import_graph_def(frozen_graph_def, name='')

      builder = tf.saved_model.builder.SavedModelBuilder(saved_model_path)

      tensor_info_inputs = {
          'inputs': tf.saved_model.utils.build_tensor_info(inputs)}
      tensor_info_outputs = {}
      for k, v in outputs.items():
        tensor_info_outputs[k] = tf.saved_model.utils.build_tensor_info(v)

      detection_signature = (
          tf.saved_model.signature_def_utils.build_signature_def(
              inputs=tensor_info_inputs,
              outputs=tensor_info_outputs,
              method_name=signature_constants.PREDICT_METHOD_NAME))

      builder.add_meta_graph_and_variables(
          sess, [tf.saved_model.tag_constants.SERVING],
          signature_def_map={
              signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY:
                  detection_signature,
          },
      )
      builder.save()
```

When I execute:

```shell
python export_inference_graph.py \
  --input_type image_tensor \
  --pipeline_config_path training/ssd_mobilenet_v1_pets.config \
  --trained_checkpoint_prefix training/model.ckpt-59300 \
  --output_directory DetectionModel
```

it creates a model directory named DetectionModel, but that directory contains an empty variables folder. Please suggest an appropriate solution.

@mona-abc I left the job I was doing this work for and we only had Python 2.7. I am sorry, but I can't help you with your Python 3.6 issue. My guess is the underlying API changed and now the script won't work without modification. Look through the notes at the top of the source code on this page. It should shed some light on the situation. https://github.com/tensorflow/models/blob/master/research/object_detection/core/model.py It looks like the preprocess function might be returning a tuple now, but I'm not sure.
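If that guess is right, and `preprocess()` now returns a `(preprocessed_inputs, true_image_shapes)` tuple that has to be threaded through `predict()` and `postprocess()`, the failing call site would need to change roughly as sketched below. `StubDetectionModel` and `run_detection` are hypothetical names: the stub only mimics the method signatures so the pattern can run without TensorFlow, and the real `DetectionModel` in `object_detection/core/model.py` may differ (for example, newer versions may accept extra arguments).

```python
from typing import Any, Dict, List, Tuple

Image = List[List[float]]


class StubDetectionModel:
    """Hypothetical stand-in that only mimics DetectionModel's method signatures."""

    def preprocess(self, inputs: Image) -> Tuple[Image, List[Tuple[int, int]]]:
        # Real models resize and normalize; here we just scale pixel values
        # and record the (height, width) of the single input "image".
        preprocessed = [[px / 255.0 for px in row] for row in inputs]
        true_image_shapes = [(len(inputs), len(inputs[0]))]
        return preprocessed, true_image_shapes

    def predict(self, preprocessed_inputs: Image,
                true_image_shapes: List[Tuple[int, int]]) -> Dict[str, Any]:
        return {"raw_score": sum(sum(row) for row in preprocessed_inputs)}

    def postprocess(self, prediction_dict: Dict[str, Any],
                    true_image_shapes: List[Tuple[int, int]]) -> Dict[str, Any]:
        return {"detection_scores": [prediction_dict["raw_score"]],
                "image_shape": true_image_shapes[0]}


def run_detection(detection_model, inputs):
    """Pass true_image_shapes from preprocess() into predict() and postprocess()."""
    # preprocess() returns a tuple, so unpack both values.
    preprocessed_inputs, true_image_shapes = detection_model.preprocess(inputs)
    # Calling predict(preprocessed_inputs) alone is what produces the
    # "missing 1 required positional argument: 'true_image_shapes'" TypeError.
    prediction_dict = detection_model.predict(preprocessed_inputs, true_image_shapes)
    return detection_model.postprocess(prediction_dict, true_image_shapes)
```

With the real model, the same two changes apply: unpack the tuple from `preprocess()` and pass `true_image_shapes` along to the later calls.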

@dnlglsn Thank you for your response. I changed my Python version to 2.7 and exported the model successfully. But now I've run into another problem calling the model with client.py. The code I mainly rely on is from "Deploying Object Detection Model with TensorFlow Serving — Part 3" (https://medium.com/@KailaGaurav/deploying-object-detection-model-with-tensorflow-serving-part-3-6a3d59c1e7c0); in that article, the author saves the model the same way you do. But I get errors when using that client.py script, and I can't figure them out. Would you please share the "client.py" you used with TensorFlow Serving for object detection? That would be very helpful to us TF learners. Thank you.

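Hi, just sharing my case for others: I needed to pass the "saver" parameter to fix the "empty variables folder" issue.

```python
import tensorflow as tf

# `model` is my own tracker object: `model.sess` is the session the weights
# were restored into, and `model.saver` is the tf.train.Saver that restored them.
builder = tf.saved_model.builder.SavedModelBuilder('models/siam-fc/1')
builder.add_meta_graph_and_variables(
    model.sess,
    [tf.saved_model.tag_constants.SERVING],
    signature_def_map={
        'tracker_init': model_signature,
        'tracker_predict': model_signature2
    },
    # Passing the restoring saver explicitly is what fixed the empty
    # variables folder in my case.
    saver=model.saver
)
builder.save()
```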

@minfenghong hi, where did you get the model and model.saver?


Hi, the saver comes from restoring the model from checkpoint files, e.g. in https://github.com/bilylee/SiamFC-TensorFlow/blob/master/inference/inference_wrapper.py: saver = tf.train.Saver(variables_to_restore_filterd)

I was also struggling with this, and was able to export a model (including the variables files) by updating @lionel92's suggestion for the current OD API code version (as of July 2). It mostly involves changing the write_saved_model function in models/research/object_detection/exporter.py.

While this works, it's definitely a hack. @asimshankar, I think we should reopen this issue (or maybe #2045) -- I think it'd be valuable for the community to have a "proper" way to export models for inference w/ TF Serving.

Update write_saved_model in exporter.py:

```python
def write_saved_model(saved_model_path,
                      trained_checkpoint_prefix,
                      inputs,
                      outputs):

  saver = tf.train.Saver()
  with tf.Session() as sess:
    saver.restore(sess, trained_checkpoint_prefix)
    builder = tf.saved_model.builder.SavedModelBuilder(saved_model_path)

    tensor_info_inputs = {
        'inputs': tf.saved_model.utils.build_tensor_info(inputs)}
    tensor_info_outputs = {}
    for k, v in outputs.items():
      tensor_info_outputs[k] = tf.saved_model.utils.build_tensor_info(v)

    detection_signature = (
        tf.saved_model.signature_def_utils.build_signature_def(
            inputs=tensor_info_inputs,
            outputs=tensor_info_outputs,
            method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME
        ))

    builder.add_meta_graph_and_variables(
        sess,
        [tf.saved_model.tag_constants.SERVING],
        signature_def_map={
            tf.saved_model.signature_constants
            .DEFAULT_SERVING_SIGNATURE_DEF_KEY:
                detection_signature,
        },
    )
    builder.save()
```

Update _export_inference_graph in exporter.py:

Then, within the _export_inference_graph function, update the final line to pass the checkpoint prefix like:

```python
write_saved_model(saved_model_path, trained_checkpoint_prefix,
                  placeholder_tensor, outputs)
```

Call export script:

Call models/research/object_detection/export_inference_graph.py normally. For me, that looked similar to this:

INPUT_TYPE=encoded_image_string_tensor

PIPELINE_CONFIG_PATH=/path/to/model.config

TRAINED_CKPT_PREFIX=/path/to/model.ckpt-50000

EXPORT_DIR=/path/to/export/dir/001/

python $BUILDS_DIR/models/research/object_detection/export_inference_graph.py \

--input_type=${INPUT_TYPE} \

--pipeline_config_path=${PIPELINE_CONFIG_PATH} \

--trained_checkpoint_prefix=${TRAINED_CKPT_PREFIX} \

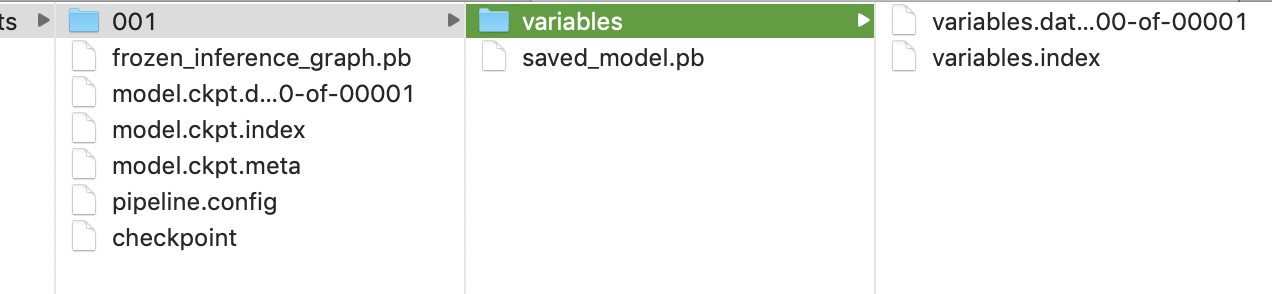

--output_directory=${EXPORT_DIR}If it works, you should see a directory structure like this. This is ready to be dropped into a TF Serving Docker image for scaled inference.
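A quick way to tell whether an export actually wrote the weights is to inspect the variables directory. The sketch below assumes the TF 1.x SavedModel layout described in the TF Serving basic tutorial: saved_model.pb next to a variables/ folder containing variables.index plus one or more variables.data-*-of-* shards. `check_saved_model_dir` is a hypothetical helper; it only looks at files and does not load the model.

```python
import fnmatch
import os
from typing import List


def check_saved_model_dir(export_dir: str) -> List[str]:
    """Return a list of layout problems found in a SavedModel directory."""
    problems = []
    if not os.path.isfile(os.path.join(export_dir, "saved_model.pb")):
        problems.append("missing saved_model.pb")

    var_dir = os.path.join(export_dir, "variables")
    if not os.path.isdir(var_dir):
        problems.append("missing variables/ directory")
        return problems

    names = os.listdir(var_dir)
    if "variables.index" not in names:
        problems.append("missing variables/variables.index")
    # Checkpoint data is sharded as variables.data-00000-of-00001, etc.
    if not fnmatch.filter(names, "variables.data-*-of-*"):
        problems.append("no variables/variables.data-*-of-* shards")
    return problems
```

Point it at the directory that contains saved_model.pb; an empty list means the variables were written. Note that an empty variables/ folder is expected for a frozen graph, since all weights are constants; it only signals a problem if you wanted the weights kept as variables.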

@wronk I did the same thing, but I only have the .pb file and the variables folder is empty :( HEEELPPP!! TensorFlow version: 1.13.1

@pablo-ticnow, it could be the version of TF, but I'm guessing something else is wrong. I just confirmed that this still works on TF v1.15, and I made that original post using v1.12. Try it out with some of the models from the model zoo to see if you can narrow down the issue.

@asimshankar, @pkulzc, I still think this issue or #2045 is worth a second look.

Does anyone have a solution for this?

I have the same issue with TF 1.14.

@wronk I did the same thing, but I only have the .pb file and the variables folder is empty :( HEEELPPP!! TensorFlow version: 1.15.1. I also only have a .pb file generated by converting a PyTorch model through ONNX, so I cannot supply a checkpoint path like model.ckpt-50000 as the value of TRAINED_CKPT_PREFIX. Does that mean there is no way to unfreeze my frozen graph?



Hi everyone, I made the changes to exporter.py, but unfortunately I got the error "ImportError: No module named 'tensorflow.contrib'", so I downgraded TF. The variables folder is still empty, though. Is there any solution for that?

I can confirm that TF 1.10.1 still generates the variables data and index files under the variables folder.

@pharrellyhy did you make the changes to the code as @MissDinosaur described? I made the changes and downgraded to 1.15, but the variables folder is still empty. Is there any way to export the model and serve it?

With 1.10 I get:

```
Traceback (most recent call last):
  File "export_inference_graph.py", line 162, in <module>
    tf.app.run()
  File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/platform/app.py", line 125, in run
    _sys.exit(main(argv))
  File "export_inference_graph.py", line 158, in main
    write_inference_graph=FLAGS.write_inference_graph)
  File "/usr/local/lib/python3.5/dist-packages/object_detection/exporter.py", line 482, in export_inference_graph
    write_inference_graph=write_inference_graph)
  File "/usr/local/lib/python3.5/dist-packages/object_detection/exporter.py", line 385, in _export_inference_graph
    graph_hook_fn=graph_hook_fn)
  File "/usr/local/lib/python3.5/dist-packages/object_detection/exporter.py", line 352, in build_detection_graph
    output_collection_name=output_collection_name)
  File "/usr/local/lib/python3.5/dist-packages/object_detection/exporter.py", line 331, in _get_outputs_from_inputs
    output_tensors, true_image_shapes)
  File "/usr/local/lib/python3.5/dist-packages/object_detection/meta_architectures/ssd_meta_arch.py", line 802, in postprocess
    prediction_dict.get('mask_predictions')
  File "/usr/local/lib/python3.5/dist-packages/object_detection/meta_architectures/ssd_meta_arch.py", line 785, in _non_max_suppression_wrapper
    return tf.contrib.tpu.outside_compilation(
AttributeError: module 'tensorflow.contrib.tpu' has no attribute 'outside_compilation'
```

@Anatolist Nope, I have my own script, but it looks similar. Can you post your code and how you run it?

Thank you for your reply. I did not write any code; I use this command to export. I started from a pre-trained model, but model.ckpt-29275 is from my own training.

```shell
python3 export_inference_graph.py \
  --input_type image_tensor \
  --pipeline_config_path training/ssd_mobilenet_v1_pets.config \
  --trained_checkpoint_prefix training/model.ckpt-29275 \
  --output_directory cellphone_inference_graph
```

and the exporter.py file has been changed as @MissDinosaur said.

@pharrellyhy It works! I had been stuck on this for over 2 weeks. These are the steps I took:

1. Downgrade Python 3.6 to 2.7.
2. Install the missing modules needed to run:

```shell
python3 export_inference_graph.py \
  --input_type image_tensor \
  --pipeline_config_path training/ssd_mobilenet_v1_pets.config \
  --trained_checkpoint_prefix training/model.ckpt-29275 \
  --output_directory cellphone_inference_graph
```

@Anatolist This works! Thanks a lot!

> write_saved_model(saved_model_path, trained_checkpoint_prefix, placeholder_tensor, outputs)

Hi, I followed your suggestion, but I'm getting this error. Can anyone help me with this?

```
  File "/content/drive/My Drive/tfod/models/research/object_detection/exporter.py", line 660, in export_inference_graph
    side_input_types=side_input_types)
  File "/content/drive/My Drive/tfod/models/research/object_detection/exporter.py", line 601, in _export_inference_graph
    write_saved_model(saved_model_path, trained_checkpoint_prefix, placeholder_tensor, outputs)
NameError: name 'placeholder_tensor' is not defined
```

@srikanth9444, I had the same error but was able to get it to work by replacing placeholder_tensor with placeholder_tensor_dict['inputs'], like this:

```python
write_saved_model(saved_model_path, trained_checkpoint_prefix,
                  placeholder_tensor_dict['inputs'], outputs)
```

Hi, when I make these changes in exporter.py I get the error "AttributeError: 'dict' object has no attribute 'dtype'". Can anyone help?
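That error suggests a dictionary of placeholders (like placeholder_tensor_dict) is being passed where `build_tensor_info` expects a single tensor. One way to let write_saved_model accept either form is to normalize the inputs before building the signature, as sketched below. `build_input_tensor_infos` is a hypothetical helper; the tensor-info builder is passed in so the sketch runs without TensorFlow. In real code you would pass `tf.saved_model.utils.build_tensor_info` (TF 1.x) or `tf.compat.v1.saved_model.utils.build_tensor_info`.

```python
from collections.abc import Mapping
from typing import Any, Callable, Dict


def build_input_tensor_infos(inputs: Any,
                             build_tensor_info: Callable[[Any], Any]) -> Dict[str, Any]:
    """Map signature input names to TensorInfo objects.

    Accepts either a single tensor or a dict of tensors such as
    placeholder_tensor_dict.
    """
    if isinstance(inputs, Mapping):
        # Keep every entry (e.g. 'inputs' plus any side inputs) under its own key.
        return {name: build_tensor_info(t) for name, t in inputs.items()}
    # A bare tensor keeps the 'inputs' key used by the original signature.
    return {'inputs': build_tensor_info(inputs)}
```

Inside write_saved_model, `tensor_info_inputs = build_input_tensor_infos(inputs, tf.saved_model.utils.build_tensor_info)` would then replace the hard-coded `{'inputs': ...}` dict. Passing only placeholder_tensor_dict['inputs'], as suggested above, is the simpler fix if the model has no extra inputs.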


Instead of using this method, I simply ran tensorflow_model_server with the new model path (containing the .pb file) and a REST API port, and I was able to get a response by sending a POST request to the REST API port:

```python
requests.post("http://localhost:8501/v1/models/object_detection:predict", data=data)
```
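The `data` passed to that POST has to follow TF Serving's REST predict format. The sketch below builds a row-format ("instances") body for a model exported with `--input_type=encoded_image_string_tensor`, where TF Serving expects binary strings to be base64-encoded and wrapped as `{"b64": ...}`. The helper name is hypothetical and `serving_default` is assumed to be the signature name (the default serving signature key); for `--input_type image_tensor` you would send the pixel array instead.

```python
import base64
import json
from typing import List


def build_predict_body(encoded_images: List[bytes],
                       signature_name: str = "serving_default") -> str:
    """Build a JSON body for TF Serving's REST :predict endpoint."""
    instances = [
        # Binary inputs must be base64-encoded and wrapped in {"b64": ...}.
        {"b64": base64.b64encode(img).decode("ascii")}
        for img in encoded_images
    ]
    return json.dumps({"signature_name": signature_name,
                       "instances": instances})
```

For example, with a hypothetical image.jpg: `requests.post("http://localhost:8501/v1/models/object_detection:predict", data=build_predict_body([open("image.jpg", "rb").read()]))`.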

But while looking for a solution to an issue I'm facing right now, I landed on this thread. I want to serve my custom object detection model using TensorFlow Serving. I followed various solutions and converted my .ckpt checkpoint to a .pb model along with the variables folder. I changed the exporter.py file and got my model in this format.

What I am struggling with is getting correct predictions: when I run tensorflow_model_server at localhost:9000 and send a POST request to it with an expanded image array, I get exactly the same output for different input images.

Does anybody have a clue why I am getting exactly the same response for every input image?

System information

Describe the problem

The above command runs without errors. However, it only generates a saved_model.pb file and an empty variables directory. According to https://tensorflow.github.io/serving/serving_basic.html, the variables directory should contain variables.data-?????-of-???? and variables.index files. Is this normal? Generating a model for inference and testing works without problems.