@ehsan2211 TF 1.1 is an older version. Please try the latest Python version (TF 1.15) and convert the model to TFJS again.



The model works in Python. I tried loading and re-saving it with TF 2 and TF 1.6 on Colab. The final converted model leaks in TFJS unless I use a TFJS version older than 1.0.0.

(Email reply to Rajeshwar Reddy T's comment of Fri, Jan 31, 2020, 14:28.)

Any update on this issue? I have found that the problem is not related to the TF version. Even a small Segnet (model 1) trained in TF2 and converted to TFJS leaks; see below. I think the problem is the up_sampling2d layer, since it does not exist in the classifier net (model 2), which I use without any problem.

This model (trained in TF2 and converted to TFJS) leaks in TFJS versions above 0.15.3, but not in Python TF!

Model 1: "Segnet model"

Total params: 44,816

Trainable params: 44,322

Non-trainable params: 494

This model does not leak in the latest TFJS or in Python TF!

Model 2: "model"

Total params: 3,545,278

Trainable params: 3,542,924

Non-trainable params: 2,354


Hi,

Is it possible to share code that reproduces the problem? If the model is proprietary, can you share it with randomized weights? Thanks!

I just checked upSampling2d; it doesn't have a memory leak. See this CodePen example: https://codepen.io/na-li/pen/yLNKOxX

Thanks Na! @ehsan2211 does the memory leak show up for the latest (1.7.0) version of TF.js?


@dsmilkov I can send you the models, but it seems that I cannot upload them here.


Both models leak in tfjs 1.7.

@dsmilkov BTW, the code I use is very simple, based on the TFJS MobileNet example:

```javascript
"use strict";

import * as tf from '@tensorflow/tfjs';

export const IMAGE_WIDTH = 640;
export const IMAGE_HEIGHT = 480;

export let lenet;

const demoStatusElement = document.getElementById('status');
export const status = msg => demoStatusElement.innerText = msg;
const predictionsElement = document.getElementById('predictions');

const LENET_MODEL_PATH = 'model/tf2/tfjs16_seg_tf2_y5_layers/model.json';

const lenetDemo = async () => {
  status('Loading model...');
  console.log(tf.version);
  lenet = await tf.loadLayersModel(LENET_MODEL_PATH, {strict: true});
  // lenet = await tf.loadGraphModel(LENET_MODEL_PATH, {strict: true});

  lenet.summary();
  console.log('Prediction from loaded model:');
  // Run one warm-up prediction on an all-zeros image and dispose the output.
  lenet.predict(tf.zeros([1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])).dispose();
  console.log('Successfully loaded second model');
  status('segmenter successfully loaded!');

  document.getElementById('file-container').style.display = '';
};

lenetDemo();
```

Hi @ehsan2211, I created a model in CodePen that contains the minimal set of layers in the model you shared here, and it doesn't seem to leak. But maybe I missed something; please take a look here: https://codepen.io/na-li/pen/yLNKOxX

We'd also like to know which backend you used (node, cpu, webgl, or wasm). Also, how did you find the leak: does it leak after repeatedly loading the model, or when using the model to predict? What tools did you use to detect the leak, e.g. tf.memory() or Chrome DevTools' memory usage?

Thanks.
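One way to answer the "how did you find the leak" question with `tf.memory()` is to count live tensors around repeated calls. The sketch below is a hypothetical helper (`measureTensorLeak` is not part of TF.js); it takes the `tf` namespace as a parameter so it does not assume a particular import, and it relies only on `tf.memory().numTensors` and `tf.getBackend()`. A warm-up call runs first so one-time allocations are not counted as leaks.

```javascript
// Hypothetical helper (not a TF.js API): estimates how many tensors each call
// of `step` leaves alive. `tf` is the TF.js namespace, passed in as a parameter.
function measureTensorLeak(tf, step, runs = 5) {
  // Warm-up: the first call may allocate one-time resources.
  step();
  const before = tf.memory().numTensors;
  for (let i = 0; i < runs; i++) {
    step();
  }
  const after = tf.memory().numTensors;
  return {
    backend: tf.getBackend(),              // e.g. 'webgl', 'cpu', 'wasm'
    leakedPerRun: (after - before) / runs, // 0 means no tensors left behind
  };
}
```

Assuming `import * as tf from '@tensorflow/tfjs'` and the `lenet` model loaded in the snippet above, `measureTensorLeak(tf, () => lenet.predict(tf.zeros([1, 480, 640, 1])).dispose())` would be expected to report at least 1 leaked tensor per run (the undisposed `tf.zeros` input); anything beyond that would point at the model itself.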

Hi @lina128, I'll try to explain the whole process below:

1. Convert the Keras models to TFJS format using tensorflowjs_converter (I tried different versions and settings, even with `--quantization_bytes 1`):
   1) segnet_1 (trained using TF 1.1): https://drive.google.com/file/d/1oePxzLkabYBIjGffDj2HOQZk1obSod5t/view?usp=sharing
   2) segnet_2 (trained using TF2): https://drive.google.com/file/d/11Radc6EbQeCR6PhdbviB7t6ieXoapz_H/view?usp=sharing
   3) classnet (trained using TF 1.1): https://drive.google.com/file/d/17ctckx--Dyt2H19_GVdRvdL94kmL438U/view?usp=sharing

2. Load the models and perform a simple predict using the code below:

```javascript
import * as tf from '@tensorflow/tfjs';

export const IMAGE_WIDTH = 640;
export const IMAGE_HEIGHT = 480;
export let lenet;

const demoStatusElement = document.getElementById('status');
export const status = msg => demoStatusElement.innerText = msg;
const predictionsElement = document.getElementById('predictions');
const LENET_MODEL_PATH = 'model/tf2/tfjs16_seg_tf2_y5_layers/model.json';

const lenetDemo = async () => {
  status('Loading model...');
  console.log(tf.version);
  lenet = await tf.loadLayersModel(LENET_MODEL_PATH, {strict: true});
  // lenet = await tf.loadGraphModel(LENET_MODEL_PATH, {strict: true});
  lenet.summary();
  console.log('Prediction from loaded model:');
  // up to here everything is fine
  lenet.predict(tf.zeros([1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])).dispose(); // this leaks
  console.log('Successfully loaded second model');
  status('segmenter successfully loaded!');

  document.getElementById('file-container').style.display = '';
};

lenetDemo();
```

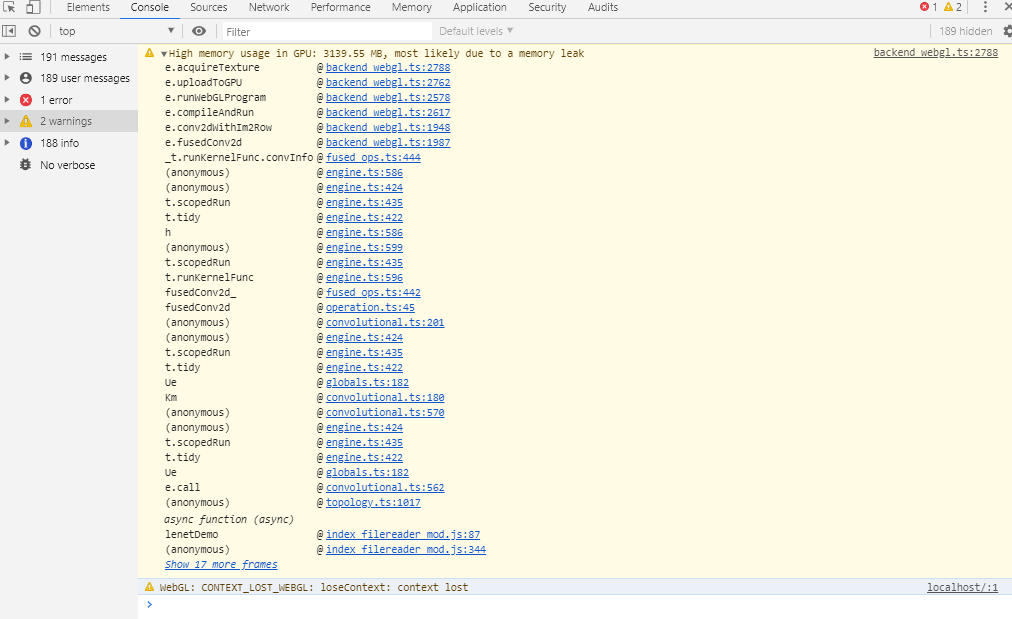

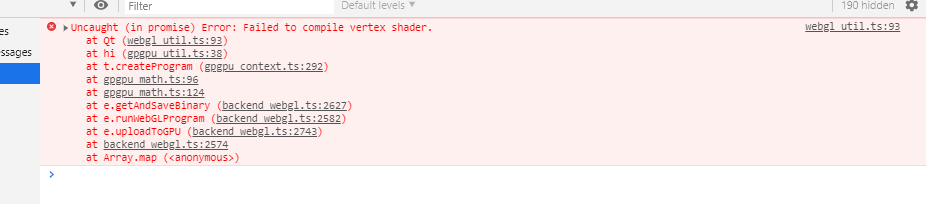

3. The following is the results if I load the segnets (not the classnet) and use tfjs newer than 0.15.3

One comment about this line:

`lenet.predict(tf.zeros([1, IMAGE_HEIGHT, IMAGE_WIDTH, 1])).dispose(); // this leaks`

This will dispose of the tensor returned from `predict`, but not the input tensor created by `tf.zeros`. Try assigning that to a variable and disposing of it after the prediction is done.
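A minimal sketch of that suggestion, assuming `model` is a single-output `tf.LayersModel`: `predictDisposingInput` keeps a handle on the input and frees it explicitly, while `predictWithTidy` does the same with `tf.tidy()`, which disposes every tensor created inside its callback except the one returned. Both function names are hypothetical, and `tf` is passed in as a parameter rather than imported.

```javascript
// Hypothetical helpers; `tf` is the TF.js namespace and `model` a
// single-output tf.LayersModel. The caller still owns (and must dispose)
// the returned prediction tensor.

// Explicit disposal: keep a reference to the input so it can be freed.
function predictDisposingInput(tf, model, shape) {
  const input = tf.zeros(shape);
  const output = model.predict(input);
  input.dispose(); // the input is no longer needed once predict() returns
  return output;
}

// tf.tidy(): tensors created inside the callback are disposed automatically,
// except the one returned from it.
function predictWithTidy(tf, model, shape) {
  return tf.tidy(() => model.predict(tf.zeros(shape)));
}
```

`tf.tidy()` only accepts a synchronous callback, which works here because `model.predict()` is synchronous; reading the values (for example with `await y.data()`) and calling `y.dispose()` happen outside it.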

Hi @tafsiri, it makes no difference. The memory leak happens at the same line.

Closing this due to lack of activity; feel free to reopen. Thank you.

Hi @ehsan2211, we now have a benchmark tool that you can use to benchmark model performance; it gives detailed information on memory usage, time spent in each op, and inference performance: https://tensorflow.github.io/tfjs/e2e/benchmarks/ In the right panel, for the models field, choose the custom option and then provide your model.json URL (the weight files, ending in .bin, should be in the same directory). If you have a chance to try this, let us know the result, and we can debug the possible memory leak from there.

Btw, I'm not able to use the Drive link you shared; it seems to be a binary. If you can share the folder that has the model.json and all the weight files, that would be great. Sorry for the slow response; we are trying to catch up with community requests. Thank you for understanding.

Hi @ehsan2211, I just figured out that the link you shared has the original model. So I converted the first model to TFJS format and used our benchmark tool to test it. The result shows that inference is indeed slow and memory usage is large, but there is no leaked tensor. We further checked that this model uses 14 conv2d ops, which are the source of the slowness. Conv2d is expensive, so a model with many conv2d ops may not be suitable for running on edge devices. We suggest you consider optimizing the model for edge use.
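To see how many convolution layers a converted Layers model contains, one option is to tally `model.layers` by class name. The sketch below is a hypothetical helper (`countLayersByClass` is not part of TF.js) that relies on `LayersModel.layers` and each layer's `getClassName()`. It counts layers rather than ops, so it applies to models loaded with `tf.loadLayersModel` (not graph models), and a nested sub-model would be counted as a single layer.

```javascript
// Hypothetical helper: tallies the layers of a tf.LayersModel by class name
// and returns an object mapping each class name (such as 'Conv2D') to a count.
function countLayersByClass(model) {
  const counts = {};
  for (const layer of model.layers) {
    const name = layer.getClassName();
    counts[name] = (counts[name] || 0) + 1;
  }
  return counts;
}
```

For the `lenet` model from the earlier snippet, `countLayersByClass(lenet).Conv2D || 0` would give its number of Conv2D layers.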

This issue has been automatically marked as stale because it has not had recent activity. It will be closed in 7 days if no further activity occurs. Thank you.

Closing as stale. Please @mention us if this needs more attention.

To get help from the community, we encourage using Stack Overflow and the tensorflow.js tag.

TensorFlow.js version

tfjs 1.0.0 and above

Browser version

all

Describe the problem or feature request

I have a Segnet model (around 3 million parameters) trained in TF 1.1 (Python). The converted model leaks even with simple inference (`model.predict(tf.zeros([1, im_w, im_h, 1]))`). When I use TFJS older than 1.0.0, no leak happens! I tested different versions of tensorflowjs for converting the model, with no success. I hope you can fix the bug, since it limits users to older versions of TFJS. FYI, when I convert a classifier of the same size (trained on the same platform), no leak happens!

Code to reproduce the bug / link to feature request
