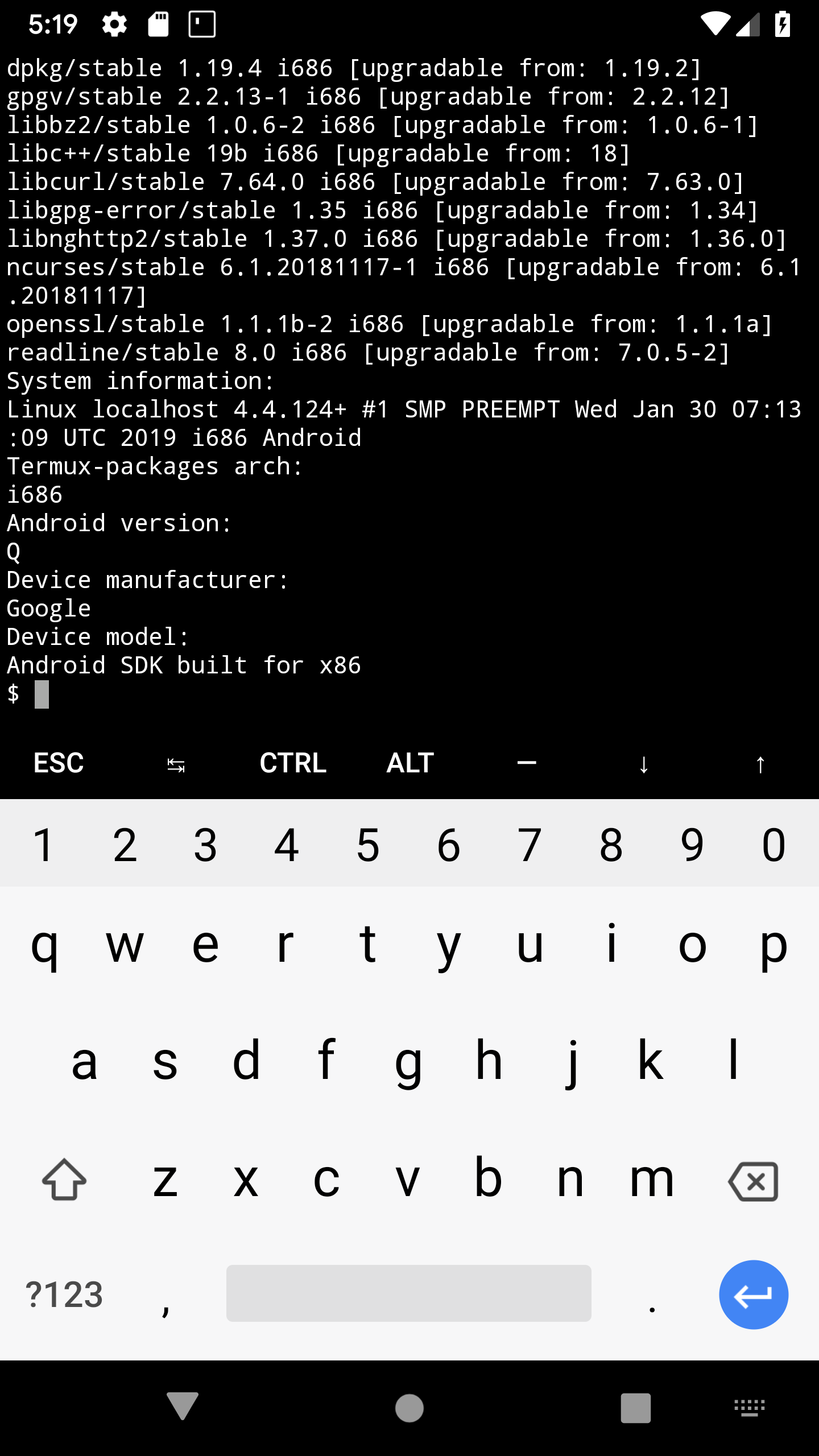

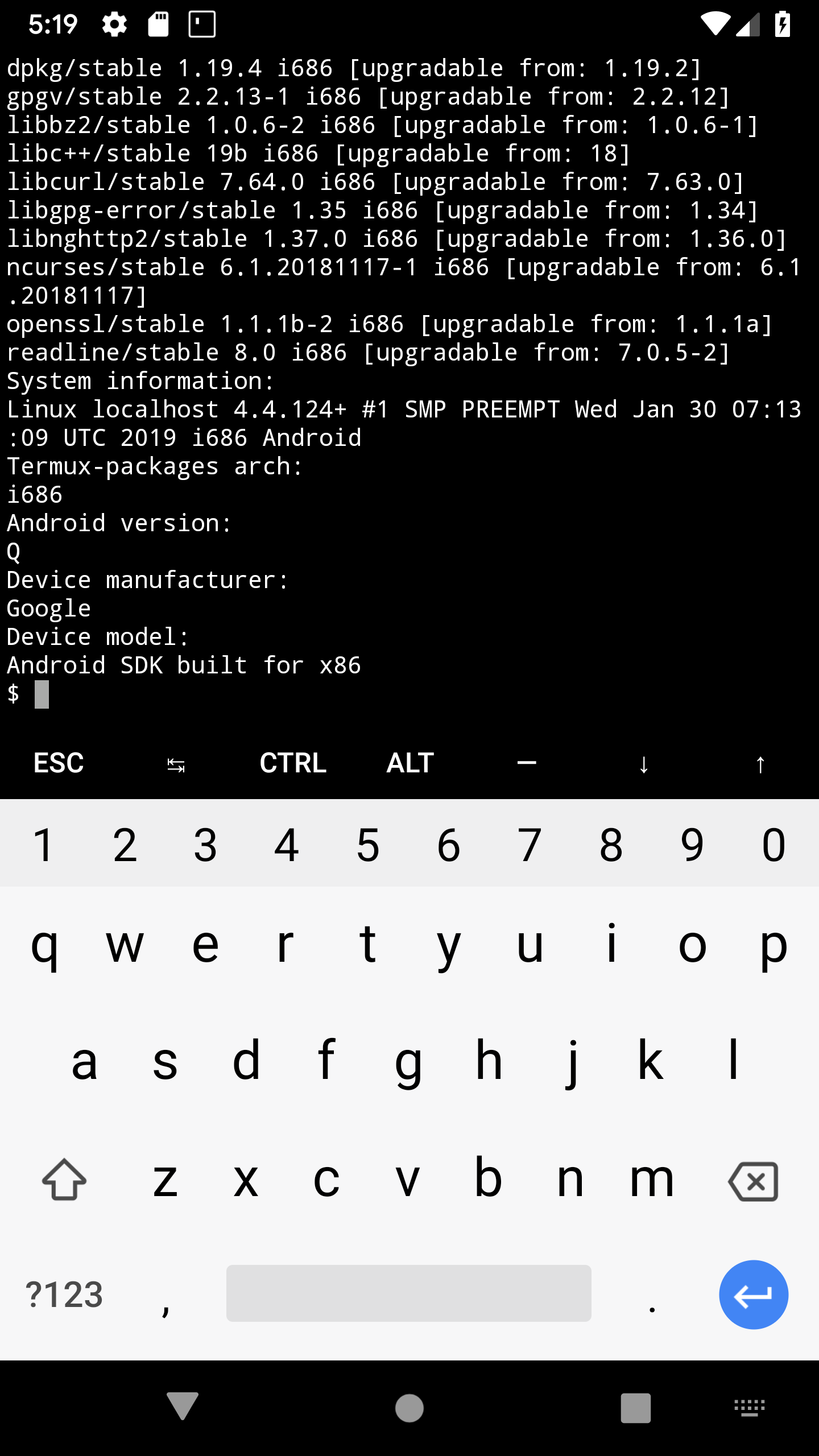

This is definitely the worst thing that could happen. Though, in an AVD with the Android Q Preview, Termux still works:

Closed n0n3m4 closed 3 years ago

I brought up that this was forbidden and would likely become part of the SELinux policy last year (9 Apr 2018): https://github.com/termux/termux-app/issues/655.

As I stated then,

They rewrote the policy to be much more explicit about this being disallowed, and it can be expected that they'll not only act on it for the Play Store but via SELinux policy.

They intend to support cases like Termux by having apps like Termux upload their packages as separate apps to the Play Store, with it initiating user installs / uninstalls of those apps.

Staying ahead of the game (i.e. future SELinux policy enforcement) would involve not extracting the executables manually but rather running them from where the apk package installer extracts libraries. Being able to execute app_data_file either as an executable or a library is on the way out in the long term.

It doesn't fundamentally break Termux, but rather Termux would need to switch to an alternative approach for distributing packages. Using apt within the app sandbox to install code isn't going to be viable anymore. The restrictions are no doubt going to be tightened up in the future too, and it can be expected that libraries will need to be mapped from the read-only app data directory or apk too, rather than the read-write home directory working. It wouldn't be necessary to split absolutely everything into assorted apks, but rather there could be apks for groups of related packages based on size. Termux can initiate the apk installs itself too, after prompting the user to allow it as an unknown source. It's an inconvenience for sure, but not a deal breaker.

Someone there accused me of concern trolling, but I was genuinely giving an insider heads up about likely changes on the horizon bringing w^x enforcement for data in storage. I use Termux and also work on Android OS security, so I wanted to have a conversation about how this could be addressed long before it was going to become a problem. I provided enforcement of the same kind of restrictions in my own downstream work, but with a toggle to turn it off for apps like Termux. I would have loved to have an alternate option for installing packages compatible with the security feature, and that would have prepared Termux for the eventual enforcement of this feature upstream.

It's worth noting that there are still holes in the SELinux policy, which can be used to bypass it by jumping through some hoops, but the intention is clearly to move towards enforcing w^x and enforcing the official rules via the implementation. See https://play.google.com/about/privacy-security-deception/malicious-behavior/.

> The following are explicitly prohibited:
>
> [...] Apps or SDKs that download executable code, such as dex files or native code, from a source other than Google Play. [...]

Termux could also ship the necessary scripting for generating a Termux package apk from executables/libraries so that users could create their own and continue running their own code, albeit less conveniently.

@xeffyr This only applies to apps targeting API > 28. Termux will continue to work indefinitely as long as it continues to target API 28. However, there's a timeline for phasing out old API targets on the Play Store about a year after they're introduced. It then becomes impossible to upload a new version of the app without updating the target API level.

https://developer.android.com/distribute/best-practices/develop/target-sdk

Distributing the app outside the Play Store would still be possible, but the OS has the concept of a minimum supported target API level already (ro.build.version.min_supported_target_sdk). It's currently 17 in Android P and is being increased to 23 in Android Q. It currently only produces a warning, but it won't necessarily remain that way. They don't want targeting an old API level or distributing outside the Play Store to be a loophole for bypassing privacy and security restrictions tied to API level.
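The two API-level rules discussed above can be summarized in a small Python sketch. The numbers (28/29 for exec from app data, 17 and 23 for `ro.build.version.min_supported_target_sdk`) come from this thread; the function and dictionary names are made up for illustration and are not part of any Android API.

```python
# Values quoted in this thread; the names below are illustrative only.
MIN_SUPPORTED_TARGET_SDK = {"P": 17, "Q": 23}

# First target API level for which exec() from app data is denied.
FIRST_API_WITHOUT_APP_DATA_EXEC = 29


def can_exec_from_app_data(target_sdk: int) -> bool:
    """True if an app with this targetSdkVersion may still exec app_data_file."""
    return target_sdk < FIRST_API_WITHOUT_APP_DATA_EXEC


def install_warns(target_sdk: int, android_release: str) -> bool:
    """True if the OS release would warn that the app targets a too-old API."""
    return target_sdk < MIN_SUPPORTED_TARGET_SDK[android_release]


if __name__ == "__main__":
    for sdk in (22, 28, 29):
        print(sdk, can_exec_from_app_data(sdk), install_warns(sdk, "Q"))
```

Under these assumptions, an app that stays on API 28 keeps exec from its data directory and does not trigger the old-target warning on Android Q.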

@thestinger

> Someone there accused me of concern trolling

I apologize for how issue https://github.com/termux/termux-app/issues/655 was received. I guess people freaked out and decided to shoot the messenger. We should have moderated it better.

> It's currently 17 in Android P and is being increased to 23 in Android Q

I guess we have a year or two (at least) to figure out how to deal with this, then.

You can probably stay at API 28 until around November 2020 for the Play Store assuming the timeline at https://developer.android.com/distribute/best-practices/develop/target-sdk is followed again for API 29.

It would be even longer until using API 28 broke outside the Play Store via a stricter future version of ro.build.version.min_supported_target_sdk doing more than warning. Probably at least 2 years and likely significantly longer.

So, it's not like it's going to suddenly break when Android Q comes out, since it's only for API 29+.

@thestinger

> Termux could also ship the necessary scripting for generating a Termux package apk from executables/libraries so that users could create their own and continue running their own code, albeit less conveniently.

That is horrible in terms of usability. Also, wouldn't the APKs need to be signed with the original APK key?

Not only signed with the original key. It seems that executables will have to be placed in the native lib directory:

> While exec() no longer works on files within the application home directory, it continues to be supported for files within the read-only /data/app directory. In particular, it should be possible to package the binaries into your application's native libs directory and enable android:extractNativeLibs=true, and then call exec() on the /data/app artifacts. A similar approach is done with the wrap.sh functionality, documented at https://developer.android.com/ndk/guides/wrap-script#packaging_wrapsh .

Termux compiled with targetSdkVersion 29:

Exec is allowed from native lib directory:

But this directory is read-only.

Distributing packages in APKs will require either publishing hundreds of these APKs to Google Play / F-Droid or putting multiple packages into a single APK up to the maximum size (~100MB). Technically it is possible to have very large APKs, but they cannot be distributed through Google Play and maybe not through F-Droid.

But anyway, distributing all packages in APKs is weird:

Also, we should not forget that the user environment will be broken:

pip, gem, cpan will be broken.

Python, Ruby, Perl, etc. code written by users will still work without a hassle because it's not native code. They won't be able to execute it as ./script.py but they can still run it as python3 script.py. I don't see any way that would ever stop working. Libraries without native code are also going to continue working without being in the native library directory, since they don't get mapped as executable.
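As a rough desktop analogy (not Android-specific), the difference between `./script.py` and `python3 script.py` can be reproduced on a POSIX system by removing the execute bit from a script. On Android Q the denial comes from SELinux policy rather than file mode bits, so this only illustrates why the interpreter path keeps working: the kernel executes the interpreter, and the script is just data that it reads. The helper name `run_both_ways` is hypothetical.

```python
import os
import subprocess
import sys
import tempfile


def run_both_ways(script_body: str):
    """Try to exec a script directly, then run it through the interpreter."""
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "script.py")
        with open(path, "w") as handle:
            handle.write("#!/usr/bin/env python3\n" + script_body)
        os.chmod(path, 0o644)  # readable and writable, but not executable

        # Direct execution (the "./script.py" case): the kernel refuses.
        try:
            subprocess.run([path], check=True, capture_output=True)
            direct = "ran"
        except PermissionError:
            direct = "denied"

        # Interpreter execution (the "python3 script.py" case): only the
        # interpreter binary is executed; the script is read as data.
        via_interpreter = subprocess.run(
            [sys.executable, path], check=True, capture_output=True, text=True
        ).stdout.strip()
        return direct, via_interpreter


if __name__ == "__main__":
    print(run_both_ways("print('hello from the interpreter')\n"))
```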

In the future, native libraries will likely all need to be in the native library directory to work but for API 29 they're only enforcing it for executables. I expect the enforcement will happen for native libraries next year, since they already added an auditallow rule to warn in the logs when it occurs.

> Distributing packages in APKs will require either publishing hundreds of these APKs to Google Play / F-Droid or putting multiple packages into a single APK up to the maximum size (~100MB). Technically it is possible to have very large APKs, but they cannot be distributed through Google Play and maybe not through F-Droid.

Yes, that's what I was saying would need to happen: bundling groups of related packages inside of shared uid apks signed with the same key. For users to be able to extend it with their own native code, they would need to have their own build of Termux signed with their own key, along with scripting for generating new extension apks out of their code. There might be a better approach than my suggestion. It was just intended as a starting point.
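One possible way to form such groups purely by size is first-fit decreasing bin packing. The sketch below is an assumption about how grouping could work, not something Termux implements; the package names and sizes are invented, the 100 MB cap is the approximate figure mentioned above, and relatedness between packages is not modeled.

```python
def group_packages(sizes_mb: dict, cap_mb: float = 100.0) -> list:
    """Group packages into as few APK-sized bins as first-fit decreasing finds."""
    bins = []  # each bin is [remaining_capacity_mb, [package names]]
    # Largest packages first; ties are broken by name for stable output.
    for name, size in sorted(sizes_mb.items(), key=lambda kv: (-kv[1], kv[0])):
        if size > cap_mb:
            raise ValueError(f"{name} ({size} MB) exceeds the {cap_mb} MB cap")
        for current in bins:
            if current[0] >= size:
                current[0] -= size
                current[1].append(name)
                break
        else:
            # No existing bin has room, so open a new one.
            bins.append([cap_mb - size, [name]])
    return [names for _, names in bins]


if __name__ == "__main__":
    # Hypothetical package sizes in MB.
    example = {"clang": 60, "python": 40, "git": 30, "vim": 20, "bash": 5}
    print(group_packages(example))
```

A real grouping would probably also want to keep dependent packages together, which this size-only heuristic ignores.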

It will also still be possible to interpret native code from the app data directory. For example, I think valgrind executable_in_app_data will continue working, even though ./executable_in_app_data will not, since valgrind acts as a machine code interpreter. Similarly, running native code inside QEMU would obviously still work.

Is this a potential use case for Dynamic Delivery using App Bundles?

A dynamic feature module could be either a bundled group of related packages, or, if still feasible, each separate package. The submitted app bundle would include all available packages, where the base installation is only core packages, and pkg install would request the dynamic feature from Google Play.

This would likely require a pretty robust build system to keep the Google Play listing's packages in parity with termux-packages, perhaps as a separate listing from termux-core.

I see this as a way to comply with Google Play while still allowing a package manager-like function, potentially with another mechanism for installations outside of Google Play or from source that more closely resembles the current mechanism.

> Is this a potential use case for Dynamic Delivery using App Bundles?

It could work, if dynamic feature modules are able to ship additional executables / libraries to be extracted into the native libraries directory. It's not clear if that's possible. It appears the limit is 500MB via an app bundle, rather than 100MB, so it's a limited solution:

> Publishing with Android App Bundles also increases the app size limit to 500MB without having to use APK expansion files. Keep in mind, this limit applies only to the actual download size, not the publishing size. So, users can download apps as large as 500MB and, with dynamic delivery, all of that storage is used for only the code and resources they need to run the app. When you combine this with support for uncompressed native libraries, larger apps, such as games, can reduce disk usage and increase user retention.

> I see this as a way to comply with Google Play while still allowing a package manager-like function, potentially with another mechanism for installations outside of Google Play or from source that more closely resembles the current mechanism.

I don't think it's going to be able to be much different outside the Play Store, since the SELinux policy restrictions on executing only read-only native code still apply for apps outside the Play Store and Android variants without the Play Store or Play Services. It would make sense to work around this issue at the same time as coming into compliance with the Play Store policy on downloading executable code, but they don't necessarily need to be solved at the same time. I think it would be best to choose a path that will work both inside and outside the Play Store. I don't know how realistic it is to support the app bundle and dynamic feature modules outside Play, since there isn't an existing distribution option with support for it and it's not clear how much is available without Play Services, etc.

Sure, I suppose my suggestion was intended more as a solution to the SELinux policy restrictions by using Google Play, but it does hinge on 1) the ability to ship additional executables (I suspect their example of games would make that true, but it is still a question) and 2) reliance on Google Play as a complete distribution system and being subject to their rules and whims.

It's a difficult problem, no doubt. I suspect that the timing of App Bundles and the native executable restriction policy are not entirely unrelated, and a solution to downloading and executing arbitrary native code without going through Google Play isn't immediately obvious.

> bundling groups of related packages inside of shared uid apks signed with the same key.

This seems to be the quickest and simplest solution at this point, with the above-mentioned restrictions, which may annihilate realistic usability.

What if you bundled PRoot (and busybox and the bare minimum required to get a PRooted system set up and running) with the app itself and then had everything run through PRoot? Could PRoot be modified to allow executing outside of the lib directory? It has been proven twice before to be able to get around limitations of the sdcard being mounted noexec.

This is the most recent example of how PRoot can defeat noexec: https://github.com/termux/proot/commit/ebcfe01744a4a288df38f76c24a60ae8ab978240

Notice what is not blocked here: https://android-review.googlesource.com/c/platform/system/sepolicy/+/804149

There would be a performance impact of course, but maybe it allows the party to go on.

I work on UserLAnd and we always use PRoot. This is where my mind went when I heard of this limitation. Thought I would share.

If proot works, this means that we can leave packages as-is. Proot also means that Termux will no longer need to be prefixed, which is good since a lot of patches can be dropped and users will have less headache compiling software.

Will try to package proot into Termux APK and run on Android Q AVD to test this.

I think that proot (or other theoretically possible packages like this) will not be broken until Google forbids any kind of JIT in 3rd-party applications, so this is a very nice idea. I'm still not sure if Google will ever dare to do such a thing.

> forbids any kind of JIT

If Google does this, it will have to forbid JavaScript in browsers too.

PRoot will certainly execute from the app's lib directory, just like busybox did above, but you will need to play with the proot version I mentioned above. It is @michalbednarski's work, so he might be able to comment on how suited he thinks it might be for this purpose.

@corbinlc The original proot worked. Even the anti-noexec extension is not needed.

So under proot everything works fine:

No need to distribute packages in APKs or bundles. All that is needed is to launch the shell under proot.

> Notice what is not blocked here: https://android-review.googlesource.com/c/platform/system/sepolicy/+/804149

Note that execute for app_data_file (mapping as PROT_EXEC) had an auditallow rule added after this commit as they're planning on removing that in the near future, perhaps next year. It will currently log audit warnings leading up to the likely eventual removal.

The remaining holes in the SELinux policy are tied to the ART JIT and other JIT compilers: ashmem execute (an implementation detail that could change) and execmem (in-memory rwx code and rw -> rx transitions). ART supports using full AOT compilation instead of the JIT compiler, but it's not their current choice.

Chromium-based browsers including the WebView use execmem in the isolated_app domain, not untrusted_app, so even without an Apple-like approach only permitting the bundled browser and WebView for other apps to use, that wouldn't be a direct blocker. I would be surprised if they removed execmem for untrusted_app in the near future, but it could happen over the longer term, especially if they simply restrict it to a variant of isolated_app.

The original issue that I filed (https://github.com/termux/termux-app/issues/655) was about the general problem of this being in violation of their policy, before there was a partial implementation of a technical mechanism enforcing it for native code. There can never be full enforcement at a technical level since interpreters will continue to work even once native code execution is forbidden.

> If Google does this, it will have to forbid JavaScript in browsers too.

They permit JavaScript via the exception in the policy for isolated virtual machines:

> An app distributed via Google Play may not modify, replace, or update itself using any method other than Google Play's update mechanism. Likewise, an app may not download executable code (e.g. dex, JAR, .so files) from a source other than Google Play. This restriction does not apply to code that runs in a virtual machine and has limited access to Android APIs (such as JavaScript in a webview or browser).

https://play.google.com/about/privacy-security-deception/malicious-behavior/

The result at a technical level would be that they need to keep around execmem for a variant of the isolated_app SELinux domain used by browsers, but not untrusted_app. At the moment, both isolated_app and untrusted_app permit execmem, but Chromium doesn't use it in untrusted_app. ART uses the untrusted_app execmem when configured to do JIT compilation via the relevant system properties. It's fully optional though, and still supports full AOT compilation as was done in Android 6.

@xeffyr I guess that makes sense, since PRoot already deals with execve. The new work is only needed for files on a noexec partition. That is great news for everyone.

@thestinger interesting comment about them potentially removing the ability to mmap(PROT_EXEC) for files outside of the app's lib directory in the future. I guess we live to fight another day (or more likely more than a year).

Do you know if they are planning on preventing mprotect(PROT_EXEC)? Where do you see the audit call you mentioned related to mmap(PROT_EXEC)? I don't know where these exist in the Android source tree.

Files in the app home directory are labelled as app_data_file and this is the audit rule generating warnings for mapping the data as executable:

They add auditallow rules leading up to eventually removing it from the policy. You can try loading a library from app data with API 29 and you'll see an audit warning in the logs.

In-memory code generation via rwx pages or rw -> rx transitions is classified as execmem, which is something they'd like to remove in the long term but it's currently used by the ART JIT. It's not used by Chromium within untrusted_app domains, since it only does that within the sandboxed renderers which use isolated_app domains. They could remove it from the main isolated_app and require that apps opt in to having it available via a special domain. It should be expected that it will eventually happen, since this is a very standard form of security hardening and they've been slowly moving towards it.
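On a regular Linux system you can probe whether a process may create the kind of anonymous writable and executable mapping that SELinux classifies as execmem. This is a minimal sketch using Python's `mmap` module on a POSIX system, not Android code; `can_map_anonymous_rwx` is a hypothetical helper name, and the result depends on the kernel and any policy in force.

```python
import mmap


def can_map_anonymous_rwx(size: int = mmap.PAGESIZE) -> bool:
    """Return True if an anonymous read/write/execute mapping can be created."""
    prot = mmap.PROT_READ | mmap.PROT_WRITE | mmap.PROT_EXEC
    flags = mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS
    try:
        # fileno -1 requests anonymous memory instead of a file-backed mapping.
        mapping = mmap.mmap(-1, size, flags=flags, prot=prot)
    except OSError:
        # A policy that denies writable+executable memory surfaces here.
        return False
    mapping.close()
    return True


if __name__ == "__main__":
    print("anonymous rwx mapping allowed:", can_map_anonymous_rwx())
```

A JIT compiler needs a mapping like this (or an rw -> rx transition), which is why removing execmem for a domain effectively rules out JIT compilation there.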

They already removed execmem for some of the base system including system_server. Their security team would obviously like to remove it everywhere, but for now the performance vs. memory vs. storage usage characteristics of mixed AOT and JIT compilation for ART are winning out over the security advantages of pure AOT compilation with execmem disallowed.

The only reason they're starting with requiring executables to be in the native library directory rather than native libraries (which is the main purpose of the directory) is that restricting native libraries will impact more apps, so that transition will be more painful. Apps can also disable native library extraction and map native libraries from the apk directly, since the linker supports mapping libraries from zips directly as long as they meet the requirements (page aligned and not compressed). They have a pass for properly aligning them in the apk build system.
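The two requirements named above (stored without compression, data starting on a page boundary) can be checked from outside with a short script. This is a sketch under assumptions: it treats an apk as a plain zip, assumes a 4096-byte page size, and the function name `mappable_entries` is invented. It is not the check the Android linker or build tools perform.

```python
import struct
import zipfile

PAGE_SIZE = 4096  # assumed page size
LOCAL_HEADER_SIZE = 30  # fixed part of a zip local file header


def mappable_entries(apk_path: str) -> dict:
    """Map each entry name to True if it is stored and page aligned."""
    result = {}
    with zipfile.ZipFile(apk_path) as archive, open(apk_path, "rb") as raw:
        for info in archive.infolist():
            # Name and extra-field lengths live at bytes 26..29 of the local
            # header; the local extra field can differ from the central one.
            raw.seek(info.header_offset)
            header = raw.read(LOCAL_HEADER_SIZE)
            name_len, extra_len = struct.unpack("<HH", header[26:30])
            data_offset = info.header_offset + LOCAL_HEADER_SIZE + name_len + extra_len
            stored = info.compress_type == zipfile.ZIP_STORED
            result[info.filename] = stored and data_offset % PAGE_SIZE == 0
    return result


if __name__ == "__main__":
    import sys

    for name, ok in mappable_entries(sys.argv[1]).items():
        print(("ok   " if ok else "no   ") + name)
```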

It will actually log the warning about native libraries in the data directory for all API levels.

Here's where they continue to allow running executables from app_data_file for API < 29:

Even though it's allowed, they do still log audit warnings about it for API < 29.

Also you can see that for API < 26, even more is permitted, since they've tightened up these policies for API 26+ in the past too:

For example, API 26+ forbids execmod which means things like rx -> rw -> rx transitions for files mapped from storage. You can only do those kinds of transitions in-memory via execmem now. They've removed execmem for a lot of the base system but not yet apps, which is as I mentioned primarily due to the ART JIT and it'd also be a major backwards incompatible change. Finishing this up by removing the remaining 3 or so holes in the policy is definitely on their radar. It's just hard to get it done due to compatibility issues and performance/memory/storage vs. security tradeoffs made elsewhere that they'd need to decide are less important (and I definitely think they will, eventually).

This is great info @thestinger

One more thing to mention: Google is planning to deny any type of exec. Read here: https://issuetracker.google.com/issues/128554619

> Relying on exec() may be problematic in future Android versions.

The steps they took:

So any solution implemented here will probably not work next year or even earlier. Essentially they will kill apps like Termux, server apps, ... unless you build everything using jni / ndk.

> Essentially they will kill apps like Termux, server apps, ... unless you build everything using jni / ndk.

Not kill, if we switch to QEMU (system mode). It should be possible to turn QEMU into a shared library (the Limbo PC app did this) and attach serial consoles as terminals. Performance will be worse, but still usable.

That is, of course, if QEMU doesn't use mmap(PROT_EXEC), execmem or other similar things which may be blacklisted.

> One more thing to mention: Google is planning to deny any type of exec

That's not backed up by what you're linking and I don't think it's true.

> Deny downloaded code execution using policy

Executing downloaded code was denied by policy for a long time too. I only opened an issue when the wording became substantially stronger and considered malicious regardless of why it's done. I think Termux has been in violation of the policy as long as it has existed, but it became more clear that it was serious a year ago.

> Next step would probably be denying any exec, removing Runtime.exec / ProcessBuilder, ... So any solution implemented here will probably not work next year or even earlier. Essentially they will kill apps like Termux, server apps, ... unless you build everything using jni / ndk.

Spawning processes with exec based on the native library directory is fully allowed by their app policy and documented as a supported feature. A random Google engineer on the bug tracker urging you to be cautious about using exec is good advice and doesn't imply there's any plan to remove it. Mapping code from outside the native library directory and apk as exec is definitely on the way out, and class loading is probably on their radar. There is not much they could do about third party interpreters beyond forbidding it in policy. The general policy is that executing arbitrary code not included with the apk is only allowed if it runs in a well sandboxed virtual machine, with the Chromium web sandbox as a baseline to compare since it's allowed through that exception.

They have no security reason to remove it now that they enforce w^x for it. Relying on executing system executables is a bad idea and it'd make sense for them to continue stripping down what is allowed.

By saying it's problematic, they are not saying that it's going to be forbidden, but rather that it doesn't fit well into a model where apps are often killed at any time and then respawned with the expectation that they saved all of their state to be restored, etc. That model can be more aggressive on some devices, which is what makes it problematic. Switching away from the app can result in all these native processes getting killed, potentially losing data. In practice, it's fine, especially with a foreground service to prioritize keeping the app alive. It also applies to a lot more than native processes. A long-running foreground service doesn't conform to the activity life cycle either. Essentially the same potential for problems there.

> Not kill, if we switch to QEMU (system mode). It should be possible to turn QEMU into a shared library (the Limbo PC app did this) and attach serial consoles as terminals. Performance will be worse, but still usable.

I also don't think it's true.

> That is, of course, if QEMU doesn't use mmap(PROT_EXEC), execmem or other similar things which may be blacklisted.

I think QEMU does rely on execmem. I'm not sure that it can be used without it. So, while that will currently work, it may not in the long term. I do think executing files from the native library directory is going to continue working in the long term. Using an emulator is certainly a viable approach though, but obviously slow, especially without execmem at some future point.

The viable long-term approach is using documented ways of doing things, and they have documentation showing how to execute something from the native library directory, along with that being fully supported for native libraries.

> Well, I wish it were not true, but I doubt it given recent actions by Google / Android.

It's an issue tracker thread aimed at productively finding solutions to future compatibility issues starting with API 29. There's no point of being hysterical / dramatic and treating understandable security changes as an unpredictable, draconian crackdown on apps. The compatibility issue is unfortunate. There are trade-offs to changes like this, and this is the downside to it. The upside is making apps more secure against exploitation, while not hurting the vast majority of them.

> It was denied by policy not so long ago - a year or two.

It was the policy that all code was signed and must come from Google Play for a long time. It was stated in multiple locations in different ways, and reworded into stronger wording over time. For example, here's one way the policy was stated in 2013:

> An app downloaded from Google Play may not modify, replace or update its own APK binary code using any method other than Google Play's update mechanism.

The intent is that apps don't execute code that wasn't signed and delivered through Google Play, other than inside a sandboxed virtual machine environment (such as web content).

Yes, it has been possible to play semantic games with all variants of the wording, including the very aggressively worded version introduced in the malicious apps policy. It's best to understand the intent of the policy and comply with that, rather than trying to find loopholes. It has been worded different ways even at the same time, on different policy pages.

> So essentially strip down everything and allow only some games and ad/spam-ware apps to run; that would be really secure. w^x does not change security in most cases on Android because most security/privacy issues are created by pure Java / Kotlin apps.

That's not what I said and I don't know where you're getting that. I said relying on system executables is a bad idea rather than shipping them with the app. For example, using the system toolbox / toybox would be a bad idea even if the SELinux policy allows it. It's not part of the official API.

It remains possible to execute native code, but the implementation permits fewer violations of the policy than it did before, not as an attempt to enforce the policy but to mitigate vulnerabilities in apps.

It's expected that code is shipped in the apk, and either executed from the apk or the native library directory. You aren't supposed to execute Java code from outside an installed apk / app bundle component either. That isn't impacted by SELinux policy right now. They could teach the class loader to enforce rules, but as I mentioned, they fundamentally cannot prevent people from breaking the rules with interpreters via a technical approach.

As I explained above, this has nothing to do with protecting the system from apps or protecting user data from apps but rather improving security for apps themselves. That's certainly something important and is a real world problem. There have been many cases of apps exploited due to them executing code from app data. They introduced a partial mitigation for it, in a way that only truly breaks compatibility with apps they'd already defined as violating their policies. That's why it made sense for them to do this. Yes, it's unfortunate for Termux, but they aren't doing it to be evil.

> You mean "problematic in future" Runtime.exec? :)

I mean exactly what I said. Apps executing system executables are relying on a non-public API and can definitely expect problems, just like using private APIs elsewhere. You're reading something and twisting the meaning into what you want to see based on your already negative outlook. You view these security improvements as something bad. It's a balance between different needs, and the balance is moving towards security. That will make some people upset, but it's what most people want. There has always been this balance in place, and most people think Android was way too permissive with apps and needs to continue changing.

> I think the only viable approach in the future is to compile everything using the NDK and execute it using JNI, but in light of the Fuchsia / Chrome OS changes it is very unclear.

Fuchsia is not Linux and is effectively a different platform. They may end up implementing a very complete layer for Linux binary compatibility. The CTS requires Linux compatibility so if they want full Android support they need to provide it. That's their problem to figure out. Executing native code is still possible there, but it's not Linux and currently isn't compatible with Linux at the ABI or API level. ChromeOS simply ships Android as a whole in a container and provides an Android compatible Linux kernel. It's just another kind of Android device and is CTS compatible.

The low-level portions of Android like Bionic and SELinux policy are entirely developed in the open rather than having code dumps. I also talk with members of their security team fairly regularly. It's not a mystery what they are working on and where they are planning on taking it in the future. It's not surprising that they partially implemented a very standard security mitigation used across other operating systems in various forms (not just iOS), and it's in line with their existing policy. You can look through the SELinux policy repository and see commit messages and code comments describing many of the future breaking changes.

They released the v2 sandbox (targetSandboxVersion) with Nougat and among other things it disabled app_data_file execution as a whole. That was essentially a preview of future changes.

There are a few long-term options. One is figuring out a way to ship code as part of apks and a way to offer users the option of generating an apk wrapping their native code in a usable way. Another is using a virtual machine to run everything, which would also work on non-Linux platforms, but supporting those is probably never going to be necessary. Those platforms would need to implement Linux compatibility to be Android compatible. This would be slower, especially if support for using a JIT compiler in untrusted_app goes away. It could be moved to isolated_app, but that would be hard.

@esminis Your previous comment seems to be mostly a rant. If you are unhappy with the road Google is taking, then I suggest you either complain to your local Google office or stop using (official) Android altogether. Complaining here won't change anything.

> You can do any mental gymnastics you want here but it does not forbid downloading and running third party code, only modifying already installed APK. And yes later they updated policy with malicious behavior, that denied third party code download / execution.

It's not possible for an app to modify the installed APK at a technical level. The policy used to state that apps couldn't modify, replace or update the code (extending it counts as updating it too, as bringing in the new code is an update). Apps obviously can't touch the APK itself, since the OS doesn't permit them to do that. Their policies are not listing out things that are implemented by the OS sandbox and permission model, because you're forbidden from exploiting it in general.

I think it's ridiculous for you to make these disingenuous misrepresentations of the policies and then claim that I'm the one doing mental gymnastics. It would be completely fair to disagree with the policy and argue that the drawbacks of the limitations it imposes are not worth the security advantages that it provides. That's not a debate about the facts but rather the value of allowing apps in the Play Store to do whatever they want vs. them policing it. Part of them being able to police it is being able to review and analyze the code used by apps, which they can't do if the code is dynamically downloaded from outside the Play Store. Separately from that, having non-read-only code is a security issue in general, and the system packaging system provides substantial security by not allowing apps to write to their code, including native executables and libraries it has extracted. When installing apps with apt on a system like Debian, the same thing is true, and in general very few apps will install code to your home directory because it's a bad practice with security issues. Disabling in-memory and on-disk code generation to improve security is an extremely common practice on traditional Linux distributions too. Even web developers without systems programming knowledge are taught to make their server side code read-only for the web / application server user, so a file write vulnerability is not arbitrary code execution. It's part of security 101.
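As a rough illustration of the "code should not be writable" point, here is a minimal sketch that walks a directory and lists regular files whose mode bits allow both writing and executing. The function name `writable_and_executable` is made up for this example, and the check is deliberately coarse: it looks only at permission bits and ignores ownership, ACLs, directory permissions and SELinux labels, so it is an approximation of a w^x audit rather than a real one.

```python
import os
import stat


def writable_and_executable(root):
    """Return regular files under root that have a write bit AND an execute bit."""
    any_write = stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH
    any_exec = stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH
    offenders = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            if stat.S_ISREG(mode) and (mode & any_write) and (mode & any_exec):
                offenders.append(path)
    return sorted(offenders)
```

Running it over a deployed code directory should ideally return an empty list. Anything it reports is a file that a file write bug could turn into code execution.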

I certainly agree that the policy is unfortunate for use cases like Termux and that it will be painful to adapt it to an approach like apk-based packages. I do think an approach like that is viable and it wouldn't make using it substantially worse unless you need to compile / download and run native code for development. It won't impact development in scripting languages like Python, other than installing libraries with native code, which could be packaged by Termux via apks in the same way. It does definitely throw a wrench into native code development workflows, since you would need a system for generating an apk packaging up your code. I don't think that's completely awful though, and it would be neat to be able to generate a standalone apk out of the code not depending on Termux, which is definitely feasible. It could package Termux into the generated apk with the user code.

Yeah, that is the main point. The road to hell is paved with good intentions: earlier call recorders, now Termux, servers, ... For those who came to Android because it was a platform that would allow doing advanced stuff, those changes are a big evil.

Call recording is still often provided by the base OS along with screen recording capturing audio. It's also worth noting that only the native telephony layer calls are impacted at all. An app like WhatsApp or Signal is fully allowed to provide call recording for their own calling implementation if they choose. There are regulations on the legacy telephone infrastructure for call recording, and they are expected by the authorities in some regions to prevent it or at least to add a notice that the call is being recorded. I suggest avoiding telephony layer text and voice calls in the first place because they aren't secure and in practice many people / organizations are able to listen in on them if they choose.

Apps like Termux are still allowed and possible. The part that's disallowed is the internal apt-based package management, but that has been disallowed since at least 2012 anyway. The SELinux policy just hadn't advanced to the point where it relied on the assumption that apps don't violate the policy in order to implement a mitigation.

Of course it is bad, because it is taking away freedom for the sake of security. In my dictionary that is never good. Of course it would be possible to implement it properly by letting the user choose whether they want an app executing external code or not: simply add a permission and the issue is resolved (the same goes for other breaking changes that users are still unhappy about, like SAF, call recorders, ...).

That model doesn't work, even for experienced power users and developers. Apps are in a position to coerce users into accepting permissions. They choose not to implement fallbacks. For example, an app could respond to being denied the Contacts permission by using the API for having the user pick contacts to provide to the app which requires no permission. Are you aware of any app doing that? The same goes for storage access and camera access. They could ask for what they want and then fall back to a working implementation without a bulk data access permission. However, they don't, as they know users have to say yes to get done what they want to get done. The alternative is removing an app, which is often not even an option unless you want to lose your job, make your friends upset, etc. A better way of doing it is having the user in control of exactly what is granted, like picking which contacts (or groups of contacts) an app is provided with, or which subset of the storage is provided (SAF), etc. Android has had the better way of doing things in many areas for a long time, such as SAF being introduced in 4.4. It's no good if it's not what the ecosystem uses, even as a fallback.

I'm not sure why people would be unhappy about the storage changes, since those only put users in control and they can still grant apps access to everything. Look at Termux itself for a good implementation of part of SAF. It has a storage provider exposing the internal storage to the file manager. You can move files / directories in and out of Termux without trusting it to access anything else. The other part of the picture is apps requesting access to external files / directories outside of their own scope, which is something Termux could do, although I don't think it's worth implementing. The existing implementation is already solid.

Yeah, sure - who are those people who want that? Maybe the same people that wanted SAF forced on them or call recording denied (I have only read angry comments about those).

I certainly care about the privacy and security of my device. I've always been incredibly unhappy with the way that external (as in shared between apps) storage worked. The storage changes are fantastic from my perspective and don't eliminate any capabilities. Termux won't have an issue with that. It can choose to provide more SAF-based functionality or not, since the existing functionality is already fine.

No, there are not - you can't be sure any of those will be allowed in the future, as it seems to have become unpredictable. One rule change and Google will deny any apps that include an emulator or pack binary code in the lib dir, only allowing loading .so files with JNI.

It's not at all unpredictable. There was no change in policy beyond more aggressive wording and considering apps violating it to be malicious. You'd probably argue that their new wording allows apps to add and run new code dynamically outside a sandbox because they specifically state 'downloaded' but the intent of the policy is as clear as it has always been. Trying to find loopholes in the wording doesn't work with these things. It's not code or a technical implementation of rules like SELinux policy. It works like a code of law, where it is interpreted by humans and intent of the creators of the law is what matters. You have the option to avoid it by avoiding the Play Store, in which case you're only restricted by the OS implementation, but whenever possible that implementation will enforce or take advantage of their policy for apps. They won't go out of the way to leave out a nice security feature like this which doesn't heavily impact compliant apps.

Or maybe, if you have some inside info on what will be forbidden in the near / further future, you can share it?

It's not really insider information, since most of this stuff is developed publicly and the developers are quite willing to talk about it and take suggestions. I mentioned where things are heading above: there are auditallow rules for mapping app_data_file as executable so that's on the way out, and the same can be expected for execmem in the long term, but it's blocked by ART using it. If ART didn't use it, I'd expect there would be an auditallow rule already. In Android 6, it only used AOT compilation, so it didn't need that. That's a design compromise their security and runtime teams will need to work out over time. They already got rid of execmem for a lot of the base system, by relying only on AOT compilation for parts of it. I'm sure they will continue allowing execmem (i.e. in-memory code generation) within a sandbox (currently isolated_app, but it could become isolated_app_execmem or whatever with opt-in to it) since they definitely want to allow things like JIT engines for browsers - but that doesn't mean they need to allow it outside of a sandbox. Once Firefox has a proper sandbox, that removes another big blocker for this.
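For readers unfamiliar with what execmem covers: it governs requests for memory that is both writable and executable without being backed by an unmodified file, which is what a JIT compiler needs. The sketch below is a hedged illustration using the generic POSIX `mmap` call through Python, not an Android API. `can_map_rwx` is a hypothetical helper name. It only asks the kernel for such a mapping and never executes anything from it. On a system whose policy denies execmem for the calling domain the request is expected to fail, while on a typical desktop Linux it usually succeeds.

```python
import mmap


def can_map_rwx(length=mmap.PAGESIZE):
    """Return True if a private anonymous read-write-execute mapping is allowed."""
    prot = mmap.PROT_READ | mmap.PROT_WRITE | mmap.PROT_EXEC
    flags = mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS
    try:
        # fileno=-1 requests anonymous memory. Nothing is executed here;
        # the call only asks for pages that are writable and executable.
        region = mmap.mmap(-1, length, flags=flags, prot=prot)
    except OSError:
        # Hardened systems can refuse the mapping (for example when the
        # SELinux domain lacks execmem).
        return False
    region.close()
    return True
```

A process confined the way the comment describes for the long term would get `False` here, while one in a domain that keeps execmem (the comment expects isolated_app or a variant to) would still get `True`.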

I haven't been very active in the Android privacy and security world lately, due to very unfortunate events that occurred (nothing to do with the Android development team), so I wasn't following this and didn't have input into it. However, it is something I've worked on directly in the past. In my work, I implemented a complete version of what they're headed towards: fully forbidding any dynamic native code and requiring it to be in the apk / native library directories. I provided a system for making exceptions, but I wasn't in a position to get apps to move their libraries, and I have a different approach with different trade-offs. I filed the original issue last year to act as an early warning and to encourage considering other ways of distributing code that would be compatible with hardening like this, so Termux can exist on a system with that hardening whether or not Google did it.

Anyway, what I want is a system with great privacy and security but also a working Termux app. I think that both are possible, although it requires some thinking and a lot of implementation work. Luckily, there's a lot of time to become compatible with this change. Termux will still work on Android Q with API level 28. It becomes a blocker for Play Store updates over a year from now, and it becoming a blocker for sideloading the app is at least several years away.

The main impact of moving to apks would be download size (since package granularity will likely be far coarser) and the inconvenience of native code development. One way to make native code development more practical would be having a script for self-building Termux and your used packages, in order to have it signed with your own keys, so you can generate your own extension apks with your native code, including within Termux itself.

In my opinion, that's really not the end of the world.

@xeffyr Using QEMU is a good idea (it seems much more reasonable than asking the user to build an APK each time they want to build something from source), but I think that there is more potential in using isolatedProcess and just bridging all required system calls using proot. This will not harm performance and will let Termux remain the same in the long term. Furthermore, formally it will be "sandboxed" and it will be perfectly legal to download any kind of binary code. Google will definitely not block JIT in isolated_app, as this would immediately cause an antitrust case with the Firefox authors (and probably somebody else).

it is possible to execute binaries from noexec directories using proot

Yes, see https://github.com/termux/termux-app/issues/1072#issuecomment-474546253 - in case of Android Q.

Regarding /sdcard, proot should be built with a special extension: https://github.com/termux/proot/commit/ebcfe01744a4a288df38f76c24a60ae8ab978240. Note that this extension is disabled by default.

Proot will work until Google goes beyond just blocking execve().

There are other ways to execute machine code from ELF binaries, not only execve().

Something like reading file and executing it from memory - shmem, mmap, fexecve?

@esminis Yes.

@thestinger Do you know if there are plans to disallow dlopen() on shared libraries extracted to application data (not /lib)? For now it is working, and I don't even see any warnings or AVC denials when doing it.

You are one of several people I know who like SAF - have you ever tried using any file managers? In most cases only advanced users can use an external SD card in file managers due to SAF.

This claim doesn't make any sense. If an app implements SAF for loading and storing files, the user is presented with the system file manager API and is in full control. They can save / load from external storage or alternative storage providers like the one provided by Termux. They can provide persistent access to directories for the app to do whatever it wants inside those. Have you not ever used the standard Android Files app and the features of various apps implementing SAF as clients and providers, including Termux? It doesn't seem like it.

Sure, if apps haven't adopted the SAF API introduced in 4.4, they won't see external storage or alternative storage providers like Termux, since that's how those are exposed... I'm not sure how that is the fault of the API they should be using. Most apps aren't using it, since their developers would rather just write insecure, privacy invasive software not respecting consent. It's easier to just ask for bulk data access in the legacy way, and it should be no surprise that the option of doing that is finally going away with users left in control. If you want to provide an app with full access to everything, SAF lets you do that. Adopting SAF would have given apps more capabilities like being able to load / store to an external storage device, the internal Termux storage, and other storage providers such as those exposing cloud storage directories, network shares, etc. It doesn't take away capabilities but rather abstracts file access in a way that makes it more powerful while giving the user much more control, by being able to define exactly how narrowly / broadly the app can access files. Most developers certainly aren't fans of giving the user control especially when they need to do work to provide it, along with putting in the minimum effort possible to adopt new APIs, even when it would substantially improve the app. They still aren't forced to adopt it in Android Q, but they can't get access to the entirety of external storage anymore rather than what the user wants them to be able to access, which can be everything, but it probably isn't what users want so they won't do that.

And no, it is absolutely not security 101 for the web to make files read-only, because if a website already has a remote execution issue, read-only access won't help much.

You're once again completely misrepresenting what I said.

In web development it is much more critical to properly filter input/output; if you don't do that, the other security measures make absolutely no difference.

It's obviously important to apply good development practices and to avoid vulnerabilities whenever possible, but bugs will still happen anyway. Using tools able to not just prevent many classes of vulnerabilities but also mitigate their impact when they aren't outright prevented is important. What I said, which you misrepresented, is the following (simply quoted as is):

Even web developers without systems programming knowledge are taught to make their server side code read-only for the web / application server user, so a file write vulnerability is not arbitrary code execution. It's part of security 101.

The entire existence of features like Content Security Policy is defense in depth. If you don't understand that concept, I really don't know what to say. The principle of least privilege and defense in depth are such basic concepts that you should really know and understand them without having any involvement in software development. It's basic rational thinking. If you don't provide the capability to cause harm, you prevent harm from being caused even if something does go wrong which it often will despite substantial effort to prevent it.

Also, this is not the place to argue about privacy / security vs freedom, especially when one side thinks it is important to have a secure app that does nothing and the other thinks that an app / platform that does something useful is much more important than how secure it is.

It's you coming here disrupting a productive discussion with your misinformation. I'm not going to leave the falsehoods uncorrected when they are so harmful. The storage changes in Android Q don't reduce the capabilities of apps. They put users in control. Users can choose to give an app access to all the directories in shared storage, or they can choose not to do that. The app doesn't get to just ask for access to everything via a prompt, but rather the user chooses the level of access in the system file manager UI for the app. I don't see any issue with that, and you either don't know what you're talking about or you're just lying to push your negativity.

It has nothing to do with this issue and I don't know why you keep going on about it from your position of complete ignorance. I'd suggest educating yourself about the very basics of what you're talking about before trying to debate the merits of it. It helps no one and only makes you look foolish.

Proot will work until Google goes beyond just blocking execve().

It will work, but copying data into rwx memory would be very inefficient along with being a security issue. When executables and libraries are used normally, they get paged from storage as needed. Only the pages that are actively used need to be in memory and can be paged out. Copying the data in-memory results in the entirety of the code being stuck in memory as dirty pages that can't just be dropped but rather need to be preserved. Copying everything into memory to execute it isn't going to scale well beyond small programs and libraries.
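The difference between the two loading strategies can be sketched in a few lines. This is a minimal illustration assuming a POSIX system, with hypothetical helper names (`map_from_storage`, `copy_into_memory`). It does not execute anything or measure memory use. It only shows the two ways the same bytes can end up addressable: as a read-only file-backed mapping whose pages the kernel can drop and re-read, or as a copy in anonymous memory whose pages are dirty from the moment they are written.

```python
import mmap
import os
import tempfile


def map_from_storage(path):
    """Map a file read-only: pages load lazily and stay clean (droppable)."""
    with open(path, "rb") as f:
        # The mapping stays valid after the file object is closed.
        return mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)


def copy_into_memory(path):
    """Copy a file into anonymous memory: every page written becomes dirty."""
    size = os.path.getsize(path)
    anon = mmap.mmap(-1, size)  # anonymous read-write mapping
    with open(path, "rb") as f:
        anon.write(f.read())
    anon.seek(0)
    return anon


def demo():
    """Load the same temporary file both ways and compare the contents."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(64 * 1024))
        path = tmp.name
    try:
        mapped = map_from_storage(path)
        copied = copy_into_memory(path)
        same = mapped[:] == copied[:]
        mapped.close()
        copied.close()
        return same
    finally:
        os.unlink(path)
```

Both objects expose identical bytes, which is why the copying approach works at all. The cost described above comes from the second one having no file to fall back on when memory gets tight.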

Do you know if there are plans to disallow dlopen() on shared libraries extracted to application data (not /lib)? For now it is working, and I don't even see any warnings or AVC denials when doing it.

Yes, as I mentioned, they added an auditallow rule for app_data_file as execute. That means it will generate audit warnings in the kernel log on Android Q. The holes in the policy are known and are slowly being addressed. The others are ashmem/tmpfs execute and execmem. Injecting new native code is something they can and are working on preventing via SELinux policy. My previous comments elaborated on this along with the current blockers for some of it like removing execmem.

My first comment mentioned these current holes (which are officially known, and commented on) and that they are only taking one more step towards enforcing prevention of dynamic native code. They took other steps earlier, like removing execmod and various other legacy execute permissions. Trying to use these known holes in the SELinux policy doesn't seem like a viable long-term approach.

They cannot prevent class loading or other interpreted code from being run from app_data_file via SELinux policy, but they can certainly do it for native code, and they've been working towards that for a long time. It's finished for a lot of the base system already. Doing it for third party apps is harder due to compatibility issues, since apps need to migrate to the officially supported way of doing it. That's why they are doing it so gradually and you hadn't noticed until the latest set of changes.

Their policy for malicious apps forbids far more than they can ever enforce via SELinux policy. Something being allowed by SELinux policy doesn't mean that it's permitted or ever was permitted. The reason they implemented this SELinux policy change is also not to forbid apps from doing something to enforce their malicious app policy. It wasn't a change to protect the system from apps, but rather to make apps themselves harder to exploit. Their higher level policy on code from outside of apks allows them to make the change without worrying they've prevented doing something that wasn't disallowed already.

@thestinger So, do you know a way to use fopen (the most proper file access way in C) on files via SAF, if it is such a mature, properly implemented API? Passing file descriptors via unix sockets doesn't give direct access even to the allowed files (you can't pass a directory file descriptor, as you cannot get it). The Import / Export pattern doesn't give direct access to the allowed files by its definition. P.S. this is off-topic, even though Android Q-related, so a short answer is preferred.

Copying everything into memory to execute it isn't going to scale well beyond small programs and libraries.

Luckily, modern devices have enough RAM for this. Measurements should probably be done, though.

So, do you know a way to use fopen (the most proper file access way in C) on files via SAF, if it is such a mature, properly implemented API?

SAF provides access to more than regular local files and provides a more sophisticated access model than the Linux file access API is capable of representing. SAF is used for network shares, cloud storage and many other cases. Linux doesn't have the ability to revoke access to files, which exists on some other operating systems (https://lwn.net/Articles/546537/), and it also doesn't have a capability system for file descriptors like Capsicum (https://lwn.net/Articles/482858/). It doesn't make sense to expect the libc fopen implementation to fit into this. On the other hand, you can definitely make an implementation of the libc stdio API with SAF compatibility.

Termux already implements a storage provider itself, so other apps can access the internal data via SAF and the user can access it directly via the file manager too. It could provide a client side SAF implementation by having a command for mounting SAF files / directories within the file system hierarchy. That could be implemented by providing a more sophisticated stdio and POSIX file access implementation with support for a virtual file system for SAF. So, you could run a command like smount /mnt/cloud_storage, which would open up the system file access API, where you could select an SAF provider from a cloud storage app. You could also use it for local access, but it already has direct access to data scoped to itself that it exposes to other apps.

Most apps don't have any need to worry about these things, because they don't have a reason to expose higher-level functionality via a traditional command-line environment.
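The virtual mount idea can be sketched as a small mount table that routes paths to storage providers. Everything here is hypothetical and for illustration only: `DictProvider` is a stand-in for an SAF provider backed by an in-memory dict, `MountTable` is an invented name, and nothing below talks to Android or SAF. The point is only the shape of the abstraction: path lookup goes through a table, and access can disappear at any time because the provider side can revoke it.

```python
class DictProvider:
    """Hypothetical stand-in for a storage provider, backed by a dict."""

    def __init__(self, files):
        self.files = dict(files)
        self.revoked = False  # flipped when the user revokes access

    def read(self, relpath):
        if self.revoked:
            raise PermissionError("access to this provider was revoked")
        return self.files[relpath]


class MountTable:
    """Route absolute paths to whichever provider is mounted above them."""

    def __init__(self):
        self._mounts = {}

    def mount(self, mount_point, provider):
        self._mounts[mount_point.rstrip("/")] = provider

    def resolve(self, path):
        # Longest matching mount point wins, as with ordinary mounts.
        best = None
        for mount_point in self._mounts:
            if path == mount_point or path.startswith(mount_point + "/"):
                if best is None or len(mount_point) > len(best):
                    best = mount_point
        if best is None:
            raise FileNotFoundError(path)
        return self._mounts[best], path[len(best):].lstrip("/")

    def read(self, path):
        provider, relpath = self.resolve(path)
        return provider.read(relpath)
```

A command like the smount example would amount to a `mount()` call after the user picks a provider. A stdio or POSIX shim would then call `resolve()` before deciding whether to use a real file descriptor or the provider.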

Passing file descriptors via unix sockets doesn't give direct access even to the allowed files (you can't pass a directory file descriptor, as you cannot get it).

The non-proxied file descriptors wouldn't provide you with access even if you could obtain them, since that's forbidden by SELinux policy. SAF does provide file descriptors for the proxied file handles.

It can't pass you raw file descriptors from the other side because POSIX. It's a higher-level API than raw POSIX file access where these things are implemented.

A compatibility layer is certainly possible, but as a lossy abstraction, like representing it as virtual mounts in the file system tree, and just handling all the management (requesting access, noticing access was revoked, etc.).
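For reference, the descriptor passing mentioned in this exchange is the standard Unix mechanism of sending an open file descriptor over a Unix domain socket (SCM_RIGHTS). The sketch below shows only that generic mechanism on a POSIX system with Python 3.9 or newer. It does not model SAF, proxied handles or SELinux checks, and `pass_fd_demo` is a made-up name. The receiving end gets a working descriptor for a file it never opened by path.

```python
import os
import socket
import tempfile

PAYLOAD = b"contents only the sender could open"


def pass_fd_demo():
    """Send an open file descriptor across a socket pair and read through it."""
    sender, receiver = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
    try:
        with tempfile.TemporaryFile() as f:
            f.write(PAYLOAD)
            f.flush()
            # The descriptor travels as ancillary data; on Linux stream
            # sockets at least one byte of ordinary data must accompany it.
            socket.send_fds(sender, [b"x"], [f.fileno()])
            _data, fds, _flags, _addr = socket.recv_fds(receiver, 16, 1)
        received = fds[0]
        try:
            # The received descriptor shares the sender's file offset,
            # so rewind before reading.
            os.lseek(received, 0, os.SEEK_SET)
            return os.read(received, len(PAYLOAD))
        finally:
            os.close(received)
    finally:
        sender.close()
        receiver.close()
```

The received descriptor stays usable after the sender closes its own copy, which is what lets a provider hand out access to individual files without granting access to the directory that contains them.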

Luckily, modern devices have enough RAM for this. Measurements should probably be done, though.

It means if the executables and libraries add up to 300M, it will use 300M of memory, with the OS unable to page it out. It also only bypasses this initial incremental step they took towards improving the SELinux policy along with the planned follow-up step of disallowing app_data_file execute. It doesn't fix that it's a violation of the app policy and won't survive the eventual completion of the SELinux changes.

If they end up accomplishing what they started on, which involves removing about 3 more of the SELinux rules, injecting native code in untrusted_app won't be possible: ashmem/tmpfs execute, app_data_file execute and in the longer term execmem which would almost certainly remain for isolated_app or a variant of it. They could keep the ART JIT compatible by generating code in a separate process, similar to the Microsoft Edge approach, or they may end up deciding it isn't worth having due to them increasingly shifting the balance slightly towards security in these compromises. Chromium isn't impacted, since the renderer uses isolated_app. Firefox would only be impacted if it doesn't use a basic sandbox by then, and there aren't other browser engines of note not based on one of these. There are other JIT compilers, but they could be expected to use isolated_app sandboxes too.

So, it seems like the core function for accessing files in C/C++ is broken with nothing in return (that aforementioned "compatibility layer" wasn't provided by Google since the release of Android 4.4). This is exactly why nobody likes SAF, all other benefits like remote mounts are not important.

It means if the executables and libraries add up to 300M, it will use 300M of memory, with the OS unable to page it out.

This isn't really a concern given that modern phones have 4GB+. Anyway, this is the only choice that keeps the end-user experience the same on a non-rooted device.

If they end up accomplishing what they started on, which involves removing about 3 more of the SELinux rules, injecting native code in untrusted_app won't be possible: ashmem/tmpfs execute, app_data_file execute and in the longer term execmem which would almost certainly remain for isolated_app or a variant of it

That's why I suggested using isolatedProcess for executable code + proot to intercept and bridge syscalls that require the help of untrusted_app. This solution is long-term and has a good end-user experience. Provided that the bridge layer implements some security measures, it will become a secure sandbox (with "limited access to Android APIs"), so there will be no Google Play policy violation. Even if Google considers this a violation, users are always more important than disputable administrative decisions.

So, it seems like the core function for accessing files in C/C++ is broken with nothing in return (that aforementioned "compatibility layer" wasn't provided by Google since the release of Android 4.4). This is exactly why nobody likes SAF, all other benefits like remote mounts are not important.

Apps can still access files in both internal and external storage directly, and the user can access their directory in external storage via the Files app. It's not being broken, and SAF isn't involved in those basics. It's one of the options available to apps.

The primary purpose of SAF is providing a privacy-respecting way of accessing external data, where the user is fully in control and can choose the scope of access along with revocation being possible. It has to abstract storage access to provide that, and as part of that provides the ability to represent arbitrary forms of storage via the API rather than only subsets of the local file system.

You're assigning zero value to users having privacy and precise control over the scope of app data access, rather than it being all or nothing access to external storage where users are coerced into giving up their privacy and security simply to use many apps at all.

This isn't really a concern given that modern phones have 4GB+. Anyway, this is the only choice that keeps the end-user experience the same on a non-rooted device.

Sure, I can agree with that, but it has significant drawbacks (substantial performance hit on system calls from ptrace, memory usage, and the security drawbacks of using rwx memory). There are some other issues like the fact that it has to emulate ptrace, since it can't be nested, and it's not a fully complete implementation of it, which could impact debugging.

That's why I suggested using isolatedProcess for executable code + proot to intercept and bridge syscalls that require the help of untrusted_app. This solution is long-term and has a good end-user experience. Provided that the bridge layer implements some security measures, it will become a secure sandbox (with "limited access to Android APIs"), so there will be no Google Play policy violation. Even if Google considers this a violation, users are always more important than disputable administrative decisions.

Yes, I think that's a viable long-term option, and I doubt that they would consider it a violation of the policy. It's allowed as long as it's in a meaningful sandbox, and having it in isolated_app with proxied access to a sub-directory of the Termux data directory could be considered good enough, even though it still has fairly direct kernel access, etc.

The reason for the policy is to require that all code used by apps outside a sandbox is uploaded for review and analysis. It's a prerequisite of enforcing other policies. They aren't currently doing much enforcement as a whole, which is why it hasn't been noticed or enforced for Termux. That could change suddenly, of course.

CSP is essentially only a defence for the frontend (against XSS, for example), and that is the last thing you want to look at when defending against dangerous attack vectors. Yes, it can be one of the defences, but it is surely not something of high importance for defending your server and your users' data. If you mention it as a high importance defence, I really, really hope you don't manage any servers or handle user data...

I used CSP as a separate example of defence in depth, and it's definitely an important thing to implement for client-side web security. It's one of the few forms of defence in depth that are available there. It's quite comparable to implementing restrictions with SELinux policy on a server, and can implement comparable mitigations like disallowing code injection to avoid escalation of many bugs into code execution. It being defence in depth doesn't mean it isn't important. There are many examples of important defence in depth security features.

ASLR, NX, stack/heap canaries, CFI, memory allocator hardening, memory tagging, pointer authentication, etc. are all defence in depth for memory corruption vulnerabilities. Disallowing execmem is one of those too. It's obviously best to avoid having the memory corruption bugs in the first place, but that doesn't mean it isn't important to make as many classes of those bugs as possible unexploitable and to raise the cost of exploiting others. Even in a memory safe language, which avoids the bugs in the first place, there can be memory corruption bugs caused by bugs in the compiler, runtime or native library dependencies. They are not going to be avoided as a whole, thus efforts to add multiple layers of defence in depth alongside efforts to reduce the occurrence of vulnerabilities and discover existing ones.

I'm quite amused by you trying to question my competence for giving CSP as a well known example of defence in depth though. If you don't understand the concept, I don't really know what else to say. I tried my best to explain it and offer some relevant examples, like the well known guidance of implementing w^x policies on web / application servers, loosely comparable usage of CSP for client-side web content and of course native code. It's something very standard and highly valued. Preventing a file write vulnerability from becoming code execution doesn't mean you stop worrying about preventing file write vulnerabilities. They still matter, but it doesn't mean you don't try to reduce their impact and contain them when they do happen. An app extracting a zip file in an unsafe way should not lead to trivial code execution, but yet it does if they manually extract their libraries to app data instead of using the native library directory.

The principle of absolutely no direct access is the best defence, not least privilege, as privileges can be escalated.

I'm unsure on the relevance to the discussion. As I stated before, the importance of avoiding vulnerabilities doesn't mean it isn't also important to mitigate them when they do happen. Preventing a file write vulnerability from escalating into remote code execution or dumping data from a server is very useful. I don't think it's productive to go through basic obvious principles of security here, or to try explaining the need to compromise between different design goals. If you don't get it, that's fine, but there is no point in discussing it further. It's not the topic.

It is good development practice to keep backward compatibility and not to remove features only due to security.

That's not an attitude compatible with making systems with decent privacy and security. Closing security holes is one of the few reasons that backwards compatibility is broken by software like the Linux kernel and Android. It's not the topic at hand, but reducing attack surface is a very important part of securing a system too.

Software development involves balancing competing concerns and making design choices that achieve good compromises between them. The competing concerns are evolving and not something static. If you don't value privacy and security, it's understandable that you're upset when the balance shifts towards those. What's strange is an inability to see that these are valid, useful changes even if you disagree with the design choices. I do things differently than Google in my privacy and security hardening work and generally preserve compatibility via adding user controlled toggles to disable features, but there are major disadvantages to that.

Yes, if you don't know the Android user base / release history it does not make sense. "Scoped Directory Access" was introduced only in Android 7, and even now >50% of users are stuck without it, so it is not so easy to give permission to access external shared storage.

This has nothing to do with what you're replying to.

You are completely misrepresenting facts. No, I have not mentioned any shared storage changes in Android Q, only the internal storage exec permission changes. From what I know there are no big SAF changes in Android Q.

No, I'm not misrepresenting facts. Your attempt to project what you're repeatedly doing onto me is something that other people are able to easily see through. It's you who brought up these other topics in your poorly informed, inaccurate rants about other topics. Are you now going to deny that? Wow. You are the first person to mention SAF in this thread. The discussion was previously focused on the topic at hand: the app policy forbidding non-sandboxed executable code that isn't from the apk and more specifically the introduction of SELinux rules forbidding dynamic native code execution violating that policy (currently only for running executables directly, but with more changes lined up and the predictable closing of the other remaining holes further down the road).

If you don't want to be called out on your bullshit, don't do it in the first place. I don't understand what you hope to accomplish by coming here and spreading misinformation / confusion along with disrupting the discussion. You are repeatedly being disingenuous, manipulative and dishonest. It's ridiculous to keep needing to refute outright lies and manipulations from you. If you don't have any useful information or ideas to contribute, why are you here?

As I understand it, @thestinger mentioned implementing something like FUSE, which I guess would not be so easy to implement, if possible at all.

I mentioned that it's possible to write a thin wrapper around SAF for the file access APIs representing it as virtual file paths. It's hardly impossible or even particularly hard to implement. A command for requesting SAF access and then exposing it via a virtual path is a straightforward concept. Have you missed the discussion about using ptrace to intercept exec and other file system access calls to change how they behave? It could also be done without ptrace, as it would be fine if it only worked for programs using libc rather than doing their own system calls like Go. It's not at all part of what needs to be implemented to work around this though. Termux is barely impacted by the storage changes since it has a nice storage provider already, as I mentioned. Doing more is a completely optional frill that never needs to be implemented and should really be discussed elsewhere if there's even interest in that.
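To give a feel for the "virtual file paths" idea, here is a minimal sketch of just the path translation step: a table that maps a virtual mount point to a SAF tree URI the user has granted, and resolves a virtual path to that URI plus a relative path. Everything here is hypothetical (the class `VirtualPathTable`, the `/saf/...` mount points, the placeholder URI), and it is written in Python purely for illustration. A real wrapper would still need to perform the actual file operations through the SAF APIs.

```python
from typing import Dict, Tuple


class VirtualPathTable:
    """Maps hypothetical virtual mount points to SAF tree URIs granted by the user."""

    def __init__(self) -> None:
        self._mounts: Dict[str, str] = {}

    def mount(self, virtual_root: str, tree_uri: str) -> None:
        """Record that virtual_root is backed by the given tree URI."""
        self._mounts[virtual_root.rstrip("/")] = tree_uri

    def resolve(self, path: str) -> Tuple[str, str]:
        """Return (tree_uri, relative_path) for the longest matching mount."""
        for root in sorted(self._mounts, key=len, reverse=True):
            if path == root or path.startswith(root + "/"):
                return self._mounts[root], path[len(root):].lstrip("/")
        raise KeyError(f"no SAF grant covers {path!r}")


if __name__ == "__main__":
    table = VirtualPathTable()
    # Placeholder URI; a real one would come back from the SAF picker.
    table.mount("/saf/documents", "content://example.provider/tree/documents")
    print(table.resolve("/saf/documents/notes/a.txt"))
```

The intercepted file access calls would consult a table like this and fall through to the normal file system for any path that has no mount.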

I'm quite amused by you trying to question my competence for giving CSP as a well known example of defence in depth though.

You are still missing the point, so let's spell it out for you: you first need to make sure your web app is secure against the main threats, not against threats that are rarely used. That was the main point. Of course there are lots of ways to attack and lots of ways to defend, but if your main defences are down it makes no difference whether you do some kind of system-level defence or not.

It is good development practice to keep backward compatibility and not to remove features only due to security. That's not an attitude compatible with making systems with decent privacy and security.

That is absolutely the attitude you should take: if you have nothing to secure (no users), it makes no difference which security measures you take.

Software development involves balancing competing concerns and making design choices that achieve good compromises between them. The competing concerns are evolving and not something static.

Yes of course, but when you make security more important than functionality you lose functionality, and you lose users.

No, I'm not misrepresenting facts. Your attempt to project what you're repeatedly doing onto me is something that other people are able to easily see through. It's you who brought up these other topics in your poorly informed, inaccurate rants about other topics.

Yes you are. The part you are commenting on was only about how you sacrifice app functionality for security, specifically exec in the data directory. SAF was mentioned in an entirely different context (as one of the other losses due to Google's change of mind). And to the best of my knowledge SAF in no way changes exec permission on Android Q; SELinux does that.

If you don't want to be called out on your bullshit, don't do it in the first place.

You are calling this bullshit: telling millions of users that no, your app can't work because some "security expert" decided that no, you can't use some functions because he thinks it is more secure. Do you know how many users of the apps I work on have complained about security / privacy? 0. Now imagine what will happen when an app goes down because critical functionality is denied due to security.

So before calling something bullshit, first at least make a project that has more than 10 users, sell it, and then try to tell those users that it will not work anymore just because of, yeah, you know, security.

This whole discussion should be about how bad it is to deny exec in the data directory and about proper workarounds (not VM or ptrace), if any.

You are repeatedly being disingenuous, manipulative and dishonest.

You're calling me dishonest, really? When you outright lie? Just as one example of your lies, you claimed a sandbox is allowed by policy, when it is clearly stated that only a VM is allowed:

It's allowed as long as it's in a meaningful sandbox.

If you want to discuss this further, please grow up and go back to the main topic; otherwise at least be civilized while commenting.

I'll mark future comments (and some of the previous comments) that are, in my opinion, about philosophical topics rather than about the Android Q security enhancement and what it means for Termux as spam.

Is this a potential use case for Dynamic Delivery using App Bundles?

Android App Bundles seem to have an issue where they force extractNativeLibs to be false.

You can force extractNativeLibs=true in an Android App Bundle, by placing android.bundle.enableUncompressedNativeLibs=false into gradle.properties.

The ability to run execve() on files within an application's home directory will be removed for target API > 28. Here is the issue on the Google bug tracker: https://issuetracker.google.com/issues/128554619 As expected, it is yet another "working-as-intended"; furthermore, as commented in the issue,

This seems to completely break Termux in the long term, as all its packages contain executables.
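A quick way to see whether a given directory is affected is to drop a trivial script there and try to run it directly. The sketch below does that; the helper name `can_exec_from` is made up for this example, and it assumes the restriction surfaces as EACCES from execve(), which Python reports as `PermissionError`. On Android the interpreter would need to be `/system/bin/sh` rather than the `/bin/sh` default used here.

```python
import os
import stat
import subprocess
import tempfile


def can_exec_from(directory: str, interpreter: str = "/bin/sh") -> bool:
    """Return True if a script placed in `directory` can be executed directly.

    Hypothetical probe for illustration: it writes a script that exits 0,
    marks it executable, and runs it by path so the kernel has to execve()
    the file itself rather than an interpreter from another location.
    """
    fd, path = tempfile.mkstemp(dir=directory, suffix=".sh")
    try:
        with os.fdopen(fd, "w") as script:
            script.write(f"#!{interpreter}\nexit 0\n")
        os.chmod(path, stat.S_IRWXU)
        try:
            subprocess.run([path], check=True)
        except PermissionError:
            # execve() was refused, e.g. by a noexec mount or by policy.
            return False
        return True
    finally:
        os.unlink(path)


if __name__ == "__main__":
    home = os.path.expanduser("~")
    print(f"exec from {home}:", can_exec_from(home))
```

Running the same file as `sh script.sh` is a different operation, since the file is then only read by an interpreter that lives elsewhere, so this probe deliberately executes the file by path.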