That is also not what I would expect from the vtu file...

Hmmm.... yes the assemblies appear to be in the right place as you say:

    In [2]: for a in o.r.core:
       ...:     print(a.spatialLocator.getRingPos())
    (2, 5)
    (2, 6)
    (2, 4)
    (1, 1)
    (2, 1)
    (2, 3)
    (2, 2)

It's possible you might need a minus sign in front of the first line:

    lattice map: |
      - F F
      F F F
      F F

But I don't know why it would only affect the vtu... I'll try to look into it more later...
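As a quick sanity check on the positions printed above, here is a minimal sketch in plain Python (no ARMI import) that compares them against a complete two-ring hex layout. It relies on the usual hex counting, 1 position in ring 1 and 6*(r-1) positions in ring r, and on the 1-based numbering seen in the output. `expected_positions` is a hypothetical helper written for this example, not an ARMI function.

```python
def expected_positions(num_rings):
    """Return every (ring, pos) pair of a full hex layout with num_rings rings.

    Assumes 1-based ring and position numbering, as in the output above.
    """
    pairs = set()
    for ring in range(1, num_rings + 1):
        count = 1 if ring == 1 else 6 * (ring - 1)
        pairs.update((ring, pos) for pos in range(1, count + 1))
    return pairs


# Positions copied from the getRingPos() output above.
observed = [(2, 5), (2, 6), (2, 4), (1, 1), (2, 1), (2, 3), (2, 2)]

missing = expected_positions(2) - set(observed)   # positions with no assembly
extra = set(observed) - expected_positions(2)     # positions outside two rings
duplicates = len(observed) - len(set(observed))   # positions listed twice

print("missing:", missing, "extra:", extra, "duplicates:", duplicates)
```

All three checks come back empty for the seven assemblies listed, which agrees with the reading that the assemblies themselves sit in the right place and only the vtu output is off.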

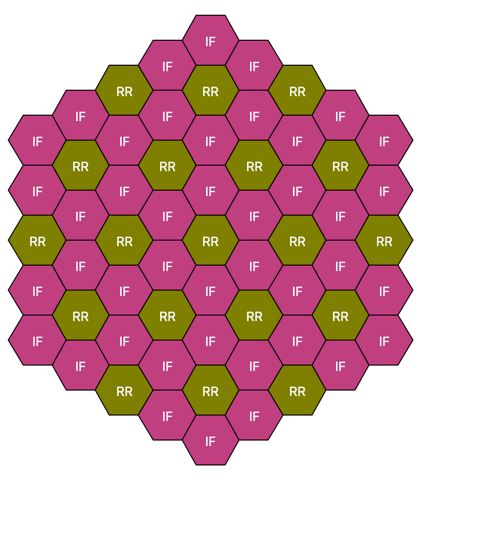

I'm attempting to model an HTGR minicore and am having a few issues / sticking points. The core is a simple 2-ring hex grid with a prismatic-like pin lattice.

Blueprints.yaml

```yaml
blocks:
    fuel: &block_fuel
        grid name: pins
        duct: &comp_duct
            shape: Hexagon
            material: HT9
            Tinput: 600
            Thot: 600
            ip: 32
            op: 32.2
        fuel:
            shape: Circle
            material: UZr
            Tinput: 600
            Thot: 600
            id: 0.0
            od: 0.8
            latticeIDs: [FP]
        clad:
            shape: Circle
            material: Zr
            Tinput: 600
            Thot: 600
            id: fuel.od
            od: 0.9
            latticeIDs: [FP]
        pitch:
            shape: Hexagon
            material: Void
            Tinput: 600
            Thot: 600
            ip: 3
            op: 3
            latticeIDs: [FP, CL]
        cool pin:
            shape: Circle
            material: Graphite
            Tinput: 600
            Thot: 600
            id: 0
            od: fuel.od
            latticeIDs: [CL]
        coolant:
            shape: DerivedShape
            material: Graphite
            Tinput: 600
            Thot: 600
assemblies:
    heights: &heights
        - 50.0
    axial mesh points: &mesh
        - 2
    fuel:
        specifier : F
        blocks : &fuel_blocks
            - *block_fuel
        height: *heights
        axial mesh points: *mesh
        material modifications:
            U235_wt_frac:
                - 0.127
        xs types : &IC_xs
            - A
systems:
    core:
        grid name: core
        origin:
            x: 0.0
            y: 0.0
            z: 0.0
grids: !include minicore-coremap.yaml
```

Coremap.yaml

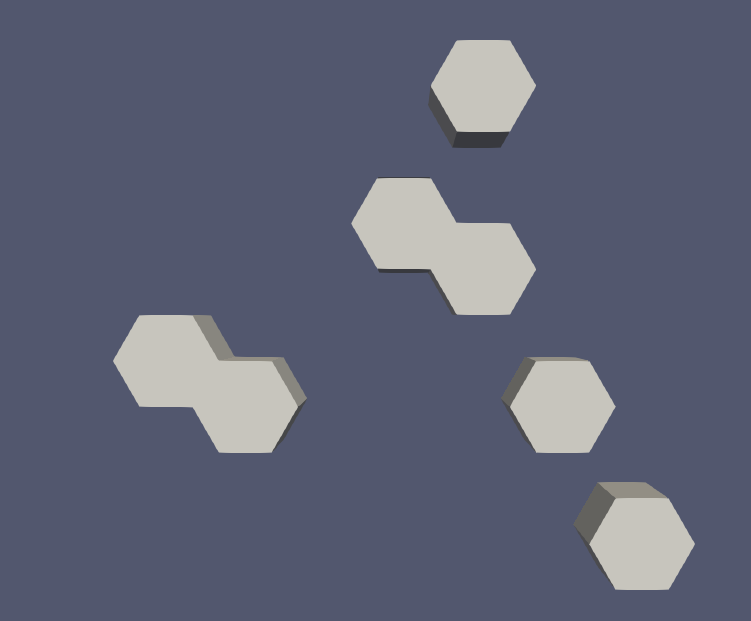

```yaml
core:
    geom: hex
    symmetry: full
    lattice map: |
        F F
        F F F
        F F
pins:
    geom: hex
    symmetry: full
    lattice map: |
        - - FP - FP FP - CL CL CL FP FP FP FP FP FP FP FP FP CL CL CL CL FP FP FP FP FP FP FP FP FP CL CL CL CL CL FP FP FP FP FP FP FP FP FP CL CL CL CL FP FP FP FP FP FP FP FP FP CL CL CL FP FP FP
```

ARMI 5b7d215 doesn't have any issue running the model and writing a data file. However, the output of `armi vis-file minicore.h5` gives some spurious messages about the assemblies. I think this also causes the vtu file to not reflect the core geometry, or at least what I believe the core geometry should be.
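One check that does not depend on how the rows of the pin map are laid out is to count its entries. The sketch below is plain Python with no ARMI import. It treats `-` as a placeholder rather than a pin (an assumption about how the map is read) and tests whether the remaining entries fill a whole number of hex rings, using the full-hex total 1 + 3n(n-1) for n rings. `rings_for_count` is a hypothetical helper, and the line breaks in the string literal are only there for line length; they are not meant to be row boundaries.

```python
from collections import Counter

# Entries of the pin lattice map, in the order they appear above.
PIN_MAP = (
    "- - FP - FP FP - CL CL CL "
    "FP FP FP FP FP FP FP FP FP CL CL CL CL "
    "FP FP FP FP FP FP FP FP FP CL CL CL CL CL "
    "FP FP FP FP FP FP FP FP FP CL CL CL CL "
    "FP FP FP FP FP FP FP FP FP CL CL CL FP FP FP"
)


def rings_for_count(num_positions):
    """Return n if num_positions fills exactly n hex rings, else None."""
    rings = 1
    while 1 + 3 * rings * (rings - 1) < num_positions:
        rings += 1
    return rings if 1 + 3 * rings * (rings - 1) == num_positions else None


counts = Counter(PIN_MAP.split())
placeholders = counts.pop("-", 0)   # assumed to be padding, not pins
total_pins = sum(counts.values())

print(dict(counts), "placeholders:", placeholders)
print("total pins:", total_pins, "-> full rings:", rings_for_count(total_pins))
```

If the total does not correspond to a whole number of rings, the map is missing or duplicating entries, which would be worth ruling out before looking at the vtu writer.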

As a note, the current HEAD 1fd21f8236763f43c3bc25b866122aa9789486bc produces similar issues, but with different assembly naming.

I've thought about not using the `lattice map` for the pin grid and instead using `grid contents`, which may let me specify the pin position with tuples (ring, pos) if I'm understanding the docs right: https://terrapower.github.io/armi/.apidocs/armi.reactor.blueprints.gridBlueprint.html#armi.reactor.blueprints.gridBlueprint.GridBlueprint.gridContents

As a consistency check, if you load this reactor up, the fuel lattice appears to be present in each block with the correct lattice structure. I checked this using the `spatialLocator` of the circular components in a fuel block.