I am also facing the error. @snehitvaddi, did you solve it?

Yes, I resolved it. It worked after I changed the version of TensorFlow.

@snehitvaddi could you share your Colab notebook to this email address: inocajith21.5@gmail.com? I tested in both CPU and GPU mode, and it gives me "cannot connect to X server".

No problem, I got it from your repo. Thanks.

@ajithvallabai Is the code from my repo working fine?

@snehitvaddi No, it showed "cannot connect to X server", so I commented out the cv2.imshow line. After that it showed nothing in Colab, so I started creating a conda environment to run it on my PC.

@ajithvallabai The error is caused by OpenCV. You need to comment out three blocks of lines: the one that shows the final frame (imshow), the waitKey call (the one that lets you press a key to close the OpenCV window), and the one that closes all windows (destroyAllWindows). Basically, you cannot play videos in Colab. The alternative is to copy the videos to your Drive (using %cp) and watch them there. I also couldn't play video in Colab. I am trying to find a way to do so.
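
Instead of commenting the three blocks out by hand, the GUI calls could be guarded so the script skips them on a machine without a display. This is only a minimal sketch, not code from the repo: `has_display` and `show_frame` are hypothetical helper names, and checking the `DISPLAY` environment variable is an assumed heuristic for "an X server is reachable" on Linux.

```python
import os
import sys


def has_display(env=None, platform=None):
    """Heuristic: on Linux, treat a missing DISPLAY variable as 'no X server'."""
    env = os.environ if env is None else env
    platform = sys.platform if platform is None else platform
    if platform.startswith("linux"):
        return bool(env.get("DISPLAY"))
    # Assumption: other platforms have a usable GUI.
    return True


def show_frame(frame, env=None, platform=None):
    """Show a frame only when a display is available.

    Returns True if the user pressed 'q', False otherwise
    (including when the GUI calls were skipped).
    """
    if not has_display(env, platform):
        return False
    import cv2  # imported lazily so headless runs never touch the GUI code

    cv2.imshow('output', frame)
    return cv2.waitKey(1) == ord('q')
```

In the tracker loop, `if show_frame(img): break` would then stand in for the imshow and waitKey lines, and `cv2.destroyAllWindows()` could be wrapped in `if has_display():` in the same way.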

@snehitvaddi Thanks, now it works. I think I hadn't commented out waitKey. Maybe you can add the code below to your Colab notebook if you like.

For faster computing:

with tf.device('/gpu:0'):
    !python object_tracker.py --video ./data/video/traffic.mp4 --output ./data/video/traffic-results2.avi --weights ./weights/yolov3.tf

For the "cannot connect to X server" error:

%%writefile object_tracker.py
import time, random
import numpy as np
from absl import app, flags, logging
from absl.flags import FLAGS
import cv2
import matplotlib.pyplot as plt
import tensorflow as tf
from yolov3_tf2.models import (
    YoloV3, YoloV3Tiny
)
from yolov3_tf2.dataset import transform_images
from yolov3_tf2.utils import draw_outputs, convert_boxes

from deep_sort import preprocessing
from deep_sort import nn_matching
from deep_sort.detection import Detection
from deep_sort.tracker import Tracker
from tools import generate_detections as gdet
from PIL import Image

flags.DEFINE_string('classes', './data/labels/coco.names', 'path to classes file')
flags.DEFINE_string('weights', './weights/yolov3.tf',
                    'path to weights file')
flags.DEFINE_boolean('tiny', False, 'yolov3 or yolov3-tiny')
flags.DEFINE_integer('size', 416, 'resize images to')
flags.DEFINE_string('video', './data/video/test.mp4',
                    'path to video file or number for webcam)')
flags.DEFINE_string('output', None, 'path to output video')
flags.DEFINE_string('output_format', 'XVID', 'codec used in VideoWriter when saving video to file')
flags.DEFINE_integer('num_classes', 80, 'number of classes in the model')


def main(_argv):
    # Definition of the parameters
    max_cosine_distance = 0.5
    nn_budget = None
    nms_max_overlap = 1.0

    # initialize deep sort
    model_filename = 'model_data/mars-small128.pb'
    encoder = gdet.create_box_encoder(model_filename, batch_size=1)
    metric = nn_matching.NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)
    tracker = Tracker(metric)

    physical_devices = tf.config.experimental.list_physical_devices('GPU')
    if len(physical_devices) > 0:
        tf.config.experimental.set_memory_growth(physical_devices[0], True)

    if FLAGS.tiny:
        yolo = YoloV3Tiny(classes=FLAGS.num_classes)
    else:
        yolo = YoloV3(classes=FLAGS.num_classes)

    yolo.load_weights(FLAGS.weights)
    logging.info('weights loaded')

    class_names = [c.strip() for c in open(FLAGS.classes).readlines()]
    logging.info('classes loaded')

    # a numeric value selects a webcam, anything else is treated as a file path
    try:
        vid = cv2.VideoCapture(int(FLAGS.video))
    except ValueError:
        vid = cv2.VideoCapture(FLAGS.video)

    out = None

    if FLAGS.output:
        # by default VideoCapture returns float instead of int
        width = int(vid.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(vid.get(cv2.CAP_PROP_FRAME_HEIGHT))
        fps = int(vid.get(cv2.CAP_PROP_FPS))
        codec = cv2.VideoWriter_fourcc(*FLAGS.output_format)
        out = cv2.VideoWriter(FLAGS.output, codec, fps, (width, height))
        list_file = open('detection.txt', 'w')
        frame_index = -1

    fps = 0.0
    count = 0
    while True:
        _, img = vid.read()

        if img is None:
            logging.warning("Empty Frame")
            time.sleep(0.1)
            count += 1
            if count < 3:
                continue
            else:
                break

        img_in = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        img_in = tf.expand_dims(img_in, 0)
        img_in = transform_images(img_in, FLAGS.size)

        t1 = time.time()
        boxes, scores, classes, nums = yolo.predict(img_in)
        classes = classes[0]
        names = []
        for i in range(len(classes)):
            names.append(class_names[int(classes[i])])
        names = np.array(names)
        converted_boxes = convert_boxes(img, boxes[0])
        features = encoder(img, converted_boxes)
        detections = [Detection(bbox, score, class_name, feature) for bbox, score, class_name, feature in zip(converted_boxes, scores[0], names, features)]

        # initialize color map
        cmap = plt.get_cmap('tab20b')
        colors = [cmap(i)[:3] for i in np.linspace(0, 1, 20)]

        # run non-maxima suppression
        boxs = np.array([d.tlwh for d in detections])
        scores = np.array([d.confidence for d in detections])
        classes = np.array([d.class_name for d in detections])
        indices = preprocessing.non_max_suppression(boxs, classes, nms_max_overlap, scores)
        detections = [detections[i] for i in indices]

        # Call the tracker
        tracker.predict()
        tracker.update(detections)

        for track in tracker.tracks:
            if not track.is_confirmed() or track.time_since_update > 1:
                continue
            bbox = track.to_tlbr()
            class_name = track.get_class()
            color = colors[int(track.track_id) % len(colors)]
            color = [i * 255 for i in color]
            cv2.rectangle(img, (int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])), color, 2)
            cv2.rectangle(img, (int(bbox[0]), int(bbox[1]-30)), (int(bbox[0])+(len(class_name)+len(str(track.track_id)))*17, int(bbox[1])), color, -1)
            cv2.putText(img, class_name + "-" + str(track.track_id), (int(bbox[0]), int(bbox[1]-10)), 0, 0.75, (255,255,255), 2)

        ### UNCOMMENT BELOW IF YOU WANT CONSTANTLY CHANGING YOLO DETECTIONS TO BE SHOWN ON SCREEN
        #for det in detections:
        #    bbox = det.to_tlbr()
        #    cv2.rectangle(img,(int(bbox[0]), int(bbox[1])), (int(bbox[2]), int(bbox[3])),(255,0,0), 2)

        # print fps on screen
        fps = ( fps + (1./(time.time()-t1)) ) / 2
        cv2.putText(img, "FPS: {:.2f}".format(fps), (0, 30),
                    cv2.FONT_HERSHEY_COMPLEX_SMALL, 1, (0, 0, 255), 2)
        # commented out: no X server is available in Colab
        #cv2.imshow('output', img)
        if FLAGS.output:
            out.write(img)
            frame_index = frame_index + 1
            list_file.write(str(frame_index)+' ')
            if len(converted_boxes) != 0:
                for i in range(0, len(converted_boxes)):
                    list_file.write(str(converted_boxes[i][0]) + ' '+str(converted_boxes[i][1]) + ' '+str(converted_boxes[i][2]) + ' '+str(converted_boxes[i][3]) + ' ')
            list_file.write('\n')

        # # press q to quit
        # if cv2.waitKey(1) == ord('q'):
        #     break
    vid.release()
    # fixed typo: this was FLAGS.ouput, which raises an error at the end of the run
    if FLAGS.output:
        out.release()
        list_file.close()
    #cv2.destroyAllWindows()


if __name__ == '__main__':
    try:
        app.run(main)
    except SystemExit:
        pass

So, are you able to play the video on Colab?

No, in Colab we can't play it. We can move it to our Google Drive; that would be the best solution, as we can seek forward and backward there.
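
Copying the result to Drive could look roughly like the sketch below. It is a plain-Python alternative to `%cp`, not code from the repo: `copy_to_drive` is a hypothetical helper name, and the Drive folder in the usage note is an assumed mount location.

```python
import os
import shutil


def copy_to_drive(src_path, drive_dir):
    """Copy a file into drive_dir (created if missing) and return the new path."""
    os.makedirs(drive_dir, exist_ok=True)
    # shutil.copy2 keeps file metadata and returns the destination file path
    return shutil.copy2(src_path, drive_dir)
```

In Colab, after mounting with `from google.colab import drive` and `drive.mount('/content/drive')`, a call such as `copy_to_drive('./data/video/traffic-results2.avi', '/content/drive/MyDrive/videos')` would place the video where it can be opened from Drive; the `MyDrive/videos` folder is only an example.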

But I know a way to open the webcam from Google Colab; maybe you can find a way to use it.

from IPython.display import display, Javascript
from google.colab.output import eval_js
from base64 import b64decode

def take_photo(filename='photo.jpg', quality=0.8):
    js = Javascript('''
        async function takePhoto(quality) {
            const div = document.createElement('div');
            const capture = document.createElement('button');
            capture.textContent = 'Capture';
            div.appendChild(capture);

            const video = document.createElement('video');
            video.style.display = 'block';
            const stream = await navigator.mediaDevices.getUserMedia({video: true});

            document.body.appendChild(div);
            div.appendChild(video);
            video.srcObject = stream;
            await video.play();

            // Resize the output to fit the video element.
            google.colab.output.setIframeHeight(document.documentElement.scrollHeight, true);

            // Wait for Capture to be clicked.
            await new Promise((resolve) => capture.onclick = resolve);

            const canvas = document.createElement('canvas');
            canvas.width = video.videoWidth;
            canvas.height = video.videoHeight;
            canvas.getContext('2d').drawImage(video, 0, 0);
            stream.getVideoTracks()[0].stop();
            div.remove();
            return canvas.toDataURL('image/jpeg', quality);
        }
        ''')
    display(js)
    data = eval_js('takePhoto({})'.format(quality))
    binary = b64decode(data.split(',')[1])
    with open(filename, 'wb') as f:
        f.write(binary)
    return filename

If you run the code above and allow camera access in the pop-up, you can take a photo. You can also see the live webcam feed during that time.

I took the code above from the code snippets panel in Colab, available in the left corner under the <> symbol.

@ajithvallabai Thanks man!!

> Yes, I did resolve. It worked after I changed the version of Tensorflow.

Which version of TensorFlow?

The "net/%s:0" here corresponds to TensorFlow 1.x versions; for TensorFlow 2.x versions it should be "%s:0".
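
The version-dependent tensor name described above can be sketched as a small helper. This is only an illustration of that comment, not the repo's code: `encoder_tensor_name` is a hypothetical function, and the operation names `images` and `features` used in the example are assumptions about the encoder graph.

```python
def encoder_tensor_name(op_name, tf_version):
    """Build the tensor name to look up, based on the TensorFlow major version.

    Follows the comment above: TensorFlow 1.x uses the "net/" prefix,
    TensorFlow 2.x does not.
    """
    major = int(tf_version.split(".")[0])
    template = "net/%s:0" if major < 2 else "%s:0"
    return template % op_name


# Example with assumed operation names:
input_name_tf1 = encoder_tensor_name("images", "1.15.0")
output_name_tf2 = encoder_tensor_name("features", "2.3.0")
```

In a real script the second argument would typically be `tf.__version__`, and the result would be passed to the graph's `get_tensor_by_name` call.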

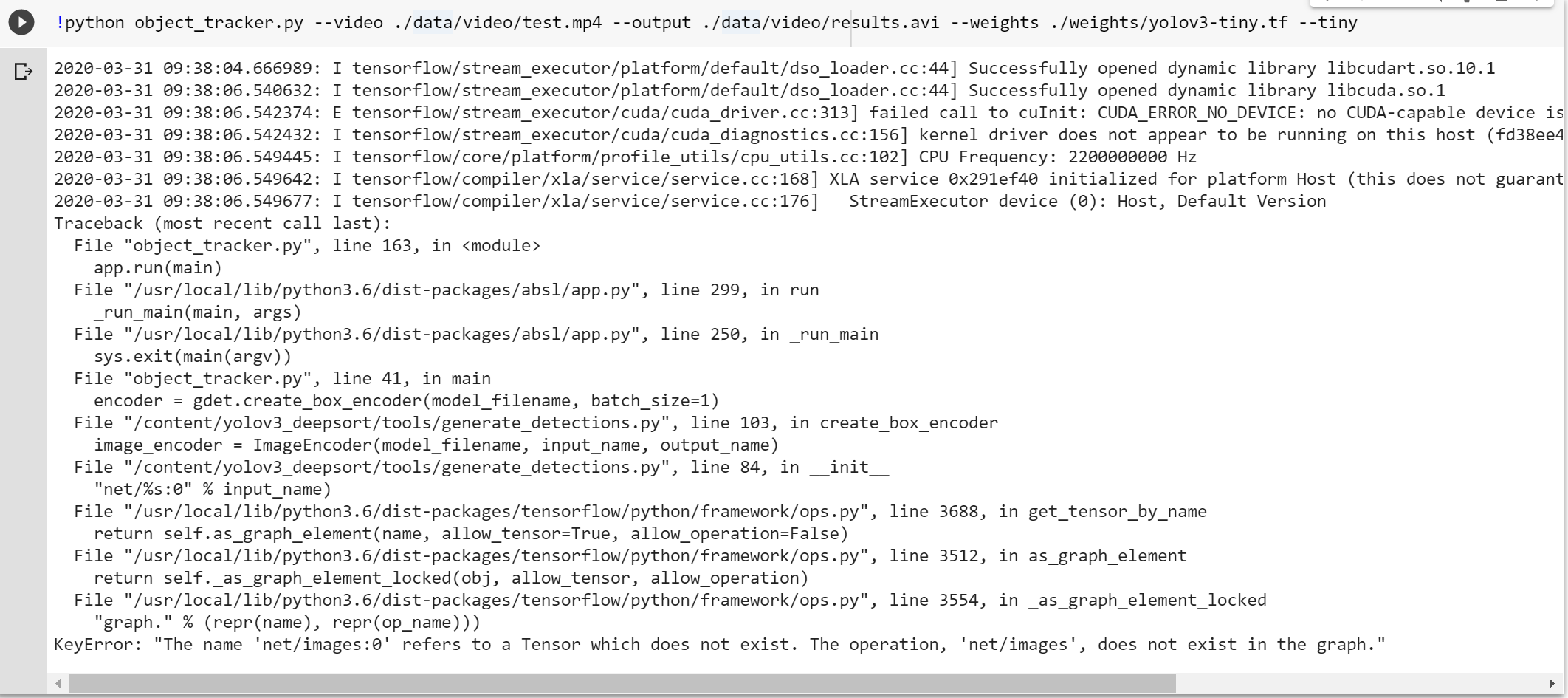

I am facing this error. I am running this in Google Colab; I followed your video tutorial and copy-pasted the code as is.