Those packages would just need to make some changes to the code that makes predictions. I suspect that DALEX is nearly there since it works with parsnip and that should be the same as what a workflow would need. @pbiecek

I don't think that we would make any packages in this area since the existing ones cover all of the ground.

The best thing that you can do is to put in DALEX and iml GH issues with reproducible examples using a workflow.

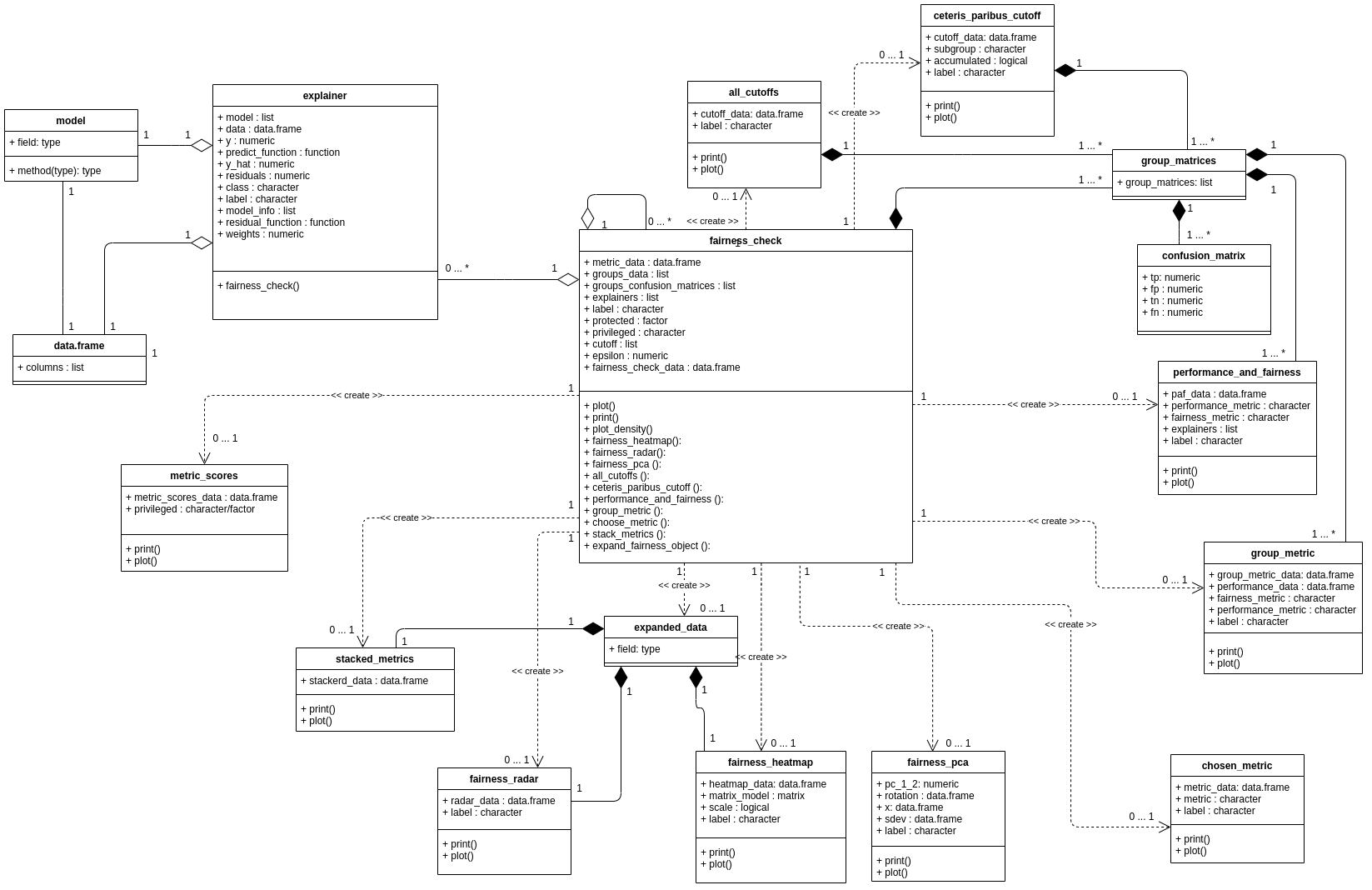

It would be great when methods for making models and predictions better explainable would be made available through tidymodels and could be added to a workflow, for example like the ones in the

DALEXandimlpackage. Are there any plans to do this?