Sounds like an interesting technique, but I do not understand why you would want to refresh the buffer 5000 times per second, when each refresh represents 1/100th of a 50Hz frame. Surely 1/10th of a frame would be more than enough and absolutely imperceptible to humans. Moreover, it would provide even more protection against jitter.

UPDATE: Toni has released a WinUAE beta with this!

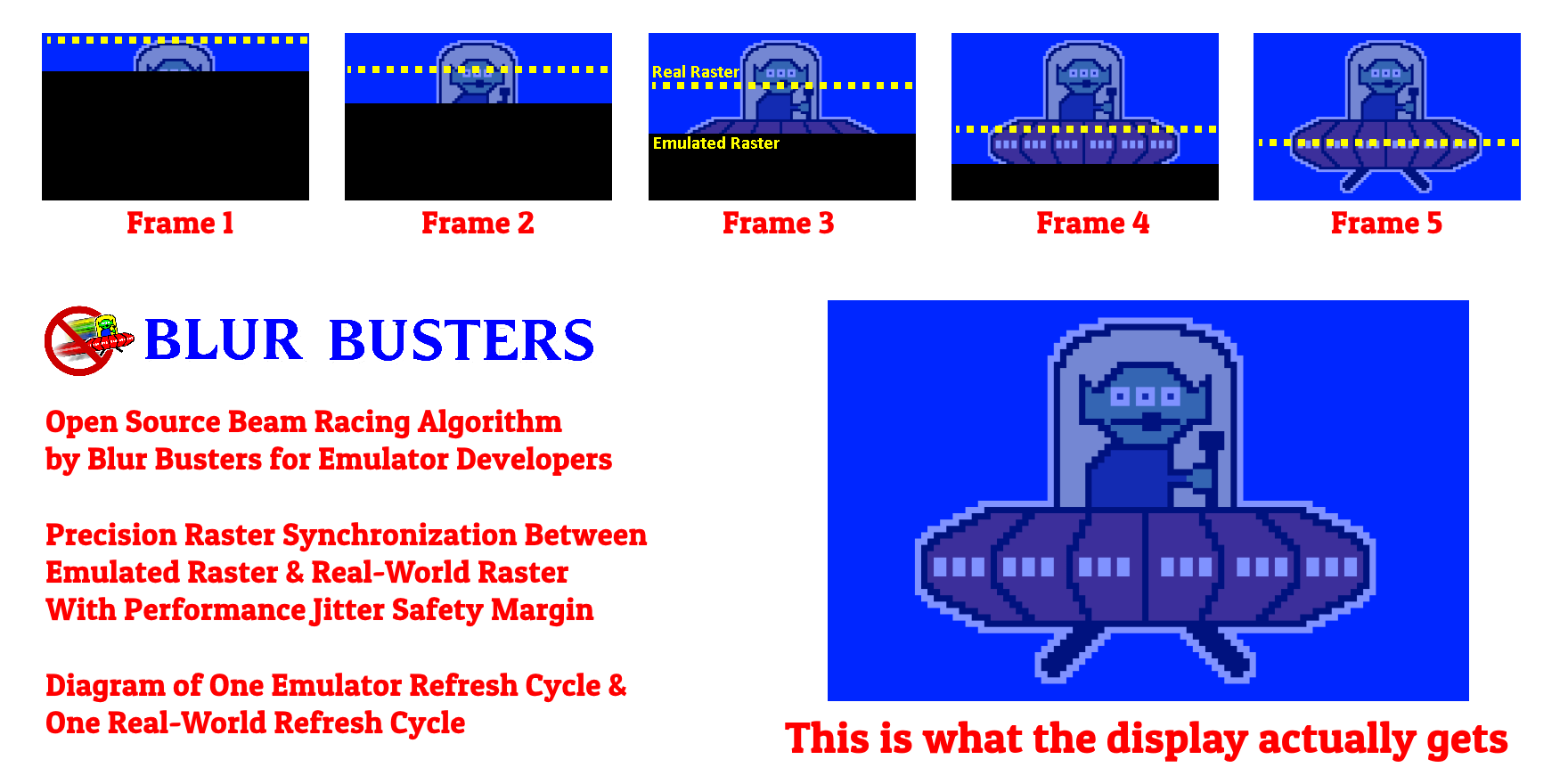

A simple diagram of the algorithm:

The algorithm documentation: https://www.blurbusters.com/blur-busters-lagless-raster-follower-algorithm-for-emulator-developers/

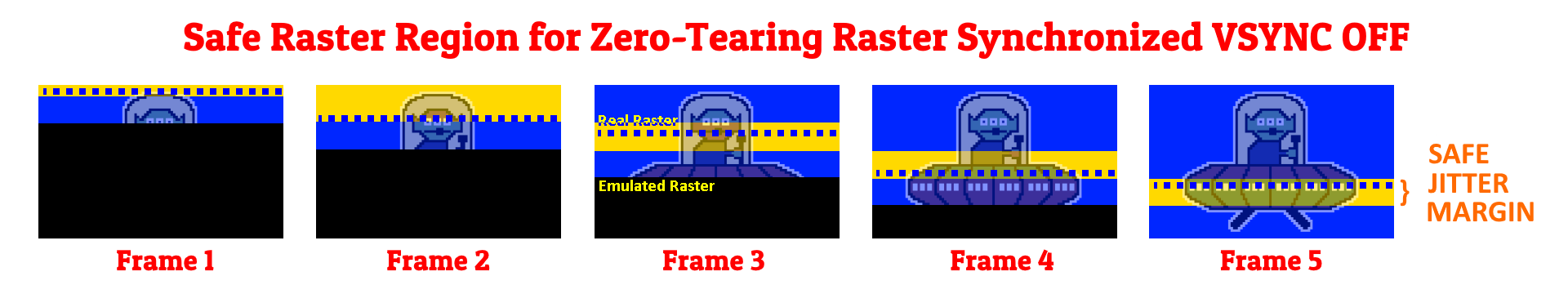

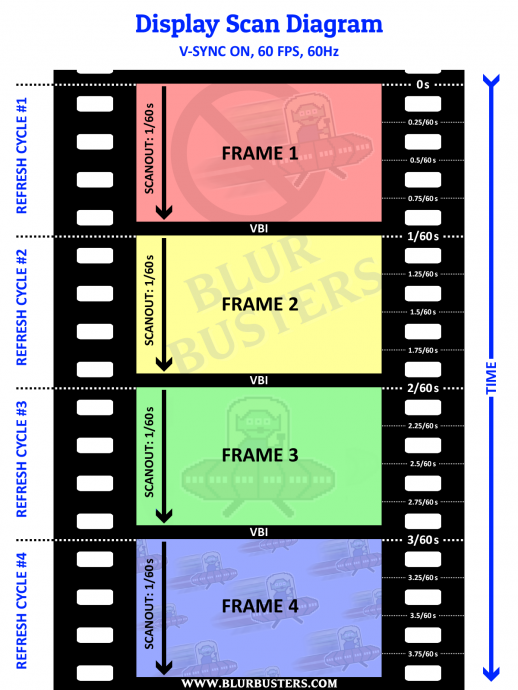

Basically, this is raster-synchronized VSYNC OFF at an ultra-high page-flip rate with no tearing artifacts, to achieve a lagless equivalent of VSYNC ON. It is achievable using standard Direct3D calls.

Rendering directly to the front buffer would be ideal, but modern graphics cards can now do thousands of buffer swaps per second of redundant frame buffers (to simulate front buffer rendering), so this is achievable within standard graphics APIs using VSYNC OFF and access to the graphics card's current raster position.
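To put numbers on the swap rates discussed in the quoted question at the top, here is a rough sketch of the arithmetic. The function names are mine, purely for illustration, and it assumes the swaps are spread evenly across the refresh cycle (real hardware also spends time in vertical blanking, which this ignores).

```python
def slices_per_refresh(swaps_per_second: float, refresh_hz: float) -> float:
    """How many buffer swaps (frame slices) fit into one refresh cycle."""
    return swaps_per_second / refresh_hz


def slice_duration_ms(refresh_hz: float, slices: int) -> float:
    """Duration of one frame slice in milliseconds, assuming evenly spaced swaps."""
    return 1000.0 / (refresh_hz * slices)


if __name__ == "__main__":
    # 5000 swaps per second at 50Hz is 100 slices per refresh, 0.2 ms each.
    print(slices_per_refresh(5000, 50), slice_duration_ms(50, 100))
    # 1/10th of a frame at 50Hz is 10 slices per refresh, 2 ms each.
    print(slices_per_refresh(500, 50), slice_duration_ms(50, 10))
```

So the choice of slice count is a trade-off: fewer, taller slices leave more room for jitter, while more, thinner slices bring the emulated raster closer to the real one.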

You'd map the real raster (e.g. #540 of 1080p) to the emulated raster (e.g. #120 of 240p) for beam chasing of scaled resolutions.
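As a minimal sketch of that mapping (the function name is hypothetical, and it assumes a plain linear scale over the visible lines only, ignoring vertical blanking and any borders or letterboxing):

```python
def map_real_to_emulated_raster(real_line: int, real_height: int, emu_height: int) -> int:
    """Map a scanline of the real display to the matching emulated scanline.

    Assumes a simple linear vertical scale between the two resolutions.
    """
    if not 0 <= real_line < real_height:
        raise ValueError("real_line must be within the visible display height")
    # Integer arithmetic avoids floating-point rounding at slice boundaries.
    return real_line * emu_height // real_height


if __name__ == "__main__":
    # Scanline #540 of 1080p corresponds to scanline #120 of 240p.
    print(map_real_to_emulated_raster(540, 1080, 240))  # 120
```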

Basically, this works with raster-accurate emulators (line-accurate or cycle-accurate) to simulate a rolling-window frame slice buffer, synchronizing the emulator raster to the real-world raster (tight beam racing).
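One way to picture the rolling window is the sketch below. It is only an illustration under my own assumptions, not the code from the linked documentation: the frame is cut into equal slices, the emulator stays a fixed number of slices (`margin`, a hypothetical parameter) ahead of the real raster, and the target wraps around into the next refresh at the bottom of the screen.

```python
def slice_of_line(line: int, height: int, slices: int) -> int:
    """Index of the frame slice that contains the given scanline."""
    return line * slices // height


def emulator_target_slice(real_line: int, real_height: int, slices: int, margin: int = 1) -> int:
    """Slice the emulator should have rendered before the next buffer swap.

    The emulator runs `margin` slices ahead of the real raster, so the swap
    lands on a region whose content is unchanged and no tear line is visible.
    The index wraps into the next refresh when the real raster nears the bottom.
    """
    real_slice = slice_of_line(real_line, real_height, slices)
    return (real_slice + margin) % slices


def slice_line_range(slice_index: int, emu_height: int, slices: int) -> tuple[int, int]:
    """Emulated scanlines [start, end) covered by a slice."""
    start = slice_index * emu_height // slices
    end = (slice_index + 1) * emu_height // slices
    return start, end


if __name__ == "__main__":
    # Real raster at #540 of 1080p, 10 slices per refresh, 1 slice of margin.
    target = emulator_target_slice(540, 1080, 10)
    print(target, slice_line_range(target, 240, 10))  # 6 (144, 168)
```

A larger margin is more forgiving of jitter at the cost of a little more lag; a smaller margin races the beam more tightly.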

EDIT: After I posted this, I learned that at least one other person has independently invented a (previously unreleased) jitter-forgiving beam-chasing algorithm, and I'm happy to share due credit. I'm open-sourcing a raster demo soon, so stay tuned. (See subsequent posts.)