Hello, @David-Development

For security reasons, Digdag isolates each task's environment. This comes with some limitations, and a similar question has already been posted. Your question seems to be related to #785.

Open David-Development opened 5 years ago

@hiroyuki-sato thanks for the response!

My "problem" is that we have several scripts in our container that call each other using relative paths. If the workdir is set to /tmp/..., our scripts can no longer find each other. The only workaround would be to use absolute paths.
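A possible alternative to hard-coding absolute paths, sketched here under assumptions and not tested with Digdag: each script resolves the directory it lives in and calls its siblings relative to that, so the current working directory no longer matters. The helper name `script_dir` and the sibling script `next_step.sh` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the absolute directory that contains the
# script path given as $1, independent of the current working directory.
script_dir() {
    # Run cd in a subshell so the caller's working directory is untouched.
    (cd "$(dirname "$1")" && pwd)
}

# Illustrative use at the top of a script (names are made up):
#   HERE="$(script_dir "$0")"
#   "$HERE/next_step.sh"
```

This only helps when the scripts can be edited; scripts that read data files relative to the working directory would need the same treatment.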

"Due to security reason, Digdag isolates each task environment."

In a shared environment (local execution) I agree. However, if I use Docker containers for each job, what is the security concern there? The containers are isolated by themselves. Or is one container instance used for multiple workers? Is the /tmp/ directory mounted from the host? Or does the /tmp/ directory only exist within the container?

Hi, @David-Development

As far as I know, there is no way to avoid overwriting the workdir if you let Digdag run Docker for you.

Instead, you can use the sh> operator to run docker yourself, like below:

    timezone: UTC

    _export:
      MINIO_HOST: "127.0.0.1:9001"
      MINIO_USERNAME: "minio"
      MINIO_PASSWORD: "minio"

    +my_task_1:
      for_each>:
        crawler: [abc, def]
      _do:
        sh>: docker run --rm python:3 pwd

[FYI] You can also use the for_range operator instead of for_each to run subtasks multiple times. https://docs.digdag.io/operators/for_range.html#for-range-repeat-tasks-for-a-range

Hello, @David-Development

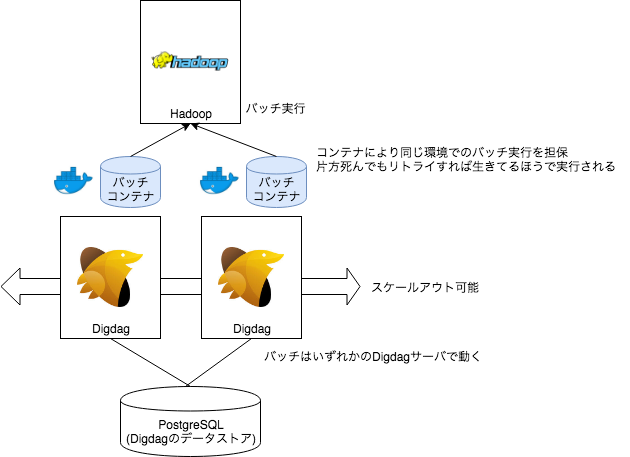

MicroAd (a company in Japan that uses Digdag) runs Digdag with Docker. https://developers.microad.co.jp/entry/2018/05/24/131136

They use the py> operator to execute queries on Hadoop.

They don't process the data inside the Docker container. Instead, the data is stored in Hadoop, so Digdag itself stays scalable.

They also use S3 and the s3_wait> operator to wait for task completion.

Thank you! I got it working by using my own storage mechanism, handling MinIO manually (I guess similar to the Hadoop example).

    timezone: Europe/Berlin

    _export:
      MINIO: "-e MINIO_HOST=minio:9000 -e MINIO_USERNAME=minio -e MINIO_PASSWORD=minio"

    +make_lm:
      _parallel: true
      for_each>:
        chunk_idx: [0,1,2,3]
      _do:
        sh>: docker run --rm ${MINIO} -e DOCKERSTAGE="7.1" -e CHUNK_COUNT=8 -e CHUNKS_PER_NODE=4 -e CHUNK_IDX=${chunk_idx} <my-image>

I'm currently writing my thesis on different workflow engines, and I thought it might be possible to define input/output data that is handled and versioned by the workflow engine (digdag). This would be similar to Argo, a workflow engine for Kubernetes clusters, which allows users to define the input/output files. In general, the Common Workflow Language follows a similar approach (Example), as does the Workflow Definition Language (Structure).
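For readers wondering what such a manual MinIO hand-off between steps could look like inside a container, here is a minimal sketch. It is an assumption, not the setup actually used above: it relies on the MinIO client `mc` being installed in the image, and the alias `store`, the bucket `workflow`, and the object layout are hypothetical. Only `MINIO_HOST`, `MINIO_USERNAME`, `MINIO_PASSWORD` and `CHUNK_IDX` come from the workflow shown above.

```shell
#!/bin/sh
# Sketch only: alias "store", bucket "workflow" and the object naming
# scheme are hypothetical. The MINIO_* variables and CHUNK_IDX are the
# ones passed to the container with -e in the workflow.

# Register the MinIO server under the alias "store" for the mc client.
minio_login() {
    mc alias set store "http://${MINIO_HOST}" "${MINIO_USERNAME}" "${MINIO_PASSWORD}"
}

# Download the result that step $1 produced for this chunk into file $2.
fetch_input() {
    mc cp "store/workflow/$1/chunk_${CHUNK_IDX}.dat" "$2"
}

# Upload local file $1 as the result of step $2 for this chunk, so that
# later steps (possibly on another worker) can read it.
store_output() {
    mc cp "$1" "store/workflow/$2/chunk_${CHUNK_IDX}.dat"
}
```

An entrypoint would call `minio_login` once, then `fetch_input` before and `store_output` after the actual work. Because the data lives in the object store and not in the task's working directory, the steps do not depend on which worker runs them.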

I'm trying to get my workflow up and running with digdag and docker. After starting the container, the workdir which is defined in the Dockerfile of the container gets overwritten. I can't seem to find a way to set the workdir properly.

Example Workflow:

The output is something like this:

/tmp/digdag-tempdir3664891302268477936/workspace/3_report_32_180_1070820252556486184

My use case is that I have a workflow that consists of multiple steps. Each step is somewhat complex and has its own Docker container with its own entrypoint and workdir.

Another problem is that I'm not sure how to pass intermediate results / data between steps, or how to scale this application onto multiple servers (e.g. have multiple workers). Is there any documentation on this that I missed? The documentation only says:

Thanks! I really like digdag.