In the upper right corner of your link it says artifacts (1). There you can download the lighthouse result.

Closed kaihendry closed 4 years ago

> In the upper right corner of your link it says artifacts (1). There you can download the lighthouse result.

I was expecting to see some score inline.

Why can't this just be on a URL instead?

Hi @kaihendry , thank you for your suggestion. Yes, as @emilhein recommended, you can download artifacts and explore the results. It would be great to display some high-level results in logs after each URL run.

Some ideas:

- Use cliui to format a table.

- Display categories with a custom bar chart, based on https://github.com/icyflame/bar-horizontal.

- Display the 6 metrics, as in the LH report (if the performance category is available).

- Display the budgets table, as in the LH report (if budgetPath is set).
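As a rough illustration of the bar chart idea, here is a dependency-free sketch. The helper name `renderScoreBars` and the sample scores are hypothetical; it only assumes that category scores are numbers between 0 and 1, as they are in a Lighthouse result.

```javascript
// Hypothetical helper: render category scores (0..1) as horizontal text bars.
function renderScoreBars(categories, width = 20) {
  // Pad labels to the longest category name so the bars line up.
  const labelWidth = Math.max(...Object.keys(categories).map((k) => k.length));
  return Object.entries(categories)
    .map(([name, score]) => {
      const filled = Math.round(score * width);
      const bar = "█".repeat(filled) + "░".repeat(width - filled);
      const pct = String(Math.round(score * 100)).padStart(3);
      return `${name.padEnd(labelWidth)} ${bar} ${pct}`;
    })
    .join("\n");
}

// Sample data for illustration only, not real results.
const sampleScores = { performance: 0.5, accessibility: 1, seo: 0.9 };
console.log(renderScoreBars(sampleScores, 10));
```

A library such as cliui or bar-horizontal would handle terminal width and colors; this version only shows the shape of the output.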

I'll also shamelessly plug the kind of cli output we use in the psi cli project.

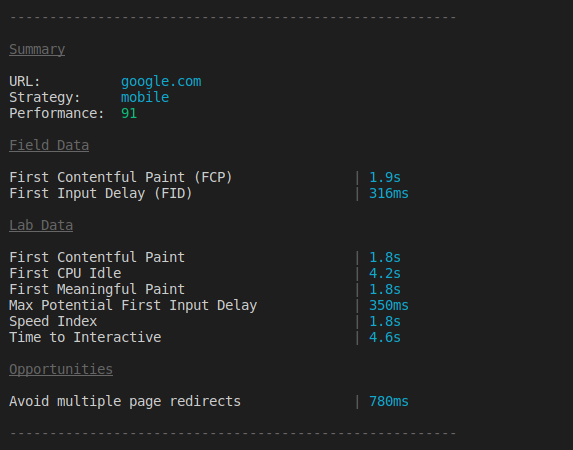

Something like this:

(obv we would just have lab data.)

+1 to adding some charts/sparklines though, especially for opportunities.

I'm actually really loving cliui. Made a quick and dirty mockup of the perf category:

Wow @exterkamp, this looks AMAZING! I like small details like a circle/square/triangle, colors, measurements, small piecharts. Overall formatting is 🔥.

I've started to expand this idea in a separate repo. I think it might be useful in ChromeLabs/psi, lhci itself and obviously here, so maybe having it be separate makes sense to keep this repo cleaner and just pull it in and call it like lhci. Thoughts?

I found https://github.com/codechecks/lighthouse-keeper which ends up displaying a markdown report of the result (example).

I am guessing this uses the output parameter of https://developer.github.com/v3/checks/runs/#update-a-check-run with a bunch of markdown?

Just wanted to point out that could be an option as well..

> I am guessing this uses the output parameter of https://developer.github.com/v3/checks/runs/#update-a-check-run with a bunch of markdown?

From what I can tell actions can't return data like that yet? (or I just can't find the docs :confused: )

We could get an octokit client and then post a second status check with all this fun output...But then each action run would have 2 status checks, and I don't think you can make the action not fire off a check in the UI?

Looking to @paulirish's https://github.com/paulirish/lighthouse-ci-action/pull/2 it's possible to have checks with markdown results as a part of CI. I'm going to hack on this as well :)

Going to try to boil down this issue...

Goals: clearly communicate to the developer the most important details.

Potential surfaces:

Right now I think using a Check is the most flexible choice here.

I think maintaining a LHR->check-markdown formatter will be somewhat painful, but that's an option. It's worth noting that raw HTML is accepted in GitHub-flavored Markdown, and <details> and <table> blocks work, so a surprising amount of the HTML report can render decently when interpreted as GFM:
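A minimal sketch of what such a formatter could look like, assuming only the `categories` and `audits` maps of a Lighthouse result (with `title`, `score`, and `displayValue` fields). The function name `lhrToMarkdown` and the 0.9 cutoff are illustrative choices, not part of any existing tool.

```javascript
// Sketch: turn a Lighthouse result (LHR) into Markdown that mixes raw HTML
// (<table>, <details>) with a plain Markdown list.
// Note: titles are inserted as-is; a real formatter would need to escape them.
function lhrToMarkdown(lhr) {
  const rows = Object.values(lhr.categories).map((c) => {
    const score = c.score === null ? "n/a" : Math.round(c.score * 100);
    return `<tr><td>${c.title}</td><td>${score}</td></tr>`;
  });

  // Assumed cutoff: list audits scoring below 0.9.
  const failing = Object.values(lhr.audits)
    .filter((a) => typeof a.score === "number" && a.score < 0.9)
    .map((a) => `- ${a.title}${a.displayValue ? ` (${a.displayValue})` : ""}`);

  return [
    "<table>",
    "<tr><th>Category</th><th>Score</th></tr>",
    ...rows,
    "</table>",
    "",
    "<details>",
    `<summary>Audits below 90 (${failing.length})</summary>`,
    "",
    ...failing,
    "",
    "</details>",
  ].join("\n");
}
```

The blank lines around the list inside `<details>` matter: without them GitHub treats the list as raw HTML content and does not render it as Markdown.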

I totally agree using the download artifacts thing is terrible and we 100% need something better..

Ideally, we would really consider the UX here a tad more...

I'd be curious to hear more of the usecases that inspire these feature requests.

Thanks, @paulirish, for the excellent overview of our options!

> Goals: clearly communicate to the developer the most important details.

I completely agree with the goal.

A potential solution could look like:

A missing piece is a long-time storage of results and display of them.

temporary-public-storage is excellent, but it's just for 7 days and is public. Custom LHCI server is another option but requires the setup and support of Server/PG + updates and sync of LHCI versions.

We could try to store results in Github Gist and use Github Viewer to display them. It will need to support a file name and a commit sha (now the viewer finds a report by gist id). So the history of Lighthouse reports will be aggregated in the gist and checks use Lighthouse Viewer with a link to filename/sha.

To display changes and compare results, we could try to implement stateless Lighthouse Comparer. It will be similar to Lighthouse Viewer but powered by LHCI UI to compare two reports. In combination with Gists, it will be easy to privately store all results, have links to reports, and easily explore changes.
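To make the comparison idea concrete, here is a small sketch of the stateless part: given two Lighthouse results, compute per-category score deltas. The name `compareCategoryScores` is hypothetical, and it reads only `categories[id].score` from each report; the real LHCI UI compares far more than this.

```javascript
// Sketch: per-category score deltas between a base report and a head report.
// Scores are 0..1 in the LHR; the delta is reported in points (0..100).
function compareCategoryScores(baseLhr, headLhr) {
  const ids = new Set([
    ...Object.keys(baseLhr.categories),
    ...Object.keys(headLhr.categories),
  ]);
  return [...ids].map((id) => {
    const base = baseLhr.categories[id]?.score ?? null;
    const head = headLhr.categories[id]?.score ?? null;
    // A category missing from either report has no meaningful delta.
    const delta =
      base === null || head === null ? null : Math.round((head - base) * 100);
    return { id, base, head, delta };
  });
}
```

Because the function needs nothing but the two JSON reports, they could come from anywhere: gists, artifacts, or temporary public storage.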

Gist storage requires the creation of the deploy keys, which is a trade-off. (not sure if it's possible to avoid)

It is just an idea. I'd like to hear your feedback.

- What information do we most want to see?

- link to the actual report

- know the score per category, per url. Compared with budget.

- nice to have: see a quick overview of failing items

For me a Check which passes/fails based on perf. budget would work. If the check passes, I'm not interested in more information. If it fails I want to know, without too many steps, what's wrong. I think a Check is perfect for both use cases.

Some more inspiration for the UI for Checks below. Made in the pre-GitHub Actions/Lighthouse CI era with a GitHub App that used WebPageTest and its Lighthouse feature:

Also: I agree with @alekseykulikov that "a missing piece is a long-time storage of results and display of them." It's a high threshold to set up a custom server.

Thank you, everyone, for a fantastic discussion with plenty of ideas!

The final solution is to use annotations to communicate each failed assert, and it's shipped with 2.3.0 release.

@paulirish made a great argument in favor of checks https://github.com/treosh/lighthouse-ci-action/issues/2#issuecomment-558395025

But after a bit of hacking, we found a few issues with Github Check:

Annotations have a minor issue for now: they are plain text and don't support markdown. I hope it will be fixed in the future.
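For readers unfamiliar with annotations, the sketch below shows one way a failed assertion could be turned into a GitHub Actions workflow command that produces an annotation. It is not the code shipped in 2.3.0. The shape of the `failure` object (`level`, `auditId`, `url`, `expected`, `actual`) is an assumption made for illustration; the escaping follows the rules GitHub documents for workflow commands.

```javascript
// Escape message data for a workflow command: %, CR and LF must be encoded.
function escapeData(value) {
  return String(value)
    .replace(/%/g, "%25")
    .replace(/\r/g, "%0D")
    .replace(/\n/g, "%0A");
}

// Property values additionally need ":" and "," encoded.
function escapeProperty(value) {
  return escapeData(value).replace(/:/g, "%3A").replace(/,/g, "%2C");
}

// Hypothetical failure shape: { level, auditId, url, expected, actual }.
function toAnnotation(failure) {
  const command = failure.level === "warn" ? "warning" : "error";
  const title = escapeProperty(`Lighthouse: ${failure.auditId}`);
  const message = escapeData(
    `${failure.url}\nexpected ${failure.expected}, got ${failure.actual}`
  );
  return `::${command} title=${title}::${message}`;
}
```

Printing the returned string to stdout inside a workflow step creates the annotation. In an action, `core.error` and `core.warning` from @actions/core do the same job without manual escaping.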

curious if we have considered making the information available with core.setOutput.

seems this would allow developers to easily build all these custom solutions themselves rather than trying to satisfy everyone with a single solution.

you could also pass the url to results into a github comment for example

@magus how is the output exposed to the user? It wasn't clear from the docs and I don't know anything that uses it

Here's a super reduced example I created to show how something like this could work

https://github.com/magus/lighthouse-ci-output/runs/638824574?check_suite_focus=true

steps is a global that has keys for each step with an id specified in a workflow. You can index into it inside the workflow file to pass values into steps. In the example above, the action.yml defines the metadata output, action.js sets it as an output, and the final workflow step echoes it back using that global namespace (${{ steps.lhci.outputs.metadata }}).

Without knowing details on the exact format of the .lighthouse directory generated, I think the rough flow for this repo would be something like ...

1. Read the .lighthouse directory and store it in memory in an object.

2. Set that object as the action's output:

   ```js
   const core = require("@actions/core");

   // ...

   core.setOutput("metadata", JSON.stringify(metadata));
   ```

3. Developers specify the id they want for this step in their workflow:

   ```yaml
   - name: Audit URLs using Lighthouse
     id: lhci
     uses: treosh/lighthouse-ci-action@v2
     with:
       urls: |
         https://example.com/
         https://example.com/blog
   ```

4. Later steps read the output with ${{ steps.lhci.outputs.metadata }}:

   ```yaml
   - name: Write fancy Lighthouse CI github PR comment
     run: ./scripts/githubComment.js '${{ steps.lhci.outputs.metadata }}'
   ```

Wow yeah this example is super useful. Thank you.

So we can basically store strings, and the expectation is that output props are probably JSON.
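As a sketch of the consuming side, a script like the githubComment.js mentioned above could parse the JSON string and build a comment body. The schema here (an array of `{ url, scores, reportUrl }`) is purely hypothetical, since no output schema had been agreed on at this point in the thread.

```javascript
// Sketch: parse a JSON step output and build a Markdown table for a PR comment.
// Assumed (hypothetical) schema: [{ url, scores: { performance }, reportUrl }].
function buildComment(metadataJson) {
  const results = JSON.parse(metadataJson);
  const header = ["| URL | Performance | Report |", "| --- | --- | --- |"];
  const rows = results.map((r) => {
    const perf = Math.round(r.scores.performance * 100);
    return `| ${r.url} | ${perf} | [view](${r.reportUrl}) |`;
  });
  return [...header, ...rows].join("\n");
}
```

One caveat: interpolating the output directly into a quoted shell argument breaks if the JSON contains a single quote. Passing it through an environment variable and reading `process.env` in the script avoids that.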

Well that's easy enough.. I think we just need to decide on the schema of the output object but that seems doable. I'll file a new issue to track this.

Ah! Turns out this is already filed at #41. We can followup there.

Does anyone have an action that pretty prints this info?

@ChildishGiant there surely must be nicer ways to do this, but the snippet below works (add/remove what you need). These steps need to come after the Lighthouse step(s):

```yaml
name: Lighthouse
on:
  status:
jobs:
  lighthouse:
    runs-on: ubuntu-latest
    timeout-minutes: 20
    steps:
      # ... Lighthouse step(s) omitted
      # Expose the manifest written by Lighthouse CI as a step output
      - id: read-manifest
        run: |
          echo ::set-output name=manifest::$(cat .lighthouseci/manifest.json)
      - uses: actions/github-script@v4
        if: github.event.context == 'deploy/netlify' && github.event.state == 'success'
        env:
          MANIFEST: ${{ steps.read-manifest.outputs.manifest }}
        with:
          script: |
            const manifest = JSON.parse(process.env.MANIFEST);
            console.log('\n\n\u001b[32mSummary Results:\u001b[0m');
            // One table row per manifest entry, with the host stripped from the URL
            const rows = manifest.map((row) => {
              return {
                "url": row.url.substring(row.url.indexOf("netlify.app") + 11),
                "performance": row.summary.performance,
                "accessibility": row.summary.accessibility,
                "seo": row.summary.seo
              }
            });
            console.table(rows);
```

I've created a separate Lighthouse Viewer Action that displays the reports in the command line interface as a workaround. Perhaps it could be integrated as an optional feature in the future.

To use the action, set continue-on-error: true for Lighthouse CI Action, and feed the resultsPath and outcome from it as the input parameters:

```yaml
name: Lighthouse Auditing
on: push
jobs:
  lighthouse:
    runs-on: ubuntu-latest
    name: Lighthouse-CI
    steps:
      - name: Checkout
        uses: actions/checkout@v3
      - name: Audit with Lighthouse
        id: lighthouse
        continue-on-error: true
        uses: treosh/lighthouse-ci-action@v10
        with:
          urls: |
            https://example.com/
          configPath: './lighthouserc.json'
      - name: Display Report
        uses: jackywithawhitedog/lighthouse-viewer-action@v1
        with:
          resultsPath: ${{ steps.lighthouse.outputs.resultsPath }}
          lighthouseOutcome: ${{ steps.lighthouse.outcome }}
```

Screenshot:

I can see the results were uploaded somewhere: https://github.com/kaihendry/ltabus/runs/251577950

Now how do I view them?

Thanks!