@TobiasNickel I just learnt about txml the other day and was really impressed. Great library. However, I haven't gotten around to updating the benchmark yet. I will do it sometime later this week.

TobiasNickel closed 3 years ago

> I found when returning bigger data, and not doing the extra work in the worker, the performance goes down.

This is probably because it has to copy the data around, hence the worse performance?

Yes, the data in each process are new objects and they get serialized into the shared memory buffer. The smaller it is, the better.

piscina also calls some sync function to ensure data is not changed while reading. Each function call to piscina causes one sync. Maybe batching some worker calls together could even improve performance, but it would be quite some work to hit a good balance.
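A minimal sketch of the batching idea, to make the trade-off concrete. It is not piscina's API: `createBatcher`, `runBatch`, and `maxBatchSize` are hypothetical names, and `runBatch` stands in for whatever sends one message to a worker and returns one result per input. Calls made in the same tick are collected and dispatched together, so the per-call overhead is paid once per batch.

```javascript
// Hypothetical helper: groups individual calls into one worker round trip.
// runBatch(inputs) is assumed to return (a promise of) an array of results,
// one per input, in the same order.
function createBatcher(runBatch, maxBatchSize = 16) {
  let pending = [];
  let scheduled = false;

  function flush() {
    scheduled = false;
    const batch = pending;
    pending = [];
    if (batch.length === 0) return;

    let result;
    try {
      // One call for the whole batch instead of one call per input.
      result = Promise.resolve(runBatch(batch.map((entry) => entry.input)));
    } catch (err) {
      result = Promise.reject(err);
    }
    result.then(
      (results) => batch.forEach((entry, i) => entry.resolve(results[i])),
      (err) => batch.forEach((entry) => entry.reject(err))
    );
  }

  return function submit(input) {
    return new Promise((resolve, reject) => {
      pending.push({ input, resolve, reject });
      if (pending.length >= maxBatchSize) {
        // Batch is full: dispatch immediately.
        flush();
      } else if (!scheduled) {
        // Otherwise dispatch once the current tick has finished queuing calls.
        scheduled = true;
        queueMicrotask(flush);
      }
    });
  };
}
```

The balance mentioned above shows up in `maxBatchSize`: larger batches mean fewer round trips, but each message carries more data and the first caller waits for the whole batch.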

This is now quite theoretical. I will do some experiments....

Hi @TobiasNickel

I added the txml worker and fxp worker versions to this branch https://github.com/tuananh/camaro/pull/123 (copied from your fork)

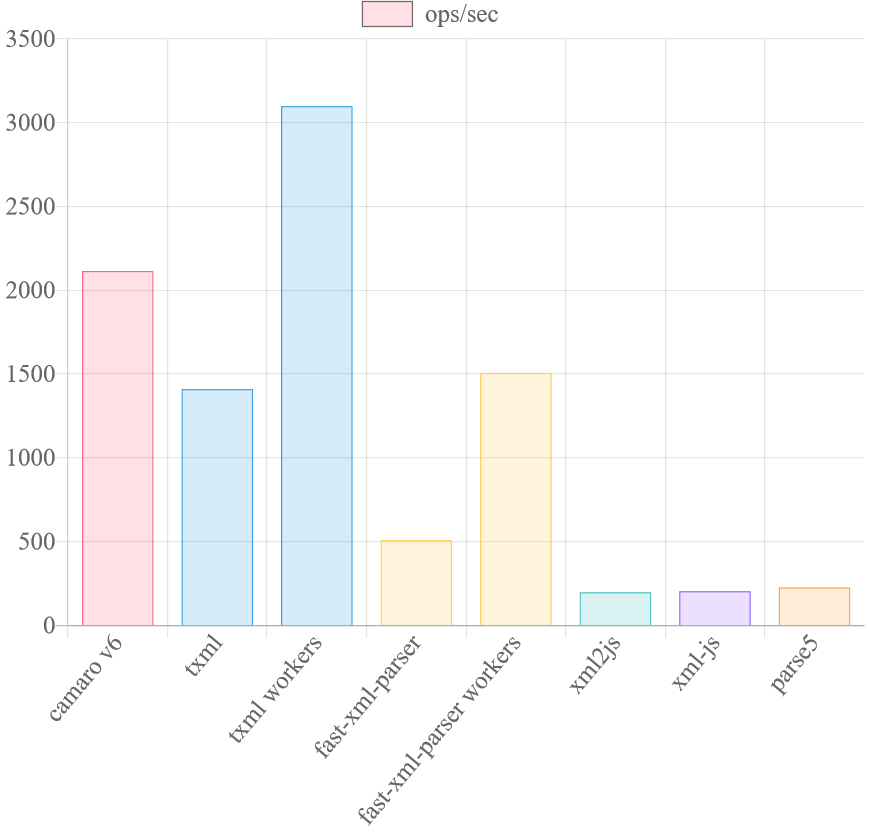

On my local machine (node v14.15.4), this is what I'm getting with a 100kb test file:

camaro v6: 2944 ops/sec

fast-xml-parser worker: 1159 ops/sec

txml worker: 2732 ops/sec

fast-xml-parser: 362 ops/sec

xml2js: 152 ops/sec

xml-js: 159 ops/sec

with a 60kb test file:

camaro v6: 4978 ops/sec

fast-xml-parser worker: 2228 ops/sec

txml worker: 5023 ops/sec

fast-xml-parser: 792 ops/sec

xml2js: 304 ops/sec

xml-js: 274 ops/sec

with a 7kb test file:

camaro v6: 12953 ops/sec

fast-xml-parser worker: 11403 ops/sec

txml worker: 19493 ops/sec

fast-xml-parser: 6803 ops/sec

xml2js: 2428 ops/sec

xml-js: 2457 ops/sec

Yes, your results look right. 👍 I think my first enthusiastic results were from a specific configuration where txml did not do all of the work.

Hi, I am the developer of txml, another XML parser for JavaScript. I was about to post an article about how txml is the fastest XML parser in JavaScript, but then I came across this project. Wow, these performance benchmarks are impressive and have hurt my feelings a little bit ;-)

So I could not resist: I had to look deeper into what makes camaro so fast. Previously I also compared txml to fast-xml-parser and found mine to be 2-3 times faster.

I saw you are using piscina and I really love this solution. I already see other use cases for it besides XML parsing. So I thought the comparison should also take into consideration that other libraries can be used with piscina as well. The results can be found in my fork: TobiasNickel/camaro

For txml in the worker, I also implemented code similar to what camaro's xpath templates are doing. I found that when returning bigger data, and not doing the extra work in the worker, the performance goes down. So the more filtering is possible inside the worker, the better.
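To illustrate why filtering inside the worker helps, here is a rough sketch that compares the serialized size of a full parse result with a filtered projection of it. The document shape and the `project` function are made up for illustration, and `v8.serialize` is used only as a proxy for the cost of moving a value between threads, since its format is compatible with the structured clone algorithm.

```javascript
const v8 = require('v8');

// Hypothetical parse result: 1000 items, each carrying fields the caller
// may not need.
const parsed = {
  items: Array.from({ length: 1000 }, (_, i) => ({
    id: i,
    title: `Item ${i}`,
    description: 'x'.repeat(200),
    tags: ['a', 'b', 'c'],
  })),
};

// Projection that would run inside the worker: keep only the requested fields.
function project(doc) {
  return doc.items.map(({ id, title }) => ({ id, title }));
}

// Byte length of each value once serialized for transfer.
const fullBytes = v8.serialize(parsed).length;
const filteredBytes = v8.serialize(project(parsed)).length;

console.log({ fullBytes, filteredBytes });
```

The smaller the value that crosses the thread boundary, the less work is spent on copying, which matches the observation that returning bigger data from the worker lowers throughput.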

I am not sure whether camaro could profit from a small if-statement outside the worker that does the parsing in the main thread when the data is small.
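Such an if-statement could look roughly like the sketch below. This is not camaro's code: `createAdaptiveParser`, `parseInline`, `parseInWorker`, and the 8 KB default are all assumptions for illustration, and a sensible cutoff would have to come from measurements.

```javascript
// Hypothetical cutoff; the right value would have to be benchmarked.
const INLINE_THRESHOLD_BYTES = 8 * 1024;

// parseInline: synchronous parser running on the main thread (assumed).
// parseInWorker: function that hands the input to a worker pool and
// returns a promise (assumed).
function createAdaptiveParser({
  parseInline,
  parseInWorker,
  threshold = INLINE_THRESHOLD_BYTES,
}) {
  return async function parse(xml) {
    // Small inputs: skip the thread hop and parse directly.
    if (Buffer.byteLength(xml, 'utf8') <= threshold) {
      return parseInline(xml);
    }
    // Large inputs: offload so the main thread stays responsive.
    return parseInWorker(xml);
  };
}
```

The returned function is always async, so callers do not need to know which path was taken.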

My world is OK again, with txml being the fastest XML parser. And I want to thank you for this great project. For people who prefer xpath, camaro is a great option, and without this project I would not have heard of piscina.