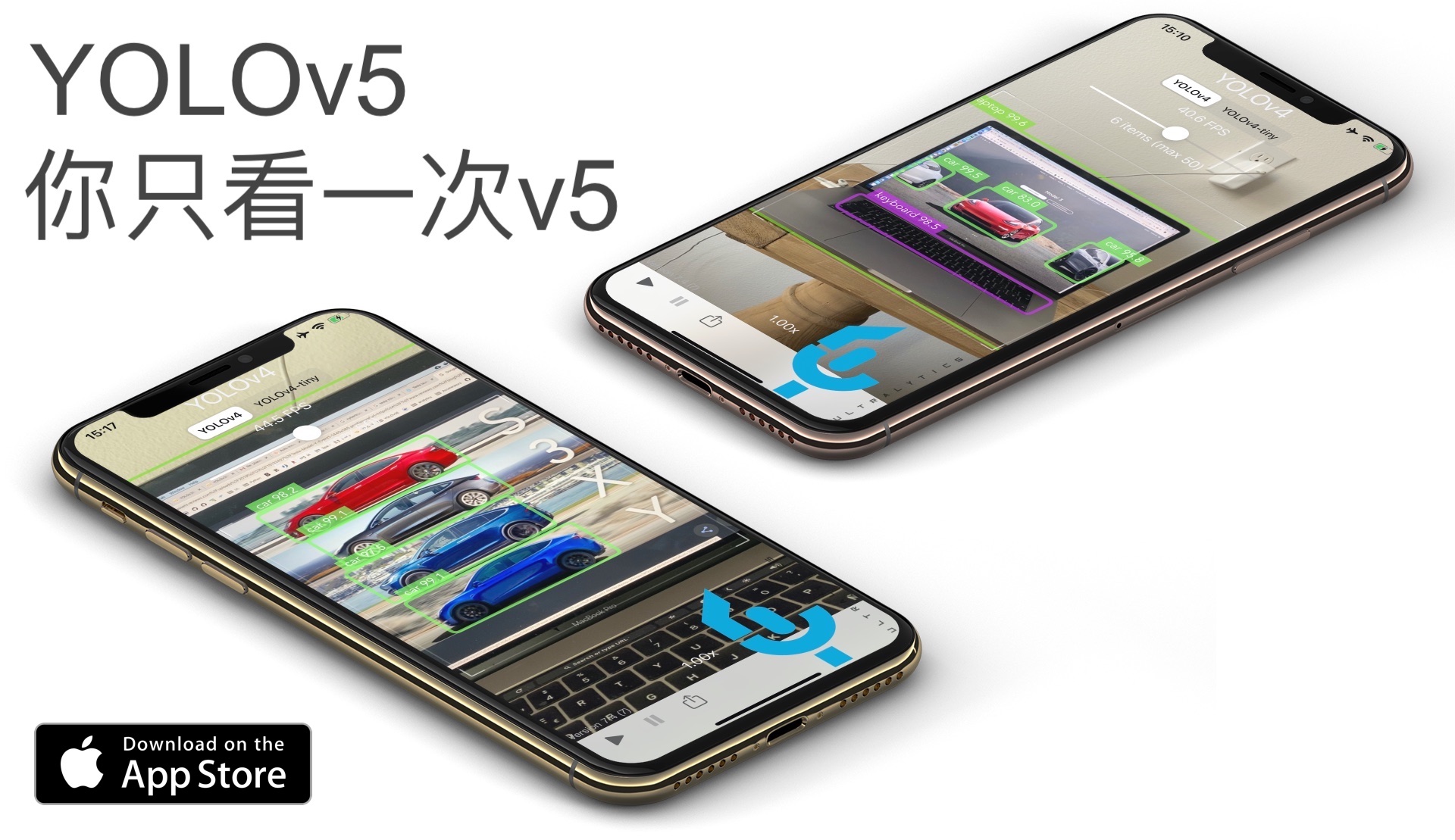

Hello @Al-Razi-KR, thank you for your interest in our work! Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

To continue with this repo, please visit our Custom Training Tutorial to get started, and see our Google Colab Notebook, Docker Image, and GCP Quickstart Guide for example environments.

If this is a bug report, please provide screenshots and minimum viable code to reproduce your issue, otherwise we can not help you.

If this is a custom model or data training question, please note that Ultralytics does not provide free personal support. As a leader in vision ML and AI, we do offer professional consulting, from simple expert advice up to delivery of fully customized, end-to-end production solutions for our clients, such as:

- Cloud-based AI systems operating on hundreds of HD video streams in realtime.

- Edge AI integrated into custom iOS and Android apps for realtime 30 FPS video inference.

- Custom data training, hyperparameter evolution, and model exportation to any destination.

For more information please visit https://www.ultralytics.com.

Input:

Output: