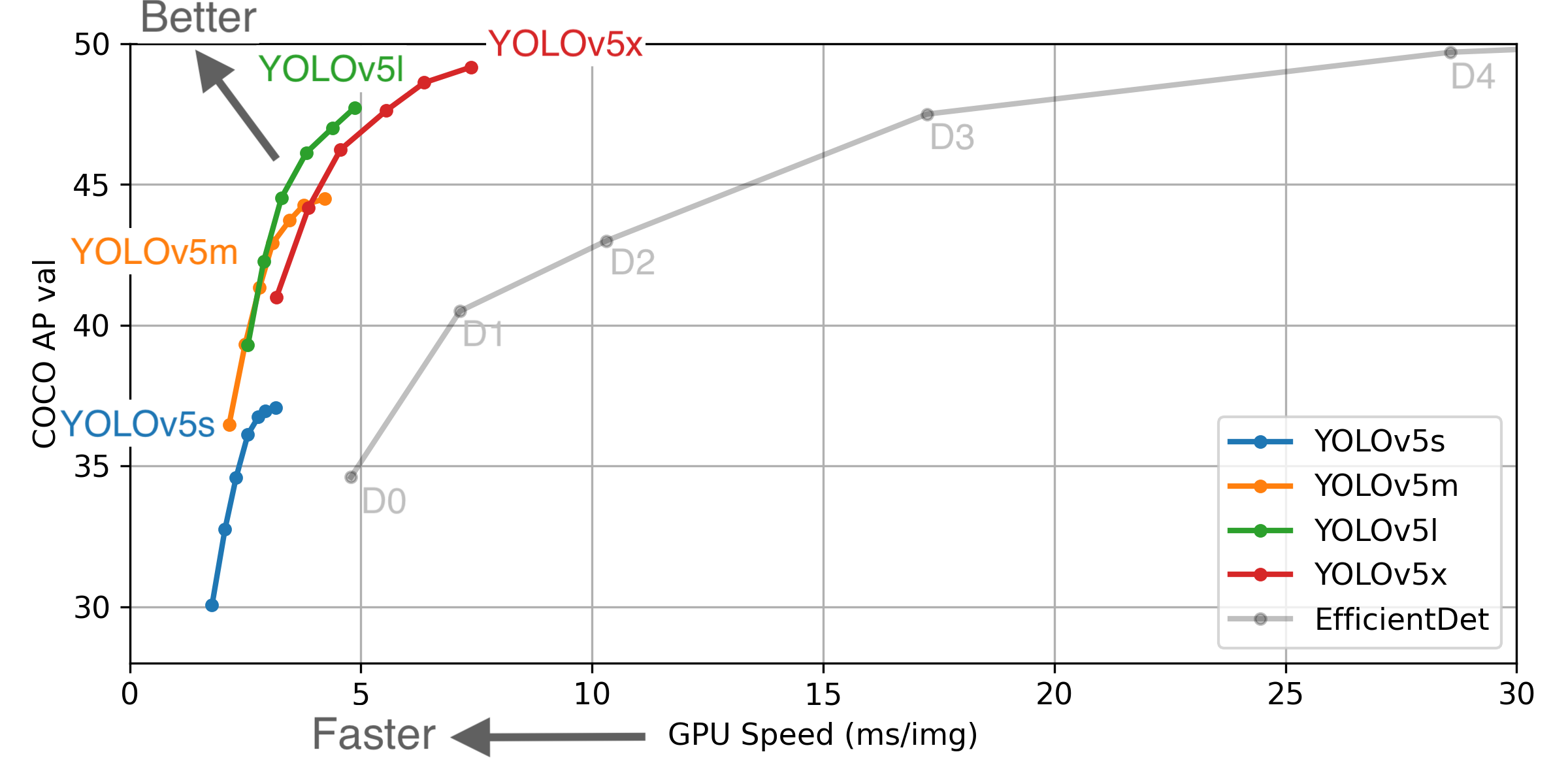

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from google/automl at batch size 8.

- August 13, 2020: v3.0 release: nn.Hardswish() activations, data autodownload, native AMP.

- July 23, 2020: v2.0 release: improved model definition, training and mAP.

- June 22, 2020: PANet updates: new heads, reduced parameters, improved speed and mAP 364fcfd.

- June 19, 2020: FP16 as new default for smaller checkpoints and faster inference d4c6674.

- June 9, 2020: CSP updates: improved speed, size, and accuracy (credit to @WongKinYiu for CSP).

- May 27, 2020: Public release. YOLOv5 models are SOTA among all known YOLO implementations.

- April 1, 2020: Start development of future compound-scaled YOLOv3/YOLOv4-based PyTorch models.

Pretrained Checkpoints

| Model | APval | APtest | AP50 | SpeedGPU | FPSGPU | params | FLOPS |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 37.0 | 37.0 | 56.2 | 2.4ms | 416 | 7.5M | 13.2B |
| YOLOv5m | 44.3 | 44.3 | 63.2 | 3.4ms | 294 | 21.8M | 39.4B |
| YOLOv5l | 47.7 | 47.7 | 66.5 | 4.4ms | 227 | 47.8M | 88.1B |
| YOLOv5x | 49.2 | 49.2 | 67.7 | 6.9ms | 145 | 89.0M | 166.4B |
| YOLOv5x + TTA | 50.8 | 50.8 | 68.9 | 25.5ms | 39 | 89.0M | 354.3B |
| YOLOv3-SPP | 45.6 | 45.5 | 65.2 | 4.5ms | 222 | 63.0M | 118.0B |

APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy.

Except for the YOLOv5x + TTA row, all AP numbers are for a single model at a single scale, without ensembling or test-time augmentation. Reproduce with `python test.py --data coco.yaml --img 640 --conf 0.001`

SpeedGPU measures end-to-end time per image averaged over 5000 COCO val2017 images using a GCP n1-standard-16 instance with one V100 GPU, and includes image preprocessing, PyTorch FP16 image inference at `--batch-size 32 --img-size 640`, postprocessing and NMS. Average NMS time included in this chart is 1-2 ms/img. Reproduce with `python test.py --data coco.yaml --img 640 --conf 0.1`

All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).

Test Time Augmentation (TTA) runs at 3 image sizes. Reproduce with `python test.py --data coco.yaml --img 832 --augment`
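As a rough sanity check on the table, the FPSGPU column looks like the reciprocal of the SpeedGPU latency. The sketch below assumes that relationship (it is not stated in the notes) and uses a hypothetical helper, `fps_from_latency`. The latencies are copied from the table, so results can differ from the listed FPS by rounding: 2.4 ms gives about 416.7 against the listed 416.

```python
# Per-image latencies in milliseconds, copied from the SpeedGPU column above.
speed_gpu_ms = {
    "YOLOv5s": 2.4,
    "YOLOv5m": 3.4,
    "YOLOv5l": 4.4,
    "YOLOv5x": 6.9,
    "YOLOv5x + TTA": 25.5,
    "YOLOv3-SPP": 4.5,
}


def fps_from_latency(ms_per_image: float) -> float:
    """Convert end-to-end latency per image (ms) to images per second.

    Assumption: FPS is simply 1000 / latency, i.e. the reciprocal of the
    per-image time that was measured at batch size 32.
    """
    if ms_per_image <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / ms_per_image


for name, ms in speed_gpu_ms.items():
    print(f"{name}: {ms} ms/img is about {fps_from_latency(ms):.1f} FPS")
```

Because the published latencies are rounded to one decimal place, the computed values should only be expected to match the table to within about one frame per second.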

For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you!

❔Question

I changed the network slightly, so I cannot use the pretrained weights. How can I train a model from scratch? I used `--weights ''`, but after training for 2 epochs I get this error: `WARNING: non-finite loss, ending training tensor([nan, nan, 0., nan], device='cuda:0')`. What can I do? Thank you for your reply!

Additional context

`WARNING: non-finite loss, ending training tensor([nan, nan, 0., nan], device='cuda:0')`
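For reference, here is my understanding of what the warning means, as a plain-Python sketch. It is not the actual check in `train.py`; `first_non_finite` is a hypothetical helper, and the list below just copies the four values printed in the warning, without assuming which loss component each one is.

```python
import math


def first_non_finite(loss_items):
    """Return the index of the first NaN or infinite value, or None if all are finite."""
    for index, value in enumerate(loss_items):
        if not math.isfinite(value):
            return index
    return None


# The four values printed in the warning above.
loss_items = [float("nan"), float("nan"), 0.0, float("nan")]

bad_index = first_non_finite(loss_items)
if bad_index is not None:
    # A training loop would normally stop here, since further updates
    # computed from a NaN loss would only spread NaNs through the weights.
    print(f"non-finite loss at component {bad_index}: {loss_items}")
```

In the printed tensor, three of the four values are NaN and only the third is a finite 0.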