The loss is decreasing, so I wonder whether the definition of the loss leads to this problem?



@lianuo

Same issue here. I changed the number of classes to 2 (background and person), and it looks like something is wrong with the class confidence during training.

@lianuo @Ricardozzf I've not tried to continue training from the official yolov3 weights. It probably won't pick up smoothly where Joseph Redmon and company left off for a number of reasons, such as the optimizer starting with no knowledge of the previous optimizer's momentum and LR. There are also a few primary differences between my training and the official darknet training:

- train.py uses the Adam optimizer in place of SGD. I could not get SGD to converge with the yolov3 learning rate.
- models.py: note that the BCEWithLogitsLoss I use produces the same loss as BinaryCrossEntropyLoss + torch.sigmoid() on the first term, but BCEWithLogitsLoss is preferable for numerical stability reasons. If you want to try to continue training from yolov3.weights, you need to use BinaryCrossEntropy or BCEWithLogitsLoss, as in the commented line below.

```python
lcls = nM * CrossEntropyLoss(pred_cls[mask], torch.argmax(tcls, 1))
# lcls = nM * BCEWithLogitsLoss2(pred_cls[mask], tcls.float())
```

@lianuo how many epochs did you train this way? If you switch to BCE, does this help?

@Ricardozzf your results don't look good. Are you training from scratch or resuming training from yolov3 weights like @lianuo? If you suspect class confidence has a problem, it must be because I've replaced BCE with CE. You can switch BCE back on by swapping the commented lines above. But also note that if you are training from scratch, you need a significant number of epochs before things start looking good. In my training I see about 0.50 mAP on the COCO2014 validation set after 40 epochs (3 days of training on a 1080 Ti).
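The equivalence mentioned above between BCEWithLogitsLoss and a sigmoid followed by binary cross-entropy, and the stability argument for the fused version, can be checked in plain Python. This is a minimal sketch of the math only; the helper names are hypothetical and this is not PyTorch's implementation. For large logits the two-step version rounds the sigmoid to exactly 1.0 and then takes log(0), while the fused form stays finite.

```python
import math

def bce_naive(logit: float, target: float) -> float:
    """Sigmoid first, then binary cross-entropy (can hit log(0) for large |logit|)."""
    p = 1.0 / (1.0 + math.exp(-logit))
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def bce_with_logits(logit: float, target: float) -> float:
    """Fused, numerically stable form: max(x, 0) - x*t + log(1 + exp(-|x|))."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))
```

For moderate logits both functions agree to floating-point precision; `bce_naive(40.0, 0.0)` raises a math domain error, whereas `bce_with_logits(40.0, 0.0)` returns 40.0.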

@glenn-jocher Thank you for the reply! I just tried resuming training from the official yolov3 weights with `optimizer = torch.optim.SGD(model.parameters(), lr=.0001, momentum=.9, weight_decay=5e-4, nesterov=True)` and switching to BCEWithLogitsLoss. Precision dropped to 0.18 and recall grew to 0.6, just like with the previous settings.

Strangely, when I run test.py with these trained weights, I still get a high score; see the screenshot:

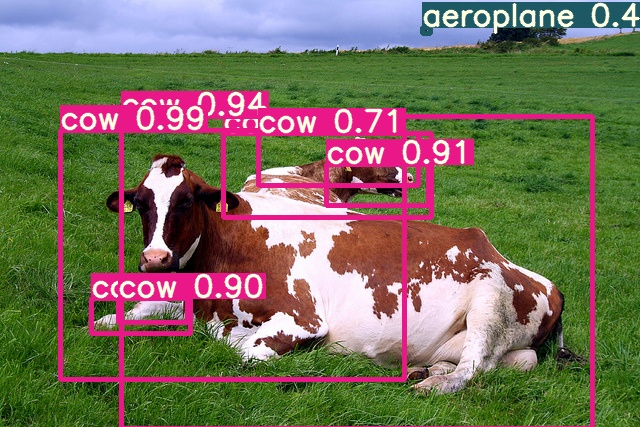

But when I run detect.py with these trained weights, the result is still not good, like this:

Is this caused by the way mAP is evaluated?

@glenn-jocher have you used the weights you trained (0.50 mAP on COCO2014) to test an image? Could you share the weights or the test results on images? It is a little strange that the score is high while the image test results are not good... Thank you so much for replying.

@Ricardozzf thanks for your information. I am not alone, haha.

@glenn-jocher thanks for your reply

I have trained the model from scratch for 14 epochs on a TITAN X. To make full use of the GPU, I changed batch_size from 12 to 16; the rest of the config is default.

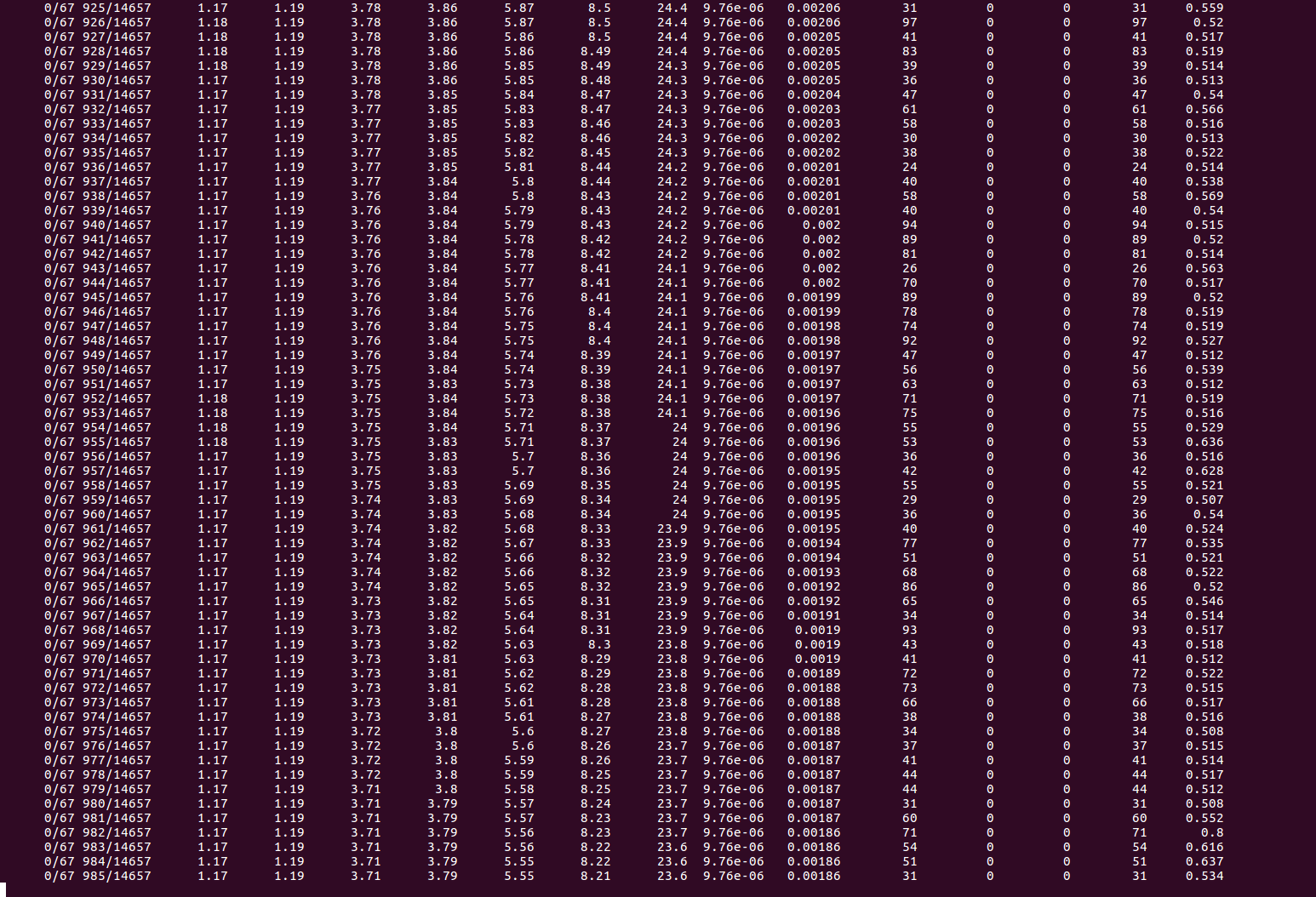

In training, the model looks good:

In testing, using the CrowdHuman dataset, the score is high.

Although the scores in training and testing are high, the results produced by detect.py are bad. One thing seems confirmed: the test score doesn't match the results of detect.py.

I hope this information is useful.

@lianuo @Ricardozzf that's a good question; I will compare my test.py and detect.py results. I am at epoch 37 training on COCO2014. If I run test.py I see this:

```
+ Sample [4998/5024] AP: 0.7528 (0.4926)
+ Sample [4999/5024] AP: 0.8333 (0.4927)
+ Sample [5000/5024] AP: 0.5543 (0.4927)
Mean Average Precision: 0.4927
```

If I then use the epoch 37 checkpoint latest.pt with detect.py, I see this on my example image, which is the same problem you guys are seeing.

I'm wondering if I caused this by switching from BCE to CE. In xView when I used this code I had to increase my -conf_thresh in detect.py to ~0.99 to reduce FP. If I increase -conf_thresh to 0.99 now (and change -nms_thresh to 0.45 to match test.py) then I get this. Better, but still not quite right.
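Since this comparison turns on -conf_thresh and -nms_thresh, here is a generic sketch of the post-processing those two knobs usually control in YOLO-style detectors: drop boxes below the confidence threshold, then greedily suppress any box whose IoU with a higher-scoring kept box exceeds the NMS threshold. It is class-agnostic plain Python written as an illustration under that assumption; detect.py's actual filtering (for example per-class NMS, or how the confidence score is formed) may differ.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner format

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def filter_and_nms(boxes: List[Box], scores: List[float],
                   conf_thresh: float = 0.5, nms_thresh: float = 0.45) -> List[int]:
    """Return indices of kept boxes: confidence filter, then greedy NMS."""
    # 1) Drop low-confidence candidates (the role of -conf_thresh).
    candidates = [i for i, s in enumerate(scores) if s >= conf_thresh]
    # 2) Visit the survivors from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # 3) Keep a box only if it overlaps no already-kept box by more than nms_thresh.
        if all(iou(boxes[i], boxes[k]) <= nms_thresh for k in keep):
            keep.append(i)
    return keep
```

Raising conf_thresh shrinks the candidate list before NMS runs at all, which is why it cuts false positives so directly; lowering nms_thresh suppresses more of the overlapping boxes that survive.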

This is a bit of an apples-to-oranges comparison, though. The official weights are at 160 epochs and my latest.pt is only at 37 epochs, so it's possible that training up to 160 will resolve this problem.

I don't understand why test.py is producing such a high mAP though, especially since it uses a very low -conf_thresh of 0.5. You guys are right, there is an unresolved issue somewhere. I will try and investigate more. The problem seems twofold:

test.py is possibly over-reporting mAP on trained checkpoints, even though it correctly reports mAP on the official YOLOv3 weights, an odd inconsistency. This seems to be the easiest issue to resolve, so I'll look at this first. Any ideas are appreciated as well!

@lianuo @Ricardozzf the overly-high mAPs you were seeing before should be partly fixed in the latest commits, which fixed mAP calculations (see issue #7). The official weights now produce .57 mAP, but the trained weights that before gave me 0.50 mAP now return about 0.13 mAP, much more in line with the poor boxes you see in your images.

I still don't understand the actual cause of the poor training results however.

@glenn-jocher Thank you for reply~

@glenn-jocher the loss still decreases during training; do you think the loss function needs to be modified?

No warm-up process found for SGD. According to the YOLO9000 paper and the official code, we need to warm up over the first 1000 iterations to make training converge better: `warmup_lr = lr * batch_size / burn_in`, where lr = 1e-3, batch_size = 64 and burn_in = 1000.

@glenn-jocher The usage of CrossEntropyLoss might be incorrect. The input shape is (nB, nA, nG, nG, nC), but the PyTorch docs expect (nB, nC, ...). (See https://github.com/ultralytics/yolov3/blob/9514e7443891ab2eaa106292a041cb0b8770f7c3/models.py#L115) Besides, torch.argmax(tcls, 1) takes the argmax over dim=1, but the shape of tcls is actually (nB, nA, nG, nG, nC). Maybe we need to permute the dims so that C is at dim=1.

@xyutao I looked into the CELoss function, I think this part is ok. When I start training and debug this spot, the dimensions look good (assuming nC = 80 and assuming we have 47 targets here in the first batch of nB=12 images). I think mask is eliminating all the other dimensions:

```
tcls.shape
Out[2]: torch.Size([12, 3, 13, 13, 80])

tcls = tcls[mask]
tcls.shape
Out[3]: torch.Size([47, 80])

lcls = nM * CrossEntropyLoss(pred_cls[mask], torch.argmax(tcls, 1))
Out[4]: tensor(206.37325, grad_fn=<MulBackward1>)

pred_cls[mask].shape
Out[5]: torch.Size([47, 80])

torch.argmax(tcls, 1).shape
Out[6]: torch.Size([47])
```

Your comment on SGD warm-up, however, is a good catch! I linked to it; issue #4 is open on this. By the first 1000 iterations, do you mean the first 1000 batches?

@glenn-jocher Yeah, the first 1000 batches of batch_size=64.
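Taken literally, `warmup_lr = lr * batch_size / burn_in` with a fixed batch_size of 64 would give a constant rate, so the intent is presumably the current batch index. As far as I know, darknet's burn-in multiplies the base rate by (batch_index / burn_in) raised to a `power` setting, which defaults to 4 in darknet's config parser when the cfg does not set it. The sketch below assumes that form; treat the power value as an assumption to verify against the darknet source, and the function name as hypothetical.

```python
def burn_in_lr(batch_index: int, base_lr: float = 1e-3,
               burn_in: int = 1000, power: float = 4.0) -> float:
    """Warm-up learning rate, assuming darknet-style burn-in.

    For the first `burn_in` batches: base_lr * (batch_index / burn_in) ** power.
    Afterwards the plain base_lr is returned (any later step decay is ignored here).
    """
    if batch_index < burn_in:
        return base_lr * (batch_index / burn_in) ** power
    return base_lr
```

In a PyTorch training loop, the returned value would typically be written into the `'lr'` entry of each of `optimizer.param_groups` before calling `optimizer.step()`.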

Please help, I have the same error. Did you guys solve this problem? Thanks!

@lianuo Hi, just wondering how you loaded pretrained weights. Did you add these lines of code to train.py?

```python
# Initialize model
model = Darknet(opt.cfg, opt.img_size)
model.load_weights(opt.weights_path)
```

@lianuo I found out from detect.py that you added this line:

```python
load_weights(model, weights_path)
```

But now I'm getting a different error from datasets.py:

Have you encountered this problem? If so, how did you deal with it?

@jaelim you resume training from a trained model (i.e. latest.pt) by setting opt.resume = True:

https://github.com/ultralytics/yolov3/blob/68de92f1a118ddc2f5b117c6421bc4827c6c9f1f/train.py#L50-L53

If you are seeing the error you mentioned, it is because you failed to define a proper path to an image or image folder in detect.py line 14 (no images are loaded). Make sure there are only image files in the path if you specify a path. Also, please do not ask questions unrelated to the main issue title in this thread. https://github.com/ultralytics/yolov3/blob/68de92f1a118ddc2f5b117c6421bc4827c6c9f1f/detect.py#L14

@xyutao I've switched from Adam to SGD with burn-in (which exponentially ramps up the learning rate from 0 to 0.001 over the first 1000 iterations) in commit a722601:

Unfortunately this caused width and height loss terms to diverge when training from scratch. I saw that these are the only unbounded outputs of the network (all the rest are sigmoided), so I was forced to sigmoid them and create new width and height calculations, after which the training converged. The original and updated ones I made in this commit are:

https://github.com/ultralytics/yolov3/blob/a722601ef61149cc9e5135f58c762310627c970a/models.py#L121-L131

If I plot both of these in MATLAB, it looks like the lack of a ceiling in the original code is causing the divergence problem. It may be that the original width/height equations are incorrect. Does anyone know where to find the original darknet width and height calculations?

```matlab
>> x=linspace(-3,3);
>> y1 = exp(x);
>> y2 = ((logsig(x) * 2).^2);
>> fig; plot(x,y1,'.-'); plot(x,y2,'.-'); h=gca; h.YLim=[0,5]; legend('original','updated'); xyzlabel('network output','anchor width multiple'); fcnfontsize(14)
```
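The same comparison as the MATLAB snippet, sketched in Python (MATLAB's `logsig` is the logistic sigmoid). The original form scales the anchor by exp(t), which has no upper bound, while the updated form (2 * sigmoid(t))**2 is capped below 4x the anchor size. Both map t = 0 to exactly 1x the anchor. Function names are illustrative only.

```python
import math

def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))

def wh_original(t: float) -> float:
    """Original form: anchor multiple = exp(t), unbounded above."""
    return math.exp(t)

def wh_updated(t: float) -> float:
    """Updated curve from the plot above: (2 * sigmoid(t))**2, bounded in (0, 4)."""
    return (2.0 * sigmoid(t)) ** 2

# Print both anchor multiples for a few raw network outputs.
for t in (-3.0, 0.0, 3.0, 10.0):
    print(f"t={t:5.1f}  exp={wh_original(t):10.3f}  (2*sig)^2={wh_updated(t):6.3f}")
```

The ceiling is what keeps a single bad gradient step from producing an enormous box, at the cost of never predicting boxes more than 4x the anchor in width or height.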

@lianuo @Ricardozzf @xyutao @CF2220160244 @jaelim I have good news. A significant bug in the loss function was found today in issue #12, namely a problem size_average-ing the various loss terms. This caused the lconf_obj term to be 80 times too large (80 = COCO class count), which caused the network to over-detect objects, which I believe was the major problem many of you saw in your training.

I fixed this in commit cf9b4cfa5226c103cfd6e950fc789a6e45a96584, and after the change observed that SGD with burn-in now converges with the original YOLO width/height calculations, so I restored those in commit 5d402ad31a53a44fd7dda46c75bb1dc43df9923b.

Update: Sorry guys I think I might have spoken too soon. The changes help, but resuming training from yolov3.pt still causes P and R to drop from initially high values to lower values after ~50 batches. I think we are getting closer to the source of the problem however, which I feel is in the model loss term somewhere. TODO: I also need to ignore non-best anchors with > 0.50 iou to match yolov3.
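The YOLOv3 paper describes the ignore rule this way: if a bounding-box prior is not the best match for a ground-truth object but overlaps it by more than a threshold (0.5 in the paper), its prediction is ignored rather than pushed toward objectness 0. A minimal sketch, assuming overlap is measured as width/height IoU between each anchor and the target (boxes aligned at a common center), which is how several PyTorch ports approximate it; darknet itself compares predicted boxes against all ground truths, so treat this as an approximation with hypothetical names.

```python
from typing import List, Tuple

WH = Tuple[float, float]  # (width, height)

def wh_iou(a: WH, b: WH) -> float:
    """IoU of two boxes that share the same center (only width/height matter)."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union

def anchor_roles(anchors: List[WH], target: WH, ignore_thresh: float = 0.5) -> List[str]:
    """Label each anchor for one target.

    'best'     -> the anchor that receives the positive box/class/objectness targets
    'ignore'   -> non-best anchor with IoU > ignore_thresh (no objectness loss)
    'negative' -> everything else (trained toward objectness 0)
    """
    ious = [wh_iou(a, target) for a in anchors]
    best = max(range(len(anchors)), key=lambda i: ious[i])
    roles = []
    for i, v in enumerate(ious):
        if i == best:
            roles.append("best")
        elif v > ignore_thresh:
            roles.append("ignore")
        else:
            roles.append("negative")
    return roles
```

For example, with anchors (10, 13), (16, 30), (33, 23) and a 20x28 target, the middle anchor is best and the third (IoU of about 0.54) is ignored instead of being penalized as background.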

@glenn-jocher Great work, you are approaching the truth~ Recently I tested Andy's solution; it can resume training from the original weights while maintaining the high precision and recall. Maybe you can find something useful in his code: https://github.com/andy-yun/pytorch-0.4-yolov3

@lianuo yes this is the ultimate test. If the repo loss terms are perfectly aligned to darknet then the P and R terms should not degrade once you continue training from the official weights. More work to do, but I think it's getting closer.

@lianuo Andy-yun has a very different loss function. This would be easy to implement, but I don't understand several parts of it, which seem incorrect. I've raised an issue on his repo to get some answers, such as the 3 questions below. By the way, even if the loss is perfectly equal to darknet, if the learning rate is not perfectly aligned from darknet epoch 160, P and R will start to drop, so we need to know exactly the darknet learning rate at epoch 160 (UPDATE: see issue #18, it should be impossible to resume training with no P and R loss as final darknet lr = 0).

https://github.com/andy-yun/pytorch-0.4-yolov3/issues/22

1. I think you should use BCELoss for loss_cls, as the YOLOv3 paper section 2.2 clearly states "During training we use binary cross-entropy loss for the class predictions."
2. Why is MSELoss used in place of BCELoss for loss_conf? Did you make this choice yourself or did you see this in darknet?
3. Why divide loss_coord by 2?

https://github.com/andy-yun/pytorch-0.4-yolov3/blob/master/yolo_layer.py#L161-L164

```python
loss_coord = nn.MSELoss(size_average=False)(coord*coord_mask, tcoord*coord_mask)/2
loss_conf = nn.MSELoss(size_average=False)(conf*conf_mask, tconf*conf_mask)
loss_cls = nn.CrossEntropyLoss(size_average=False)(cls, tcls) if cls.size(0) > 0 else 0
loss = loss_coord + loss_conf + loss_cls
```

So is the training still not figured out? By the way, is it really possible to train from scratch without pretrained ImageNet weights?

@TreB1eN Sure. The final mAP with weights trained from scratch (416x416, 160 epochs) is 55.0, a bit lower than yolov3.weights (416x416, 55.3). That's my training result.

@libzzluo so you trained COCO 2014 from scratch with this repo? This is wonderful news!! I haven't had time to get to 160 epochs so I wasn't sure if the training code was mature or not (mostly was unsure about the loss function and the optimizer).

Do you know which exact commit you used to achieve these results (and did you make any changes to get it to work?) Thanks!!

@libzzluo Hi, did you train from scratch to achieve this result? What did you change? I am getting weird results, see https://github.com/ultralytics/yolov3/issues/22. Thank you in advance!

All, I trained to 60 epochs using the current setup. I used batch size 16 for the first 24 hours, then reverted to batch size 12 accidentally for the rest (hence the nonlinearity at epoch 10). A strange hiccup happened at epoch 40, then learning rate dropped from 1e-3 to 1e-4 at epoch 51 as part of the LR scheduler. This seemed to produce much accelerated improvements in recall during the last ten epochs. The test mAP at epoch 55 was 0.40 with conf_thresh = 0.10, so I feel if I continued training until perhaps epoch 100 we might get a very good mAP, especially seeing the Recall improving so well during epochs 51-60.

The strange thing is that I had to lower conf_thresh to 0.10 to get this good (0.40) mAP, otherwise I see 0.20 mAP at the default conf_thresh = 0.50. I am going to restart training with a constant 16 batchsize, and hopefully the epoch 40 hiccup does not repeat.

@glenn-jocher Interesting. Is this with the default COCO mAP calculation or with the one in the repository? Because they have quite a large difference.

@glenn-jocher By the way - is this with the BCE or CE for the loss functions (BCE being equivalent to original implementation)? Are there any other architectural changes?

@nirbenz This is with one BCE loss for all anchors. I'm surprised this works, since nearly all anchors are 0, with only a few 1's, but this seems to be how the darknet loss function is, since resuming training works well like this.

The mAPs are calculated from test.py in this repo. The test.py code is closer to the official code now, after I made some changes about 2 months ago. The official weights produce 0.57 mAP using test.py (at a 0.5 conf_thresh). I'm going to retrain on GCP for about 100 epochs and see where the mAP goes. Should take about a week or so.

@glenn-jocher Why are you training from random weights? Darknet initially loads darknet53 weights, then the training starts (refer #6)

@okanlv yes you are right, I should try training from darknet53 weights. I've downloaded the weights, but I don't have a simple way to load them into the randomly-initialized yolov3 model right now... have you done this before? I can try and develop a new function to handle this if not.

@glenn-jocher Yes, I have implemented it. I can send a pull request if you wish.

UPDATE: I see the existing load_weights() function works fine in models.py for darknet53, I just need to implement a smart cutoff so it doesn't attempt to load layers past 74 if presented with a darknet53.conv.74 weights file. I'll implement this in a new commit. Ok, I've added a few lines to train.py to find and load darknet53 weights if not resuming training. I'll start this on GCP and see how it goes:

https://github.com/ultralytics/yolov3/blob/741626c55bbbc13436a2e49e8b3e1dbd5711fbd0/train.py#L76-L80

@glenn-jocher Great, waiting for the training results

@glenn-jocher since your mAP code is still a bit different from MS-COCO code (which among other things takes object sizes into account) I wonder if you (or anyone else) tested this repository's results against the pycocotools test code.

@okanlv GOOD NEWS! I tested the first 10 epochs with randomly vs. darknet53.conv.74-initialized weights, and the darknet53.conv.74 initialization produces much better results. I will continue training the darknet53.conv.74-initialized version up to 68 epochs over the coming week to see how it does. The latest commit automatically initializes yolov3 with darknet53.conv.74 when training from scratch.

@nirbenz yes, issue #9 had someone run the official COCO mAP code on this repo, but I was not able to get a pull request from him to update the repo. https://github.com/ultralytics/yolov3/issues/9#issuecomment-420953072

Well then. I get these numbers using the official COCO SDK:

608:

```
Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.326
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.571
Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.335
Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.189
Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.354
Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.430
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.278
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.416
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.434
Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.276
Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.459
Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.551
```

416:

```
Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.308
Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.543
Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.311
Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.081
Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.285
Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.443
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.264
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.393
Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.409
Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.161
Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.404
Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.536
```

I will PR the code used to make this once I clean it up a bit.

@glenn-jocher Hi! I tried darknet53.conv.74-initialized weights for training, but TP and FP gradually dropped to 0 after hundreds of iterations.

@DeepPower yes, this is normal. The first 1000 batches are burn-in (see https://github.com/ultralytics/yolov3/issues/2#issuecomment-423247313), during which the LR slowly ramps from 0 to its full initial value of 1E-3. After batch 1000, training proceeds normally, and you should see TPs start to appear in epoch 1 and increase steadily from there onward. The majority of epoch 0 shows almost no TPs (this is normal). Remember that full training may take 70 or more epochs (at least a week of COCO training on a 1080Ti).

@nirbenz those are very close to the official values! I get 0.57 mAP on yolov3.pt using test.py with the default parameters confidence=0.50, nms=0.45, iou=0.5. BUT I noticed that reducing the confidence threshold massively helps the test.py mAP. I found that checkpoints trained using this repo usually show the highest mAP around confidence = 0.10. It would be really cool to plot mAP vs. confidence threshold.

@glenn-jocher Yes, they are! Good news indeed. I have also noticed that different implementations require different tweaking of thresholds. The Keras YOLOv3 implementation also requires a different threshold to get equivalent results to the Darknet one.

@glenn-jocher I have finished training the darknet53.conv.74-initialized version to 68 epochs, but the mAP of latest.pt is only 0.2387 using test.py. Here are the loss curves.

How is your result?


@DeepPower Have you trained on your own dataset with this code? I'm running into trouble training on my data: precision and recall stay low and never change.

@DeepPower thanks for the feedback. You need to vary conf_thresh to get the best mAP. Usually I test values between 0.01 - 0.50. In the current repo the best value seems to be around 0.1 - 0.2. For example if you run this you should get a higher mAP, around 0.40 I think (but yes still lower than what we want): python3 test.py -img_size 416 -weights_path weights/latest.pt -conf_thres 0.10
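One way to see why lowering conf_thresh can raise the reported mAP: AP is the area under the precision-recall curve built only from detections that survive the threshold, so a high threshold truncates the curve at a lower recall. Below is a toy sketch with made-up scores (not COCO data) using all-point interpolated AP; it is a generic illustration and not necessarily identical to test.py's computation.

```python
from typing import List

def average_precision(scores: List[float], is_tp: List[bool], n_gt: int,
                      conf_thresh: float) -> float:
    """All-point interpolated AP using only detections with score >= conf_thresh."""
    # Keep detections above the threshold, highest score first.
    dets = sorted((d for d in zip(scores, is_tp) if d[0] >= conf_thresh),
                  key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recall: List[float] = []
    precision: List[float] = []
    for _, hit in dets:
        if hit:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_gt)
        precision.append(tp / (tp + fp))
    # Pad the curve, then replace each precision by the max precision to its right.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the stepped curve: only points where recall increases contribute.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))

# Toy detections for one class with 4 ground-truth objects (made-up values).
scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.2]
is_tp = [True, True, False, True, True, False]
for thresh in (0.5, 0.1):
    print(thresh, average_precision(scores, is_tp, n_gt=4, conf_thresh=thresh))
```

In this toy case AP is 0.5 at a 0.5 threshold versus roughly 0.9 at 0.1. The trailing false positive at score 0.2 does not lower AP, which is why very low thresholds rarely hurt this metric even though they would clutter detect.py output.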

My results look like this, comparing random initialization vs darknet53.conv.74 initialization. Your results look much smoother than mine. My training is on GCP preemptible instances which stop every 24 hours, or about every 10 epochs. I think this is causing spikes in my losses, which is very frustrating because theoretically the training should resume with no breaks at all (the model and the optimizer states are both saved and then replaced, so I don't understand my spikes... possibly a pytorch issue).

mAP is 0.42 at conf_thresh = 0.20 at epoch 80. I will start some multiscale training here.

@sporterman Sorry, I haven't trained on my own dataset.

@glenn-jocher Thanks for your reply and great work. I varied conf_thresh to 0.2, and mAP is 0.41 at epoch 68. There are still some problems that we need to solve.

@DeepPower yes, unfortunately training performance is still not as good as darknet's. I tried a few epochs of multi_scale training after epoch 80 and this did not seem to help. I've tried to align everything as closely as possible to darknet, so for example if you resume training from the official yolov3.pt weights, the P and R values are very steady (though still dropping slightly over time). This makes me think the loss function is correct, or at least very close to the original darknet loss function. Inference works well, so the problem cannot be there; it must be in the training-only code, which could be the optimizer, LR scheduler, loss function, target-building functions, IoU function, augmentation function...

@nirbenz @okanlv @DeepPower @xiao1228 Good news, I think. I thought about the problem a bit and decided that the loss terms needed rebalancing. In my last plot you can see Classification is consuming the great majority of the loss, which means that it is being optimised at the expense of all the other losses. Ideally the 6 losses would be roughly equal in magnitude so that they are all optimised with equal priority.

So I made a commit that multiplied Objectness loss by 10, and divided Classification loss by 10: https://github.com/ultralytics/yolov3/blob/e04bb75ff1a77dc3d62f06d85d0a98cb9eb024a7/models.py#L166-L176

I ran this for most of the day on GCP, and after about 10 epochs I overlaid the 3 different trainings I'd done. This new approach seems vastly better, in particular at increasing Recall compared to before. I thought this was exciting enough to post the news right away; I'll have to train for another week to get to 70+ epochs and see the true effect. I'm wondering if there isn't a better way to balance these 6 equally important loss terms more automatically. They seem roughly equal now after 10 epochs, but maybe there's a way to update the balancing terms every epoch using the previous epoch's gains. Any ideas?
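A minimal sketch of the idea floated here, assuming the gains are recomputed once per epoch so that each weighted term matches the mean of the previous epoch's average term values. This is a hypothetical scheme, not something the repo implements; the six term names are placeholders for the losses in the plots.

```python
from typing import Dict

def rebalance_gains(prev_epoch_means: Dict[str, float],
                    eps: float = 1e-12) -> Dict[str, float]:
    """Hypothetical per-epoch gains: gain_k = mean(all terms) / term_k.

    With these gains, gain_k * term_k is equal for every k, based on the
    previous epoch's average value of each loss term.
    """
    target = sum(prev_epoch_means.values()) / len(prev_epoch_means)
    return {k: target / max(v, eps) for k, v in prev_epoch_means.items()}

def weighted_total(losses: Dict[str, float], gains: Dict[str, float]) -> float:
    """Total loss as a sum of gain-weighted terms (fixed gains such as x10 / ÷10 also fit)."""
    return sum(gains[k] * v for k, v in losses.items())
```

In PyTorch the gains would be computed from detached float values (for example via `loss.item()`), so they act as constants during backpropagation; the fixed x10 objectness / ÷10 classification change is just a hand-picked instance of the same `weighted_total`.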

UPDATE 1: mAP is 0.43 (-conf_thresh 0.20) at epoch 20. Updated plots below (green).

UPDATE 2: mAP is 0.46 (-conf_thresh 0.20) at epoch 35. Updated plots below (green).

UPDATE 3: mAP is 0.46 (-conf_thresh 0.30) at epoch 49 :( Jumps in loss were observed during training, possibly due to many restarts of the preemptible GCP VM. New commit 45c55677239a8c65caed83cc0025a84a06b490a5 runs test.py after each training epoch and records training mAP to results.txt. Starting new training from scratch using PyTorch 1.0 on GCP. Will post a new comment when new results start coming in.

Thanks for your improvements to this YOLOv3 implementation. I have just tested the training and ran into a problem. I followed these steps:

4. I saved the weights at precision 0.2 and ran detect.py; the result looks like this:

If I do not train, the original weights give this result:

I do not know whether I used the wrong parameters or something else is causing so many bboxes to be generated. Could you give me some suggestions? Thank you~