@logic03 you're probably going to want a larger dataset. There's not much point evolving hyperparameters to a tiny dataset.

Closed glenn-jocher closed 3 years ago

@logic03 you're probably going to want a larger dataset. There's not much point evolving hyperparameters to a tiny dataset.

@logic03 you're probably going to want a larger dataset. There's not much point evolving hyperparameters to a tiny dataset.

But I only have one category of pedestrians.At the beginning, I used INRIA pedestrian data set (only 614 images in the training set), and I found that the mAP calculated could reach 0.9. However, when I detected other pedestrian videos, there were many omissions, which I thought was because the data set was too small and the generalization ability was not strong enough.Later, I used the Hong Kong Chinese pedestrian data set, and the mAP was only 0.8, but it worked well in detecting my own pedestrian video.So I tried to put the two data sets together, and the mAP was 0.85, which was not as good as the second case when detecting the video.So I wonder if it has something to do with the super parameters, because when I trained the test before, the super parameters were the same default initial value.Have you ever encountered this situation?

@logic03 well, for generalizability, the larger and more varied the dataset the better. On the plus side, if your dataset is small, training and evolving will be faster.

So what is the approximate number of times I'm going to evolve when I use the hyperparametric evolution mechanism?

@logic03 well, for generalizability, the larger and more varied the dataset the better. On the plus side, if your dataset is small, training and evolving will be faster.

May I ask if this hyperparametric evolution mechanism is based on the strategy proposed in this paper? 《Population Based Training of Neural Networks》 Max Jaderberg, Valentin Dalibard, Simon Osindero, Wojciech M. Czarnecki, Jeff Donahue, Ali Razavi, Oriol Vinyals, Tim Green, Iain Dunning, Karen Simonyan, Chrisantha Fernando, Koray Kavukcuoglu (Submitted on 27 Nov 2017 (v1), last revised 28 Nov 2017 (this version, v2))

@logic03 it's a simple mutation-based genetic algorithm: https://en.wikipedia.org/wiki/Genetic_algorithm

300 generations is a rough number to try to run, though of course if you see no changes after a certain point it's likely settled into a minimum.

Hi,

I wanted to evolve the hyperparameters after getting rid of hsv_* in the hyp dictionary (they don't make sense in my non-RGB data). I was looking into train.py and found this line:

hyp[k] = x[i + 7] * v[i] # mutate

Is this 7 a magic number so hyp and x elements do match?

EDIT: Nevermind, I'm guessing now it is because x is a line read from evolve.txt and its first 6 values correspond to some extra metainfo other than hyperparameters.

@aclapes yes that's correct! There are 7 values (3 losses and 4 metrics) followed by the hyperparameter vector in each row of evolve.txt.

PR's are welcome, thank you!

Hello, i wanted to evolve my hyp, in python3 train.py --data data/coco.data --weights '' --img-size 320 --epochs 1 --batch-size 64 -- accumulate 1 --evolve, which epochs in should set?

And how can i use xy and wh loss?

@joey1616 the more --epochs the more your results will correlate to full training results, but of course the longer everything will take also. There is no right answer, but we've been using about 10% of full training as a good proxy for full training results.

If you look at most training results you will see a corner in the mAP. Before this corner the results can be pretty uncertain and don't mean quite as much, but in general once mAP turns this corner its a fairly straight slope for the rest of training, which means your results there are a very good indicator of your final results. For the coco trainings below this happens around 50-60 epochs.

@joey1616 oh and individual xywh losses are deprecated. GIoU is a single loss that regressses the bounding boxes.

I noticed there is another type of corner also, that shows up earlier, at 8 or 9 epochs. Perhaps for COCO we could get away with evolving only to this level. That would save a lot of time compared to 30 or 60 epochs...

Thank you very much and my cls loss is very small,it is about 2.19e-07 in evolve when map@0.5 =0.175.Can i use this cls loss in my train?

@joey1616 I don't understand your question. cls loss should not normally be that small.

@joey1616 I don't understand your question. cls loss should not normally be that small.

Sorry,i mean that my cls loss gain is about 2.19e-07,and it was generated in evolution.It's too small so I don't know if this parameter should be used.

If it produces a high mAP then use it, if not don't.

Hi, I set the epoch as 20 and evolve for 10 times.

I run like this

And then I get result as:

I wonder how to pick the best hyper-parameters.

Thank you very much.

I wonder how to pick the best hyper-parameters.

Thank you very much.

@ChristopherSTAN blue dot is your best result. It will be the first row in evolve.txt.

Hi, I know this question is repetitive. But I need some assistance. How do these parameters in darknet framework map with ultralytics hyperparameters? From the above, this is the information I gathered. Please do correct me. I need help with the parameters indicated as "?" Thank you so much!

darknet: ultralytics

batch: batch-size(input_param)

subdivisions: ?

width: img-size(input_param)

height: img-size(input_param)

channels: ?

momentum: momentum

decay: weight_decay

angle: degrees

saturation: hsv_s

exposure: ?

hue: hsv_h

learning_rate: lr_0

burn_in: (use lr_1)

max_batches: ?

policy: ?

steps: ?

scales: scale

Hello, which 'evolve.txt' file should we use to get higher mAP? can we create our own file?

@taylan24 evolve.txt files are created during hyperparameter evolution, they store the results of each generation.

Hello, are the values of the best result from the same combination?

I am trying to tune the hyper parameters for my custom data set. Using the evolve option, I get the following results. However, the fitness is too small and the precision and recall are always zero. Can you tell me any way to increase the fitness (and mAP)

@ankitahumne evolution always starts from a beginning state. You should ensure your beginning state shows acceptable performance before starting the process. In other words, garbage in, garbage out.

@glenn-jocher Thanks, that would save a lot of time. But to arrive at decent hyper parameters, trail and error is the only way? Also, my precision and recall are always zero. It doesnt seem normal. Any hints to improve that?

@ankitahumne these are two separate topics. Before doing any evolution you need to train normally with default settings and make sure you see at least a minimum level of performance. If not you have a problem.

@glenn-jocher I did try with the default settings and that was pretty bad, so I am using --evolve to tune the hyperparameters. The results are slightly better in terms of P,R,mAP and F1. Looks like that's the way to proceed then - keep tuning hyperparameters. I tried a mix of changing hyp, multiscale, conf-thres, iou-thresh .

@glenn-jocher:

I would like to ask you about the purpose of this topic.

First of all, the default hypeparameters are set inside the train.py file.

If we want to tune the hyperparameters efficiently for our custom dataset. We need to specify the option --evolve ? And then the hyperameters are automatically adjusted during the training? Correct me if I am wrong. Thank you so much.

@leviethung2103 hyps are static while training. --evolve with evolve them over many trainings.

This is the result on training on a custom data with evolve. The values I get at the end of evolution are the same as the start. Is this normal? Doesn't look so to me :/ @glenn-jocher

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

@glenn-jocher Two video cards 16g, epochs set to 300, evolve iteration to 200, the result exceeds the GPU memory, how to solve this?

@glenn-jocher epochs set to 30, evolve iteration to 200, The following error:

Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

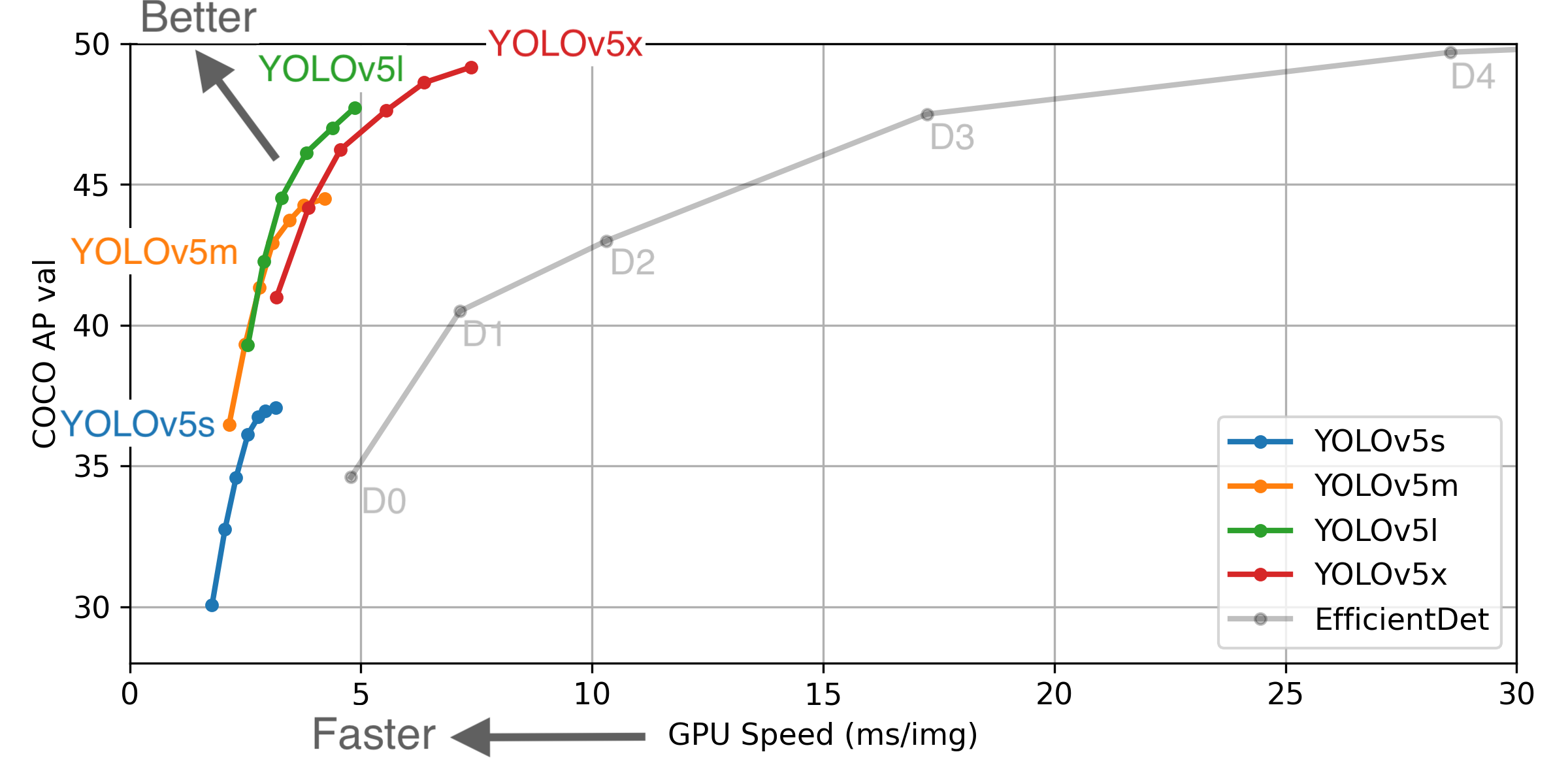

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from google/automl at batch size 8.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from google/automl at batch size 8.

| Model | APval | APtest | AP50 | SpeedGPU | FPSGPU | params | FLOPS | |

|---|---|---|---|---|---|---|---|---|

| YOLOv5s | 37.0 | 37.0 | 56.2 | 2.4ms | 416 | 7.5M | 13.2B | |

| YOLOv5m | 44.3 | 44.3 | 63.2 | 3.4ms | 294 | 21.8M | 39.4B | |

| YOLOv5l | 47.7 | 47.7 | 66.5 | 4.4ms | 227 | 47.8M | 88.1B | |

| YOLOv5x | 49.2 | 49.2 | 67.7 | 6.9ms | 145 | 89.0M | 166.4B | |

| YOLOv5x + TTA | 50.8 | 50.8 | 68.9 | 25.5ms | 39 | 89.0M | 354.3B | |

| YOLOv3-SPP | 45.6 | 45.5 | 65.2 | 4.5ms | 222 | 63.0M | 118.0B |

APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy.

All AP numbers are for single-model single-scale without ensemble or test-time augmentation. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.001

SpeedGPU measures end-to-end time per image averaged over 5000 COCO val2017 images using a GCP n1-standard-16 instance with one V100 GPU, and includes image preprocessing, PyTorch FP16 image inference at --batch-size 32 --img-size 640, postprocessing and NMS. Average NMS time included in this chart is 1-2ms/img. Reproduce by python test.py --data coco.yaml --img 640 --conf 0.1

All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).

Test Time Augmentation (TTA) runs at 3 image sizes. Reproduce** by python test.py --data coco.yaml --img 832 --augment

For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you!

@glenn-jocher How to use the parameters of evolve.txt in training?

@hande6688 see the yolov5 tutorials. A new hyp evolution yaml is created that you can point to when training yolov5: python train.py --hyp hyp.evolve.yaml

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

@glenn-jocher why the result of me about evolve is like the nayyersaahil28 users, and i had been modified code like fig1-2 ,

@nanhui69 your plots look like this because you have only evolved 1 generation. For the best performing and most recent evolution I would recommend the YOLOv5 hyperparameter evolution tutorial, where the default code evolves 300 generations:

@glenn-jocher but i have chaged the code "for _ in range(265): # generations to evolve---------------------------" ? what's problem?

@glenn-jocher python3 train.py --epochs 30 --cache-images --evolve & ? should i need specify the my custom weight ,not the pretrainweight?

@nanhui69 you can evolve any base scenario, including starting from any pretrained weights. There are no constraints.

@nanhui69 you can evolve any base scenario, including starting from any pretrained weights. There are no constraints. i only want to solve the problem as the evolve‘s png shows above -- only one point ? what should i do?

@nanhui69 your plots look like this because you have only evolved 1 generation. For the best performing and most recent evolution I would recommend the YOLOv5 hyperparameter evolution tutorial, where the default code evolves 300 generations:

@nanhui69 your plots look like this because you have only evolved 1 generation. For the best performing and most recent evolution I would recommend the YOLOv5 hyperparameter evolution tutorial, where the default code evolves 300 generations:

YOLOv5 Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- ONNX and TorchScript Export

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution < ---------------------------

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment

oh, i will check it again。。。。。

@nanhui69 your plots look like this because you have only evolved 1 generation. For the best performing and most recent evolution I would recommend the YOLOv5 hyperparameter evolution tutorial, where the default code evolves 300 generations:

YOLOv5 Tutorials

- Train Custom Data 🚀 RECOMMENDED

- Weights & Biases Logging 🌟 NEW

- Multi-GPU Training

- PyTorch Hub ⭐ NEW

- ONNX and TorchScript Export

- Test-Time Augmentation (TTA)

- Model Ensembling

- Model Pruning/Sparsity

- Hyperparameter Evolution < ---------------------------

- Transfer Learning with Frozen Layers ⭐ NEW

- TensorRT Deployment

when i follow yolov5 steps ,the evolve.txt have only one line? what's that?

@nanhui69 YOLOv5 evolution will create an evolve.txt file with 300 lines, one for each generation.

@nanhui69 YOLOv5 evolution will create an evolve.txt file with 300 lines, one for each generation.

so ,what's wrong with me ? and when evolve.txt exists ,to run evolve style, error appears? hyp[k] = x[i + 7] * v[i] # mutate IndexError: index 18 is out of bounds for axis 0 with size 18

Training hyperparameters in this repo are defined in train.py, including augmentation settings: https://github.com/ultralytics/yolov3/blob/df4f25e610bc31af3ba458dce4e569bb49174745/train.py#L35-L54

We began with darknet defaults before evolving the values using the result of our hyp evolution code:

The process is simple: for each new generation, the prior generation with the highest fitness (out of all previous generations) is selected for mutation. All parameters are mutated simultaneously based on a normal distribution with about 20% 1-sigma: https://github.com/ultralytics/yolov3/blob/df4f25e610bc31af3ba458dce4e569bb49174745/train.py#L390-L396

Fitness is defined as a weighted mAP and F1 combination at the end of epoch 0, under the assumption that better epoch 0 results correlate to better final results, which may or may not be true. https://github.com/ultralytics/yolov3/blob/bd924576048af29de0a48d4bb55bbe24e09537a6/utils/utils.py#L605-L608

An example snapshot of the results are here. Fitness is on the y axis (higher is better).

from utils.utils import *; plot_evolution_results(hyp)