I've noticed that when it runs "just fine", it actually uses both GPUs.
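For anyone who wants to confirm this on their own machine, here is a minimal sketch that reports which GPUs are holding a significant amount of memory while training runs. It assumes `nvidia-smi` is on the `PATH`. The helper names (`parse_gpu_memory`, `busy_gpus`, `query_gpu_memory`) and the 1000 MiB threshold are made up for illustration and are not part of YOLOv5.

```python
import subprocess

# nvidia-smi query that prints one "index, used, total" row per GPU, in MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Parse 'index, used, total' rows (MiB) into a list of dicts."""
    rows = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        index, used, total = (field.strip() for field in line.split(","))
        rows.append(
            {"index": int(index), "used_mib": int(used), "total_mib": int(total)}
        )
    return rows


def busy_gpus(rows, threshold_mib=1000):
    """Return indices of GPUs using at least threshold_mib (arbitrary cutoff)."""
    return [row["index"] for row in rows if row["used_mib"] >= threshold_mib]


def query_gpu_memory():
    """Run nvidia-smi and return the parsed rows (needs an NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)
```

Calling `busy_gpus(query_gpu_memory())` in a second terminal while `train.py` is running should list more than one index if the run really is spread across both cards.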

This should maybe be reflected in the tutorial section in https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution

The section

# Multi-GPU

for i in 0 1 2 3; do

nohup python train.py --epochs 10 --data coco128.yaml --weights yolov5s.pt --cache --evolve --device $i > evolve_gpu_$i.log &

done

no longer seems to be up to date.

YOLOv5 Component

Training, Evolution

Bug

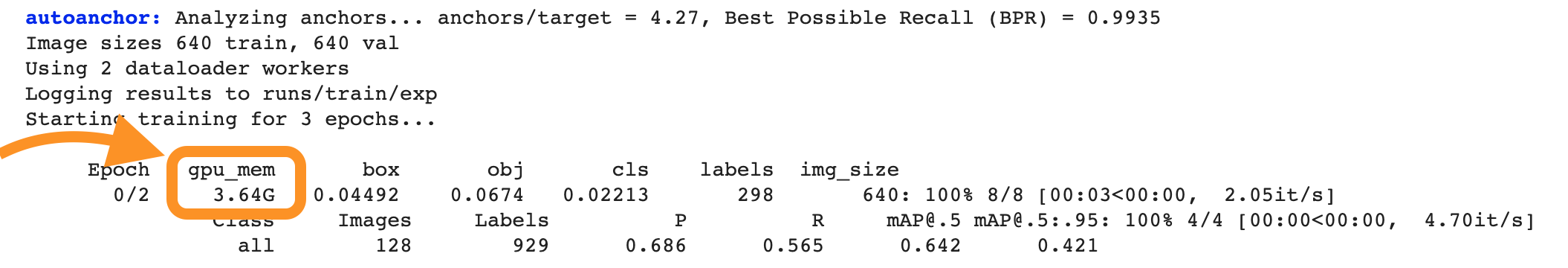

RuntimeError: CUDA out of memory. Tried to allocate 126.00 MiB (GPU 0; 10.76 GiB total capacity; 9.45 GiB already allocated; 93.69 MiB free; 9.57 GiB reserved in total by PyTorch)
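To make the numbers in that message easier to compare, here is a small sketch that pulls the sizes out of the error text and converts them to MiB. It assumes the message has the wording quoted above (as produced by torch 1.8.1); other PyTorch versions may phrase it differently. `parse_oom` is a hypothetical helper, not a PyTorch or YOLOv5 function.

```python
import re

# Conversion factors to MiB for the units that appear in the message.
UNITS = {"MiB": 1, "GiB": 1024}


def parse_oom(message):
    """Extract the sizes (in MiB) from a CUDA OOM message of the quoted form."""
    pattern = r"([\d.]+) (MiB|GiB) (total capacity|already allocated|free|reserved)"
    sizes = {
        label: float(value) * UNITS[unit]
        for value, unit, label in re.findall(pattern, message)
    }
    requested = re.search(r"Tried to allocate ([\d.]+) (MiB|GiB)", message)
    sizes["requested"] = float(requested.group(1)) * UNITS[requested.group(2)]
    return sizes


message = (
    "RuntimeError: CUDA out of memory. Tried to allocate 126.00 MiB "
    "(GPU 0; 10.76 GiB total capacity; 9.45 GiB already allocated; "
    "93.69 MiB free; 9.57 GiB reserved in total by PyTorch)"
)
sizes = parse_oom(message)
# The request is larger than what is still free on GPU 0.
print(sizes["requested"] > sizes["free"])
```

In this case the 126.00 MiB request exceeds the 93.69 MiB still free on GPU 0, with nearly all of the card already reserved by PyTorch.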

Environment

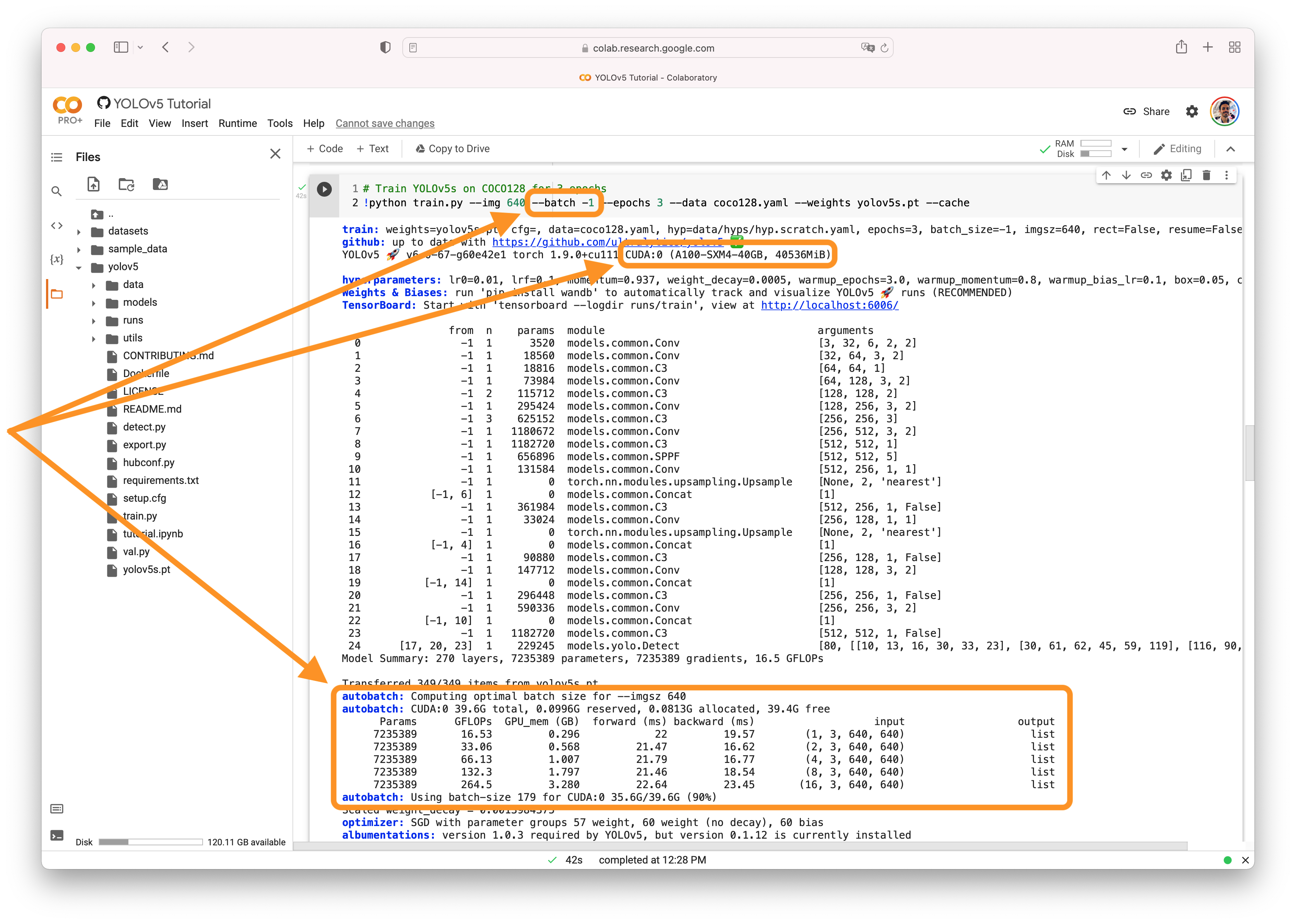

YOLOv5 v6.0-134-gc45f9f6 torch 1.8.1+cu102 CUDA:0 (GeForce RTX 2080 Ti, 11019MiB)

Minimal Reproducible Example

python train.py --epochs 10 --data gpr_highway.yaml --weights yolov5x6.pt --cache --evolve 10 --device 0

This gives the OOM error shown above.

python train.py --epochs 10 --data gpr_highway.yaml --weights yolov5x6.pt --cache --evolve 10

Runs just fine.
