Hi rjesud, I expect @ngreenwald may have some specific advice to add, but in the meantime here are a few things to look at.

One notable difference between your data and the benchmarks described in #553 is that you specify image_mpp=0.325. The model was trained with 0.5, so the first thing the application does is to resize your image to match the resolution that the model was trained with. If you can, please try the options described below and let us know the results so that we can diagnose the problem.

- Run predictions on your data without specifying `image_mpp`, so that no resize is triggered. The results may not be perfect, but it will confirm that the rest of the system is working correctly.

      labeled_image = app.predict(im, compartment='whole-cell')

- Run the resize function in isolation and see if it completes in a reasonable time:

      from deepcell_toolbox.utils import resize

      # im is the same batch-first array passed to app.predict
      shape = im.shape
      image_mpp = 0.325
      model_mpp = app.model_mpp

      # Scale the spatial axes (1 and 2) to the model's resolution
      scale_factor = image_mpp / model_mpp
      new_shape = (int(shape[1] * scale_factor), int(shape[2] * scale_factor))
      image = resize(im, new_shape, data_format='channels_last')
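Before running the resize on real data, it may help to check what target shape that calculation produces. The following is a minimal sketch that repeats the same arithmetic without importing deepcell. The helper name `resized_shape`, the axis order (batch, rows, cols, channels) and the two-channel input are assumptions for illustration; the pixel dimensions are the ones quoted in the report.

```python
def resized_shape(shape, image_mpp, model_mpp=0.5):
    """Return the (rows, cols) a batch-first, channels-last array is rescaled to.

    Hypothetical helper: it only repeats the scale-factor arithmetic from the
    snippet above and does not call any deepcell code.
    """
    scale_factor = image_mpp / model_mpp
    return (int(shape[1] * scale_factor), int(shape[2] * scale_factor))


# Dimensions from the report; axis order and channel count are assumptions.
shape = (1, 44944, 76286, 2)
new_shape = resized_shape(shape, image_mpp=0.325)
print(new_shape)
```

With a scale factor of 0.65 this gives a target of 29213 x 49585 pixels, about 42% of the original pixel count, so the resize step still has to read the full-resolution array and write a second, smaller one.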

Hi, thanks for developing this useful tool! I'm eager to apply it in our research.

I am encountering what I believe is an out-of-memory crash when processing a large digital pathology whole slide image (WSI). This specific image is 76286 x 44944 (XY) at 0.325 microns/pixel. While this is large, it is not atypical in our WSI datasets, and other images in our dataset can be larger.

I have done benchmarking with the data and workflow described in another ticket here: https://github.com/vanvalenlab/deepcell-tf/issues/553. I can confirm the results and processing time (6 min) shown here: https://github.com/vanvalenlab/deepcell-tf/issues/553#issuecomment-940622124. So it appears things are working as expected, and I am not sure where I am hitting an issue with my image. I assumed that the image was being sent to the GPU in memory-efficient batches. Perhaps the problem occurs at the pre-processing or post-processing stage? Should I explore a different workflow for Mesmer usage with images of this size?

I am using:

- DeepCell 0.11.0
- TensorFlow 2.5.1
- cuDNN 8.2.1
- cudatoolkit 11.3.1
- GPU: Quadro P6000 (computeCapability: 6.1, coreClock: 1.645GHz, coreCount: 30, deviceMemorySize: 23.88GiB, deviceMemoryBandwidth: 403.49GiB/s)
- CPU: 16 cores, 128 GB RAM
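To put these numbers next to the image size, here is a rough back-of-the-envelope sketch of how much memory a single copy of the array would take. The helper `array_gib`, the two-channel input and the float32 (4 bytes per value) storage are assumptions, not something confirmed in this thread; the pipeline may hold several such copies at once during pre-processing, inference and post-processing.

```python
def array_gib(rows, cols, channels=2, bytes_per_value=4):
    """Approximate size in GiB of one dense image array.

    Hypothetical helper; the channel count and float32 storage are assumptions.
    """
    return rows * cols * channels * bytes_per_value / 2**30


# Full-resolution image from the report (44944 x 76286 pixels).
full = array_gib(44944, 76286)
# Same image after rescaling from 0.325 to 0.5 microns/pixel (factor 0.65).
resized = array_gib(29213, 49585)
print(f"full: {full:.1f} GiB, resized: {resized:.1f} GiB")
```

Under these assumptions a single full-resolution copy is already on the order of 25 GiB, more than the 23.88 GiB available on the GPU, and a handful of intermediate copies could plausibly approach the 128 GB of system RAM.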

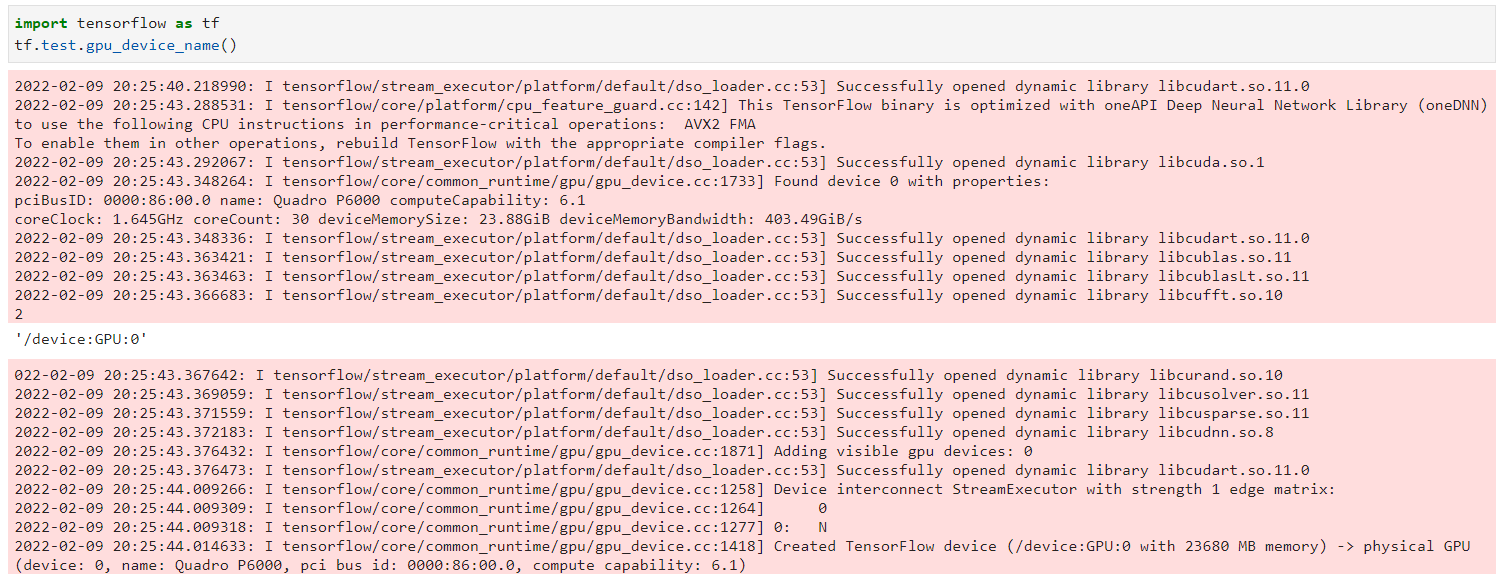

It seems to be talking to the GPU:

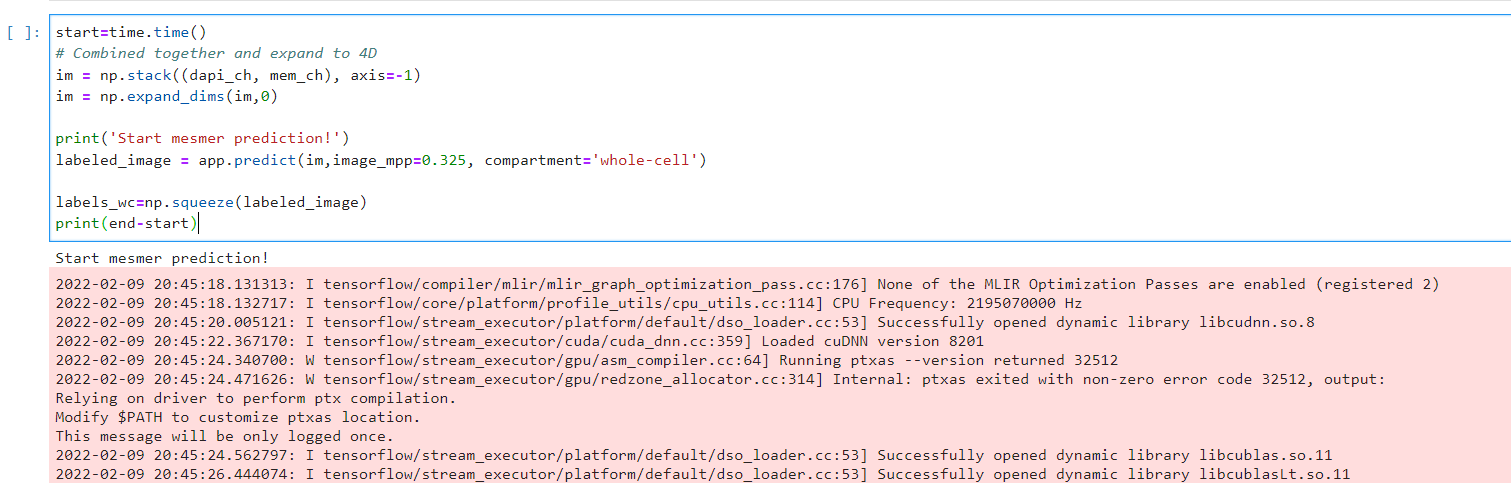

Unfortunately, it does not complete: