Postman extension has been deprecated

Closed by github-learning-lab[bot] 3 years ago

Before the Azure Function can run, we have to install all the necessary package dependencies. We will be using the parse-multipart and node-fetch packages (more details on that later). These packages must be manually installed in the console using npm install.

At this point, you should have created a new HTTP trigger function in your Azure portal along with the Function App. If you have not done this, please do it now. Navigate to your Function App. This is not the function code, but the actual app service resource.

In the left tab, scroll down to Console.

Enter these commands in order:

npm init -y

npm install parse-multipart

npm install node-fetch

The first creates a package.json file to store your dependencies. The next two actually install the necessary packages.

Note: If you get red text like WARN, that is expected and normal.

You should be good to go! Reach out to your TAs if there are any issues!

Once you are done, write a comment describing what you completed.

Completed Installing the packages successfully

In your Azure Portal, go into your HTTP function and into the index.js file. You should see starter code there that has something like module.exports....

This is the file that we will be editing.

The Azure Function needs to:

What is happening in this flowchart?

HTML Page: Where the user will submit an image; this page will also make a request with the image to the Azure Function.

Azure Function: Receives the request from HTML Page with the image. In order to receive it, we must parse the image...

We're going to be focusing on Part 1, which involves parsing multipart form data.

In any HTML <form> element that involves a file upload (which ours does), the data is encoded using the multipart/form-data method.

The default HTTP encoding method is application/x-www-form-urlencoded, which encodes text into name/value pairs and works for text inputs, but it is inefficient for files or binary inputs.
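To make the name/value encoding concrete, here is a small sketch using Node's built-in URLSearchParams. The field names and sample bytes are made up for illustration, and the byte-by-byte escaping is written by hand only to show the size cost for binary data; it is not how a browser literally builds the request.

```javascript
// Text fields encode compactly as name=value pairs joined by "&".
const fields = new URLSearchParams({ name: 'Ada Lovelace', note: 'a&b=c' });
const encodedText = fields.toString();
console.log(encodedText); // name=Ada+Lovelace&note=a%26b%3Dc

// Arbitrary binary bytes have to be escaped one by one (hypothetical sample bytes).
const bytes = Buffer.from([0x00, 0xff, 0x10, 0x80]);
const encodedBytes = Array.from(bytes, (b) =>
  '%' + b.toString(16).padStart(2, '0').toUpperCase()
).join('');
console.log(encodedBytes);                      // %00%FF%10%80
console.log(bytes.length, encodedBytes.length); // 4 12
```

Each raw byte grows to three characters here, which is why file uploads use multipart/form-data instead.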

multipart/form-data indicates that one or more files are being inputted. Parsing this type of data is a little more complicated than usual. To simplify the process, we're going to use an npm library called parse-multipart.

To import Node packages, we use the require function:

var multipart = require("parse-multipart");

This imports the parse-multipart package into our code, and we can now call any function in the package using multipart.Function().

Your function should look like this:

var multipart = require("parse-multipart");

module.exports = async function (context, req) {

context.log('JavaScript HTTP trigger function processed a request.');

};

Before we start parsing, go to the parse-multipart documentation for some context on what the package does. Look specifically at the example in the Usage section and what they are doing, as we're going to do something similar.

Notice that multipart.Parse(body, boundary) requires two parameters. I've already gotten the boundary for you – just like the documentation example, our boundary is a string in the format "----WebKitFormBoundary(random characters here)".

In the multipart.Parse() call, you need to figure out what the body parameter should be.

Hint: It should be the request body. Think about the example Azure function. How did we access that?

//here's your boundary:

var boundary = multipart.getBoundary(req.headers['content-type']);

// TODO: assign the body variable the correct value

var body = '<WHAT GOES HERE?>'

// parse the body

var parts = multipart.Parse(body, boundary);

To move on, comment the code that you currently have in the Azure Function below.

var multipart = require('parse-multipart');
var fetch = require('node-fetch');

module.exports = async function (context, req) {
    context.log('JavaScript HTTP trigger function processed a request.');

    // receiving an image from an html form
    //enctype = multipart/form-data
    //parse-multipart library
    var body = req.body;

    //returns ---WebkitFormBoundaryjf;ldjfdlf
    var boundary = multipart.getBoundary(req.headers['content-type']);

    //returns an array of inputs
    //each object has filename, type, data
    var parts = multipart.Parse(body,boundary);

    //array has 1 object(an image)
    var image = parts[0].data;

    var analysis = await analyzeImage(image, context);

    context.res = {
        body: {
            analysis
        }
    }

    context.done()
}

async function analyzeImage(image, context){
    const subscriptionKey = process.env['subscriptionKey'];
    const endpoint = process.env['endpoint'];

    const uriBase = `${endpoint}/face/v1.0/detect`;

    let params = new URLSearchParams({
        'returnFaceAttributes': 'facialHair'
    })

    context.log(uriBase);
    context.log(uriBase + '?' + params.toString());

    let resp = await fetch(uriBase + '?' + params.toString(), {
        method: 'POST',
        body: image,
        headers: {
            'Content-Type': 'application/octet-stream',
            'Ocp-Apim-Subscription-Key': subscriptionKey
        }
    })

    let data = await resp.json();
    return data;
}

Congrats! You've made it to the first checkpoint. We're going to make sure your Azure Function can complete its first task: parsing the image.

Hint: take a look at the var parts... line

{ "analysis": [ { "faceId": "4181f016-51ec-4bf1-bf07-be1933854ec8", "faceRectangle": { "top": 139, "left": 107, "width": 174, "height": 174 }, "faceAttributes": { "facialHair": { "moustache": 0.6, "beard": 0.6, "sideburns": 0.6 } } }, { "faceId": "80a9dc59-d76a-469c-a573-0afbdbf019d9", "faceRectangle": { "top": 525, "left": 298, "width": 147, "height": 147 }, "faceAttributes": { "facialHair": { "moustache": 0.1, "beard": 0.1, "sideburns": 0.1 } } } ] }

This step is fairly straightforward:

Record and save the API endpoint and subscription key, as we'll be using it in the following parts.

Once you are done, write a comment describing what you completed.

What does the Face API do?

The Face API will accept the image and return information about the face, specifically emotions.

Went to the configuration blade and viewed my subscription key and endpoint. I saved them on Azure and referred to it in my code.

Recall the purpose of this Azure Function:

What is happening in this flowchart?

Azure Function: We are now going to be creating parameters that the Azure Function will use to send a request to the Face API Endpoint we just created.

In this section, we'll be focusing on Part 2.

At this point, your Azure function should look like this:

var multipart = require("parse-multipart");

module.exports = async function (context, req) {

context.log('JavaScript HTTP trigger function processed a request.');

var boundary = multipart.getBoundary(req.headers['content-type']);

var body = req.body;

var parts = multipart.Parse(body, boundary);

};

We're going to create a new function, outside of module.exports, that will handle analyzing the image (this function is async because we will be using the await keyword with the API call).

This function will be called analyzeImage(img) and takes in one parameter, img, that contains the image we're trying to analyze. Inside, we have two variables involved in the call: subscriptionKey and uriBase. Substitute the necessary values with your own info.

async function analyzeImage(img){

const subscriptionKey = '<YOUR SUBSCRIPTION KEY>';

const uriBase = '<YOUR ENDPOINT>' + '/face/v1.0/detect';

}

Now, we want to set the parameters of our POST request and specify the exact data that we want.

The documentation for the Face API is here. Read through it, and notice that the request url is this:

https://{endpoint}/face/v1.0/detect[?returnFaceId][&returnFaceLandmarks][&returnFaceAttributes][&recognitionModel][&returnRecognitionModel][&detectionModel]

All of the bracketed sections represent possible request parameters. Read through the Request Parameters section carefully. How can we specify that we want to get the emotion data?

In order to specify all of our parameters easily, we're going to create a new URLSearchParams object. Here's the object declared for you. I've also already specified one parameter, returnFaceId, as true to provide an example. Add in a new parameter that requests emotion.

let params = new URLSearchParams({

'returnFaceId': 'true',

'<PARAMETER NAME>': '<PARAMETER VALUE>' //FILL IN THIS LINE

})

To move on, comment the code in your Azure Function below. BUT DO NOT INCLUDE YOUR SUBSCRIPTION KEY. DELETE IT, and then copy your code in.

What is happening in this flowchart?

Azure Function: We are now going to be using the Azure Function to send a POST request to the Face API Endpoint and receive emotion data.

There are many ways to make a POST request, but to stay consistent, we're going to use the package node-fetch. This package makes HTTP requests in a similar format as what we're going to use for the rest of the project. Import the package using the same format we used for parse-multipart.

//import the node-fetch package

var fetch = '<CODE HERE>'

Read through the API section of the documentation. We're going to make a call using the fetch(url, {options}) function.

API Documentation can be tricky sometimes...Here's something to help

We're calling the fetch function. Notice the await keyword, which we need because fetch returns a Promise, which is a proxy for a value that isn't currently known. You can read about JavaScript promises here.
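Here is a tiny sketch of what "a proxy for a value that isn't currently known" means in practice. fakeFetch is a hypothetical stand-in for a network call, not part of node-fetch; it simply resolves after a short delay.

```javascript
// Stand-in for a network call (hypothetical helper): resolves after a short delay.
function fakeFetch(value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), 10));
}

async function demo() {
  const pending = fakeFetch('response body'); // a Promise: the value is not known yet
  const isPromise = pending instanceof Promise;
  const value = await pending;                // pauses here until the Promise resolves
  return { isPromise, value };
}

demo().then((out) => console.log(out));
// { isPromise: true, value: 'response body' }
```

Without await, the variable would hold the pending Promise rather than the response itself.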

In the meantime, I've set the URL for you. Notice that it is just the uriBase with the params we specified earlier appended on.

For now, fill in the method and body.

async function analyzeImage(img){

const subscriptionKey = '<YOUR SUBSCRIPTION KEY>';

const uriBase = '<YOUR ENDPOINT>' + '/face/v1.0/detect';

let params = new URLSearchParams({

'returnFaceId': 'true',

'returnFaceAttributes': 'emotion'

})

//COMPLETE THE CODE

let resp = await fetch(uriBase + '?' + params.toString(), {

method: '<METHOD>', //WHAT TYPE OF REQUEST?

body: '<BODY>', //WHAT ARE WE SENDING TO THE API?

headers: {

'<HEADER NAME>': '<HEADER VALUE>' //do this in the next section

}

})

let data = await resp.json();

return data;

}

Finally, we have to specify the request headers. Go back to the Face API documentation here, and find the Request headers section.

There are two headers that you need. I've provided the format below. Enter in the two header names and their two corresponding values.

FYI: The Content-Type header should be set to 'application/octet-stream'. This specifies a binary file.

//COMPLETE THE CODE

let resp = await fetch(uriBase + '?' + params.toString(), {

method: '<METHOD>', //WHAT TYPE OF REQUEST?

body: '<BODY>', //WHAT ARE WE SENDING TO THE API?

//ADD YOUR TWO HEADERS HERE

headers: {

'<HEADER NAME>': '<HEADER VALUE>'

}

})

Lastly, we want to call the analyzeImage function in module.exports. Add the code below into module.exports.

Remember that parts represents the parsed multipart form data. It is an array of parts, each one described by a filename, a type, and data. Since we only sent one file, it is stored at index 0, and we want the data property to access the binary file, hence parts[0].data. Then, in the HTTP response of our Azure Function, we store the result of the API call.

//module.exports function

//analyze the image

var result = await analyzeImage(parts[0].data);

context.res = {

body: {

result

}

};

console.log(result)

context.done();

To move on, comment your code below WITHOUT THE SUBSCRIPTION KEY.

Time to test our completed Azure Function! It should now successfully do these tasks:

This time, we won't need to add any additional code, as the completed function should return the emotion data on its own.

Only difference is that we should receive an output in Postman instead:

Make sure you're using an image with a real face on it or else it won't work. Here's an example of an output I get with this image:

Credits: https://thispersondoesnotexist.com/

{

"result": [

{

"faceId": "d25465d6-0c38-4417-8466-cabdd908e756",

"faceRectangle": {

"top": 313,

"left": 210,

"width": 594,

"height": 594

},

"faceAttributes": {

"emotion": {

"anger": 0,

"contempt": 0,

"disgust": 0,

"fear": 0,

"happiness": 1,

"neutral": 0,

"sadness": 0,

"surprise": 0

}

}

}

]

}

To move on, comment the output you received from the POST request!
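Once you have a response shaped like the sample output above, picking out the strongest emotion is a short loop over the scores. This is only a sketch: dominantEmotion is a hypothetical helper, and the object below is a trimmed copy of the sample (one face, emotion scores only).

```javascript
// Trimmed copy of the sample output above (one face, emotion scores only).
const response = {
  result: [
    {
      faceAttributes: {
        emotion: {
          anger: 0, contempt: 0, disgust: 0, fear: 0,
          happiness: 1, neutral: 0, sadness: 0, surprise: 0,
        },
      },
    },
  ],
};

// Hypothetical helper: name of the emotion with the highest score for one face.
function dominantEmotion(face) {
  const scores = Object.entries(face.faceAttributes.emotion);
  const [name] = scores.reduce((best, current) => (current[1] > best[1] ? current : best));
  return name;
}

console.log(response.result.map((face) => dominantEmotion(face))); // [ 'happiness' ]
```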

Please complete after you've viewed the Week 2 livestream! If you haven't yet watched it but want to move on, just close this issue and come back to it later.

Help us improve BitCamp Serverless - thank you for your feedback! Here are some questions you may want to answer:

Livestream went well, the content was challenging but fun. It did help completing the homework. The pace was good and it was easy to follow along. Maybe a bit more interaction/checkups on the crowd.

Below is a written format of the livestream for this week, included for future reference. To move on, close this issue.

Last week, you should've learned the basics of how to create an Azure Function, along with the basics of triggers and bindings.

In the livestream, we're going to code an HTTP trigger Azure Function that detects facial hair in a submitted picture.

We'll be going over how to:

Like last week, we'll be creating an HTTP Trigger to parse the image and analyze it for beard data! 🧔

Tip: It might be helpful to keep track of the Function App name and Resource Group for later in the project.

We are going to be using some npm packages in our HTTP Trigger, so we must install them in order for our code to even work.

Commands to type into your console:

npm init -y

Last step:

Be sure to add these initializing statements into the code:

var multipart = require('parse-multipart');

var fetch = require('node-fetch');

This defines multipart and fetch, which we will use soon in the code below. ⬇️

The first step is to navigate to the new HTTP trigger you created earlier. You should see this at the top of the default code:

module.exports = async function (context, req) {

context.log('JavaScript HTTP trigger function processed a request.');

We're going to be working in this function!

The parse-multipart library (multipart) is going to be used to parse the image from the POST request we will later make with Postman to test.

1️⃣ First let's define the body of the POST request we received.

var body = req.body;

If you were to context.log() body, you would get the raw body content because the data was sent formatted as multipart/form-data:

------WebKitFormBoundaryDtbT5UpPj83kllfw

Content-Disposition: form-data; name="uploads[]"; filename="somebinary.dat"

Content-Type: application/octet-stream

some binary data...maybe the bits of a image.. (this is what we want!)

------WebKitFormBoundaryDtbT5UpPj83kllfw

2️⃣ Secondly, we need to create the boundary string from the headers in the request.

var boundary = multipart.getBoundary(req.headers['content-type']);

If you were to context.log() boundary, you would receive --WebkitFormBoundary[insert gibberish], which is a boundary that helps you determine where the image data is. This boundary will help us parse out the image data. Take a look at the boundaries in the raw payload above in step 1 ⬆️

3️⃣ Third! Now we'll be using multipart again to actually parse the image.

var parts = multipart.Parse(body,boundary);

This returns an array of inputs, which contains all the different files that were in the body. In our case, we only have one: the picture!

4️⃣ Finally. We can now access the image with this short and sweet line of code:

var image = parts[0].data;

The parts array only has one object, the picture, so we've now successfully parsed the image from the body payload 🎉
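To see how the boundary and the raw body fit together, here is a rough, hand-rolled sketch of the idea using only Node's Buffer. It is not how parse-multipart is implemented: boundaryFromHeader and firstPartData are hypothetical helpers, the sample body is made up, and the sketch only handles a single, well-formed part.

```javascript
// Hypothetical helper: pull the boundary token out of a Content-Type header.
function boundaryFromHeader(contentType) {
  const match = /boundary=(?:"([^"]+)"|([^;]+))/i.exec(contentType);
  if (!match) throw new Error('no boundary in Content-Type header');
  return match[1] || match[2];
}

// Hypothetical helper: return the raw bytes of the first part in a multipart body.
function firstPartData(body, boundary) {
  const delimiter = Buffer.from('--' + boundary);
  const start = body.indexOf(delimiter);
  const headerEnd = body.indexOf('\r\n\r\n', start); // a blank line ends the part headers
  const next = body.indexOf(delimiter, headerEnd);
  // The data sits between the blank line and the CRLF before the next delimiter.
  return body.subarray(headerEnd + 4, next - 2);
}

// Made-up request pieces standing in for req.headers['content-type'] and req.body.
const boundary = '----WebKitFormBoundaryExample123';
const header = 'multipart/form-data; boundary=' + boundary;
const body = Buffer.concat([
  Buffer.from('--' + boundary + '\r\n'),
  Buffer.from('Content-Disposition: form-data; name="image"; filename="face.png"\r\n'),
  Buffer.from('Content-Type: image/png\r\n\r\n'),
  Buffer.from([0x89, 0x50, 0x4e, 0x47]), // pretend image bytes
  Buffer.from('\r\n--' + boundary + '--\r\n'),
]);

const data = firstPartData(body, boundaryFromHeader(header));
console.log(data); // <Buffer 89 50 4e 47>
```

In the real function, the library does this work for you and also returns each part's filename and type.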

Here's what your code should look like now:

var multipart = require('parse-multipart');

var fetch = require('node-fetch');

module.exports = async function (context, req) {

context.log('JavaScript HTTP trigger function processed a request.');

// receiving an image from an html form

//enctype = multipart/form-data

//parse-multipart library

var body = req.body;

//returns ---WebkitFormBoundaryjf;ldjfdlf

var boundary = multipart.getBoundary(req.headers['content-type']);

//returns an array of inputs

//each object has filename, type, data

var parts = multipart.Parse(body,boundary);

//array has 1 object(an image)

var image = parts[0].data;

}

We're now going to create a Microsoft Cognitive Services Face API:

Press Face and fill out the necessary information

There are some secret strings we're going to need in order to communicate with the Face API. This includes the endpoint and the subscription key.

Enter into your Face API resource and click on Keys and Endpoint. You're going to need KEY 1 and ENDPOINT

Now, head back to the Function App, and we're going to add these values into the Application Settings. Follow this tutorial to do so.

Naming your secrets

You can name them whatever you want, but make sure the names make sense. We named ours "face_key" and "face_endpoint."

Let's begin by defining a new async function (analyzeImage()) that we're going to call later in the module.exports() function. This will take in the image data we parsed as a parameter, make a request to the Face API, and return the beard data.

That's kind of a lot... so let's start!

1️⃣ Start the function and define our secrets 🔑

async function analyzeImage(image){

const subscriptionKey = process.env['face_key'];

const endpoint = process.env['face_endpoint'];

Remember the secrets we added in the application settings earlier? Now we're assigning them to the variables subscriptionKey and endpoint. Notice how we access the values with process.env['name'].
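If a setting name is misspelled, process.env['name'] quietly gives back undefined, which can be confusing later on. One possible guard is sketched below; readSetting is a hypothetical helper and fakeEnv is a made-up settings object so the snippet runs anywhere.

```javascript
// Hypothetical helper: read a required application setting, failing loudly if it is absent.
function readSetting(name, env = process.env) {
  const value = env[name];
  if (!value) {
    throw new Error(`Missing application setting: ${name}`);
  }
  return value;
}

// Simulated settings so the sketch runs locally; in Azure you would omit the second argument.
const fakeEnv = {
  face_key: 'not-a-real-key',
  face_endpoint: 'https://example.cognitiveservices.azure.com',
};

console.log(readSetting('face_endpoint', fakeEnv)); // https://example.cognitiveservices.azure.com
```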

2️⃣ Defining the URI and request parameters 📎

const uriBase = `${endpoint}/face/v1.0/detect`;

let params = new URLSearchParams({

'returnFaceAttributes': 'facialHair'

})

uriBase is the URI of the Face API resource we are going to be making a POST request to.

params is where we store the parameters of the request. We want the Face API to return beard data from the image, so we put 'facialHair' as the value of 'returnFaceAttributes'.
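If you want to check the URL these two pieces produce before sending anything, a quick sketch like this works in plain Node. The endpoint here is a placeholder, not a real resource.

```javascript
// Placeholder endpoint; in the function this value comes from process.env.
const endpoint = 'https://example.cognitiveservices.azure.com';
const uriBase = `${endpoint}/face/v1.0/detect`;

const params = new URLSearchParams({
  returnFaceAttributes: 'facialHair',
});

// toString() serializes the pairs as key=value, joined by "&" when there are several.
const requestUrl = uriBase + '?' + params.toString();
console.log(requestUrl);
// https://example.cognitiveservices.azure.com/face/v1.0/detect?returnFaceAttributes=facialHair
```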

3️⃣ Sending the request 📨

let resp = await fetch(uriBase + '?' + params.toString(), {

method: 'POST',

body: image,

headers: {

'Content-Type': 'application/octet-stream',

'Ocp-Apim-Subscription-Key': subscriptionKey

}

})

We're now going to use fetch to make a POST request. Combining the variables we defined previously in uriBase + '?' + params.toString(), we get something like this: [Insert your endpoint]/face/v1.0/detect?returnFaceAttributes=facialHair.

We send the image data (this is the image parameter we will call the function with) in the body. The headers describe the request: 'Content-Type' is the format our image data is in, and 'Ocp-Apim-Subscription-Key' contains the subscription key of the Face API.
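It can help to look at the options object on its own, separate from the network call. In this sketch, buildRequestOptions is a hypothetical helper, and the bytes and key are made up; nothing is actually sent.

```javascript
// Hypothetical helper: assemble the options object passed as fetch's second argument.
function buildRequestOptions(image, subscriptionKey) {
  return {
    method: 'POST',
    body: image, // raw image bytes, sent as-is
    headers: {
      'Content-Type': 'application/octet-stream',   // tells the API the body is binary
      'Ocp-Apim-Subscription-Key': subscriptionKey, // authenticates the request
    },
  };
}

const sampleImage = Buffer.from([0x89, 0x50, 0x4e, 0x47]); // made-up bytes standing in for an image
const options = buildRequestOptions(sampleImage, 'not-a-real-key');
console.log(options.method, Object.keys(options.headers));
```

Keeping the key out of the source and passing it in, as above, also makes it harder to paste it into a comment by accident.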

4️⃣ Receiving and returning data 🔢

let data = await resp.json();

return data;

Now all we have to do is read the beard data from resp, the response to our fetch POST request, as JSON.

We're done with the analyzeImage() function! It returns the beard data we requested using fetch. However, we still have one last step.
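The function as written assumes the request succeeds. If the key or endpoint is wrong, the response will typically carry an error status instead of beard data. One optional way to surface that is sketched below; readJsonOrThrow is a hypothetical helper, and the two response objects are hand-made stand-ins exposing only the ok, status, and json members that fetch responses provide.

```javascript
// Hypothetical helper: turn a fetch-style response into data, or throw with the status code.
async function readJsonOrThrow(resp) {
  if (!resp.ok) {
    throw new Error(`Face API request failed with status ${resp.status}`);
  }
  return resp.json();
}

// Hand-made stand-ins exposing only the members used above (ok, status, json).
const okResponse = { ok: true, status: 200, json: async () => [{ faceId: 'example' }] };
const badResponse = { ok: false, status: 401, json: async () => ({}) };

readJsonOrThrow(okResponse).then((data) => console.log(data));
readJsonOrThrow(badResponse).catch((err) => console.log(err.message));
```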

5️⃣ Call the function

var analysis = await analyzeImage(image);

Head back to the module.exports() function because we need to call analyzeImage() for it to actually execute. Recall that we defined image using var image = parts[0].data; and got the image data from parsing the raw body.

Now, we're simply passing the image data into the async function (note the await!) and directing the output to analysis.

context.res = {

body: {

analysis

}

}

context.done()

To close out the function, we return analysis (the beard data returned by analyzeImage()) in context.res. This is what you will see when you successfully make a POST request to our HTTP Trigger.
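To get a feel for what ends up in context.res, you can call a handler locally with a hand-made context object. This is only a sketch: handler is a simplified stand-in that skips the Face API call, and makeContext fakes just the members used here, while the real context object has more. Note that body: { analysis } is shorthand for body: { analysis: analysis }.

```javascript
// Simplified stand-in for the exported function (hypothetical, no Face API call).
async function handler(context, req) {
  context.log('processing request');
  const analysis = [{ bytesReceived: req.body.length }];
  context.res = { body: { analysis } }; // shorthand for { analysis: analysis }
}

// Minimal fake of the context object: only the members the handler touches.
function makeContext() {
  const logs = [];
  return { logs, log: (msg) => logs.push(msg), res: undefined };
}

async function run() {
  const context = makeContext();
  await handler(context, { body: Buffer.from([1, 2, 3]) });
  return context;
}

run().then((context) => console.log(JSON.stringify(context.res)));
// {"body":{"analysis":[{"bytesReceived":3}]}}
```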

🥳 Here's what your final HTTP Trigger should look like:

The npm dependencies and module.exports():

var multipart = require('parse-multipart');

var fetch = require('node-fetch');

module.exports = async function (context, req) {

context.log('JavaScript HTTP trigger function processed a request.');

// receiving an image from an html form

//enctype = multipart/form-data

//parse-multipart library

var body = req.body;

//returns ---WebkitFormBoundaryjf;ldjfdlf

var boundary = multipart.getBoundary(req.headers['content-type']);

//returns an array of inputs

//each object has filename, type, data

var parts = multipart.Parse(body,boundary);

//array has 1 object(an image)

var image = parts[0].data;

var analysis = await analyzeImage(image);

context.res = {

body: {

analysis

}

}

context.done()

}

The awesome analyzeImage() function:

async function analyzeImage(image){

const subscriptionKey = process.env['face_key'];

const endpoint = process.env['face_endpoint'];

const uriBase = `${endpoint}/face/v1.0/detect`;

let params = new URLSearchParams({

'returnFaceAttributes': 'facialHair'

})

let resp = await fetch(uriBase + '?' + params.toString(), {

method: 'POST',

body: image,

headers: {

'Content-Type': 'application/octet-stream',

'Ocp-Apim-Subscription-Key': subscriptionKey

}

})

let data = await resp.json();

return data;

}

Nearly there! Now let's just make sure our HTTP Trigger actually works...

Since our Azure Function will be taking a picture in the request, we are going to be using Postman to test it.

You can install it from the Chrome Store as a Chrome extension.

What will Postman do?

We are going to use Postman to send a POST request to our Azure Function to test if it works, mimicking what our static website will do. Our HTTP trigger Azure Function receives an image as an input and outputs beard data!

Now it is time to send a POST request to the HTTP Trigger Function, so using the drop-down arrow, change "GET" to "POST". The goal? Receive beard data from an inputted image.

Go to your Function's code and find this:

Click to copy!

Enter content-type into Key and multipart/form-data into Value. Enter image into Key and use the dropdown to select "file" in order to upload an image.

To move on, comment any questions you have. If you have no questions, comment Done.

Done

Postman, APIs, and requests

Later, when we begin to code our Azure Function, we are going to need to test it. How? Just like our final web app, we'll be sending requests to the Function's endpoint.

Since our Azure Function will be taking a picture in the request, we are going to be using Postman to test it.

You can install Postman from the Chrome Store as a Chrome extension.

What will Postman do?

We are going to use Postman to send a POST request to our Azure Function to test if it works, mimicking what our static website will do.

Our HTTP trigger Azure Function will be an API that receives requests and sends back information.

To introduce you to sending requests to an API and how Postman works, we'll be sending a GET request to an API.

You can choose to sign up or skip and go directly to the app.

Close out all the tabs that pop up until you reach this screen

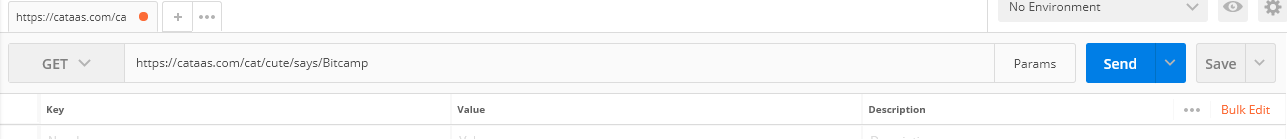

Now it is time to send a GET request to a Cat Picture API. The goal? Receive a cat picture with "Bitcamp" written on it in a specified color and text size.

Try it out yourself:

color (color of the text) and text (font size)

Stuck? Check here:

1. **Specifying the API Endpoint:** Enter https://cataas.com/cat/cute/says/Bitcamp, which is the API endpoint, into the text box next to GET
2. **Setting Parameters:** Click on "Params" and enter `color` into Key and the color you want (e.g. blue) into Value. Enter `text` into the next Key row and a number (e.g. 50) into Value.
3. **Click `Send` to get your cat picture**
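Postman's Params tab simply appends the key/value pairs to the URL as a query string. Here is a sketch of the same thing in code, assuming Node's built-in URL class; the endpoint and parameter names come from the steps above, and the values are just examples.

```javascript
// Endpoint and parameter names come from the steps above; the values are examples.
const catUrl = new URL('https://cataas.com/cat/cute/says/Bitcamp');
catUrl.searchParams.set('color', 'blue'); // color of the text
catUrl.searchParams.set('text', '50');    // font size

console.log(catUrl.toString());
// https://cataas.com/cat/cute/says/Bitcamp?color=blue&text=50
```

Pasting the printed URL into the box next to GET should be equivalent to filling in the Params rows by hand.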

Interested in playing around with the API? Documentation is here.

To continue, comment your cat picture 🐱