@lorp thanks for raising this, and for the detailed and clear write-up.

@litherum it would be interesting to hear the WebKit perspective on this. Was this simply an oversight, to be treated as a spec-compliance bug in the browser, or was it a deliberate decision? If the latter, what was the rationale?

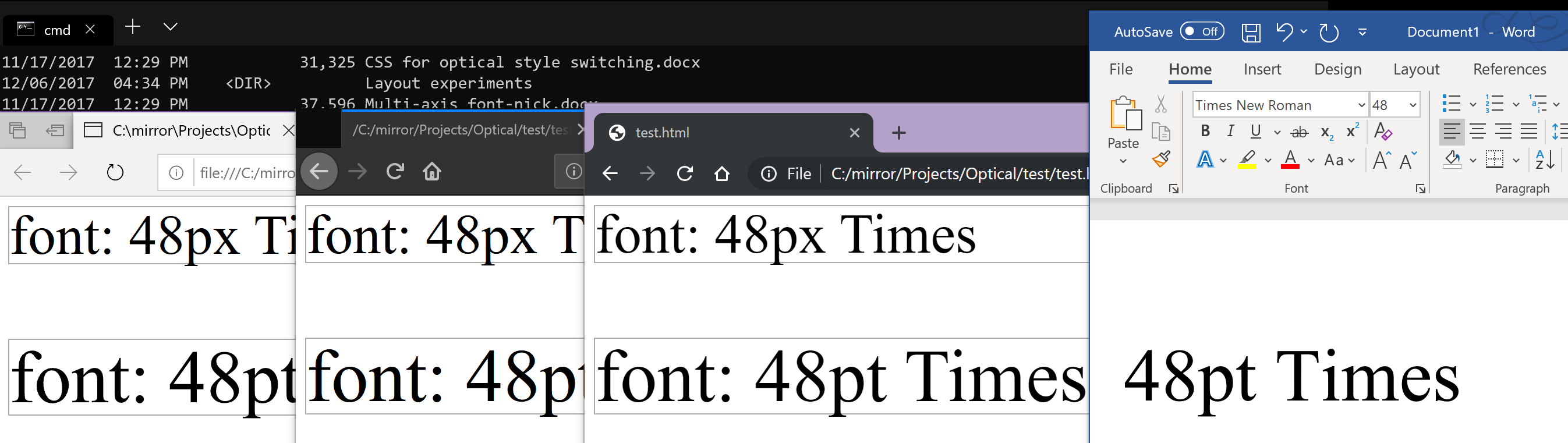

(Left to right: Edge (MSHTML-based), Firefox 69.0.3, Chrome 77.0.3865.120, MS Word win32 build 1911).


Introduction

This proposal extends the CSS Fonts Module Level 4 `font-optical-sizing` property by allowing numerical values that express the ratio of `opsz` units to CSS `px` units, where `opsz` is the optical size axis of OpenType Font Variations. The ratio is intended to be multiplied by `font-size`, measured in `px`, giving control over the automatic selection of particular optical size designs in variable fonts.

The proposal resolves the conflicting implementations of the `font-optical-sizing: auto` behaviour, and provides additional benefits for font makers, CSS authors, and end-users.

Examples
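
Each example below amounts to one multiplication: the `opsz` value is the ratio times the computed `font-size` in `px`. The following is a minimal Python sketch of that arithmetic for 16px text; `opsz_for_font_size` is a hypothetical helper for illustration, not a browser or spec API, and rejecting non-positive ratios is an assumption, since the proposal does not define them.

```python
def opsz_for_font_size(font_size_px: float, ratio: float) -> float:
    """Map a computed font-size in CSS px to an opsz axis value.

    Sketch of the proposed rule: opsz = ratio * font-size (px).
    Assumption: zero or negative ratios are not defined by the
    proposal, so this sketch rejects them.
    """
    if ratio <= 0:
        raise ValueError("font-optical-sizing ratio must be positive")
    return ratio * font_size_px


if __name__ == "__main__":
    # The numeric font-optical-sizing values from the examples, applied to 16px text.
    for ratio in (1.0, 0.75, 0.5, 2.0):
        opsz = opsz_for_font_size(16, ratio)
        print(f"font-optical-sizing: {ratio}; 16px text -> opsz {opsz:g}")
```

At 16px, the four numeric ratios give `opsz` values of 16, 12, 8 and 32 respectively.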

- `font-optical-sizing: 1.0;` current Apple Safari behaviour, where 1px = 1 `opsz` unit
- `font-optical-sizing: 0.75;` Apple TrueType and OpenType behaviour, where 1px = 0.75 `opsz` units (1px = 0.75pt in many user agents)
- `font-optical-sizing: 0.5;` custom behaviour where 2px = 1 `opsz` unit, to “beef up” the text (e.g. in an accessibility mode for visually impaired end-users)
- `font-optical-sizing: 2.0;` custom behaviour where 1px = 2 `opsz` units, to reduce the “beefiness” of the text (suitable for large devices)
- `font-optical-sizing: auto;` use the `font-optical-sizing` ratio defined in the user agent stylesheet

Background

OpenType Font Variations in CSS

When the OpenType Font Variations extension of the OpenType spec was being developed in 2015–2016, Adam Twardoch and Behdad Esfahbod proposed the addition of the low-level `font-variation-settings` property to the CSS Fonts Module Level 4 specification, modeled after `font-feature-settings`.

For higher-level control of font variations, there was general consensus that the `font-weight` property would be tied to the `wght` font axis registered in the OpenType specification, `font-stretch` would be tied to `wdth`, while `font-style` would be tied to `ital` and `slnt`.

OpenType opsz variation axis and CSS font-size

The consensus was that the CSS `font-size` property could be tied to the axis registered for optical size, `opsz`. The `opsz` axis provides different designs for different sizes. Commonly, a lower value on the `opsz` axis yields a design that has wider glyphs and spacing, thicker horizontal strokes and a taller x-height. The OpenType spec suggests that “applications may choose to select an optical-size variant automatically based on the text size”, and states: “The scale for the Optical size axis is text size in points”. Apple’s TrueType Variations specification (on which OpenType Font Variations is based) also mentions point size as the scale for interpreting the `opsz` axis: “'opsz', Optical Size, Specifies the optical point size.” Notably, neither the OpenType spec nor Apple’s TrueType spec addresses how `opsz` values should be interpreted in environments where the typographic point is not usefully defined.

Optical sizing introduces a new factor in handling text boxes in web documents. If the font size of a text box changes, the proportions of the box do not remain constant, because the font scales non-linearly; typically the width grows more slowly than the height, because of the optical compensations in typeface design mentioned above. Realizing that many web documents may rely on the assumption of linear scaling, Twardoch proposed an additional CSS property, `font-optical-sizing`:

- `auto`: “enables” optical sizing by tying the selection of a value on the `opsz` axis to the font size
- `none`: “disables” optical sizing by untying that selection, so font size changes scale the text linearly

The `font-optical-sizing` property is currently part of the CSS Fonts Module Level 4 working draft.

Controversy: opsz axis and CSS font-size (px vs. pt)

Unfortunately, recent browser developments have introduced ambiguity in how `opsz` values should be interpreted:

- ~Most browser implementers interpret `opsz` as expressed in CSS `pt` units (points). If optical sizing is enabled, all text has its `opsz` axis set to the value of the font size in `pt`.~ [In fact, Chrome and Firefox, as well as Safari, interpret `opsz` in `px` units. Updated thanks to @drott’s comment below.]
- Apple in Safari has decided to interpret `opsz` as expressed in CSS `px` units (pixels). If optical sizing is enabled, all text has its `opsz` axis set to the value of the font size in `px`.
- Font makers and typographers are upset at Apple’s decision. They design fonts with the assumption that `opsz` is expressed in points. Since `px` values are commonly higher than `pt` values (typically at a ratio of 4:3), interpreting `opsz` in `px` means that a higher optical size will be chosen than intended. For 9pt/12px text, the `opsz` design 12 will be chosen rather than the intended 9, which will yield text that is too thin, too tightly spaced, and potentially illegible. They argue that the user experience will degrade, and optical sizing will actually yield worse results than no optical sizing, effectively defeating its whole purpose and unjustly giving variable fonts a bad reputation. Inconsistent behaviour with the same font will cause problems for font makers and CSS authors.
- Apple defends this decision, suggesting that CSS authors can simply set `font-variation-settings: 'opsz' n`.
- CSS authors object that using `font-variation-settings` breaks the cascade for font styling and, because of the nature of optical size, is unsuitable for application at the document root level. Therefore, they argue, it will not get used.

Proposed resolution: numerical values in font-optical-sizing

The CSS `font-optical-sizing` property currently controls the relationship between `font-size` and `opsz` by means of a simple switch (`auto`/`none`). We propose to allow a numeric value for `font-optical-sizing`. This value expresses the ratio of `opsz` units to CSS `px`. Examples:

- `font-optical-sizing: 1.0;` current Apple Safari behaviour, where 1px = 1 `opsz` unit
- `font-optical-sizing: 0.75;` Apple TrueType and OpenType behaviour, where 1px = 0.75 `opsz` units (1px = 0.75pt in many user agents)
- `font-optical-sizing: 0.5;` custom behaviour where 2px = 1 `opsz` unit, which would “beef up” the text (suitable for very small devices)
- `font-optical-sizing: 2.0;` custom behaviour where 1px = 2 `opsz` units, which would “reduce the beefiness” of the text (suitable for large devices)
- `font-optical-sizing: auto;` use the `font-optical-sizing` ratio defined in the user agent stylesheet

Results

User agents can ship with a default `font-optical-sizing` value other than 1.0. (The CSS specification might recommend 0.75 as a reasonable default for most situations.)

Font makers can ship a single font whose `opsz` axis works as intended in browsers as well as in print.

CSS authors can change the value whenever they like, independently of the choices made by browser vendors and font makers.
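
To illustrate how a user agent default and an author's value would interact under this proposal, here is a minimal Python sketch of resolving the computed `font-optical-sizing` value to an `opsz` value. `resolve_opsz` and `UA_DEFAULT_RATIO` are hypothetical names, and the 0.75 default is only the value the proposal says the specification "might recommend", not a specified default.

```python
from typing import Optional, Union

# Illustrative user agent default ratio (assumption): the proposal says the
# CSS specification "might recommend" 0.75, but no such default is specified.
UA_DEFAULT_RATIO = 0.75


def resolve_opsz(
    font_size_px: float,
    font_optical_sizing: Union[str, float],
    ua_default_ratio: float = UA_DEFAULT_RATIO,
) -> Optional[float]:
    """Return the opsz value a user agent would apply, or None if disabled.

    font_optical_sizing is the computed property value: "none", "auto",
    or a numeric ratio of opsz units to CSS px.
    """
    if font_optical_sizing == "none":
        # Optical sizing is off: opsz is not tied to font-size at all.
        return None
    if font_optical_sizing == "auto":
        # "auto" defers to the ratio in the user agent stylesheet.
        ratio = ua_default_ratio
    else:
        # An author-specified number overrides the user agent default.
        ratio = float(font_optical_sizing)
    return ratio * font_size_px


if __name__ == "__main__":
    print(resolve_opsz(12, "auto"))  # 9.0 (12px = 9pt text)
    print(resolve_opsz(12, 1.0))     # 12.0 (current Safari mapping)
    print(resolve_opsz(12, "none"))  # None
```

With the illustrative 0.75 default, 12px (9pt) text resolves to `opsz` 9, matching the point-based scale font makers design for, while an author who prefers the current Safari mapping can opt in with `font-optical-sizing: 1.0`.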

CSS authors can specify a different `font-optical-sizing` ratio for different media queries, including print, or for aesthetic purposes.

End-users can be offered accessibility modes that choose low values for `font-optical-sizing` to ensure lower-than-default `opsz` values and more legible text.

Note

Proposers