@svgeesus

Closed smfr closed 4 years ago

@svgeesus

Isnʼt this just #300 reloaded?

Unless the author or user requested something else, the browser would be expected to use sRGB as its working color space for webcompat.

I don't think so. Authors will be using lab()/lch() for their consistent treatment of lightness, so a gradient from lch(50% 100 10deg) to lch(50% 100 100deg) should use lightness 50% for all its colors. You won't get that if colors are interpolated in sRGB, and I don't think authors should have to opt the entire page into a working lab colorspace to get this.

When gradients and animations use lab()/lch() colors, should the gradient/animation interpolate in the lab() colorspace?

Ideally, yes. But they won't by default, because then the second half of a gradient from

would be different in implementations that support lab() and lch(). So for webcompat, the default needs to be sRGB as at present.

Isnʼt this just #300 reloaded?

Yes.

I don't think authors should have to opt the entire page into a working lab colorspace to get this.

I agree, which is why a per-element working colorspace, rather than a brute force all-or-nothing working colorspace for the whole page, is more flexible and author friendly.

I think we all agree that Lab interpolation is in every way superior, and the only reason we can't switch everything to that by default is backwards compat, right?

Assuming the above statement is correct, I think anything where authors have to explicitly enable lab interpolation is a bad idea from a usability point of view and should be a last resort if nothing more reasonable can be implemented. It seems reasonable to me that as long as there's at least 1 non-sRGB color, the interpolation should happen in Lab, since there's no backwards compat concern.

If a gradient/animation uses a mixture of lab() and rgb() colors, I assume it would fall back to RGB interpolation.

If you interpolate Lab and sRGB via sRGB interpolation, you could end up having an abrupt jump in the first interpolation step, since the Lab color may be outside the sRGB gamut. Given that sRGB is rather small compared to Lab, that's not a rare case, but significantly more than 50% likely. Interpolation must always happen in a superset of the gamuts of the colors involved, so Lab is a safe choice, whereas sRGB is not.
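To make the gamut point concrete, here is a minimal sketch that converts a Lab color (D50) to linear-light sRGB and checks whether every channel lands in [0, 1]. The function names (`labToLinearSrgb`, `isInSrgbGamut`) and the tolerance are illustrative assumptions, and the matrices are rounded textbook values (Bradford adaptation, standard sRGB primaries), so treat it as an approximation rather than spec sample code.

```javascript
// Sketch: test whether a CIE Lab (D50) color falls inside the sRGB gamut.
// Matrices are rounded textbook values; fine for a rough gamut check.
const D50_WHITE = [0.96422, 1.0, 0.82521];

// Bradford chromatic adaptation from D50 to D65.
const D50_TO_D65 = [
  [0.9555766, -0.0230393, 0.0631636],
  [-0.0282895, 1.0099416, 0.0210077],
  [0.0122982, -0.0204830, 1.3299098],
];

// XYZ (D65) to linear-light sRGB.
const XYZ_TO_LINEAR_SRGB = [
  [3.2406, -1.5372, -0.4986],
  [-0.9689, 1.8758, 0.0415],
  [0.0557, -0.2040, 1.0570],
];

function multiply(m, v) {
  return m.map((row) => row[0] * v[0] + row[1] * v[1] + row[2] * v[2]);
}

function labToXyzD50([L, a, b]) {
  const epsilon = 216 / 24389;
  const kappa = 24389 / 27;
  const fy = (L + 16) / 116;
  const fx = a / 500 + fy;
  const fz = fy - b / 200;
  // Inverse of the Lab companding function (cube, with a linear toe).
  const inv = (f) => (f ** 3 > epsilon ? f ** 3 : (116 * f - 16) / kappa);
  const y = L > kappa * epsilon ? fy ** 3 : L / kappa;
  return [inv(fx) * D50_WHITE[0], y * D50_WHITE[1], inv(fz) * D50_WHITE[2]];
}

function labToLinearSrgb(lab) {
  return multiply(XYZ_TO_LINEAR_SRGB, multiply(D50_TO_D65, labToXyzD50(lab)));
}

// The tolerance absorbs rounding in the matrices above (an assumption).
function isInSrgbGamut(lab, tolerance = 1e-3) {
  return labToLinearSrgb(lab).every((c) => c >= -tolerance && c <= 1 + tolerance);
}

console.log(isInSrgbGamut([50, 0, 0]));   // neutral mid gray
console.log(isInSrgbGamut([50, 100, 0])); // strongly saturated red-magenta
```

A color that fails this check would have to be clipped or gamut-mapped before an sRGB interpolation could even start, which is where the abrupt first step comes from.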

What happens if you mix lab() and colors in one of the other predefined colorspaces (display-p3, rec-2020 etc)?

Given that Lab encompasses all visible colors, Lab interpolation should be safe here too.

I agree, which is why a per-element working colorspace, rather than a brute force all-or-nothing working colorspace for the whole page, is more flexible and author friendly.

I don't think per-element would cut it either, ideally it needs to be per operation. You could have a transition and a gradient on the same element, from different stylesheets. One of them is between sRGB colors, the other between Lab colors. You need to interpolate the former in sRGB for webcompat, but the latter in Lab because sRGB would produce terrible results (since the colors could very likely be both out of sRGB gamut). There could be a document or element color space to fall back on perhaps, but interpolating Lab colors in sRGB should never happen.

It seems reasonable to me that as long as there's at least 1 non-sRGB color, the interpolation should happen in Lab, since there's no backwards compat concern.

I think this is definitely moving in the right direction, but would suggest modifying this so that if the gradient contains multiple colorspaces, interpolation should happen in an appropriate space that is a superset of them all.

Edit:

I think we all agree that Lab interpolation is in every way superior, and the only reason we can't switch everything to that by default is backwards compat, right?

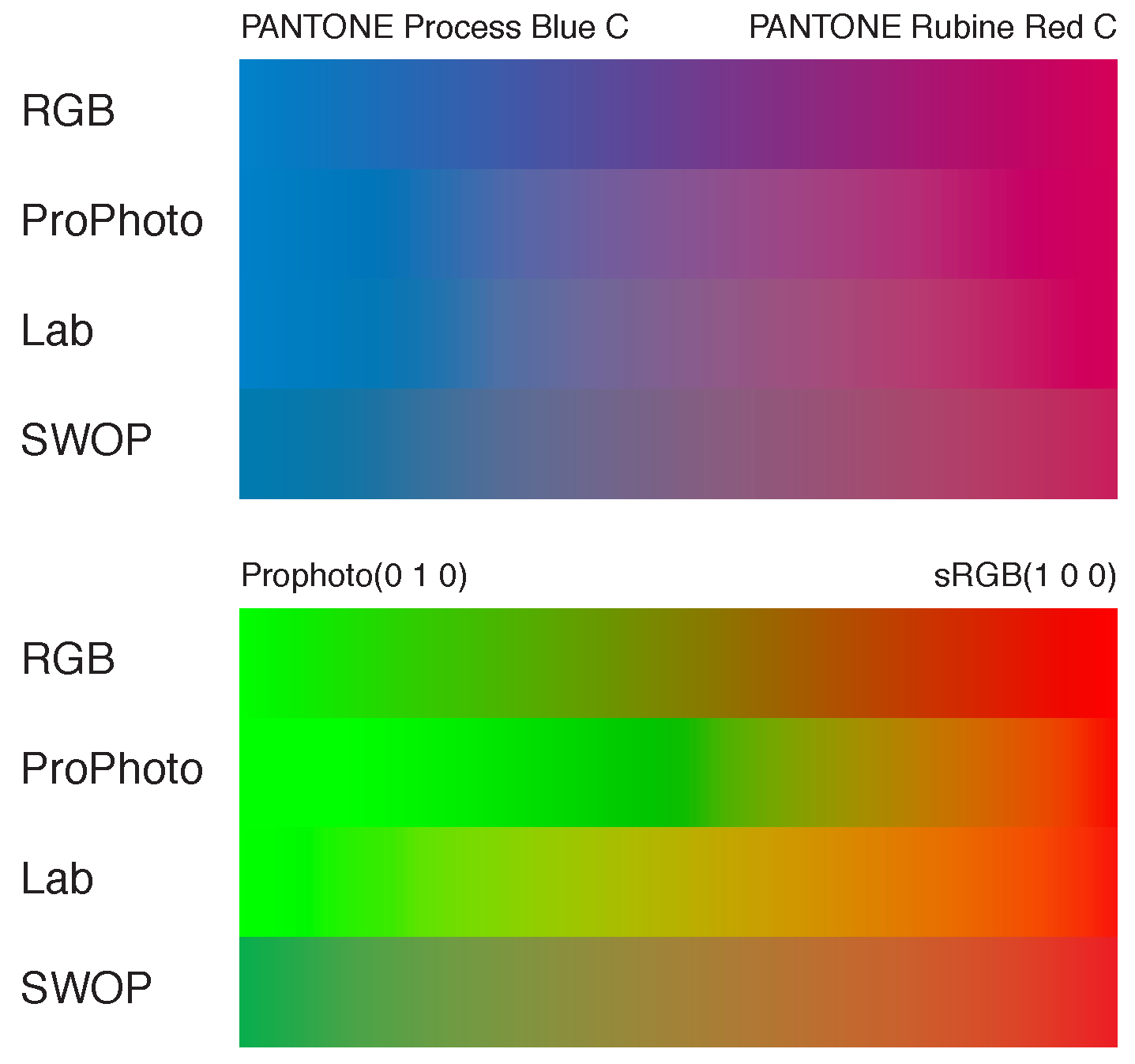

Actually while testing for https://github.com/w3c/csswg-drafts/issues/2023 I found this not always to be the case. Gradients between two Pantone colors based on the Lab space didn't look as "correct" to me as a gradient between them in the CMYK space. Subjective, yes, but I don't think we can assume Lab will always be the best choice. I can knock up an example if this would be useful.

How about the following heuristic then:

The heuristic can easily be overridden to a wider color space with the relative color syntax from #3187 that is added in Color 5.

A gradient from device-cmyk to sRGB, or sRGB to Lab, or some other exotic combination is fairly unlikely to give good results, and I'd expect would be down to the user not understanding what they've asked for.

Not necessarily. It could be between a variable/user defined color and a fixed color. I don't think we should brush off such use cases.

Given that Lab encompasses all visible colors, Lab interpolation should be safe here too.

Yes.

I don't think per-element would cut it either, ideally it needs to be per operation. You could have a transition and a gradient on the same element, from different stylesheets. One of them is between sRGB colors, the other between Lab colors. You need to interpolate the former in sRGB for webcompat, but the latter in Lab because sRGB would produce terrible results (since the colors could very likely be both out of sRGB gamut).

Ouch, you are right. (This is why SVG had separate color-interpolation and color-interpolation-filters properties, with different initial values).

Interpolation between colors of different color spaces, where one color space is a strict subset of the other is done in the wider color space

Do we particularly need that one? "wider" is not easily determined; for example display-p3 and a98-rgb are largely overlapping; though prophoto-rgb is wider (and includes imaginary colors)

Do we particularly need that one? "wider" is not easily determined; for example display-p3 and a98-rgb are largely overlapping; though prophoto-rgb is wider (and includes imaginary colors)

I was wondering about that. I think 1 and 3 are sufficient, especially if there's a way to override the heuristic (e.g. relative color syntax to convert between color spaces)

Having a requirement to blend in Lab when you know you can get better, and faster, results in another space seems counter-productive. It might be useful to leave this door open for implementers, but I'm not very invested in this position so long as point 1 remains.

blend in Lab when you know you can get better, and faster results in another space

Could you give an example where blending in another space than Lab is better? I can only think of blending in LCH.

Also, the proposal is not to force blending in Lab, just to have that as a sensible default. Overriding to explicitly use another space would still be allowed. The point is to not require such an override every single time (to avoid blending in sRGB).

Could you give an example where blending in another space than Lab is better? I can only think of blending in LCH.

That's a good question. PDF to the rescue.

That's an export from Acrobat, so it's obviously sRGB and I've got no control over how the colors were shifted, but it's a reasonable representation. Original PDF is blend.pdf

The first blend is between two largely in-gamut print colors, the second is the case we're specifically talking about here: a color which is out of gamut in sRGB on the left, a color which is in-gamut in sRGB on the right.

So to answer your question Chris: No, no I can't. The Lab blend is clearly superior in this case.

I like the idea of defaulting to Lab for interpolation if the two colors are in different spaces, and otherwise using the specified color space, since it makes it easier to understand what will happen. Something like Lea suggests to explicitly convert a color value into a particular color space (e.g. using the #3187 syntax), in case a different one is wanted for interpolation, could be the mechanism to choose the space.

/* transition between sRGB green and p3 100% red in Lch */

.x { background-color: lch(from green); transition: 1s background-color; }

.x:hover { background-color: lch(from color(display-p3 1 0 0)); }

Though one down side is that I think it would be more useful to interpolate between two display-p3 colors in Lab space, but that behavior is the one that would need more syntax.

@LeaVerou:

- Interpolation between colors of the same color space is done in that color space

- Interpolation between colors of different color spaces, where one color space is a strict subset of the other is done in the wider color space

- Any other interpolation is done in Lab
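As a rough illustration of how the three rules above could be evaluated, here is a small sketch. The `STRICT_SUPERSETS` table and the function names are hypothetical; which spaces count as strict supersets of which is exactly the open question in this thread, so the table only lists a few pairs assumed for the example.

```javascript
// Hypothetical containment table: for each space, the spaces assumed to
// strictly contain its gamut. Illustrative only, not taken from any spec.
const STRICT_SUPERSETS = {
  "srgb": ["display-p3", "rec2020"],
  "display-p3": ["rec2020"],
};

function isStrictSubset(inner, outer) {
  return (STRICT_SUPERSETS[inner] || []).includes(outer);
}

function pickInterpolationSpace(spaceA, spaceB) {
  // Rule 1: same color space, interpolate in that space.
  if (spaceA === spaceB) return spaceA;
  // Rule 2: one space strictly inside the other, use the wider one.
  if (isStrictSubset(spaceA, spaceB)) return spaceB;
  if (isStrictSubset(spaceB, spaceA)) return spaceA;
  // Rule 3: anything else falls back to Lab.
  return "lab";
}

console.log(pickInterpolationSpace("srgb", "display-p3"));    // "display-p3"
console.log(pickInterpolationSpace("display-p3", "a98-rgb")); // "lab"
```

Dropping rule 2, as discussed in the replies, would amount to deleting the two `isStrictSubset` checks.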

I think we could relax the second rule to only affect strict supersets of sRGB. We could also amend the third rule if it was possible for the author or user to explicitly select a working color space.

By the way, are color keywords necessarily in sRGB? Static predefined ones probably are (although, like HSL and HWB, they are defined in a section of their own), but what about currentcolor and system colors?

Perhaps we could combine a global working color space per stylesheet with local working color spaces per element or per style rule.

lab() or lch() notation is used for one of the base colors or for currentcolor, device-cmyk(), color() notation if there is only one being used, not counting sRGB, color-space property, defaulting to the global working color space, but perhaps initialized to CIELAB. @color-space at-rule, defaulting to sRGB.

Since no one's brought it up yet:

SVG 1 defined a color-interpolation property, which would affect how gradients and animations would interpolate (and was also supposed to affect compositing, and some other operations). Implementations are inconsistent currently in what it does affect. There is discussion about removing some or all of the definition from SVG 2, in https://github.com/w3c/svgwg/issues/366

The SVG color-interpolation accepts two options, sRGB and linearRGB (RGB without any gamma correcting, just linearly interpolate the values in the rgb() vector), plus an auto option. But it could logically be extended to include other color spaces, including lab/lch.
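For readers unfamiliar with the difference between the two options, here is a minimal sketch of mixing two sRGB colors either directly on the gamma-encoded values or in linear light using the standard sRGB transfer function. The function names are illustrative, and components are assumed to be in the 0 to 1 range.

```javascript
// Standard sRGB transfer function and its inverse (components in 0..1).
function srgbToLinear(c) {
  return c <= 0.04045 ? c / 12.92 : ((c + 0.055) / 1.055) ** 2.4;
}

function linearToSrgb(c) {
  return c <= 0.0031308 ? c * 12.92 : 1.055 * c ** (1 / 2.4) - 0.055;
}

// Interpolate directly on the gamma-encoded values ("sRGB").
function mixSrgb(a, b, t) {
  return a.map((v, i) => v + (b[i] - v) * t);
}

// Decode to linear light, interpolate, then re-encode ("linearRGB").
function mixLinearRgb(a, b, t) {
  return a.map((v, i) => {
    const la = srgbToLinear(v);
    const lb = srgbToLinear(b[i]);
    return linearToSrgb(la + (lb - la) * t);
  });
}

const black = [0, 0, 0];
const white = [1, 1, 1];
console.log(mixSrgb(black, white, 0.5));      // [0.5, 0.5, 0.5]
console.log(mixLinearRgb(black, white, 0.5)); // roughly 0.735 per channel
```

The midpoint of a black-to-white ramp comes out noticeably lighter in linear light, which is the kind of visible difference the property controls.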

But…

This would be a “per element” setting, not a “per operation” setting, and as Lea mentioned, this may be too coarse for some authors: changing the setting for one thing (e.g., a gradient) could have unanticipated side effects. Either way, we'd need clearer definitions of what the property affected.

The CSS Working Group just discussed Do gradients/animations using lab/lch colors interpolate in the Lab colorspace?.

/me I'm looking at this web page and the original PDF side by side on a wide color gamut monitor.

/me my eyes! my eyes!

what about currentcolor and system colors?

Good point. CurrentColor (like everything else) started out as sRGB but could now be any <color>.

"The keyword currentcolor represents value of the color property on the same element."

And the ED currently says "The names resolve to colors in sRGB." but also "(Two special color values, transparent and currentcolor, are specially defined in their own sections.)".

So the spec needs to stop saying that currentColor is a named color. Or say that all the X11 colors and transparent are sRGB but that currentColor has no such restriction.

my eyes! my eyes!

/me downloads the pdf

MY EYES!

... noise of me frantically checking I uploaded the correct file ...

Thinking about the cylindrical spaces (HSL and LCH) - the intention is, iirc, that they interpolate the components as stated (rather than doing a linear interpolation thru the space), so if you interpolate yellow->blue will be green in the middle, not gray, right?

Assuming that's the case, how do we handle interpolation to a zero-chroma color? Blue->white should presumably just have the blue become paler and paler until you hit white, right? It should not interpolate the hue angle to some arbitrary value, such as going to 0deg and thus becoming purplish in the middle, right?
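A small sketch of the difference between the two readings: interpolating the LCH components as stated versus interpolating linearly through the space (that is, in rectangular Lab coordinates). The function names are illustrative, and the hue is interpolated naively here with no shortest-path logic.

```javascript
function lerp(a, b, t) {
  return a + (b - a) * t;
}

// Polar LCH to rectangular Lab.
function lchToLab([L, C, H]) {
  const h = (H * Math.PI) / 180;
  return [L, C * Math.cos(h), C * Math.sin(h)];
}

// Straight line through the space: convert to Lab, mix, report chroma.
function mixInLab(lch0, lch1, t) {
  const p = lchToLab(lch0);
  const q = lchToLab(lch1);
  const [L, a, b] = p.map((v, i) => lerp(v, q[i], t));
  return { L, C: Math.hypot(a, b) };
}

// Component-wise in the cylindrical space: L, C and H each move linearly.
function mixInLch(lch0, lch1, t) {
  return {
    L: lerp(lch0[0], lch1[0], t),
    C: lerp(lch0[1], lch1[1], t),
    H: lerp(lch0[2], lch1[2], t),
  };
}

const a = [50, 60, 0];
const b = [50, 60, 180];
console.log(mixInLab(a, b, 0.5)); // chroma collapses to ~0 (gray midpoint)
console.log(mixInLch(a, b, 0.5)); // chroma stays 60, hue passes through 90
```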

Assuming that's the case, then we almost certainly want this to apply both to zero-chroma and "very close to zero" chroma; we don't want a discontinuity in behavior when one of the endpoints is exactly on vs slightly off the L axis.

This implies we want to define something thematically similar to premultiplied alpha; we want the hue-angle interpolation to favor the higher-chroma color, such that if going from "has chroma" to "zero chroma", the hue angle doesn't change until the final moment (when it no longer matters); when going from "higher chroma" to "lower chroma", the hue angle interpolates non-linearly, staying close to the higher-chroma hue for most of the transition before quickly transitioning to the lower-chroma hue at the end.

I don't know if there's already some industry approaches to this, but if not, perhaps we could define something akin to the gradient-midpoint approach, selecting the "midpoint" based on the relative ratios of the chromas?
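One possible reading of "favor the higher-chroma color", sketched below, is to weight the hue by chroma in the same way premultiplied alpha weights color by opacity. This is only an illustration of the idea, not a formula proposed in the thread; `hueProgress` and `mixLchChromaWeighted` are hypothetical names, and the hue difference is taken naively without shortest-path handling.

```javascript
// Fraction of the way the hue has moved from H0 to H1 at time t, with each
// endpoint's pull on the hue proportional to its chroma.
function hueProgress(c0, c1, t) {
  const w0 = (1 - t) * c0;
  const w1 = t * c1;
  const total = w0 + w1;
  // Both weights zero: the hue is invisible, so fall back to linear.
  return total === 0 ? t : w1 / total;
}

function mixLchChromaWeighted([L0, C0, H0], [L1, C1, H1], t) {
  const u = hueProgress(C0, C1, t);
  return [L0 + (L1 - L0) * t, C0 + (C1 - C0) * t, H0 + (H1 - H0) * u];
}

// "Has chroma" to "zero chroma": the hue stays at 0 while chroma fades.
console.log(mixLchChromaWeighted([50, 120, 0], [50, 0, 180], 0.5)); // [50, 60, 0]
// Higher to lower chroma: the hue lags behind the linear midpoint.
console.log(mixLchChromaWeighted([50, 120, 0], [50, 40, 180], 0.5)); // [50, 80, 45]
```

With equal chromas the weighting reduces to plain linear hue interpolation, so the non-linearity only shows up as the chromas diverge.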

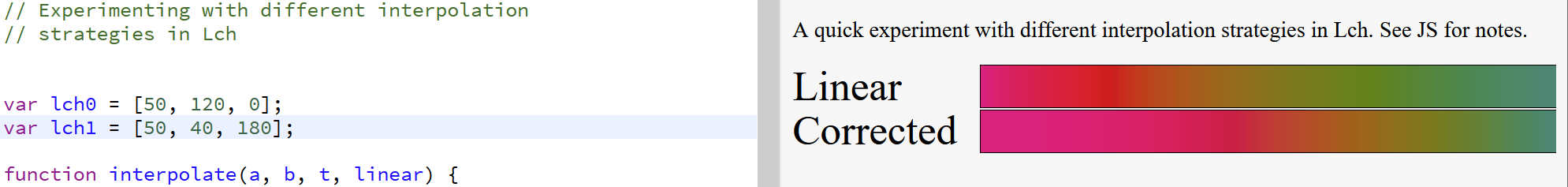

@tabatkins you mean this sort of case?

div {

background: linear-gradient(to right, lch(50% 120 0deg), lch(50% 0 180deg));

}

That's exactly the kind of observation that diverts me from the stuff I'm supposed to be doing. I couldn't find any JS color libraries that had ideas on this. But I think it's essentially just reducing the contribution to the hue of the gradient from colors with lower chroma.

Here's my best effort (note it's not an actual suggestion, just testing the concept): https://jsbin.com/qegehec/1/edit?js,output.

Here's a visual example of the kinds of discontinuities @tabatkins is talking about...

https://o.img.rodeo/video/upload/v1588360555/Screen_Recording_2020-05-01_at_12.09.09_PM.mp4

...using D3's color interpolation, which turns 'white' into L: 100 a:0 b:0

Right. (We're gonna default to shortest-path for hue angle, so 0deg to 359deg would be very little change, but 0deg to 180deg would show off the same issue, yeah.)
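For reference, shortest-path hue interpolation can be sketched as below. The function names are illustrative; hues are in degrees and the result is normalized to the 0 to 360 range. When the two hues are exactly 180 degrees apart the direction is a tie, and this sketch happens to go in the negative direction.

```javascript
// Signed difference from `from` to `to`, folded into [-180, 180).
function shortestHueDelta(from, to) {
  return ((((to - from) % 360) + 540) % 360) - 180;
}

function lerpHue(from, to, t) {
  const h = from + shortestHueDelta(from, to) * t;
  return ((h % 360) + 360) % 360; // normalize to [0, 360)
}

console.log(shortestHueDelta(0, 359)); // -1, not +359
console.log(lerpHue(350, 10, 0.5));    // 0, crossing the 360/0 boundary
```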

@tabatkins

I thought we had decided that premultiplied alpha was a mistake and we should've just handled transparent specially? Now we want to repeat this?

Ok, here's an idea: Perhaps we should just define chroma and hue as undefined, NaN (or some other special value) when L=0 or L=100 and hue as undefined when chroma = 0, and say that when interpolating between a defined and an undefined value, we get the defined value throughout? Because that's basically what's happening here and if I'm not mistaken, this shall give us the desired result in every case. Not sure what happens when interpolating between two undefined values though.
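A minimal sketch of the "undefined component" idea, assuming NaN is used as the marker. It only covers the chroma = 0 case for hue, not the L = 0 or L = 100 cases, and the function names are hypothetical. When both values are undefined the result stays NaN, which mirrors the open question above rather than answering it.

```javascript
// If one side is undefined (NaN), carry the defined value through unchanged.
function lerpComponent(a, b, t) {
  if (Number.isNaN(a)) return b; // also returns NaN when both are NaN
  if (Number.isNaN(b)) return a;
  return a + (b - a) * t;
}

// Mark hue as undefined whenever chroma is exactly zero.
function withUndefinedHue([L, C, H]) {
  return [L, C, C === 0 ? NaN : H];
}

function mixLch(lch0, lch1, t) {
  const p = withUndefinedHue(lch0);
  const q = withUndefinedHue(lch1);
  return p.map((v, i) => lerpComponent(v, q[i], t));
}

// The gray endpoint's 180deg hue is ignored; the hue stays at 0 throughout.
console.log(mixLch([50, 120, 0], [50, 0, 180], 0.5)); // [50, 60, 0]
```

Note that this switches behavior exactly at chroma 0, so a chroma of 0.001 would still contribute its full hue, which is the discontinuity raised earlier.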

@eeeps Thanks, that's quite illuminating. What app is this?

I can see the problem with the discontinuity, but I'm not sure about handling it. How close is close enough? It's a bit of a slippery slope (literally!)

@LeaVerou it's https://eeeps.github.io/cam02-color-schemer/, which I built on top of http://gramaz.io/d3-cam02/

(which uses Jch instead of Lch, and always turns #fff into J*100 a*-2 b*-1 (cartesian) & J*100 c3 h211° (cylindrical), not L:100,a:0,b:0 – so I botched the details... but the point remains)

@faceless2 I'm seeing some hard-to-explain-to-others behaviour in your example. Take this pair of colours for example:

var lch0 = [50, 120, 0];

var lch1 = [50, 40, 180];

I might reasonably expect a perceptually uniform gradient, but it is all bunched up because it is trying to avoid the discontinuity at C=0.

@eeeps by the way, from Safdar et al., "Perceptually uniform color space for image signals including high dynamic range and wide gamut":

"There have however, been reported unexpected computational failures in CIECAM02 (and hence in CIECAM02 based uniform color spaces) [20]."

Yes, I noticed that too, but I was hoping I could distract you with how much better it looked when chroma in lch[1] was almost 0 :-)

There's no basis to the formula I used other than it seemed to fit - perceptually I don't think it's great for the reasons you've pointed out.

But I do think gradually reducing the contribution of the hue as the chroma decreases is the right approach, rather than any sort of special handling when chroma=0 (which doesn't help when chroma is almost 0). If it has any merit, it's as a testbed.

If I get some time I'll tinker with it to see if I can improve things.

I agree that the contribution from undefined hue should merge in gradually, but over a much smaller interval.

BTW if tinkering, the hue difference should not be allowed to exceed 180.

I thought we had decided that premultiplied alpha was a mistake and we should've just handled transparent specially? Now we want to repeat this?

Nope, premultiplied is still the way to go. Previous threads are:

I agree that the contribution from undefined hue should merge in gradually, but over a much smaller interval.

Yeah, something that sticks to almost linear for most chroma differences, and only exposes a significant difference when one chroma starts getting close to zero, would be fine I think. Basically, if you can still see a reasonable amount of the hue, it should continue to be more or less uniform.

Okay, so the request is for some math that achieves:

Hmm, I'd have to play with the math a bit to see it, but I suspect what I'm asking for is a sigmoid function, tuned so that the flipover is roughly where you stop being able to easily see the hue. The output of the sigmoid tells you how much the midpoint of the hue transition should deviate from the actual midpoint, using the same "midpoint" mechanics as gradient transitions today.

FWIW, d3-color also does it with a NaN value: https://observablehq.com/@d3/achromatic-interpolation?collection=@d3/d3-color

@tabatkins I'm a little concerned that this may be too much magic. Is there going to be an opt-in or opt-out from this behavior?

No, I wouldn't expect so. It's doing the same thing as alpha premultiplication, which we don't give control over.

And on that note, uh, alpha should also be handled in a similar fashion. I think it's easy enough to make Lab just do the same premultiplied thing, but I don't know how Lch() would work.
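For context, this is roughly what premultiplied interpolation does for RGBA values, next to the naive component-wise version. The function names are illustrative, and colors are assumed to be `[r, g, b, alpha]` arrays with components in 0 to 1.

```javascript
// Interpolate with color channels weighted by alpha, then un-premultiply.
function mixPremultiplied(c0, c1, t) {
  const lerp = (a, b) => a + (b - a) * t;
  const alpha = lerp(c0[3], c1[3]);
  if (alpha === 0) return [0, 0, 0, 0]; // fully transparent: color is moot
  const rgb = [0, 1, 2].map((i) => lerp(c0[i] * c0[3], c1[i] * c1[3]) / alpha);
  return [...rgb, alpha];
}

// Naive version: every component, including alpha, moves independently.
function mixStraight(c0, c1, t) {
  return c0.map((v, i) => v + (c1[i] - v) * t);
}

const red = [1, 0, 0, 1];
const transparentBlack = [0, 0, 0, 0];
console.log(mixPremultiplied(red, transparentBlack, 0.5)); // [1, 0, 0, 0.5]
console.log(mixStraight(red, transparentBlack, 0.5));      // [0.5, 0, 0, 0.5]
```

The premultiplied result stays pure red while fading out, whereas the naive version darkens toward black on the way; the chroma-weighted hue idea is the analogous trick for LCH.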

Doing gradients in Lch is not going to work. There is no reason to do any kind of image processing in Lch/Lab except to use them for what they were designed for: predicting perceptual color differences (delta E).

For your education :

And on that note, uh, alpha should also be handled in a similar fashion. I think it's easy enough to make Lab just do the same premultiplied thing, but I don't know how Lch() would work.

Do you understand that Lab has a cubic root built-in ? How do you exactly intend to premultiply an alpha layer, supposed to model optical occlusion, with something that doesn't respect energy conservation ?

For your education : Do you understand that Lab has a cubic root built-in ?

@aurelienpierre I request you to read and understand Positive Work Environment at W3C: Code of Ethics and Professional Conduct. Explanations are welcome, but there is no need to treat others contributing to this thread as if they are uneducated or incompetent.

In addition, while I understand that posting a pirate PDF of a copyrighted book was probably well intentioned (and it is a good book, I have the first and second editions) please refrain from doing so in future.

Moving on to your technical points: yes, Lab and LCH are intended to be perceptually uniform and they are being added to CSS for precisely that reason. Your claim that their sole reason for existing is to calculate deltaE is incorrect. They are used for many other purposes such as gamut mapping or the creation of perceptually even color scales or the creation of perceptually even color harmonies, to mention a few.

And yes, compositing and blending should take place in a linear-light colorspace such as XYZ. The Compositing and Blending Level 1 specification does not, which I argued against for many years; eventually compatibility with industry standard tools (such as Photoshop blending modes) was deemed more important. So Compositing and Blending currently has the following known deficiencies:

For backwards compatibility it will always do so by default, but I do hope to add linear-light alternatives as an opt-in alternative in the future.

Explanations are welcome, but there is no need to treat others contributing to this thread as if they are uneducated or incompetent.

This thread shows blatant ignorance on the topic of color spaces when people try to bend alpha/occlusion and non-linear gradient together. I don't assume, I just read.

Your claim that their sole reason for existing is to calculate deltaE is incorrect. They are used for many other purposes such as gamut mapping or the creation of perceptually even color scales or the creation of perceptually even color harmonies, to mention a few.

Which are only Delta E applied as a numerical minimizer of visual error while doing color mapping. It's still Delta E. It's only Delta E all the way, otherwise they wouldn't have bothered to make 3D euclidean spaces where color differences are the euclidean norm.
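For readers following along, the "euclidean norm" being referred to is the original CIE76 color difference, which is just the straight-line distance between two Lab triples. A minimal sketch (the function name is illustrative; later formulas such as ΔE2000 are more involved and not shown):

```javascript
// CIE76 color difference: Euclidean distance between two [L, a, b] triples.
function deltaE76(lab1, lab2) {
  return Math.hypot(lab1[0] - lab2[0], lab1[1] - lab2[1], lab1[2] - lab2[2]);
}

console.log(deltaE76([50, 0, 0], [50, 3, 4])); // 5
```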

The Compositing and Blending Level 1 specification does not, which I argued against for many years; eventually compatibility with industry standard tools (such as Photoshop blending modes) was deemed more important. So Compositing and Blending currently has the following known deficiencies:

I truly hope they didn't forget to support Microsoft IE 8 in their standard. Everyone needs broken technology, especially when it's opensource and the whole industry relies on it.

Lab has been a first-order color-space in both PDF and Photoshop for many years, with support for both gradients and alpha. While it's certainly possible that Adobe are also "blatantly ignorant on the topic of color spaces", it seems unlikely.

And yet they are. Being the first competitor on a market doesn't prevent bad design decisions at early stage. Then they have to get on with that. Don't give meaning to mistakes. Lab is not intended for pixel manipulations. It is a color model to predict color differences while changing viewing conditions.

Also Lab is old and flawed (no HDR support, not even hue-linear), and there are dozens of newer spaces that tried to fix it (CIECAM02, CIECAM16, IPT, IPT-HDR, JzAzBz, Lab-HDR, etc.). So I wonder why devs still use that old flawed thing from 1976. My bet is because it is the only one they know (as in "blatantly ignorant").

If you understand the very concept of occlusion, from an optical point of view, you know that alpha occlusion in Lab is plain non-sensical. Just paint on some glass sheet with a marker and overlay that on whatever scene : the occlusion you get as a result cannot be modelled accurately in Lab, simply because you have a bloody cubic root in the mix that completely messes your radiometric ratios.

You seem to be under the impression this issue somehow relates to the use of Lab as a blend color space. It doesn't. @svgeesus has explained this already.

I'm sure your opinions on the use of Lab and the failure of the graphics arts industry to recognise their error in adopting it are of great interest, but that is not the topic of this issue. If your concern is specific to the CSS specification, feel free to open a new issue.

there are dozens of newer spaces that tried to fix it (CIECAM02, CIECAM16, IPT, IPT-HDR, JzAzBz, Lab-HDR, etc.). So I wonder why devs still use that old flawed thing from 1976. My bet is because it is the only one they know (as in "blatantly ignorant").

Your bet is wrong. Once more I ask you to stop assuming ignorance on behalf of others and treating them with contempt.

/me goes back to evaluating JzAzBz for CSS Color 5 and in preparation for the next ICC meeting on HDR

Responding to your technical point:

Lab is not intended for pixel manipulations. It is a color model to predict color differences while changing viewing conditions.

Lab is not very good at predicting color differences if the viewing conditions vary very far from a natural daylight like D50. This is why CSS Color 4 (like ICC do with their profiles) uses a separate chromatic adaptation step before computing Lab values of the corresponding colors. Currently using Bradford (again, aligning with ICC and industry practice) although I am also evaluating CAT16.

@aurelienpierre technical arguments are welcome here, insults and theories on motivations are not. Please use the guidelines in our code of conduct while contributing here: https://w3c.github.io/PWETF/

Meanwhile, CSS is still broken but work ethics stay positive.

Just do everything in linear RGB and allow users to tag in which space they input RGB numbers. The standard supports a zillion of fancy color "spaces" (or more accurately, shortcuts) while doing the very basics wrong.

Web-dev candy is something to care about once the basics work. CSS animations were good for nothing last time I checked, blurs and drop shadows fail miserably to produce anything somewhat related to the real world and produce ringing when overlaid on images.

ICC has no say in that. They care about printing. Completely different scope, different industry, different needs. Try OpenColor IO. Try ACES. Try stuff designed for multiple outputs, that properly separates view transforms and master pipeline.

Nothing is done in linear RGB except when the user specifically asks for it, as they might when the output level is used as a mask. Blur and other filters are straightforward alpha composition in sRGB or linear RGB, as the user chooses.

It's clear you know something about color, but most of your assertions about its use in CSS have been incorrect. And even if you did have anything useful to contribute, your apparent inability to convey your ideas without inexplicably calling someone an idiot in the process has rendered them worthless. It seems like a colossal waste of your time to me, but suit yourself. I've set your 𝛼=0

Saying that

Nothing is done in linear RGB except when the user specifically asks for it, as they might when the output level is used as a mask. Blur and other filters are straightforward alpha composition in sRGB or linear RGB, as the user chooses.

without seeing what a major bug and fundamental design mistake it is, is why you get

your apparent inability to convey your ideas without inexplicably calling someone an idiot in the process

There is literally zero polite way to describe the clusterfuck in this thread. https://github.com/w3c/csswg-drafts/issues/4647#issuecomment-571537439 is one of the most self-explanatory examples. How is a gradient from green to red skewing to a large orange part better than the "RGB" labelled one (what RGB by the way?). What is the point of showing a gradient from "ProPhoto 0, 1, 0", which is an imaginary color, in any other smaller-gamut RGB (which involves gamut clipping anyway)?

Image processing is light transport all the way. Color happens in your brain, no need to bother about it if you are not inside a CMS doing chromatic adaptation and such. Light is well known and well modelled by physics. None of the light physics involves "perceptual" cubic roots functions.

The only part where I use Luv space in my software is for controls in GUI. Then Luv parameters are converted to Yuv -> Yxy -> XYZ -> RGB, and the pixel processing happens in RGB.

What really makes me rage here is that the problems caused by image operations in Lab/name-your-perceptual-mess are well known in a universe of retouchers and CGI artists that seems entirely parallel to yours.

Alpha composition is not alpha composition in sRGB. Alpha means occlusion. Occlusion is an optical thing. Optics don't care about your OETF. No CGI artist in his right mind would overlay CGI on top of real footage in any space with a non-linear OETF. That simply doesn't work.

I have had to vent my feelings about the specification process in the past, but this related to stuff I had engaged in already, while @aurelienpierre is rambling before having contributed at all (as far as I can tell). What they apparently also do not get is that CSS effectively is both a UI and a storage method for color values that brings with it twenty years of legacy and billion-fold compatibility concerns. Please, keep it to productive reviews and proposals. They will be well appreciated.

PS: Singular they, obviously.
