I don't see how this would be compatible with user selection of content.

guest271314 closed this issue 5 years ago.

> I don't see how this would be compatible with user selection of content.

@martinthomson The feature request is compatible and consistent with user selection of content. The "Take a Screenshot" feature of Firefox Developer Tools provides a basic template for implementing it: the user selects the content to be captured, and that selection could be translated into the corresponding MediaStreamTrack constraints.

`resizeMode: "crop-and-scale"` roughly provides such functionality now, provided the user takes the time to test and verify the resulting output.
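For contrast with the proposal: `resizeMode: "crop-and-scale"` lets the user agent crop to satisfy a requested aspect ratio, but nothing in the constraint says *which* region is kept. The sketch below assumes, purely for illustration, a centered crop (the specification does not appear to require any particular placement, so browsers may differ) and computes the largest rectangle with the target ratio. `centeredAspectCrop` is a hypothetical helper, not part of any API.

```javascript
// Hypothetical helper: the largest rectangle with aspect ratio `ratio`
// (width / height) centered inside a srcWidth x srcHeight frame.
// Assumes a centered crop; real user agents may choose a different region.
function centeredAspectCrop(srcWidth, srcHeight, ratio) {
  let width = srcWidth;
  let height = Math.round(srcWidth / ratio);
  if (height > srcHeight) {
    // The source is too short for its full width: keep the full height instead.
    height = srcHeight;
    width = Math.round(srcHeight * ratio);
  }
  return {
    sx: Math.floor((srcWidth - width) / 2),
    sy: Math.floor((srcHeight - height) / 2),
    sWidth: width,
    sHeight: height,
  };
}

// A 1920x1080 screen cropped to 4:3 keeps only the middle 1440x1080.
console.log(centeredAspectCrop(1920, 1080, 4 / 3));
// { sx: 240, sy: 0, sWidth: 1440, sHeight: 1080 }
```

The crop is fully determined by the aspect ratio; there is no parameter for where the kept region sits, which is why this only roughly approximates selecting part of a screen.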

One example use case is calling getDisplayMedia() for a window opened with window.open(), where it is not possible to hide the scrollbars or the location and title bars, although the expected output is a WebM video without scrollbars, location bar, or title bar. After a day of testing, I was finally able to use CSS to meet the requirement (at Chromium 73) just moments ago at https://github.com/guest271314/MediaFragmentRecorder/tree/getdisplaymedia-webaudio-windowopen

```html
<!DOCTYPE html>
<html>
<head>
  <title>Record media fragments to single webm video using getDisplayMedia(), AudioContext(), window.open(), MediaRecorder()</title>
</head>
<body>
  <h1 id="click">open window</h1>
  <script>
    const click = document.getElementById("click");
    const go = ({width = 320, height = 240} = {}) => (async () => {
      const html = `<!DOCTYPE html>
<html>
<head>
<style>
* {padding:0; margin:0; overflow:hidden;}
#video {cursor:none; object-fit:cover; object-position: 50% 50%;}
video::-webkit-media-controls, audio::-webkit-media-controls {display:none !important;}
</style>
</head>
<body>
<!-- add 30 for title and location bars -->
<video id="video" width="${width}" height="${height}"></video>
</body>
</html>`;
      const blob_url = URL.createObjectURL(new Blob([html], {type: "text/html"}));
      let done;
      const promise = new Promise(resolve => done = resolve);
      const mediaWindow = window.open(blob_url, "getDisplayMedia", `width=${width},height=${height + 30},alwaysOnTop`);
      mediaWindow.addEventListener("load", async e => {
        console.log(e);
        const mediaDocument = mediaWindow.document;
        const video = mediaDocument.getElementById("video");
        const displayStream = await navigator.mediaDevices.getDisplayMedia({
          video: {
            cursor: "never", // this has little/no effect https://github.com/web-platform-tests/wpt/issues/16206
            displaySurface: "browser"
          }
        });
        console.log(displayStream, displayStream.getTracks());
        // Fetch every media resource up front; `from`/`to` are in seconds.
        const urls = await Promise.all([
          {src: "https://upload.wikimedia.org/wikipedia/commons/a/a4/Xacti-AC8EX-Sample_video-001.ogv", from: 0, to: 4},
          {src: "https://mirrors.creativecommons.org/movingimages/webm/ScienceCommonsJesseDylan_240p.webm#t=10,20"},
          {from: 55, to: 60, src: "https://nickdesaulniers.github.io/netfix/demo/frag_bunny.mp4"},
          {from: 0, to: 5, src: "https://raw.githubusercontent.com/w3c/web-platform-tests/master/media-source/mp4/test.mp4"},
          {from: 0, to: 5, src: "https://commondatastorage.googleapis.com/gtv-videos-bucket/sample/ForBiggerBlazes.mp4"},
          {from: 0, to: 5, src: "https://commondatastorage.googleapis.com/gtv-videos-bucket/sample/ForBiggerJoyrides.mp4"},
          {src: "https://commondatastorage.googleapis.com/gtv-videos-bucket/sample/ForBiggerMeltdowns.mp4#t=0,6"}
        ].map(async props => {
          const blob = await (await fetch(props.src)).blob();
          return {blob, ...props};
        }));
        click.textContent = "click popup window to start recording";
        // Draw a "click to start" poster into the popup's <video>.
        const canvas = document.createElement("canvas");
        canvas.width = width;
        canvas.height = height;
        const ctx = canvas.getContext("2d");
        ctx.font = "20px Monospace";
        ctx.fillText("click to start recording", 0, height / 2);
        video.poster = canvas.toDataURL();
        mediaWindow.focus();
        mediaWindow.addEventListener("click", async e => {
          video.poster = "";
          const context = new AudioContext();
          const mediaStream = context.createMediaStreamDestination();
          const [audioTrack] = mediaStream.stream.getAudioTracks();
          const [videoTrack] = displayStream.getVideoTracks();
          // applyConstraints() returns a Promise; await it so getSettings() reflects the result.
          await videoTrack.applyConstraints({
            cursor: "never",
            width,
            height,
            aspectRatio: width / height,
            resizeMode: "crop-and-scale"
          });
          mediaStream.stream.addTrack(videoTrack);
          console.log(videoTrack.getSettings());
          const source = context.createMediaElementSource(video);
          source.connect(context.destination);
          source.connect(mediaStream);
          [videoTrack, audioTrack].forEach(track => {
            track.onended = e => console.log(e);
          });
          const recorder = new MediaRecorder(mediaStream.stream, {
            mimeType: "video/webm;codecs=vp8,opus",
            audioBitsPerSecond: 128000,
            videoBitsPerSecond: 2500000
          });
          recorder.addEventListener("error", e => console.error(e));
          recorder.addEventListener("dataavailable", e => {
            console.log(e.data);
            done(URL.createObjectURL(e.data));
          });
          recorder.addEventListener("stop", e => {
            console.log(e);
            [videoTrack, audioTrack].forEach(track => track.stop());
          });
          video.addEventListener("loadedmetadata", async e => {
            console.log(e);
            try {
              await video.play();
            } catch (e) {
              console.error(e);
            }
          });
          // Play each fragment in turn; pause the recorder between fragments.
          for (let {from, to, src, blob} of urls) {
            await new Promise(resolve => {
              const url = new URL(src);
              if (url.hash.length) {
                // Try decimals before integers so "#t=10.5,20" yields [10.5, 20].
                [from, to] = url.hash.match(/\d+\.\d+|\d+/g).map(Number);
              }
              const blobURL = URL.createObjectURL(blob);
              video.addEventListener("play", e => {
                if (recorder.state === "inactive") {
                  recorder.start();
                } else if (recorder.state === "paused") {
                  recorder.resume();
                }
              }, {once: true});
              video.addEventListener("pause", e => {
                if (recorder.state === "recording") {
                  recorder.pause();
                }
                resolve();
              }, {once: true});
              video.src = `${blobURL}#t=${from},${to}`;
            });
          }
          recorder.stop();
        }, {once: true});
      });
      return promise;
    })()
    .then(blobURL => {
      console.log(blobURL);
      const video = document.createElement("video");
      video.controls = true;
      document.body.appendChild(video);
      video.src = blobURL;
    }, console.error);

    click.addEventListener("click", e => go(), {once: true});
  </script>
</body>
</html>
```

The Media Capture Screen Share API should provide a means to achieve such a requirement, either by fine-tuning the constraints for such a selection or by allowing the user to select the content to be captured, similar to the Firefox "Take a Screenshot" developer tool.
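Splitting a `#t=` media fragment with a pattern such as `/\d+|\d+\.\d+/g` is fragile: regex alternation tries branches left to right, so `\d+` always matches first and `#t=10.5,20` becomes three numbers. A slightly more careful sketch, handling only plain seconds (optionally prefixed `npt:`) and not the `hh:mm:ss` forms defined in Media Fragments URI 1.0; `parseTemporalFragment` is a hypothetical helper name:

```javascript
// The fragile pattern: "10.5" is split into "10" and "5".
console.log("#t=10.5,20".match(/\d+|\d+\.\d+/g)); // [ '10', '5', '20' ]

// Parse the temporal part of a media fragment: "#t=10,20", "#t=10.5", "#t=,6".
// Returns null when there is no `t` parameter; a missing start means 0 and a
// missing end is left undefined (play to the end of the resource).
function parseTemporalFragment(hash) {
  const params = new URLSearchParams(hash.replace(/^#/, ""));
  const t = params.get("t");
  if (t === null) return null;
  const [start = "", end = ""] = t.replace(/^npt:/, "").split(",");
  const toNumber = s => (s === "" ? undefined : Number(s));
  return { from: toNumber(start) ?? 0, to: toNumber(end) };
}

const { hash } = new URL("https://mirrors.creativecommons.org/movingimages/webm/ScienceCommonsJesseDylan_240p.webm#t=10,20");
console.log(parseTemporalFragment(hash)); // { from: 10, to: 20 }
```

When `to` comes back undefined, a loop that builds `#t=${from},${to}` should omit the end time instead of writing the string "undefined".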

What is the reason such functionality should not be provided by this API?

@martinthomson BTW, cursor: "never" currently does not produce the expected result. Again, CSS has to be used to hide the cursor.

@martinthomson The above workarounds would not be necessary if an HTML <video> could be treated as a "device" or window, so that MediaRecorder would not stop when the underlying media resource changes via the src attribute of HTMLVideoElement (new MediaStreamTracks being added to the captured stream). That is, the MediaStreamTrack of kind "video" could be configured to be read as a single MediaStreamTrack even when src changes (similar to RTCPeerConnection <transceiverInstance>.receiver.track, and to the single video track created by getDisplayMedia()).

@martinthomson I tested the code in the Mozilla browser console; getDisplayMedia() does not run at plnkr. The workaround that avoids recording the title and location bars at Chromium 73:

```javascript
// add 30 to height for Chromium 73 to not record title and location bar
// Firefox 68 records location bar using the same code
// TODO: adjust to not record location bar at Firefox
const mediaWindow = window.open(blob_url, "getDisplayMedia", `width=${width},height=${height + 30},alwaysOnTop`);
```

which sets the window height 30 px greater than the height MediaStreamTrack constraint, still records the location bar at Nightly.

It seems there are multiple requirements here.

One is for a browser to allow a user to select part of a screen. I do not see benefits in adding a constraint for that, so this seems like an implementation decision, not a spec one. Implementing this partial screen selection probably needs some thought. For instance, how to present to users which part of the screen is being captured.

The second requirement is to capture a browser tab without the title bar, location bar, slider... This seems more closely related to the cursor constraint. Are you only interested in the latter?

@youennf I am only interested in concatenating multiple media files into a single media file. While working toward that requirement I have tried several approaches; summaries of some of them can be found in each branch of the repository linked above.

> One is for a browser to allow a user to select part of a screen. I do not see benefits in adding a constraint for that, so this seems like an implementation decision, not a spec one. Implementing this partial screen selection probably needs some thought. For instance, how to present to users which part of the screen is being captured.

The live window, tab, or application is already presented to the user at Chromium 73, as thumbnails in a grid. Firefox provides a dropdown next to the location bar. The screen selected in the Chromium grid could be enlarged to 2/3 of the screen, or to the entire screen, for region selection if the user, for example, selects a radio button to toggle exact selection, similar to the way Firefox Developer Tools lets the user select a region of the screen. Note that the specification already has an exact constraint modifier. Given that a modifier named exact exists, this proposal is not asking for anything beyond the normal course of updating an API with more precise features; specifications and standards are, in general, not static.

The requirement is to capture the exact dimensions of a given display, monitor, screen, application, window, <video>, etc.

The selected region can be translated to sx, sy, sWidth, sHeight constraints. Alternatively, the page could set such constraints directly, e.g. videoTrack.applyConstraints({screenX: 100, screenY: 100, screenWidth, screenHeight}) (proposed names; no such constraints exist today).
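A sketch of that translation, assuming the user draws the selection on a preview of the captured surface that is displayed at a different size than the frames the track produces, so the rectangle has to be scaled into frame pixels. `previewRectToFrameRect` and its `selection`/`preview`/`frame` arguments are hypothetical; no sx/sy/sWidth/sHeight constraints exist in any specification today.

```javascript
// Hypothetical helper: scale a selection drawn on a preview (CSS pixels,
// relative to the preview's top-left corner) into the pixel grid of the
// captured frames, clamped to the frame bounds.
function previewRectToFrameRect(selection, preview, frame) {
  const scaleX = frame.width / preview.width;
  const scaleY = frame.height / preview.height;
  const sx = Math.max(0, Math.round(selection.x * scaleX));
  const sy = Math.max(0, Math.round(selection.y * scaleY));
  const right = Math.min(frame.width, Math.round((selection.x + selection.width) * scaleX));
  const bottom = Math.min(frame.height, Math.round((selection.y + selection.height) * scaleY));
  return { sx, sy, sWidth: Math.max(0, right - sx), sHeight: Math.max(0, bottom - sy) };
}

// A 160x120 box on a 480x270 preview of a 1920x1080 capture.
console.log(previewRectToFrameRect(
  { x: 100, y: 50, width: 160, height: 120 },
  { width: 480, height: 270 },
  { width: 1920, height: 1080 }
)); // { sx: 400, sy: 200, sWidth: 640, sHeight: 480 }
```

The four resulting values line up with the first four source arguments of the nine-argument CanvasRenderingContext2D.drawImage() form.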

The benefits for the end user should be self-evident: precise capture of a given screen or application, without unnecessary portions of the screen or application. Such a specification extension adding sx, sy, sWidth, sHeight is consistent with the existing width, height, resizeMode, etc. constraints. For the getDisplayMedia() API (and potentially the ImageCapture API), since the entire screen is being captured, it is reasonable to have constraints that select only part of a screen, similar to the source sub-rectangle parameters of CanvasRenderingContext2D.drawImage() (https://developer.mozilla.org/en-US/docs/Web/API/CanvasRenderingContext2D/drawImage):

sx (optional): The x-axis coordinate of the top left corner of the sub-rectangle of the source image to draw into the destination context.

sy (optional): The y-axis coordinate of the top left corner of the sub-rectangle of the source image to draw into the destination context.

sWidth (optional): The width of the sub-rectangle of the source image to draw into the destination context. If not specified, the entire rectangle from the coordinates specified by sx and sy to the bottom-right corner of the image is used.

sHeight (optional): The height of the sub-rectangle of the source image to draw into the destination context.
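Following those drawImage() semantics, a crop constraint would need defaults for omitted members. A sketch, assuming (as the quoted text describes for sWidth) that an omitted size extends to the bottom-right corner of the source, and assuming out-of-range values are clamped rather than rejected; a real specification would have to choose. `resolveCrop` is a hypothetical helper.

```javascript
// Hypothetical: resolve a partial { sx, sy, sWidth, sHeight } crop request
// against a source of size width x height. Omitted offsets default to 0,
// omitted sizes extend to the source's right/bottom edge, and every value
// is clamped so the rectangle stays inside the source.
function resolveCrop({ sx = 0, sy = 0, sWidth, sHeight } = {}, width, height) {
  const clamp = (v, lo, hi) => Math.min(hi, Math.max(lo, v));
  const x = clamp(sx, 0, width);
  const y = clamp(sy, 0, height);
  const w = clamp(sWidth ?? width - x, 0, width - x);
  const h = clamp(sHeight ?? height - y, 0, height - y);
  return { sx: x, sy: y, sWidth: w, sHeight: h };
}

console.log(resolveCrop({ sx: 100, sy: 50 }, 640, 480));
// { sx: 100, sy: 50, sWidth: 540, sHeight: 430 }
```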

> The second requirement is to capture a browser tab w/o title, location bar, slider... This seems somehow more closely related to cursor. Are you only interested in the latter?

The constraint `cursor:"never"` does not work. I filed an issue at wpt because there are no wpt tests that independently verify that. In the meantime, I created CSS workarounds for the non-working constraint and, after a day of trying different approaches, a workaround for not recording the location and title bars at Chromium 73.

The original branch that used `getDisplayMedia()` to record multiple media resources used `requestFullscreen()` to avoid having to deal with capturing portions of the default browser GUI. I tried `kiosk` mode at Chromium, though functionality in that mode is minimal, and decided to revisit excluding the portions of the screen that do not need to be captured. The above code, modified since posting the comment, does not record the title and location bars at Chromium 73, mainly by using CSS. I will dive into Firefox next.

These workarounds could be omitted if existing technologies were incorporated, at least into this API. Since this API by its very name is about capturing an entire screen, it is reasonable to conclude that some portions of a screen will need to be excluded or, conversely, that certain parts will need to be included to the exclusion of the rest. I am only requesting rectangular selection, not triangles, circles, or parallelograms, though those shapes should ultimately be possible too.

What I mean is that crop constraints at getDisplayMedia call time do not make a lot of sense.

Allowing a web page to efficiently manipulate video tracks (be they screen, camera or peer connection originating) with operations like cropping makes sense. This relates to requirements expressed in https://www.w3.org/TR/webrtc-nv-use-cases/#funnyhats*

@youennf From my perspective, crop constraints at getDisplayMedia() call time do make sense. Yes, this is similar to the linked document, though again my requirement is to capture video from an HTMLVideoElement, where multiple media files (audio and/or video) could be concatenated into a single Matroska or WebM file, which is essentially the output of each browser's Web Media Player implementation. Preferably this would not need a <video> element at all; e.g., a hypothetical new OfflineVideoContext(data).startRendering(), similar to OfflineAudioContext().startRendering(), that decodes, encodes, reads, and writes media in a Worklet or Worker context, potentially faster than real time, without necessarily playing the media through the browser's Web Media Player implementation (that is, an image: a <video> presented on the browser surface).

Motivation: https://creativecommons.org/about/videos/a-shared-culture; https://mirrors.creativecommons.org/movingimages/webm/ScienceCommonsJesseDylan_240p.webm (create such a video in an ostensibly FOSS browser without using any third-party code, only the code shipped with the browser, or determine that such a requirement is not possible).

Initial attempt: https://stackoverflow.com/questions/45217962/how-to-use-blob-url-mediasource-or-other-methods-to-play-concatenated-blobs-of, described in more detail at https://github.com/whatwg/html/issues/2824, https://github.com/whatwg/html/issues/3593, https://github.com/w3c/media-source/issues/209, https://github.com/w3c/mediacapture-record/issues/166 and https://github.com/w3c/mediacapture-main/issues/575. HTMLMediaElement.captureStream() and MediaRecorder currently do not provide a means to capture multiple video sources when the <video> src attribute changes. replaceTrack() and getDisplayMedia() are the closest I have come to meeting the requirement at both Chromium and Firefox.

Problems found along the way: Chromium crashes the tab when a MediaSource is captured using captureStream() (https://github.com/w3c/media-source/issues/190); canvas.captureStream() with AudioContext.createMediaStreamDestination() shows a noticeable loss of quality ("pixelation") in the closing frames; replaceTrack() mutes a small but noticeable portion of the last second of audio; getDisplayMedia() requires two user gestures plus CSS to remove the portions of the screen other than the <video>; the ended event is dispatched differently (or not at all) at Chromium and Firefox (https://github.com/w3c/mediacapture-fromelement/issues/78); and there are other bugs and interoperability concerns found while trying to write code for both Chromium and Firefox. I am also still not sure why MediaRecorder is specified to stop recording when a MediaStreamTrack is added to or removed from the MediaStream.

If the <video> element could be listed as a "device" (e.g., "videooutput" in enumerateDevices()), or <video> could be selected as an application or window for getDisplayMedia() (the Web Media Player that drives the HTML <video> element is essentially an application), or MediaRecorder were no longer specified to stop recording when the underlying media resource of a <video> changes via the src attribute, or replaceTrack() functionality were available in MediaRecorder (MediaRecorder.stream.replaceTrack(withTrack)) and/or MediaStream (MediaStream.replaceTrack(withTrack)) without dispatching stop at MediaRecorder (the same way transceiver.receiver.track stays a single track with potentially different media sources on a single timeline), then that might resolve the requirement.
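To make the "single timeline" idea concrete: with the pause/resume approach in the recording example, each fragment is appended to one output timeline. A sketch of where each fragment should land in the resulting WebM, assuming recording pauses exactly at each fragment's end and resumes exactly at the next fragment's start (real recordings only approximate this). `concatTimeline` is a hypothetical helper.

```javascript
// Hypothetical: given fragments with { from, to } in seconds, compute where
// each one starts and ends in the concatenated output, assuming no gaps.
function concatTimeline(fragments) {
  let offset = 0;
  return fragments.map(({ from, to }) => {
    const duration = to - from;
    if (!(duration > 0)) throw new RangeError(`invalid fragment ${from}-${to}`);
    const entry = { from, to, start: offset, end: offset + duration };
    offset += duration;
    return entry;
  });
}

// The seven fragments used in the recording example above.
const timeline = concatTimeline([
  { from: 0, to: 4 }, { from: 10, to: 20 }, { from: 55, to: 60 },
  { from: 0, to: 5 }, { from: 0, to: 5 }, { from: 0, to: 5 }, { from: 0, to: 6 },
]);
console.log(timeline[timeline.length - 1].end); // 40
```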

Edit: Taking it a step further, an API could expose each browser's MediaDecoder/MediaEncoder and WebM writer code, and/or a human-readable form of EBML, so that audio and video could be written as XML (https://github.com/vi/mkvparse; https://github.com/vi/mkvparse/blob/master/mkvcat.py) or JSON and then compressed, if necessary, into a WebM container. (I achieved something similar, uncompressed, using <canvas>, the Web Animations API (images as keyframes), and the Web Audio API, with the ability to adjust playback rate and reverse the media; synchronizing the audio with the images was an issue, and I lost the tests.)

@youennf Firefox includes viewportOffsetX and viewportOffsetY in the object returned by navigator.mediaDevices.getSupportedConstraints(), though those constraints are not applied to the MediaStreamTrack of kind "video". Is there a specification that clearly indicates which constraints can be applied to a MediaStreamTrack, depending on which API was used to obtain the MediaStream and MediaStreamTrack?

@youennf FWIW, after testing various approaches (during which Firefox froze the operating system on several occasions, requiring a hard reboot), I found that setting dom.disable_window_open_feature.location at "about:config" in Firefox results in the title bar not being recorded. I am not sure how such functionality could be incorporated into the Media Capture Screen Share API, though it would be helpful for the use case of not sharing specified regions of the captured window, screen, or application.

So what happens when you capture part of the screen, and the user moves the window?

@alvestrand What do you mean by "moves the window"?

> Firefox includes viewportOffsetX and viewportOffsetY

These are non-spec and should be removed. They originate from a browser-tab sharing experiment Firefox had behind a pref years ago, and worked solely with applyConstraints to move which area of a web page (specifically) to capture, independent of end-user scrolling. The idea was to let a viewer, using a data channel, scroll independently from the presenter, with the obvious privacy implications that follow. We have no current plans to revive this effort.

In short, they weren't general purpose pixel croppers, which I agree with @youennf belongs elsewhere.

> Is there a specification which clearly indicates which constraints are capable of being applied to a MediaStreamTrack

Track constraints are source-specific. If the specification of a source does not mention a constraint, then it is not supported for tracks from that source. https://github.com/w3c/mediacapture-main/issues/578 is hoping to clarify this.
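Because getSupportedConstraints() only reports which constraint names the user agent recognizes, not which ones a given source honors (Firefox listing viewportOffsetX above is an example), a page can at best drop unrecognized names before calling applyConstraints() and then compare getSettings() with what it asked for. A sketch of that pattern; `pickRecognized` and `unmetSettings` are hypothetical names, and the plain objects passed in stand in for the real getSupportedConstraints() and getSettings() results.

```javascript
// Keep only the constraint names that `supported` marks as recognized.
// In a page, `supported` would be navigator.mediaDevices.getSupportedConstraints().
function pickRecognized(constraints, supported) {
  return Object.fromEntries(
    Object.entries(constraints).filter(([name]) => supported[name] === true)
  );
}

// Names of bare-value constraints that the track's settings do not match.
// In a page, `settings` would be track.getSettings() after applyConstraints()
// resolves. Dictionary values ({ ideal, max, ... }) are skipped for simplicity.
function unmetSettings(requested, settings) {
  return Object.entries(requested)
    .filter(([, value]) => typeof value !== "object")
    .filter(([name, value]) => settings[name] !== value)
    .map(([name]) => name);
}

console.log(pickRecognized({ width: 320, cursor: "never" }, { width: true })); // { width: 320 }
```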

@jan-ivar I am not certain what the hesitancy or reluctance is about adding a constraint that provides a means to capture only part of a screen that will be shared, assuming I am reading that hesitancy correctly from the responses in this issue so far.

This feature request is consistent with screen capture programs (still image and live stream), and in fact consistent with the concept of setting width, height and resizeMode. This proposal simply requests a way to further refine the region of the screen to be captured.

It is reasonable to be able to select only part of a screen, both with a selection tool that visually outlines the portions of the screen to be captured and by setting sx, sy, sWidth, sHeight constraints.

Why should such functionality not be specified?

@jan-ivar FWIW, I dove into getDisplayMedia() and removing specific portions of the screen while trying to record multiple media resources to a single WebM file using MediaRecorder (the various attempts, nuances, and caveats can be read in the branches at https://github.com/guest271314/MediaFragmentRecorder). That is how this issue came about, and it is also why I filed https://github.com/w3c/mediacapture-main/issues/586 moments ago, requesting that the replaceTrack method, which judging by its history you championed, also be defined as a method of MediaStream.

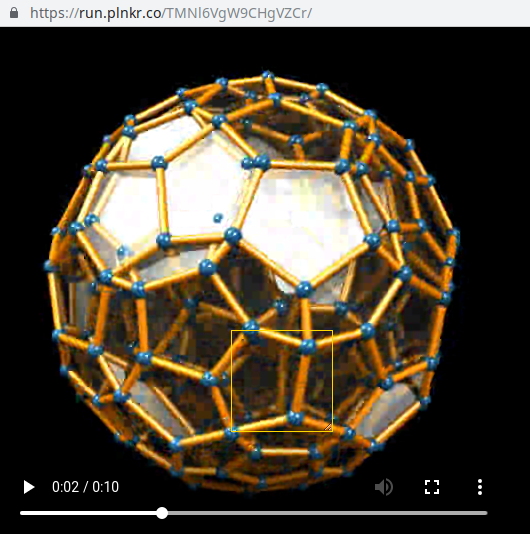

A rudimentary POC (https://plnkr.co/edit/UmrSwN?p=preview) for selecting only part of a screen for a screenshot (the movement and resizing of the selection element could be refined to work like, for example, https://codepen.io/zz85/pen/gbOoVP):

```html
<!DOCTYPE html>
<html>
<head>
</head>
<body>
  <script>
    (async () => {
      // Append a draggable, resizable, transparent box used to pick the region.
      function screenShotSelection() {
        const div = document.createElement("div");
        const styles = {
          background: "transparent",
          display: "block",
          position: "absolute",
          outline: "thin solid gold",
          width: "100px",
          height: "100px",
          left: "calc(50vw)",
          top: "calc(50vh)",
          overflow: "auto",
          resize: "both",
          cursor: "move",
          zIndex: 100
        };
        div.id = "screenShotSelector";
        div.ondragstart = e => {
          console.log(e);
        };
        div.ondragend = e => {
          const {clientX, clientY} = e;
          e.target.style.left = clientX + "px";
          e.target.style.top = clientY + "px";
        };
        Object.assign(div.style, styles);
        div.draggable = true;
        document.body.appendChild(div);
        return div;
      }

      const video = document.createElement("video");
      video.controls = true;
      video.style.objectFit = "cover";
      video.style.lineHeight = 0;
      video.style.fontSize = 0;
      video.style.margin = 0;
      video.style.border = 0;
      video.style.padding = 0;
      video.loop = true;
      video.src = "https://upload.wikimedia.org/wikipedia/commons/d/d9/120-cell.ogv";
      document.body.insertAdjacentElement("afterbegin", video);

      video.addEventListener("play", async e => {
        const selector = screenShotSelection();
        const stream = await navigator.mediaDevices.getDisplayMedia({
          video: {cursor: "never"} // has no effect at Chromium
        });
        const [videoTrack] = stream.getVideoTracks();
        const imageCapture = new ImageCapture(videoTrack);
        const osc = new OffscreenCanvas(100, 100); // dynamic
        const osctx = osc.getContext("2d");
        selector.addEventListener("dblclick", async e => {
          // Read the selection's position when the user confirms it, not when it was created.
          const {left, top} = window.getComputedStyle(e.target);
          console.log(left, top);
          // CSS pixel offsets only line up with the captured frame when the frame has the
          // same origin and scale as the page (no browser UI in the capture, devicePixelRatio 1).
          osctx.drawImage(await imageCapture.grabFrame(), parseInt(left), parseInt(top), 100, 100, 0, 0, 100, 100); // dynamic
          console.log(e.target.getBoundingClientRect(), URL.createObjectURL(await osc.convertToBlob()));
          videoTrack.stop();
          video.pause();
        }, {once: true});
      }, {once: true});
    })();
  </script>
</body>
</html>
```

Ideally, specifically for a screenshot (i.e., https://github.com/w3c/mediacapture-screen-share/issues/107):

1) once permission is granted to capture a screen, application, or tab, a draggable and resizable element with a transparent background is appended to a fullscreen selection UI;

2) once a region has been selected (for example, using a dblclick event on the draggable and resizable element), a MediaStreamTrack with the dimensions of the selection element is created and exists only long enough to capture a single image, without the entire screen being captured and then redrawn at the element's dimensions; the MediaStreamTrack is stopped immediately once the single image is captured.

Where the permission is not for a screenshot but for capturing a continuous media stream:

1) only the selected region is captured (for example, the bounding client rectangle of the <video> element in the example code), not the entire screen;

2) all other MediaStream and MediaStreamTrack functionality remains the same.

I would use this in my implementation of WebRTC. Getting exact dimensions is useful, and it is currently not allowed (e.g., see the section about TypeError at https://developer.mozilla.org/en-US/docs/Web/API/MediaDevices/getDisplayMedia: no exact or min constraints are allowed).
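On that TypeError: getDisplayMedia() rejects when a video constraint uses `min` or `exact`, or when `advanced` is present, while `max` and `ideal` are accepted. A sketch of a hypothetical helper that rewrites a constraint set so the call does not reject, downgrading `exact` to `ideal` as one possible policy (others are equally valid). The stricter values could then be attempted with applyConstraints() on the resulting track, which may reject with an OverconstrainedError.

```javascript
// Hypothetical helper: make video constraints acceptable to getDisplayMedia().
// Drops `advanced` and `min`, turns `exact` into `ideal` (unless an `ideal`
// is already present), keeps `max`, `ideal`, and bare values unchanged.
function toDisplayMediaConstraints(video) {
  if (typeof video !== "object" || video === null) return video; // e.g. `true`
  const result = {};
  for (const [name, value] of Object.entries(video)) {
    if (name === "advanced") continue;
    if (typeof value === "object" && value !== null && !Array.isArray(value)) {
      const { min, exact, ...rest } = value; // `min` is discarded
      if (exact !== undefined && rest.ideal === undefined) rest.ideal = exact;
      if (Object.keys(rest).length) result[name] = rest;
    } else {
      result[name] = value;
    }
  }
  return result;
}

console.log(toDisplayMediaConstraints({ width: { exact: 1280 }, height: { min: 720, max: 1080 } }));
// { width: { ideal: 1280 }, height: { max: 1080 } }
```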

Is this question still open for discussion?

@hoolahoop How does not allowing setting min or exact constraints affect selecting only a part of the screen?

The issue has not been closed yet.

This seems to be possible to do with current APIs, so no compelling reason seen for more browser surface.

I think the spec allows browsers to have a way to crop if they wanted to, but I don't think the application should have control over which part of the screen the user can pick.

@alvestrand @henbos Have you tried "Take a Screenshot" in Firefox Developer Tools? That is what I am asking to be specified as part of mediacapture-screen-share. Is there a compelling reason such functionality should not be specified?

> Is there a compelling reason such functionality should not be specified?

This functionality is allowed by the spec. The spec stays away from any specific way to constrain user selection UI so I do not see what the spec could say related to the proposed functionality.

"Take a Screenshot" in Firefox Developer Tools does not seem to require any specific spec.

This is really up to browser implementors to decide whether to add support for that functionality or not.

> Have you tried "Take a Screenshot" in Firefox Developer Tools? That is what I am asking to be specified as part of mediacapture-screen-share. Is there a compelling reason such functionality should not be specified?

I still don't see mediacapture-screen-share as a screenshot API, which makes me reluctant to add any language advising the browser that it must, or even should, have this type of picker. I'm not saying there aren't any use cases for capturing part of a screen, but I'm not convinced that we should attempt to mandate this, and as-is, browsers are allowed to implement such a picker.

If this use case were more compelling for getDisplayMedia, then I do think we should advise implementations, so that you don't end up having to do cropping in the application depending on whether or not the browser supports cropping. But again, this is not the intent of getDisplayMedia, and I think it is the browser's decision what UI to support.

For what you're asking I would like to see a different API than getDisplayMedia.

@henbos

> but again this is not the intent of getDisplayMedia

See the current language in the specification, emphasis added:

> **Abstract**
>
> This document defines how a user's display, **or parts thereof**, can be used as the source of a media stream using getDisplayMedia, an extension to the Media Capture API [GETUSERMEDIA].

We could ignore that language and write a specification from scratch which included the same language to achieve double-redundancy.

If that is what you believe is necessary, where should the specification be posted? WICG Discourse? I am not a "member" of the W3C, and am not really interested in becoming beholden to an organization, particularly one that cannot write the words "patent and copyright" when that is what it is asking about. The specification should be very simple. In fact, since Mozilla has already written and implemented the code, the only question is whether Chrome will implement the specification. Using getUserMedia() is a logical choice for the solution. Yes, specifications should give guidance on UI functionality, to avoid multiple implementations that vary widely. One example of such variance is the WebM files output by MediaRecorder: because track order is not specified, the tracks can be in an arbitrary order, which complicates merging the WebM files output by Chrome and Firefox, both within the same browser and between the two browsers. That does not even get to the H.264 codec in WebM that Chrome supports, though WebM was proffered as supporting only certain codecs.

In any event, how do you suggest to proceed?

@henbos There is an existing "Screenshot API" topic at WICG Discourse: https://discourse.wicg.io/t/proposal-screenshot-api/2412. There are comments citing privacy and security concerns. Well, getDisplayMedia() can currently leak information about tabs, windows, and applications for which permission to capture was not granted (https://github.com/w3c/mediacapture-screen-share/issues/108#issuecomment-521846010). That does not appear to be particularly alarming to some maintainers of the specification.

> but again this is not the intent of getDisplayMedia

From my perspective, getDisplayMedia() is well suited to selecting only part of the screen for capture, as the language in the specification currently states.

Can you explain the reasoning for your statement concerning the intent of getDisplayMedia()?

How can a reading of the specification infer what was not its intent, when the actual language includes the term "or parts thereof"?

@henbos The intent of this issue was to select a portion of the screen to record, e.g., a <video> element. Since an HTML <video> is not an "Application", in general it would be necessary to use ImageCapture.grabFrame() together with getDisplayMedia(). Since a PictureInPictureWindow is considered an "Application", there is a partial workaround, https://github.com/guest271314/MediaFragmentRecorder/tree/getdisplaymendia-pictureinpicture, though it has limitations on width and height. The similarity to a screenshot proposal or issue is incidental. The user should still be able to select only part of the screen using this API, without having to use other APIs to exclude the portions of the screen not intended to be captured. That is what led to the discovery that getDisplayMedia() at Chrome can leak tabs, applications, and windows that were not granted permission to be captured.

Chromium issue: https://bugs.chromium.org/p/chromium/issues/detail?id=994953; Firefox bug: https://bugzilla.mozilla.org/show_bug.cgi?id=1574662

What is the status on this issue? Restricting access to only a certain part of the screen would be greatly beneficial to the user for privacy reasons.

@MonsieurWave The issue was closed at https://github.com/w3c/mediacapture-screen-share/issues/105#issuecomment-521667012. No current movement.

@MonsieurWave One workaround is to use ImageCapture.grabFrame() and canvas.captureStream(): capture individual images from the MediaStreamTrack, process each image, then draw it to a <canvas>. This essentially requires two MediaStreams and two MediaStreamTracks; see https://bugs.chromium.org/p/chromium/issues/attachmentText?aid=397381.

But this only works on a ScreenCapture within the browser, does it?

> But this only works on a ScreenCapture within the browser, does it?

Can you clarify "within the browser"?

At Chromium it should be possible to select a "Tab" (the unmute event of MediaStreamTrack fires under certain conditions, https://bugs.chromium.org/p/chromium/issues/detail?id=1099280); an "Application", including a Picture-in-Picture window; or the "Entire screen".

Images from the MediaStreamTrack can be captured as ImageBitmap using ImageCapture.grabFrame(). Process each frame to select or remove parts of it, then transfer or draw the processed frame to a canvas; the MediaStreamTrack of the MediaStream from canvas.captureStream() then carries the processed frames.
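The "process the frame" step can be as simple as copying a rectangle out of the frame's pixels. A sketch over a plain RGBA buffer shaped like ImageData (`{ width, height, data }`), so it runs outside a browser; in a page the input would come from drawing the grabFrame() bitmap to a canvas and calling getImageData(), though the nine-argument drawImage() form crops directly and is usually simpler. `cropRGBA` is a hypothetical helper.

```javascript
// Copy the rectangle (sx, sy, sWidth, sHeight) out of an RGBA frame
// ({ width, height, data: Uint8ClampedArray }), one row at a time.
function cropRGBA(frame, sx, sy, sWidth, sHeight) {
  if (sx < 0 || sy < 0 || sx + sWidth > frame.width || sy + sHeight > frame.height) {
    throw new RangeError("crop rectangle lies outside the frame");
  }
  const out = new Uint8ClampedArray(sWidth * sHeight * 4);
  for (let row = 0; row < sHeight; row++) {
    const start = ((sy + row) * frame.width + sx) * 4; // first byte of this row's slice
    out.set(frame.data.subarray(start, start + sWidth * 4), row * sWidth * 4);
  }
  return { width: sWidth, height: sHeight, data: out };
}

// A 4x3 test frame whose red channel holds each pixel's index.
const data = new Uint8ClampedArray(4 * 3 * 4);
for (let i = 0; i < 12; i++) data[i * 4] = i;
const cropped = cropRGBA({ width: 4, height: 3, data }, 1, 1, 2, 2);
console.log([0, 1, 2, 3].map(p => cropped.data[p * 4])); // [ 5, 6, 9, 10 ]
```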

From my perspective, a simpler procedure would be to provide a means to select only part of a screen to capture using constraints, hence this issue.

@MonsieurWave

For example, the following code scales the entire screen to the dimensions passed as constraints; neither resizeMode nor applyConstraints() has any effect on which part of the surface is captured:

var recorder;
navigator.mediaDevices.getDisplayMedia({video: {width: 320, height: 240, displaySurface: 'window', resizeMode: 'none'}})
  .then(async stream => {
    const [track] = stream.getVideoTracks();
    const {width, height} = track.getSettings();
    try {
      await track.applyConstraints({width: 320, height: 240});
      console.log('constraints:', width, height, track.getConstraints());
    } catch (e) {
      console.warn(e.message);
    }
    recorder = new MediaRecorder(stream);
    recorder.ondataavailable = e => {
      console.log(e.data);
      if (recorder.state === 'recording') {
        track.stop();
        recorder.stop();
        const video = document.createElement('video');
        document.body.appendChild(video);
        video.onloadedmetadata = e => console.log(video.videoWidth, video.videoHeight);
        video.src = URL.createObjectURL(e.data);
      }
    };
    recorder.start(1000);
  });

8b295485-3452-4d90-a3a3-ef38e8e5b0a3.webm.zip

One approach to capture only part of the screen is to crop each grabbed frame. Note that the capture can also include the previews shown in the prompt, which https://github.com/w3c/mediacapture-screen-share/pull/114 was intended to fix in the specification.

var recorder;
navigator.mediaDevices.getDisplayMedia({video: true})
  .then(async stream => {
    const [track] = stream.getVideoTracks();
    const canvas = document.createElement('canvas');
    canvas.width = 640;
    canvas.height = 480;
    const ctx = canvas.getContext('bitmaprenderer');
    const canvasStream = canvas.captureStream();
    const [canvasTrack] = canvasStream.getVideoTracks();
    track.onmute = track.onended = e => console.log(e);
    canvasTrack.onmute = canvasTrack.onended = e => console.log(e);
    const imageCapture = new ImageCapture(track);
    requestAnimationFrame(async function paint() {
      try {
        if (track.enabled) {
          const bitmap = await imageCapture.grabFrame();
          // Crop a 640x480 region starting at (200, 0) of the captured frame.
          const frame = await createImageBitmap(bitmap, 200, 0, 640, 480);
          // Release the full-size frame; only the cropped copy is needed.
          bitmap.close();
          ctx.transferFromImageBitmap(frame);
          canvasTrack.requestFrame();
          requestAnimationFrame(paint);
        }
      } catch (e) {
        // e.g. grabFrame() rejects once the track has ended; the loop stops here.
        console.warn(e);
      }
    });
    console.log('settings:', track.getSettings(), canvasTrack.getSettings());
    recorder = new MediaRecorder(canvasStream);
    recorder.ondataavailable = e => {
      console.log(e.data);
      track.enabled = false;
      track.stop();
      const video = document.createElement('video');
      document.body.appendChild(video);
      video.onloadedmetadata = e => console.log(video.videoWidth, video.videoHeight);
      video.src = URL.createObjectURL(e.data);
    };
    recorder.start();
    setTimeout(_ => recorder.stop(), 1000);
  });

@MonsieurWave Entering fullscreen can reduce the captured screen to only document.body, without the browser tabs being captured. Depending on the CSS rules, further processing may be needed to get the required part of the screen. This workaround still does not provide a means to capture a specific part of the screen, and only that part of the screen, from the start.

What is the status on this issue? Restricting access to only a certain part of the screen would be greatly beneficial to the user for privacy reasons.

The spec allows a user agent to implement capture of a part of a screen. Do you have use cases where capturing a part of a screen would be best compared to say sharing a tab or a window?

My specific use case is recording data (i.e. taking a screenshot) of data or images displayed by another application. Capturing the whole window of the application would reveal compromising metadata also displayed by the application, which the user would not like to show.

getDisplayMedia seems overkill for this use-case. This might be best served as an extension to HTML Media Capture, a file picker that would allow taking a screenshot instead of an image file or a camera.


However, the user would then need to use an external application to take the screenshot, requiring a lot more user input.

If you try <input type="file" accept="image/*" capture> on Safari iOS, the user will be directed to the camera app to take a picture. Once done, the page has access to the picture.

<input type="file" accept="image/*"> shows a menu where the user can decide to open the camera app, use an existing image, and so on.

A similar flow could be implemented for screenshots.

@youennf

The spec allows a user agent to implement capture of a part of a screen. Do you have use cases where capturing a part of a screen would be best compared to say sharing a tab or a window?

Providing a means to select only part of a screen to capture is a self-evident use case: the user only wants to capture certain portions of the tab, window, or monitor surface.

Is your question essentially why a user would want to capture only parts of a display surface, instead of using getDisplayMedia() exclusively for video conferencing where the entire screen is captured?

Desktop browsers other than macOS-based ones do not support capture.

The user can have multiple cameras connected to the machine, with each camera capturing a specific region of the display surface; for art, security, comparative analysis; etc.

Capture of specific DOM elements in the document (still image and motion capture) instead of capturing tabs, other windows, prompts, etc. just to capture specific parts of the display surface.

Capture of video being played on the screen, where the only part that needs to be captured is the video being played, not other elements in the document, or in the window.

This could be achieved using constraints passed to getDisplayMedia() or applyConstraints(), for example:

videoTrack.applyConstraints({screenX:100, screenY:100, screenWidth, screenHeight})

Why would this not be specified?
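As an illustration of what such a constraint might mean, here is a sketch of how a user agent or polyfill could validate it against the captured surface. The names screenX, screenY, screenWidth and screenHeight come from the proposal above and are not part of any specification; the function name, the defaults (omitted width/height extend to the surface edge) and the choice to reject rather than clamp an out-of-bounds region are all assumptions.

```javascript
// Hypothetical: resolve the proposed {screenX, screenY, screenWidth, screenHeight}
// region constraint against the size of the captured surface. Not a specified API.
function resolveRegionConstraint(surface, constraint) {
  const {
    screenX = 0,
    screenY = 0,
    // Assumption: omitted width/height extend to the edge of the surface.
    screenWidth = surface.width - screenX,
    screenHeight = surface.height - screenY,
  } = constraint;
  if (![screenX, screenY, screenWidth, screenHeight].every(Number.isInteger)) {
    throw new TypeError('region values must be integers');
  }
  const fits =
    screenX >= 0 && screenY >= 0 &&
    screenWidth > 0 && screenHeight > 0 &&
    screenX + screenWidth <= surface.width &&
    screenY + screenHeight <= surface.height;
  if (!fits) {
    // A real applyConstraints() would reject with an OverconstrainedError.
    throw new RangeError('requested region does not fit the captured surface');
  }
  return { x: screenX, y: screenY, width: screenWidth, height: screenHeight };
}
```

Rejecting mirrors how applyConstraints() treats unsatisfiable required constraints; a user agent could equally choose to clamp, which would silently capture less than requested.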

@youennf This issue was initially filed because, at the time, the Chromium browser crashed when attempting to render variable-width and -height (pixel dimensions) video in an HTMLMediaElement when using captureStream() with MediaRecorder; in pertinent part see https://github.com/guest271314/MediaFragmentRecorder/tree/chromium_crashes, https://bugs.chromium.org/p/chromium/issues/detail?id=992235#c29. Thus the gist of the code in the OP was to capture video being played on the screen, adjusting the width and height.

Don't wait around for specification authors, maintainers or implementers to do stuff here. If a bug is observed, generally commence immediately creating multiple workarounds, proofs of concept, and tests. This issue just happens to strike a chord with users that obviously have other use cases, particularly taking screenshots, where tabs, other windows, and non-essential parts of the capture should be excluded; or rather, where the ability to capture precisely what is intended to be captured the first time, and nothing else, is needed.

Do you have use cases where capturing a part of a screen would be best compared to say sharing a tab or a window?

@youennf I think I have one such use case completely away from the initial topic of screenshots. My (and others') default behaviour when sharing an entire screen used to be to share a screen with nothing on it. Take the scenario of a laptop and an external monitor: I used to use the laptop screen as a "blank canvas" I could put content on, applications of all kinds. When you're sharing content in a meeting or such, it's a completely fluid thing; one minute I might be sharing code, the next I could be looking at some docs in a browser. I don't want to keep switching which application is shared using getDisplayMedia, so I just share an entire screen. The trouble is I used to use my laptop monitor because it wasn't 4k (unlike my external monitor), which meant people could actually read what was being shared.

Long story short, I've just changed from having one external 4k monitor to 2 and I no longer have my laptop open - so what option do I get given when wanting to do exactly what I did before? I'll show you.

Chrome's getDisplayMedia picker shows that I have 3 monitors: an iPad Pro using Sidecar (my temporary hack for this scenario), a vertical 4k monitor and a horizontal 4k monitor.

Some others have their own solutions, like lowering the resolution of their monitor so that they can share their screen and make it easily readable, but that's not going to work here. I have a vertical monitor that will never share well. Should I rotate it every time I want to do a screen share in a meeting and turn the resolution down? No. Should I change the resolution on the primary monitor? No, because then everything else on my display would get shown to the people on the other end of the call. That's another part of the reason for always using the "blank canvas" of the laptop monitor before: when you work with multiple clients, you don't want your clients seeing content from your other clients.

So now, I'm lucky enough to be in this position: I've got two lovely 4k monitors in front of me and an iPad Pro enabling my "hacky" solution for the moment. But for a moment don't think about the specifics, and go back to something else I just said: I need a way to be able to share multiple applications all within one stream without showing off the content on the rest of my display. That's a perfect use case for this, and if it's being done for privacy reasons, then it should be down to the browser to do it and not up to the application to handle with cropping (ouch... cropping of real-time video in JavaScript userland...). For privacy reasons the browser should be the one handing over a stream containing only what the user selected when they, say, dragged an area they're OK with sharing. The way I see this working is very similar to how QuickTime does screen recording: you drag an area, everything else goes slightly darker, and you're able to see exactly what has been shared with the application.
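The drag-to-select interaction described here reduces to normalizing two pointer positions into a rectangle, whichever direction the user drags. A minimal sketch, assuming points are {x, y} objects in screen pixels; the name dragToRegion is hypothetical, and under this proposal the logic would live in the browser's own picker UI rather than in page script:

```javascript
// Hypothetical: turn the start and end points of a drag into a capture region,
// regardless of drag direction. Points are assumed to be {x, y} in screen pixels.
function dragToRegion(start, end) {
  return {
    x: Math.min(start.x, end.x),
    y: Math.min(start.y, end.y),
    width: Math.abs(end.x - start.x),
    height: Math.abs(end.y - start.y),
  };
}
```

Because the region is produced by the browser UI, the page would only ever receive frames for that rectangle, which is the privacy property argued for above.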

So what do you think? This is definitely more than wanting to create screenshots. This is about offering flexibility. But while we're at it - with the likes of project fugu around.... don't we want web applications and the like to be just as good as say Zoom? Zoom lets me share a portion of my screen.... why shouldn't the browser?

@danjenkins

I think I have one such use case completely away from the initial topic of screenshots.

The initial use case was actually to capture variable width and height video displayed on a screen using getDisplayMedia() to avoid the now-fixed Chromium bug where the browser would crash when processing variable pixel dimension video.

Screenshot use cases and issues were mentioned as also applicable after that initial use case, which at the time was not simple to explain (the same use case was also attempted using a Picture-in-Picture window).

@danjenkins tl;dr https://github.com/guest271314/MediaFragmentRecorder/tree/chromium_crashes, https://bugs.chromium.org/p/chromium/issues/detail?id=992235 and links included therein for the origin of this issue. See also https://bugs.chromium.org/p/chromium/issues/detail?id=1100746 for "Tab" and "Application" capture issue. See https://github.com/w3c/picture-in-picture/issues/163 for Picture-In-Picture issue.

In lieu of a specified fix, a general solution that pipes the original MediaStreamTrack to a canvas (or, in Chromium, uses ImageCapture and ImageBitmapRenderingContext) can be composed and developed to address the various use cases, refining the procedure so the code is ready to go if the specification language is eventually added; otherwise, just use the code developed outside of the specification.

@danjenkins

I need a way to be able to share multiple applications all within one stream without showing off the content on the rest of my display

One approach is to call requestFullscreen() on document.body or an HTMLVideoElement (https://github.com/guest271314/MediaFragmentRecorder/blob/getdisplaymedia-webaudio/MediaFragmentRecorder.html#L30) to exclude portions of the screen not intended to be shared.

@danjenkins Another issue is that even though https://github.com/w3c/mediacapture-screen-share/pull/114 fixed https://github.com/w3c/mediacapture-screen-share/issues/108 in the specification, Chromium still captures browser UI. Given an HTML element that is effectively a green square, simulating the window on your 4k monitor, the first frame can still include the UI, which is not expected to be exposed in the capture.

In the first screenshot, see the green square when capturing the entire screen, and the prompt itself captured at the position of the green square, which is the only part of the screen expected to be captured. Test a basic implementation for yourself. Try with and without await new Promise(resolve => setTimeout(resolve, 7000)); to observe that the prompt, which can show other screens not intended to be captured, is captured nonetheless.

document.body.style = "padding:0;margin:0";
document.body.innerHTML = '<div style="position:relative;display:block;left:calc(50vw);top:calc(50vh);background:green;width:200px;height:200px"></div>';

onclick = e => navigator.mediaDevices.getDisplayMedia({video: true})
  .then(async stream => {
    await document.body.requestFullscreen();
    // Wait for the prompt to fade out before grabbing a frame.
    await new Promise(resolve => setTimeout(resolve, 7000));
    const [track] = stream.getVideoTracks();
    const imageCapture = new ImageCapture(track);
    const frame = await imageCapture.grabFrame();
    const canvas = document.createElement('canvas');
    const ctx = canvas.getContext('bitmaprenderer');
    const {width, height} = frame;
    // getBoundingClientRect() returns CSS pixels, the frame is in captured pixels.
    // Assuming "Entire screen" is captured and the document is fullscreen, the
    // viewport covers the whole frame, so scale by frame size / viewport size.
    const scaleX = width / innerWidth;
    const scaleY = height / innerHeight;
    const {x, y, width: w, height: h} = document.querySelector('div').getBoundingClientRect();
    console.log(x, y, w, h);
    const bitmap = await createImageBitmap(frame,
      Math.round(x * scaleX), Math.round(y * scaleY),
      Math.round(w * scaleX), Math.round(h * scaleY));
    canvas.width = bitmap.width;
    canvas.height = bitmap.height;
    // Repeat this process to create a video from ImageBitmaps.
    ctx.transferFromImageBitmap(bitmap);
    track.stop();
    document.body.appendChild(canvas);
    document.exitFullscreen();
  });

In the case of capturing the window on the 4k monitor, I would try "Application" capture of the Chromium window instead of "Entire Screen". Note, though, the issue with the undocumented and unspecified behaviour of Chromium's implementation, which fires mute and unmute events on the MediaStreamTrack when nothing new is rendered in the application.

Ultimately this shouldn't be a userland addition. This should be a browser feature. Let's say I only want to share the top half of my vertical monitor. I have something private and confidential on the bottom half that I haven't moved off it; there's no way I'm giving, say, Google Meet access to my entire screen. Would I trust that application not to send that data somewhere? What you're describing above feels like a hack around a solution, and I'm not even certain it really fulfils the solution to the problem. What if I don't have the browser in the foreground at all, because I'm showing off VS Code and another browser, different from the one running the meeting application I'm sharing my screen with? In the web world we stop HTTPS pages from talking to HTTP APIs for a reason... how is this any different? You want the user to know the web application truly only has access to the portion of the screen I allowed it access to. Also, the above solutions only work if developers implement the ability to do it; shouldn't it be a browser GUM "thing" so that any web application taking advantage of getDisplayMedia is able to use it?

@danjenkins If requestFullscreen() is not called, the heights of the navigation, title and bookmarks bars must be included in the calculation to acquire the correct coordinates of the element intended to be captured.
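A rough sketch of that calculation, under loudly stated assumptions: all browser UI (tabs, location and bookmarks bars) sits above the viewport, left and right borders are equal, there is no bottom border, and the captured screen is the one whose origin window.screenX/screenY are measured from. Under those assumptions the offsets follow from window.screenX/screenY and the difference between outerWidth/outerHeight and innerWidth/innerHeight. The helper name is hypothetical, and it takes those window values as a plain object so it can run outside a browser. The result is still in CSS pixels, so it must be scaled to the captured frame's pixel size before cropping.

```javascript
// Hypothetical helper: map an element's viewport rect (getBoundingClientRect())
// to screen coordinates when the page is NOT fullscreen.
// Assumes browser UI is entirely above the viewport, left/right borders are
// equal and there is no bottom border. Result is in CSS pixels.
function viewportRectToScreenRect(rect, win) {
  const sideBorder = (win.outerWidth - win.innerWidth) / 2;
  const topChrome = win.outerHeight - win.innerHeight;
  return {
    x: win.screenX + sideBorder + rect.x,
    y: win.screenY + topChrome + rect.y,
    width: rect.width,
    height: rect.height,
  };
}
```

In a page this would be called as viewportRectToScreenRect(element.getBoundingClientRect(), window). The estimate breaks if the browser shows a bottom bar or side panel, which is one more reason a browser-provided region selection would be more reliable than page-side arithmetic.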

Firefox provides a "Take a Screenshot" feature which allows the user to select only a portion of a screen. That option should be provided for getDisplayMedia(), either in the form of constraints where specific coordinates can be passed, e.g. using .getBoundingClientRect(), in the form of {topLeft:<pixelCoordinate>, topRight:<pixelCoordinate>, bottomLeft:<pixelCoordinate>, bottomRight:<pixelCoordinate>}, or in the selection UI, similar to how Firefox implements its "Take a Screenshot" feature. Use case: the user only wants to share a specific element, e.g. a <video>.
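The four-corner form can be reduced to an x/y/width/height rectangle, which is what cropping with createImageBitmap() ultimately needs. In this sketch the corner property names come from the proposal, while the {x, y} shape of each pixel coordinate and the function name cornersToRect are assumptions:

```javascript
// Hypothetical: convert the proposed corner-based region
// {topLeft, topRight, bottomLeft, bottomRight} into {x, y, width, height}.
// Each corner is assumed to be an {x, y} point in screen pixels.
function cornersToRect({ topLeft, topRight, bottomLeft, bottomRight }) {
  // A capture region must be an axis-aligned rectangle.
  const aligned =
    topLeft.y === topRight.y &&
    bottomLeft.y === bottomRight.y &&
    topLeft.x === bottomLeft.x &&
    topRight.x === bottomRight.x;
  if (!aligned) {
    throw new RangeError('corners do not form an axis-aligned rectangle');
  }
  const width = topRight.x - topLeft.x;
  const height = bottomLeft.y - topLeft.y;
  if (width <= 0 || height <= 0) {
    throw new RangeError('region must have a positive width and height');
  }
  return { x: topLeft.x, y: topLeft.y, width, height };
}
```

From a DOMRect r, the corners would be topLeft: {x: r.left, y: r.top}, topRight: {x: r.right, y: r.top}, bottomLeft: {x: r.left, y: r.bottom}, bottomRight: {x: r.right, y: r.bottom}. Since two opposite corners fully determine an axis-aligned rectangle, the other two only serve as a consistency check.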