Open Myndex opened 5 years ago

If it works on some sites but not others (like Github) it is probably prevented by the Content Security Policy, but a good browser/extension combo is not affected by that for the reasons Patrick mentioned.

Thank you @patrickhlauke and @alastc — that makes sense... I am actually concerned about how these third party extensions are accessing page data including secure information.

It sounds like browser developers need some "standards" then — way way off topic from here — I guess that'll be the next crusade.

OS X does have invert and contrast in system prefs, but that affects the entire system, and is really cumbersome to use.

RELATED: One of the perception problems I've discovered using multiple systems and browsers over the last week of testing is that the anti-aliasing of small text varies quite a bit per system (and screen pixel density), so small text often ends up much, much lighter (and lower contrast) than it was specified in the CSS. This is more a browser rendering/system display problem than a design problem, though designers should be made aware in terms of a "guideline." Either way, it is another indication of how complex the issue really is.

designers should be made aware in terms of a "guideline."

but designers have no influence over this, as it comes down to individual users and how these users have set up their system (similar to how designers have no influence over other factors, like whether or not a user's monitor is properly calibrated, uses an appropriate color profile, is set too bright or too dark, etc).

the only appropriate action here seems to me an informative note somewhere (perhaps in the understanding documents) that explains that regardless of any contrast calculation (even with the updated algorithm) things may not work perfectly for every user due to variations in actual rendered output / viewing conditions.

so small text often ends up much much lighter (and lower contrast) than it was specified in the CSS.

This looks closely related to issue #665; the discussion there is useful.

Regarding overriding page styles: even with a browser extension it is very hard to override page styles without throwing out author styles completely. This is because of the CSS rules that govern precedence (with IDs being the highest, etc.), even when !important is used.

If you throw out page styles altogether, you lose so much of the feel of the site and the other visual cues that are used for grouping elements, etc. It's not really fair to a low vision user to lose all of this information that they had no issue with, just to gain contrast on another element that had insufficient contrast. That's why, despite some people saying we don't need a contrast SC anymore because users can just override the colors, I disagree with them.

Most mobile browsers don't support extensions and so you can't get user styles in many of those situations as well.

designers should be made aware in terms of a "guideline."

but designers have no influence over this,

Yes they do: they choose fonts and size. Using too thin or light a font at too small a size is going to cause anti-aliasing problems that reduce apparent contrast. What I am suggesting is a "guideline" on how small, light fonts render with less contrast due to antialiasing. Something like:

"It is important to remember that even if a color pair is a PASS by the contrast checker, small and thin fonts may be affected by antialiasing effects that will reduce perceived contrast."
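A rough Python sketch of that antialiasing effect, using the WCAG 2.x luminance and contrast formulas. It assumes the rasterizer blends glyph coverage with the background in gamma-encoded sRGB values (common, but implementations vary), and `blended_grey` and `coverage` are illustrative names, not any browser's API. A pixel that a thin stroke only partly covers ends up much lighter than the specified text color:

```python
def srgb_to_linear(c8: int) -> float:
    """Linearize an 8-bit sRGB channel value (IEC sRGB transfer curve)."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def wcag_ratio(y1: float, y2: float) -> float:
    """WCAG 2.x contrast ratio between two relative luminances."""
    hi, lo = max(y1, y2), min(y1, y2)
    return (hi + 0.05) / (lo + 0.05)


def blended_grey(text8: int, bg8: int, coverage: float) -> int:
    """Hypothetical: 8-bit grey of a pixel the glyph covers by `coverage`
    (0..1), assuming blending in gamma-encoded sRGB space."""
    return round(coverage * text8 + (1 - coverage) * bg8)


text, bg = 0x59, 0xFF  # #595959 text on #FFFFFF, about 7:1 at full coverage
for coverage in (1.0, 0.75, 0.5, 0.25):
    px = blended_grey(text, bg, coverage)
    # For a neutral grey (R = G = B), relative luminance equals the
    # linearized channel value.
    ratio = wcag_ratio(srgb_to_linear(px), srgb_to_linear(bg))
    print(f"coverage {coverage:.2f}: #{px:02X}{px:02X}{px:02X} -> {ratio:.2f}:1")
```

Under these assumptions, a half-covered pixel of #595959 text on white comes out as #ACACAC, roughly 2.3:1.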

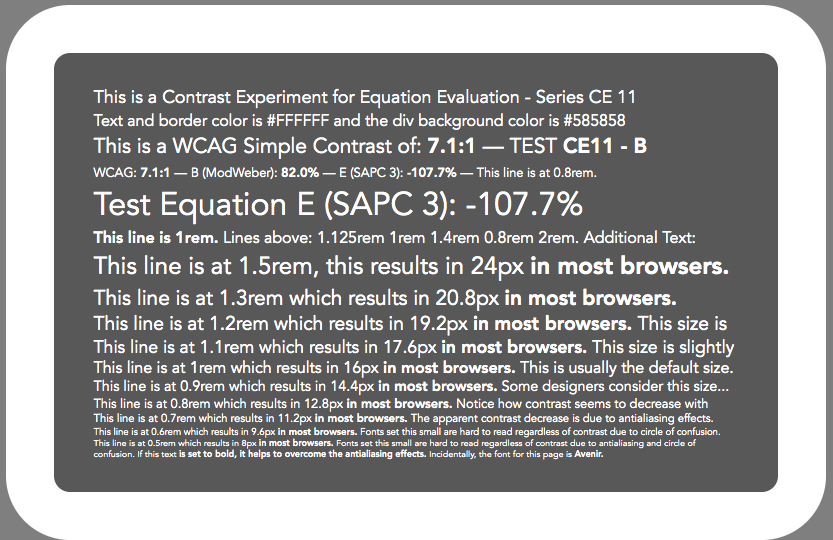

This examines the Modified Weber and SAPC 3 equations in more detail. SAPC 3 has many more patches to examine, as it appears the most uniform at the moment.

There are two versions: dark text on light BG (CE10) and light text on dark BG (CE11).

1) THIS IS AN ALPHA LEVEL TEST — WORK IN PROGRESS! Among other things, these pages are NOT responsive, and intended for desktop only.

2) The FONT for these tests is Avenir, and the CSS sheet does have a font-face tag set. But if it is overridden, or the font does not look like the sample below, please let me know. I'll probably switch to something like Arial for the wide area test. I've been using this because the "normal" is fairly thin, and the "bold" is fairly bold. (The normal is the font's "medium" and the bold is "black".)

3) The SAPC3 percentages are scaled with the aim of making an "easy to remember" set of values:

4) Each DIV has a very large border - this is to emulate the BACKGROUND the DIV is on (i.e. consider the contrast between the DIV contents and the Border, and NOT between the border and the overall page in this case). The OVERALL BG of the page at #777 is not part of the judgement criteria.

A CE12 sample: EDIT: CE12 is a more uniform test of light text on dark than CE11. CE12 also demonstrates threshold levels more effectively. Ignore CE11.

Please comment or ask questions!

Thank you,

Andy

Hi @Myndex,

Thanks, I'm having a look, just trying to work out how I should try to evaluate the results. (I'm not really target audience, but as an example.)

For example, in CE12 at 80%, the first one appears to have a bit more contrast than the second, although when I look away and look back I sometimes make a different choice! The last one at 80% appears the most contrasting to me, with the really white text. (I know the background is lighter there, but it still stands out more to me.)

The text size within each doesn't particularly affect me, I just have to focus a little more at the sub 0.7rem lines. Actually, looking at the 60% or less examples I do struggle at under 0.8rem.

Is the idea that people rank by contrast, and we see which measure matches that best?

Or perhaps: What is the smallest line you can comfortably read?

Hi @alastc

Thanks, I'm having a look, just trying to work out how I should try to evaluate the results. (I'm not really target audience, but as an example.)

Everyone is the target audience for this, actually. This is not to evaluate any particular "standard criteria". STEP ONE in solving this issue is finding an equation that is "more perceptually uniform" across a RANGE of lightness/darkness.

That is, for a given series of dark-to-light samples at a given percentage, each one should seem "perceptually" the same contrast.

Such an equation is "impossible" because room ambient light and monitor brightness interact. This is seen most easily in the threshold level tests (at the bottom of the pages). On CE10 (dark text on light), go near the bottom of the page, for instance to the 18.5% series (or even the 8% series): as these are close to threshold, they are most "obviously" affected by room light and screen brightness. The darkest samples and the brightest samples will be more or less readable depending on screen brightness and room light, and not at the same time unless the screen and room light are "exactly ideal".

For example, in CE12 at 80%, the first one appears to have a bit more contrast than the second,

Are you looking at the MODWEBER 80% or the SAPC 3 80%?

I think I just realized I need to put serial numbers on all of these so we can communicate about each! Oops (like I said this is an ALPHA test).

although when I look away and look back I sometimes make a different choice! The last one at 80% appears the most contrasting to me, with the really white text.

This is called ADAPTATION. It also shows how total screen luminance is a big part of the perception of contrast.

If you look away, you adapt to something ELSE, and then look to the screen, and it seems different due to different adaptation effects.

(I know the background is lighter there, but it still stands out more to me.)

The "MODWEBER" light text on black at 80 will look more contrasty at the lighter value, and is a little less uniform than SAPC 3. Scrolling down to SAPC at 70%, each test patch should seem closer.

Still the darkest test patches will have the greatest variance due to a variety of conditions of light adaptation etc. The darkest patches are a big part of my focus as they are what is most wrong with the current math assessment.

THE OVERALL IDEA

The main idea here is to find a setting/equation that gives a similar, relative, perceptual contrast across an entire range of conditions of light, screen brightness, etc. By doing this, any two numbers plugged into the equation will give a reasonably predictable answer on the resultant perceived contrast.

The text size within each doesn't particularly affect me, I just have to focus a little more at the sub 0.7rem lines. Actually, looking at the 60% or less examples I do struggle at under 0.8rem. Is the idea that people rank by contrast, and we see which measure matches that best?

Using the smallest text as a "place to look", does every patch in a group of a particular contrast setting seem equally readable?

Or perhaps: What is the smallest line you can comfortably read?

This is less about absolutes like that (at least right now — absolutes will be determined by experiments using a test with test subjects).

Looking at CE10 and CE12, I want to find math where each patch is equally readable (perceptually uniform) at a given target contrast.

Note that due to 8-bit resolution, the percentages will all be a little off the exact target, and that's not terribly important. I am mainly looking to define math that returns perceptually uniform results over a range of dark/light at a given percentage.
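To illustrate the 8-bit point: only 256 grey levels exist, so a target luminance (and therefore any contrast value computed from it) can only be hit approximately. This Python sketch, with a hypothetical helper `nearest_grey`, searches all 256 greys for the closest match to a few arbitrary target luminances. It illustrates the rounding only; it is not the SAPC math.

```python
def srgb_to_linear(c8: int) -> float:
    """Linearize an 8-bit sRGB channel value."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def nearest_grey(target_y: float) -> tuple:
    """Hypothetical helper: return (level, luminance) for the 8-bit grey whose
    relative luminance is closest to target_y. For a neutral grey (R = G = B),
    relative luminance equals the linearized channel value."""
    best = min(range(256), key=lambda v: abs(srgb_to_linear(v) - target_y))
    return best, srgb_to_linear(best)


for target in (0.05, 0.18, 0.50):
    v, y = nearest_grey(target)
    print(f"target Y {target:.3f} -> #{v:02X}{v:02X}{v:02X}, "
          f"actual Y {y:.4f}, error {y - target:+.4f}")
```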

NOTE: I removed the MODWEBER tests as I'm concerned they were causing confusion.

They will be placed in a separate document.

On some initial perceptions:

Hi @alastc From the other issue as it relates here:

However, whether there should be a guideline to prevent maximum contrast is a slightly different question. There is a certain amount of user control that is easier to apply to too-much contrast, compared to not-enough. If someone made a solid case for a guideline about too much contrast it would be considered.

This is certainly part of the process in the experiments for #695. There are existing guidelines and standards as well, though. FAA Human Factors specifies the following:

On the subject of maximum contrast, as I have different impairments in each eye I can discuss my own issues as they relate to this.

My left eye, with a Symfony IOL implant, has a substantial vitreous detachment and floaters, some of which tend to "hang out" right in front of the fovea. The right eye, with a CrystalLens IOL, has developed a membrane (soon to be removed by YAG), so at the moment that eye has both lower contrast and increased scatter, similar to the cataract the IOL replaced.

YUK!!

But it is helping me evaluate these conditions of contrast (I am trying to get through a lot of this before the membrane is removed in a couple weeks, LOL).

The following observations were made on a MacBook with a glossy screen, approx. 12"-14" away, with high room lighting reflecting on the screen and screen brightness set to less than the halfway point.

The following contrast figures are using the output of the SAPC 3 method, measured as a percentage.

Right Eye Only no glasses:

With BOTH eyes, glasses off:

WITH glasses and both eyes:

As I read more existing research as well as conduct my own studies and experiments, it is clear that contrast is just one part of the overall considerations for visual accessibility.

Interim thoughts based on the present research. These are "alpha" thoughts, not absolute conclusions by any means.

Along the way through experimentation, observation, existing research, and my own design experience, I find the following items are interdependent as they pertain to legibility:

Within Designer's Control

Outside Designer's Control (User Related)

So while the display luminance and environment are outside of our control, they are not a mystery either. We know that ambient light is a consideration, as it lowers contrast. We know that the 80 nit specification for an sRGB monitor is long obsolete, when consumers have phones that can go well above 1200 nits, and even the cheapest phones can display 400 to 500 nits. We also know that modern LCD displays have better contrast and lower glare/flare than the CRTs some standards were written around.

Also with visual impairments, while there are many (I have personally experienced several), most are well understood. Severe impairments require assistive technology beyond what a designer can do. But within the designer's purview are things such as not locking zoom. I've personally encountered many sites I could not access because I was unable to zoom in to enlarge the text. Such restrictions on a user adjusting the display are completely unacceptable.

Similarly, designers going for form over function, or toward trendy hard to read fonts and color combinations (which technically PASS current WCAG standards, yet are illegible) is indeed a problem. Fortunately many browsers now offer a "reader mode" that disposes of all the trendy CSS de-enhancements.

A few considerations regarding common eyesight degradation:

The above red and green are at the same luminance (#FF0000 and #009400) so the luminance contrast is zero (or 1:1 in WCAG math). The blue should be readable as it's much darker, despite being at the max of #0000FF. This is because blue makes up only 7% of luminance. In the above example, the blue is 2.1:1 per WCAG math, or SAPC3 28%.

BELOW: The red and green are set at the same luminance as maximum blue. (R #9E0000 G #005a00 B #0000FF).
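The luminance and contrast figures above can be checked with the WCAG 2.x relative luminance and contrast ratio formulas. A Python sketch (`relative_luminance` and `contrast_ratio` are illustrative names). It linearizes with the 0.04045 threshold from the IEC sRGB standard; the WCAG 2.x text lists 0.03928, but no 8-bit value falls between the two, so the results are the same either way.

```python
def srgb_to_linear(c8: int) -> float:
    """Linearize an 8-bit sRGB channel value."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of a #RRGGBB color."""
    h = hex_color.lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: str, b: str) -> float:
    """WCAG 2.x contrast ratio, lighter over darker, with the 0.05 offset."""
    ya, yb = relative_luminance(a), relative_luminance(b)
    return (max(ya, yb) + 0.05) / (min(ya, yb) + 0.05)


for fg, bg in [("#FF0000", "#009400"), ("#0000FF", "#FF0000"),
               ("#9E0000", "#0000FF"), ("#005A00", "#0000FF")]:
    print(fg, bg, f"{contrast_ratio(fg, bg):.2f}:1")
```

Red against that green comes out at essentially 1:1, and the darker red and green each sit within about 1% of pure blue's luminance, which is why they land at roughly 1:1 against it.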

There are several related issues here on GitHub: this one (#695), and also #665, #713, #360, #236, #700, and #346. The research I am discussing in this thread applies to a number of other issues, including these. All are tightly intertwined in terms of how a standard (for WCAG 3.0 and beyond) should be developed, but the main focus for THIS issue (#695) is developing a method for easy programmatic contrast assessment that provides values that are perceptually uniform, and therefore accurate relative to human perception.

Current Experiments: In the current experiments CE10 and CE12, I may have overcompensated slightly for darker color pairs, but I am still evaluating in different ambient conditions.

Once a stable method of assessment is found, the next step is a trial with test subjects to evaluate specific range limits for accessibility criteria. At the moment I am working on a web app with the idea that people all over can go through the assessments using the device and environment they normally use. While that lacks clinical control, if the sample size is large enough the data should be very instructive.

Two weeks ago I indicated that an incremental pull request for a few ideas could/should be added for WCAG 2.2 (not a new equation, just a couple refinements). Those proposed items are:

1. Minimum and maximum luminance. Using the current WCAG math, set specific minimum luminance for the lightest element and maximum luminance for the darkest element. This should prevent some of the combinations that "pass" but are illegible.

AAA: a WCAG 7:1 contrast AND the lightest element no darker than #B0B0B0 (43.4% luminance).

AA: a WCAG 4.5:1 contrast AND the lightest element no darker than #999 (31.8% luminance).

A: a WCAG 3:1 contrast AND the lightest element no darker than #808080 (21.6% luminance).

2. Minimum padding. Using the WCAG contrast math, if a text container (a DIV or P, etc.) has a background with a contrast ratio of more than 1.5:1 against the larger background it is on, it requires a minimum padding of 0.5em around the text. A padding of at least 1em is advised if the contrast between the DIV and the larger page background exceeds 3:1.

3. sRGB corrections: @svgeesus indicated he was going to do this, namely correct the sRGB math issue and remove the reference to the sRGB working draft, which is obsolete.
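Proposal (1) can be read as a two-part test: the pair must meet the existing WCAG 2.x ratio, and the lighter of the two colors must be at least as light as the listed grey. A Python sketch under that reading; `TIERS` and `passes` are hypothetical names, and the thresholds are the grey values from the list, converted to relative luminance.

```python
def srgb_to_linear(c8: int) -> float:
    """Linearize an 8-bit sRGB channel value."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of a #RRGGBB color."""
    h = hex_color.lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


# level -> (minimum WCAG 2.x ratio, minimum luminance of the lighter color)
TIERS = {
    "AAA": (7.0, relative_luminance("#B0B0B0")),  # about 43.4%
    "AA": (4.5, relative_luminance("#999999")),   # about 31.8%
    "A": (3.0, relative_luminance("#808080")),    # about 21.6%
}


def passes(level: str, fg: str, bg: str) -> bool:
    """True if the pair meets the ratio AND the lighter color is light enough."""
    min_ratio, min_light = TIERS[level]
    ya, yb = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(ya, yb), min(ya, yb)
    ratio = (lighter + 0.05) / (darker + 0.05)
    return ratio >= min_ratio and lighter >= min_light


print(passes("AA", "#FFFFFF", "#767676"))
print(passes("AA", "#777777", "#000000"))
```

For example, #777777 on black clears 4.5:1 under the current math (about 4.7:1), but its lighter color is only about 18% luminance, so it would fail the proposed AA check.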

Before I start forming the pull requests, I thought I'd bring these up again for discussion. The justifications for 1 and 2 are the experiments shown throughout this thread. The justification for 3 is that the math is wrong, and should be correct in a standards document.

In order of magnitude:

(3) sRGB corrections, if @svgeesus is tackling those, best to leave that part alone.

(1) Including min/max luminance, could you point to which of the many bits posted above shows that?

I'd rather get past "should prevent" to having some evidence of preventing that (i.e. testing with people), and to understand what sort of combinations would now be ruled out (as that can cause legal changes).

The current SC text says "contrast ratio of at least 4.5:1", with no caveat on that, so it would be a large change to a current SC, which is more difficult to do.

(2) Min padding: This requires more discussion, it changes the testing criteria quite a lot and starts to dictate the design.

For example, is a measure relative to the font size necessary? Larger text gets larger padding, but does that follow from how perception works? Naively I might assume that smaller text would need proportionally larger padding.

Also, must it be extra padding? If you don't have that sort of padding, would increasing the contrast on the inner background have a similar effect?

Hi @alastc

In post https://github.com/w3c/wcag/issues/695#issuecomment-483805436 above

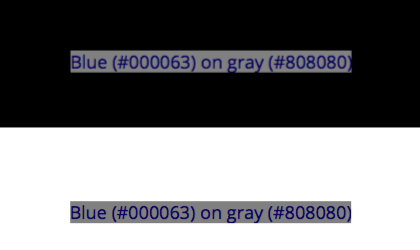

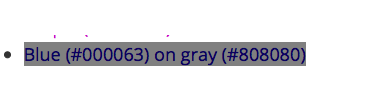

About halfway down the post it shows the need for padding, using a bit that WebAIM had on their site showing how 4.5:1 does not guarantee legible contrast in that case. Here is the example image for convenience:

That was the post where I brought local adaptation and surround effects into the discussion.

The Suggested Limit Values: The figures I listed in my above post were taken from experiment CE10, as limit levels that would prevent problems such as the dark blue text problem in that clip in post 483805436.

The thinking is that it would be a good incremental change that would help "pave the way" toward an equation change in WCAG 3.0

Perhaps it should be listed as an "advisory"? As in: "As an advisory for future accessibility standards, it is recommended to limit the brightest color to be no darker than..."

2) This again is interdependent with the other four "designer control" factors listed in post https://github.com/w3c/wcag/issues/695#issuecomment-490838091 from late yesterday. All five factors are: font size, weight, luminance contrast, padding & spacing, and secondary elements.

To answer your contrast question: yes, all five factors work together. So there are certainly contrast combos that don't "need" padding, which is why I mentioned relative contrast as a key to padding size. Here's the whole tidbit from WebAIM:

The yellow text container has almost no contrast against the white (1.07:1), so it would not need padding per the proposed spec. The blue-grey one is different: the grey is 3.9:1, so per my suggestion it would need 1em of padding, as it is over 3:1. However, yes, if the dark blue text were instead white like the surrounding background, padding would probably not be needed, as that white text would be close to the white BG the eye is adapted to, and the grey would in essence be a single contrasting element.

So here is that example piece again, but replacing the dark blue text with white, making the text 3.9:1 against the grey:

And if we make the text and container darker, here the text is at 1.5:1, and the grey is darker to maintain 4.5:1.

So with this example it would appear that, so long as either the DIV or the TEXT it contains has a contrast of less than 1.5:1 against the larger background, padding isn't specifically needed. This differs from what I originally suggested, where just the DIV's contrast was the deciding factor.
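The padding suggestion from proposal (2), with that refinement, might be sketched as follows in Python. This is one possible reading, not a settled criterion: the thresholds (1.5:1 and 3:1, 0.5em and 1em) come from this thread, while the name `suggested_padding_em` and the handling of exact boundary values are assumptions.

```python
def srgb_to_linear(c8: int) -> float:
    """Linearize an 8-bit sRGB channel value."""
    c = c8 / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of a #RRGGBB color."""
    h = hex_color.lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: str, b: str) -> float:
    """WCAG 2.x contrast ratio, lighter over darker."""
    ya, yb = relative_luminance(a), relative_luminance(b)
    return (max(ya, yb) + 0.05) / (min(ya, yb) + 0.05)


def suggested_padding_em(text: str, container: str, page: str) -> float:
    """Hypothetical padding suggestion, in em, for text inside a container."""
    div_vs_page = contrast_ratio(container, page)
    text_vs_page = contrast_ratio(text, page)
    # Original rule: no padding unless the container exceeds 1.5:1.
    # Refinement: also none if the text is within 1.5:1 of the page.
    if div_vs_page <= 1.5 or text_vs_page < 1.5:
        return 0.0
    # Original rule: 0.5em above 1.5:1, 1em advised above 3:1.
    return 1.0 if div_vs_page > 3.0 else 0.5


print(suggested_padding_em("#000080", "#767676", "#FFFFFF"))  # dark text, mid grey box
print(suggested_padding_em("#FFFFFF", "#767676", "#FFFFFF"))  # white text, same box
```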

(Just to note here as another example, the top one with white text is WCAG 3.9:1 contrast and the lower one with grey text is WCAG 4.5:1 contrast, but the grey text is a lower perceptual contrast despite being a higher reported contrast from the current math.)

As to size, I was thinking relative to body text/block text. But a big bold headline font would likely not need a full 1em around it. So perhaps em is not the right unit to use; I personally use em because it's relative and thus useful in responsive situations.

So I understand your concerns for both of these. Nevertheless I hope I have provided ample support for them through this thread. Even though they are not the prime targets, they are both part of a path toward a unified and perceptually accurate visual assessment criterion.

But if making them as a "standard" or rule is too much, perhaps there is a way to provide recommendations that are not "hard rules" with the idea that those recommendations will evolve into rules/standards at a later date. This would allow for some "easing" into larger changes, letting designers and testers know the direction something is going to change to.

Updated Evaluation Pages.

CE14 (Dark text on light BG) and CE15 (Light on dark) are up.

There is a serial number on each patch, which relates similar patches between CE14 and CE15 for contrasts of 40% and up. The serial numbers should help in discussion, as there are over 100 patches per page.

Patches are grouped by contrast, in groups for 100%, 80%, 70%, 60%, 50%, 40%, and several lower contrast groups including threshold tests.

I anticipate that 100% will relate to WCAG 7:1, 70% to 4.5:1, and 50% to 3:1, and I believe 25% to 30% will be a good target for a DIV against a background. All to be determined by a live study.

NOTE: this math (SAPC) is very slightly opinionated in that the dark values are given just a little more weight as they are most affected by ambient light. Also, depending on monitor settings you may perceive a very slight increase in contrast in the middle greys, this is related to the "system gamma gain" which is not implicitly being counter-acted (as it is not encoding either). And this effect varies depending on monitor setting.

The serial number is at the end of the largest line of text, such as #CE14-19. This should make it easier if anyone has questions or comments.

As a side note, and to emphasize the importance of surround effects, in the earlier post above, this little tidbit:

Those colors are WCAG 4.5:1 contrast yet terribly hard to read. Here are those exact same color values, but on a DIV with ample padding:

In the case of a bright white page like this one on GitHub, dark colors like this require ample padding.

I will answer any questions or comments, or further discussion, but otherwise I'm going to let these issues rest a bit to sleep on it.

Dear Andy, I have read your issue and many of the papers. I am not sure how we get from astronomical luminance to luminance in rgb, but it is done.

If you could send a bibliography or your readings I will get started.

Many issues you bring up: font size, polarity and ambient light are significant issues I have encountered. The biggest problem we have found with partial sight is it is just that: partial. Each individual has something different that is missing. Thus no one configuration works for all. That being said, the science and aesthetics need reexamination. I doubt we can get paid to do it, but we can make a contribution if we prove our results. The reflow criterion is the result of careful quantitative analysis. We can do the same with this ... if it is not beyond our scientific capabilities.

We won't know if we don't try.

Best, Wayne Dick

On Thu, Apr 25, 2019 at 7:37 AM Myndex notifications@github.com wrote:

Hi @svgeesus!

I am so happy you joined this thread, I'm a big fan of your posts elsewhere — I find we are normally in agreement, and you always bring an additional perspective to complex and difficult or abstract issues.

The TL;DR summary of my views on the proposal: strong agree, both for the fairly modest changes for 2.2 and the more important and meaningful changes for 3.0. I have minor quibbles with occasional points of detail, but the proposal is well argued, well-referenced, coherent, and deserves to be very seriously considered. Bravo!

Thank you very much. I came across this contrast issue while working on a larger project relating to color (namely, a CSS framework and a planned series of articles), and it became a central focus. Since then, I have dived deeper down the color perception rabbit hole than I can remember (hint: it's a really deep hole, LOL).

And it sounds like I need to review some of my early posts if I am not making complete sense. Not only that but some of my initial opinions and hypotheses have shifted, others strengthened, and new ones have emerged.

I do want to address any confusions of course. Just briefly my background is film/TV in Hollywood, VFX, Editing, Colorist, etc. (I'm normally listed as Andrew Somers in credits). We do nearly all of our work in a 32bit float linear workspace, meaning gamma 1.0 (flat diagonal line from 0 to sun, no curve) as is common today.

BACKGROUND ON TERMS THAT HAVE CAUSED CONFUSION: In the early days of digital filmmaking, Kodak developed Cineon and the 10-bit-per-channel .cin file, which became DPX. The data in the file was a scan of a film negative, and was referred to as being "log". Because the "opposite" of log is linear, this led many in the industry to call video "linear" and DPX "log", despite the fact that video has a gamma curve. You can probably imagine the amount of confusion and frustration this caused, including an ugly shouting match between me and a certain well known colorist over issues caused by the resulting miscommunication.

I have always used the term linear to mean what it is: a straight line that represents the additive quality of light as it exists in the real world. This is in part thanks to my friend Stu Maschwitz (formerly with ILM and his company The Orphanage) who has been evangelical regarding linear, see his blog https://prolost.com/blog/2005/5/15/log-is-the-new-lin.html

The authority I always point to most, of course, is Charles Poynton, who has been instrumental in setting the record straight on these issues.

So my point is it's more than a little oops on my part if something I said regarding linear/gamma/perception wasn't clear, I'll review that soon/this weekend. I've also been working up a glossary and list of references to add some clarity to this thread, thank you for pointing those out.

But I was not trying to imply that monitor gamma was not dealt with or was ignored. What I was trying to say was that luminance is linear and therefore far from perceptually uniform, and simple contrast fails to accommodate perception.
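One way to see this: pairs with the same WCAG 2.x ratio can have different lightness differences depending on where they sit on the scale. This Python sketch uses CIE 1976 L* purely as a rough stand-in for perceived lightness (it is neither a text-contrast model nor SAPC), and the helper names are illustrative.

```python
def lstar(y: float) -> float:
    """CIE 1976 L* from relative luminance Y, with white at Y = 1.0."""
    epsilon = 216 / 24389  # CIE constant, about 0.008856
    kappa = 24389 / 27     # CIE constant, about 903.3
    return kappa * y if y <= epsilon else 116 * y ** (1 / 3) - 16


def darker_for_ratio(y_light: float, ratio: float) -> float:
    """Luminance of the darker color giving exactly `ratio` under the WCAG 2.x
    formula (y_light + 0.05) / (y_dark + 0.05). Only meaningful while the
    result is >= 0."""
    return (y_light + 0.05) / ratio - 0.05


for y_light in (1.0, 0.5, 0.3):
    y_dark = darker_for_ratio(y_light, 4.5)
    delta = lstar(y_light) - lstar(y_dark)
    print(f"Y {y_light:.3f} vs {y_dark:.4f}: 4.5:1, delta L* = {delta:.1f}")
```

All three pairs report 4.5:1, yet the L* difference shrinks as the pair gets darker (roughly 50, 44 and 43).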

On some of your other questions, just to briefly clarify what I was saying: Simple Contrast (SC) does not take human perception into account. The SC math is L1/L2, and it is really only useful for the ratio between 0 (or minimum) and maximum. Note that between 0 and max, a variant of Weber gives nearly the same result: (Lmax - Lmin)/Lmin. So if Lmin is close to zero (0.04) and Lmax is 100, you have 99.96/0.04, which is not significantly different from L1/L2, and because it is at the extremes, the gamma curve doesn't play into it. L1/L2 is how manufacturers define the "total contrast" of their monitors, and it's a fine metric for that, though the newest monitor standards (SID IDMS, which is replacing VESA) use Perceptual Contrast Length (PCL); I pasted a screen shot below.

SC (simple contrast, L1/L2) uses luminance as input values, and so do Weber and Michelson contrast for that matter. In fact, so does PCL, before it converts luminance to more or less an L* curve. But L1/L2 is not suitable for describing perception of text contrast; Weber is more the standard for text. I was really talking about the contrast equation, not so much the alphabet soup of light and perception measurements.
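The three measures discussed here, side by side in Python (function names are illustrative; luminance values are arbitrary examples). Written as (Lmax - Lmin)/Lmin, the Weber form is algebraically L1/L2 minus 1, which is why it barely differs from simple contrast at extreme ratios like 100 vs 0.04 (2500 vs 2499). All three also depend only on the ratio of the two luminances, so the 100/20 and 20/4 pairs score identically.

```python
def simple_contrast(l1: float, l2: float) -> float:
    """Simple contrast: larger luminance divided by the smaller."""
    return max(l1, l2) / min(l1, l2)


def weber_contrast(l_max: float, l_min: float) -> float:
    """Weber-style contrast in the form (Lmax - Lmin) / Lmin."""
    return (l_max - l_min) / l_min


def michelson_contrast(l_max: float, l_min: float) -> float:
    """Michelson contrast: (Lmax - Lmin) / (Lmax + Lmin), bounded to 0..1."""
    return (l_max - l_min) / (l_max + l_min)


for l_max, l_min in [(100.0, 0.04), (100.0, 20.0), (20.0, 4.0)]:
    print(f"{l_max}/{l_min}: simple {simple_contrast(l_max, l_min):.2f}, "
          f"Weber {weber_contrast(l_max, l_min):.2f}, "
          f"Michelson {michelson_contrast(l_max, l_min):.3f}")
```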

Nevertheless, as for monitor gamma, I believe I was partly referring to system gamma gain: I found in experiments that adding in the expected sRGB system gamma gain of 1.1 improved how well the reported contrast numbers matched perceived contrast. But I think I was also stumbling through trying to explain why simple contrast failed to accommodate perception, and pointing to gamma and other perception curves. I have a much better description I'm posting later with the glossary.

Also those statements on the problem were early on and perhaps a little premature as I had not completed a series of tests, and I hope I didn't add to confusion — going back to edit some of the earlier posts now.

So I'm pretty sure we're on the same page.

On excessive flare: one of the little bones I have to pick is that some of WCAG is based on obsolete working drafts, even where it claims to use a standard; the sRGB working draft vs the IEC standard is one example. The sRGB working draft is not the standard, but WCAG is using (incorrect) math from it, and also a 5% flare figure. From what I've seen in the IEC standard, the flare is defined as 1 cd/m^2, although I have also seen 0.2 nit and 4.1 nit (I don't have the most current IEC at my fingertips at the moment). And that original draft, plus some of the standards like the 1988 ANSI, are based on CRT monitors, not LCD. LCD screens have a very different flare characteristic. Consider that CRTs were curved, making them a glossy, specular fisheye reflector on top of phosphor dots that were themselves highly reflective; coupled with low output (80 cd/m^2, LOL!), there's little comparison to modern LCDs and especially mobile devices.

Modern LCDs are flat, and either matte or high gloss, and substantially darker in ambient than a typical CRT. Because they are flat, they can be angled to exclude bright light source reflections, especially the high gloss ones (the persistent problem with the high gloss is the mirror effect, bad for Hawaiian shirts!)

For mobile? iPhones are over 700 cd/m^2, and Samsung has phones with over 1100 cd/m^2. Coupled with this, relevant research shows that flare causes problems in the blacks, and becomes a non-factor as screen luminance increases. (All this again shows how out of touch the sRGB 80 cd/m^2 spec is.)
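A simplified Python model of why reflected light matters most in the blacks and fades as the display gets brighter: the reflection adds the same absolute luminance to both colors. The 1 cd/m^2 reflection is an assumed value, in line with the studio measurements reported in this thread. The model ignores every other aspect of viewing conditions, and `effective_contrast` is an illustrative name.

```python
def effective_contrast(y_light: float, y_dark: float,
                       peak_nits: float, ambient_nits: float) -> float:
    """Ratio of absolute luminances (cd/m^2) when reflected ambient light
    (ambient_nits) is added equally to both colors on a display whose white
    is peak_nits. y_light and y_dark are relative luminances (0..1)."""
    light = y_light * peak_nits + ambient_nits
    dark = y_dark * peak_nits + ambient_nits
    return light / dark


# A white vs near-black pair (relative luminance 1.0 vs 0.01) with an assumed
# 1 cd/m^2 of reflected light, at several display peak luminances.
for peak in (80, 400, 700, 1100):
    print(f"{peak:>5} cd/m^2: {effective_contrast(1.0, 0.01, peak, 1.0):.1f}:1")
```

Under these assumptions the pair reaches 45:1 at 80 cd/m^2 but about 88:1 at 700 cd/m^2, against 100:1 with no reflection at all.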

I measured several monitors around the studio here, with monitors off (so measured flare/amb reflection only) some near daylight windows but no direct daylight on the monitor face — all measured less than 1 cd/m^2 per my Sekonic Cine 558. Not exhaustive by any means, but definitely a different world from CRTs.

But my other issue with the 0.05 "flare" is that it seemed more like a way to brute force the SC into something more useful, along with setting the contrast standard to 4.5 which seemed a bit of an odd number compared to existing standards (which usually specify 3:1 & 7:1).
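The effect of the fixed 0.05 term is easy to see numerically: pairs with the same raw luminance ratio get very different WCAG 2.x ratios once it is added, with darker pairs pulled down sharply. A Python sketch with arbitrary example luminances (function names are illustrative):

```python
def raw_ratio(y1: float, y2: float) -> float:
    """Plain luminance ratio L1/L2, lighter over darker."""
    return max(y1, y2) / min(y1, y2)


def wcag_ratio(y1: float, y2: float) -> float:
    """WCAG 2.x ratio (L1 + 0.05) / (L2 + 0.05), lighter over darker."""
    return (max(y1, y2) + 0.05) / (min(y1, y2) + 0.05)


pairs = [(1.0, 0.2), (0.25, 0.05), (0.05, 0.01)]  # each has a raw ratio of 5
for light, dark in pairs:
    print(f"{light:.2f} vs {dark:.2f}: raw {raw_ratio(light, dark):.1f}:1, "
          f"WCAG {wcag_ratio(light, dark):.2f}:1")
```

The three pairs come out at 4.2:1, 3:1 and about 1.67:1 under the WCAG formula.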

As such, I suppose I shouldn't use the word "excessive" as you point out mobile has made ambient conditions an even more complicated issue. IN FACT: Perhaps there needs to be a different standard for mobile vs desktop.

L*: The current standard isn't doing anything with L*, but on the subject of "why modify L*": I'm not saying it "should" be modified, just that I've conducted experiments with various contrast methods (Weber, Michelson, PCL, Bowman, along with some of my own using additional exponents or offsets to adjust for various perceptual needs). The experiments ask questions like: is it possible to have a single equation that takes negative/positive design polarity into account, or do we need to adjust the curve near black, etc.

As an example the PCL equation I'm testing is from the SID IDMSv1p03b.pdf monitor standard, and it uses a bunch of weird exponents, but if you break it down it is very similar to L* in terms of curve shape but with significant changes near black. Here's the PCL math from the IDMS standard:

[Image: PCL math from the IDMS standard (screenshot)] https://user-images.githubusercontent.com/42009457/56729676-b5dcef80-670a-11e9-8d28-259615a39710.png

It looks like PCL may be derived from parts of Mark Fairchild's iCAM image appearance model, but I haven't gotten too deep into iCAM, as I think "that level" is a WCAG 4.0 kind of idea.

At the moment I'm working on a web app to test some of these ideas on a wide base of test subjects.

Again, I thank you for your comments, good to know when I stop making sense! LOL.

Andy


Hi @WayneEDick

I got the email and am going to reply more in depth there, but just want to add to this thread:

I have live experiments on my Perception Research page. Experiments CE14 and CE15 are the most current ones, but I have some more pending shortly.

https://www.myndex.com/WEB/Perception

And I am moving a lot of the research over to my ResearchGate account here:

https://www.researchgate.net/profile/Andrew_Somers3/projects

I am going to avoid posting more "interim" information here, and wait until there is a more concrete path forward. I'll explain more in the email.

Andy

Sorry to have not commented in this for so long, but I only have two bits to add. @Myndex wrote:

- sRGB corrections @svgeesus indicated he was going to do this, namely correct the sRGB math issue and remove the reference to the sRGB working draft which is obsolete.

I was delighted to see @svgeesus in this thread. Is the corrected sRGB math issue public facing anywhere? This is, of course, the lowest of the low-hanging fruit, so it would be very nice to wrap that one up.

@Myndex, your (1) and (2) are larger changes, but they could be crafted as new, additional Success Criteria. The queue for WCAG 2.2 is already pretty significant, but I do not think that is any reason to stop working them as new issues.

@Myndex wrote (emphasis added):

The point here is that the "contrast ratios" created by the equations listed in the WCAG documents are not useful or meaningful for determining perceptual luminance contrast.

The problem with hue is how people with color deficient vision rely on luminance contrast.

What this shows is that luminance contrast is more important than color contrast for readability. But it should also show that a normally sighted person may misinterpret the lack of a luminance contrast.

I should have added some background flavor text earlier. The WCAG maths is only about luminance contrast, and that was a deliberate choice. The working group had so much discussion about the difference between “color” and “hue”, but I must confess most of that has left my mind by now!

The goal at the very beginning (back in 1999, maybe earlier) was to rule out color combinations that were problematic for people with retinitis pigmentosa and color blindness (all common types). By happy accident (or brilliant insight, probably Gregg V.), the working group realized that we could just focus on relative luminance and not worry about prescribing certain palettes (which I think was the expectation at the start). In terms of validating the formula, the focus was on testing using people with these particular impairments. The clinical evaluation was much less concerned (at least initially) about addressing low vision more generally (since those needs are so variable), and not at all concerned with perceptual contrast for people with “normal” vision (since WCAG is about disability, and not aesthetics).

Gregg V. recently posted about this background.

We have had more than a decade now with the formula, and it is working well for people with low vision generally. It is remarkable that it works as well as it does, considering that it is really pretty simple.


Hi @bruce-usab! As you may know, I have joined the W3C Accessibility Guidelines WG and the Low Vision Task Force; some things I'll be discussing at more length on the mailing list.

1. sRGB corrections @svgeesus indicated he was going to do this, namely correct the sRGB math issue and remove the reference to the sRGB working draft which is obsolete.

I was delighted to see @svgeesus in this thread. Is the corrected sRGB math issue public facing anywhere? This is, of course, the lowest of the low-hanging fruit, so it would be very nice to wrap that one up.

There is an issue listed here, #360, in which I have made a few comments regarding the sRGB threshold being incorrect: WCAG lists 0.03928 when it should be 0.04045. It should be noted that the difference has no effect for 8-bit values, but IMO it should be corrected, as the publicly available WCAG documents are widely cited and should be correct/authoritative on such matters.
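A quick Python check of the 8-bit claim (my own sketch, not text from WCAG or the sRGB standard): decode every 8-bit code value with both breakpoints and compare the results. `linearize` and the two constant names are illustrative.

```python
def linearize(channel_8bit: int, threshold: float) -> float:
    """sRGB decode (gamma expansion) of one 8-bit channel to linear light (0..1).

    Only the breakpoint between the linear segment and the power segment
    is varied; both segments themselves are the standard sRGB ones.
    """
    v = channel_8bit / 255.0
    if v <= threshold:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


WCAG_THRESHOLD = 0.03928  # breakpoint printed in WCAG 2.x
SRGB_THRESHOLD = 0.04045  # breakpoint in the sRGB standard

# 10/255 ≈ 0.03922 is below both breakpoints and 11/255 ≈ 0.04314 is above both,
# so every 8-bit value takes the same branch either way.
mismatches = [c for c in range(256)
              if linearize(c, WCAG_THRESHOLD) != linearize(c, SRGB_THRESHOLD)]
print(mismatches)  # []
```

At 10-bit precision or higher, some code values do fall between the two breakpoints (for example 41/1023 ≈ 0.04008), so the choice of constant stops being purely cosmetic.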

I believe svgeesus and I also agree on removing references to certain outdated/obsolete documents such as the original sRGB draft — that draft has a nice tutorial on the concepts but some of the math and figures are incorrect or not relevant to the actual standards.

That said, as I've determined it has no material impact on the research I am doing on other issues (#695, #665, etc.), I haven't been pursuing that fix further, as I believe svgeesus is doing so.

@Myndex, your (1) and (2) are larger changes, but they could be crafted as new, additional Success Criteria. The queue for WCAG 2.2 is already pretty significant, but I do not think that is any reason to stop working them as new issues.

I do intend to continue working on this — how and when is "most appropriate" to incorporate such changes is of course relevant to your normal workflow and vetting process.

In posts above: Path Forward (2.2 vs 3.0 Adoption Thoughts) AND 2.2 Pull Request

I was simply offering what I felt were reasonable "steps toward implementation," for discussion and to form the basis of a plan.

I should have added some background flavor text earlier. The WCAG maths is only about luminance contrast, and that was a deliberate choice. The working group had so much discussion about the difference between “color” and “hue”, but I must confess most of that has left my mind by now!

Yes, I do know that WCAG is intended to refer to just luminance contrast; I was just reiterating that luminance contrast is of primary importance and that color contrast is widely considered a distant lower priority, for the reasons we all know regarding color vision deficiencies.

I should probably review all that I wrote initially, as I may not have been clear on certain issues. What I meant was not that WCAG was trying for something other than luminance contrast (I do realize that was the intent); what I meant is that perceived contrast in real-world environments is not well accounted for by the current WCAG math/method, as some of the experimental results I posted above demonstrate.

The goal at the very beginning (back in 1999, maybe earlier) was to rule out color combinations that were problematic for people with retinitis pigmentosa and color blindness (all common types). By happy accident (or brilliant insight, probably Gregg V.), the working group realized that we could just focus on relative luminance and not worry about prescribing certain palettes (which I think was the expectation at the start).

Yes, in fact this is well known and discussed in the human factors research and guidelines of NASA and the FAA: luminance contrast is a key factor, far above color contrast (Refs 1, 2, 3, 4 below). Though there are some color issues to be considered, which I'll discuss below.

In terms of validating the formula, the focus was on testing using people with these particular impairments. The clinical evaluation was much less concerned (at least initially) about addressing low vision more generally (since those needs are so variable), and not at all concerned with perceptual contrast for people with “normal” vision (since WCAG is about disability, and not aesthetics). Gregg V. recently posted about this background.

Ah, yes, this is some of the information I was hoping to see, as mentioned in some posts above. I saw on the mailing list that someone at Lighthouse was involved (I assume Dr. Arditi?), good to know. Is any of the data or research from these studies available? As a matter of due diligence I'd like to review it if possible.

I have read a lot of Dr. Arditi's research; however, I never read anything that directly supported the current equation or standard.

We have had more than a decade now with the formula, and it is working well for people with low vision generally.

(Emphasis added) I had originally been making the analogy that "a broken clock is right twice a day." though that's not the most accurate analogy. I believe that confirmation bias may be at play here, and I include myself and my initial reactions when I came across the WCAG 1.4.3 et al., in that I certainly had my own biases based on working with colorspaces and digital imagery in the film/TV industry.

By that I mean, my initial experiments showed some very inconsistent results from the method, and my initial reaction was to blame the use of a simple contrast ratio. The actual problem is deeper and more complex, as I detail below:

It is remarkable that it works as well as it does, considering that it is really pretty simple.

Weber is also pretty simple, and also the common standard for "complex stimuli" such as text. But Weber is also not a great fit when used on monitors without modifying the equation. And as part of the results of my "first round" of research, summarized in the posts above, I can tell you that I found that the "standard" Weber contrast is in fact no better than the method WCAG is using, particularly in a dark environment and for lower-contrast comparisons.

The reasons have to do with some unique properties of emissive displays and the environment they exist in. It should be noted that luminance and relative luminance, in isolation, do not depend on whether the stimulus is emissive or reflective: 120 cd/m² is 120 cd/m² regardless of whether it is emitted or reflected.

HOWEVER, the environmental ambient lighting makes a very big difference. For a reflective stimulus, increasing the ambient light also increases the perceived lightness of the reflective object; thus, other things being equal, increasing ambient light improves perception of reflected stimuli. Also, as ambient light increases, the eye's light adaptation changes to compensate, but so long as the reflected stimulus is also affected (i.e. becomes lighter), relative perception should remain consistent (within the photopic vision range of about 8 cd/m² to 520 cd/m²).

The opposite is true for monitors, which are negatively impacted by an increase of ambient light, and the ambient light competes with the emissive light both for relative contrast and for eye adaptation levels.

This is what brings us to the conclusion that something more robust and representative of perception in real world environments is needed.

There is something more to color though, and it can affect anyone, even those with color vision deficiencies, and that is chromatic aberration. Chromatic aberration describes how light through a lens (prism) is deflected differently depending on wavelength. Shorter wavelength light (blue) is literally "bent more" than longer wavelengths (red). The result is that for a given stimulus, the focal point on the retina for the blue portions is different than the focal point for the red.

In an inexpensive simple lens this is seen as a red or green "halo" around an object. Nature provided the human with some correction by placing most of the blue cones in the peripheral vision, and the red and green cones in the center (fovea).

Nevertheless, as we age, our natural lens and vision system cause greater chromatic aberration, which results in lower acuity due to "glare," scatter, and defocus (ref 5). Chromatic aberration is made worse when contrast gets too high. But also, certain specific color combinations (especially pure red against pure blue) can cause significant problems, even if the luminance contrast is "okay" (ref 6; see also "Designing with Blue").

See also my post above on CA.

As such, and to summarize my first round of research:

Contrary to my initial impressions, I found the WCAG math of (Lw+0.05)/(Lb+0.05) is not substantially worse than a plain "ordinary" Weber contrast, though placing the +0.05 offset on both sides of the slash is unusual and has little effect. Rewriting it as (Lw)/(Lb+0.05) makes more sense and is similar to:

The Huang/Peli modified Weber of (Lhi - Llo)/(Lhi + 0.05), which I discuss in a post above. Though from my experiments I would lean toward an offset of 0.1: (Lhi - Llo)/(Lhi + 0.1).
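For comparison, here are the formulas mentioned above side by side as a small Python sketch. Inputs are relative luminances (0 to 1); the function names are my own illustration, and the offsets are exactly the ones quoted above.

```python
def wcag_ratio(l1: float, l2: float) -> float:
    """WCAG 2.x contrast ratio: (Lhi + 0.05) / (Llo + 0.05), range 1..21."""
    hi, lo = max(l1, l2), min(l1, l2)
    return (hi + 0.05) / (lo + 0.05)


def denominator_offset_ratio(l1: float, l2: float) -> float:
    """Rewrite with the offset on the denominator only: Lhi / (Llo + 0.05)."""
    hi, lo = max(l1, l2), min(l1, l2)
    return hi / (lo + 0.05)


def modified_weber(l1: float, l2: float, offset: float = 0.05) -> float:
    """Modified Weber: (Lhi - Llo) / (Lhi + offset); 0 means no contrast."""
    hi, lo = max(l1, l2), min(l1, l2)
    return (hi - lo) / (hi + offset)


# Black vs. white, then black vs. a mid grey (Y = 0.184, roughly L* 50)
for pair in [(1.0, 0.0), (0.184, 0.0)]:
    print(
        pair,
        round(wcag_ratio(*pair), 2),
        round(denominator_offset_ratio(*pair), 2),
        round(modified_weber(*pair), 3),
        round(modified_weber(*pair, offset=0.1), 3),
    )
```

Note the scales differ: the denominator-only ratio tops out at 20 rather than 21, and the modified Weber values sit between 0 and 1, so a threshold for one cannot be reused for another.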

Nevertheless, I have been exploring many approaches to contrast assessment. The world is full of different techniques, some of which I discuss. Yet there is little research that considers the modern, graphically rich web page or app. That has become a central focus of my research.

Any experienced/skilled designer is going to provide adequate luminance contrast as that is a function of good design practice.

It is my intent to provide the research and empirical foundations for functional tools in this regard, tools which should allow the creation of reasonable standards and guidelines. As to the present topic (perceptual contrast), I have identified the following factors as interrelated and essentially inseparable, listed more or less in order of criticality to legibility (assuming contrast is at least above threshold):

Thank you,

Andy

References:

@Myndex, thanks for joining the AG WG and LV taskforce!

Bruce, apparently it was brilliance and Gregg V... :-)

One thought about viewport size (knowing we don't really want to go into it here): for today and for future-proofing, we should consider viewing/examining this across a very broad array of viewports, which is not limited to mobile/desktop. We need to include tablets, much larger web-TV UIs, IR/VR, and IoT/WoT devices, which can include wall-size displays...

On Fri, Jun 7, 2019, 4:14 PM bruce-usab notifications@github.com wrote:

@Myndex https://github.com/Myndex, I tried to send you a private email. Please respond to that if you have it, or ping me back in this thread if it's missing.


This may not be helpful, as Myndex has posted several examples of text which passes the current luminosity minimums but in Real Life is difficult to read if you've got poor vision, but I wanted to paste a very recent example of passing (at 4.74:1) black text on a dark-blue background, colors which even for me are close enough in lightness (or should I say, darkness) that I have difficulty reading them. When they are hovered, these black links turn white, which then becomes readable to me but fails luminosity minimums (just barely, at 4.42:1). I could recommend they darken the blue background, which would make the white text pass while still keeping the black text passing... but in reality that would make both states more difficult for people to read (and so of course I'm not going to recommend that).
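Why the two states trade off against each other is visible in the WCAG 2.x arithmetic itself. A small Python sketch (my illustration; the background luminance is back-calculated from the 4.74:1 figure, since the actual blue isn't given here): for any background, the black-text ratio times the white-text ratio is always 1.05 / 0.05 = 21, so only a very narrow band of backgrounds lets both reach 4.5:1.

```python
def wcag_ratio(l1: float, l2: float) -> float:
    """WCAG 2.x contrast ratio for relative luminances l1, l2 in 0..1."""
    hi, lo = max(l1, l2), min(l1, l2)
    return (hi + 0.05) / (lo + 0.05)


BLACK, WHITE = 0.0, 1.0

# Hypothetical background luminance, back-calculated from a 4.74:1 black-text ratio
bg = 4.74 * 0.05 - 0.05  # about 0.187

black_text = wcag_ratio(BLACK, bg)  # about 4.74
white_text = wcag_ratio(WHITE, bg)  # about 4.43 (the real colors measured 4.42)
print(round(black_text, 2), round(white_text, 2))

# (bg + 0.05) / 0.05 * 1.05 / (bg + 0.05) == 21 for every background
product = black_text * white_text

# Range of background luminance where BOTH black and white text reach 4.5:1
band_low = 4.5 * 0.05 - 0.05   # 0.175
band_high = 1.05 / 4.5 - 0.05  # about 0.183
print(f"both pass only for {band_low:.3f} <= Y <= {band_high:.3f}")
```

That narrow band is why darkening the blue a little could make both states pass numerically, even though, as noted above, it would not make either easier to read.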

This may not be helpful as Myndex has posted several examples of text which passes the current luminosity minimums but in Real Life are difficult to read if you've got poor vision, but I wanted to paste a very recent example of passing (at 4.74:1) black text on dark-blue background,

Hi @StommePoes

Yes, blue and black are (at least in a classical design sense) regarded as a poor color combo — though with the blue here most of the luminance is coming from green.

An issue with the present method is that it overstates the perceptual contrast of darker color pairs, and this is caused by a number of factors, including light adaptation.

In your example, the L* of the blue is about 50, which equates to an 18% grey card. Perceptually, depending on light adaptation of your eye, it should seem to be about halfway between black and white.
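For reference, the CIE 1976 L* relationship behind "L* 50 ≈ 18% grey", as a minimal Python sketch using the standard CIE constants (the function names are my own):

```python
# CIE 1976 lightness constants (exact rational forms)
EPSILON = 216 / 24389  # about 0.008856, breakpoint in Y between linear and cube-root parts
KAPPA = 24389 / 27     # about 903.3; KAPPA * EPSILON is 8


def y_to_lstar(y: float) -> float:
    """CIE L* (0..100) from relative luminance Y (0..1, white = 1)."""
    if y > EPSILON:
        return 116 * y ** (1 / 3) - 16
    return KAPPA * y


def lstar_to_y(lstar: float) -> float:
    """Inverse: relative luminance Y from L*."""
    if lstar > KAPPA * EPSILON:
        return ((lstar + 16) / 116) ** 3
    return lstar / KAPPA


print(round(lstar_to_y(50), 4))   # 0.1842: perceptual middle grey is ~18.4% luminance
print(round(y_to_lstar(0.5), 1))  # 76.1: 50% luminance looks much lighter than mid grey
```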

BUT other factors, such as light adaptation, glare from ambient lights, not to mention the age and clarity of the ocular media of the eye can affect this perception. In particular blue:

If you change the black text to white, your eye will be more "adapted" to the darker blue as that is more of the visual field, and so light text will be perceived to have higher contrast.

ALSO: Very recent research into the function of the photopigment melanopsin indicates a connection with pupil dilation when the color blue is prominent in the visual field. So a blue background can make the eye dilate, causing other colors (such as light text) to appear even lighter.

Somewhere in one of my posts, I suggested that the brighter of the two colors be no darker than perhaps #A0A0A0, #999, or #909090.

As a reference:

The current math/method does not model positive vs negative contrast accurately, nor does it model perception of darker color pairs.

These are things being examined and addressed in current research for Silver.

Cheers,

Andy

Edit 6/19: I'll restate this as "does not accurately model an emissive display in real-world ambient conditions". It fails badly in the midrange colors, as it does not adjust for human nonlinear perception. As such, it should not be used to programmatically determine legibility of colors, especially in the middle and darker ranges.

Just to clarify, the problem can be observed not just on emissive displays like OLED and CRT, but also on transmissive displays like backlit LCDs, which are what most people use. It impacts any display type that relies on a projected light source to convey white. I would be curious to see if a similar issue can be observed on a screen-projected image.

To your point, the formula doesn't work well when the background is in the mid range, but this can easily be overcome by using a foreground color closer to the white point. Clearly, white text at 3:1 is not the same as 3:1 (or even higher) with other color combinations, as shown below. Much to my chagrin, I've observed the same behavior even on printed paper, though I cannot fully vouch for reproduction accuracy.

I do think the formula, while not perfect, has served humanity well. However, with a growing user base using dark mode and more of the elderly population going digital, I wonder if we need more revision. What made the current formula so effective is its simplicity, but I think the world is ready for a more robust formula that takes a more nuanced approach. Maybe we need more than one formula. At minimum, it should take into account whether the foreground element is lighter or darker, and whether the foreground element is closer to the white point.

Hi @incyphe and thank you for taking an interest in this.

Just to clarify, the problem can be observed not just on emissive displays like OLED and CRT, but also on transmissive displays like backlit LCDs, which are what most people use.

That is a semantic argument: I am not talking about the display technology, only whether the stimulus emits light. In that context I meant to encompass all displays that emit light. I clarified the post above with this note:

Note1: By "illuminated" I mean both emissive (led), and backlit transmissive (LCD) display types.

But the CONTEXT was another post somewhere in the thread that was drawing a distinction with passive signage, which is reflective rather than light-emitting. Again, it's not the display technology I am talking about, only whether the device emits light, either through a filter (LCD) or directly.

I would be curious to see if similar issue can be observed on screen projected image.

Do you mean something like a front-surface projection? Or a HUD? The discussion applies to anything where the display is emitting light, even if that emitted light is filtered (via LCD) or reflected off a wall or a semi-transparent glass (HUD), etc. A factor that will change for all these types is the gamma curve, but the gamma curve can be adjusted for perceptual uniformity among the types (within the limits of the environment).

To your point, the formula doesn't work well when background is in mid range, but it can easily be overcome using foreground color closer to white point.

Then by definition it doesn't work well. "being overcome" by a workaround is not "working well."

The formula does not model human perception, and is not supported by 100+ years of human visual perception research. However, you seem to be responding to the first post in a thread of over 70 posts, and the later posts go far beyond that initial issue.

This has been my primary research project since April, and while this thread is not obsolete, it is not up to date either. I've mostly stopped posting results here, but will continue if you'd like. But first let's get you more up to date on the state of the art of this issue so we can be on the same page.

Substantial experimental results, current trials, and some discussion, are on my Perception page here:

https://www.myndex.com/WEB/Perception

There are over a dozen pages there to explore, that go far deeper than this thread which is somewhat in-depth itself. These pages are still "experimental" and should not be considered "final values" by any means. They are also "live" and subject to changes during evaluations.

I have some things on my ResearchGate account too, though I am a bit behind in updating there as well. I am presently involved in Silver which is revolutionary in many ways (far beyond contrast) and has absorbed much of my time.

1) Spatial Frequency can have a greater effect on perceived contrast than the distance between a pair of colors. (In a practical way Spatial Frequency relates to font weight and stroke width, but also font size and letter & line spacing).

2) I took a look at your other thread, and yes, display brightness is important, but what matters is the maximum display luminance relative to the ambient lighting environment, and relative to the eye's light adaptation level.

3) Contrast, like color, is not "real": it is a context-dependent perception. The linear distance/difference between two luminance (light) values does not (in isolation) predict how a human perceives them. If you double the physical amount of light, we perceive only a small increase. The linear luminance point we perceive as halfway between full white (100%) and black (0%) is not 50%, but 18.4%. Perception is not linear to light.

4) Current standards were developed before sites like GoogleFonts, where bad font choices are easy and on-demand. 10 years ago, "WebSafe" fonts were dominant, and those fonts do not have ultra light weights like 200 or 100. Today, people are using 200 weight fonts all over the place, especially on body text, and to very bad effect. The current contrast standards fail for such thin fonts, especially when small.

5) Perhaps most important, all of these things, and several more, are all interconnected and inseparable. An arbitrary definition for a color contrast is meaningless without context of use and the many factors that affect contrast. 4.5:1 might be enough, it might be much more than is needed, and it might be far too little. It depends on the use case.

Clearly, white text at 3:1 is not the same as 3:1 (or even higher) with other color combinations, as shown below. Much to my chagrin, I've observed the same behavior even on printed paper, though I cannot fully vouch for reproduction accuracy.

As implied above, there is a lot more going on than just the colors, and that goes for print/reflective media as well.

I do think the formula, while not perfect, has served humanity well.

Only in the same way that a broken clock is right twice a day, and serves someone who happens to set their watch each day at the same time the clock broke.

Here's a principal problem: as mentioned above, the current standard does not take spatial frequency into account (at least not well, and definitely not for thin small fonts). But human nature being what it is, designers often seem to target 4.5:1 as the best practices target contrast, because that's "the standard" — but it is not even close to what contrast should be for a column of body text.

A combination of a weak standard and the proliferation of thin fonts has resulted in massively unreadable websites. It's a serious problem that did not exist (at least not in the same way) before 2009. A look on the Wayback Machine certainly finds a lot of bad design, but at least the fonts were beefy and black against light. So no, it has not served humanity well. I'm making the argument that it has caused more problems than it was intended to solve.

Infographics that describe some of the issues:

And a rough chart example:

This chart is linear to perception, not linear to light.


Perceptual uniformity is important, and so is recognizing the most important aspects of perception (spatial frequency as an example) and THEN also recognizing how perception is shifted/offset for various impairments, and then determining the best practices for helping accessibility and accommodating impairments.

However, with a growing user base using dark mode and more of the elderly population going digital, I wonder if we need more revision. What made the current formula so effective is its simplicity,

Simplicity isn't useful if it's wrong, and simplicity has nothing to do with "effectiveness". Effectiveness means it works and does the job and is useable.

And in this case, there is no point nor reason to be "simple". If we are talking about testing two colors, there is no real difference between

(L1 + 0.05)/(L2 + 0.05) and (L1 - L2)/(L1 + 0.1)

Both are computationally about the same, but the second one (modified Weber) is much more correct in terms of perception. But there are even better models, like PCL, which are only marginally more complicated, and that's not relevant anyway. People aren't doing this math on a pocket calculator; they are using web apps, and the code is trivial even for a more complicated model like SAPC, PCL, CIECAM02, etc. They are all easily implemented in JS.

I'm not suggesting a complicated image model like Hunt's, but right now an effective, robust solution exists in about 12 lines of code.

but I think the world is ready for a more robust formula that takes a more nuanced approach. Maybe we need more than one formula. At minimum, it should take into account whether the foreground element is lighter or darker, and whether the foreground element is closer to the white point.

The new algorithms are developed, tested, and functional. Some of the early results from early versions are in this thread, more recent result examples/tests on my Perception pages. I will be publishing the math and code soon. More and deeper evaluations are pending.

I will tell you that a single simple block of code will correctly predict perceptual contrast for both normal and reverse polarity (dark on light or light on dark) as well as make minimally invasive offsets to ensure that those with color vision deficiency are presented with enough contrast for readability.

In fact a breakthrough discovery this last weekend was that the math is surprisingly symmetrical in results, so that a 30% normal polarity (dark text on light or BoW) has the same perceptual contrast as -30% for reverse (WoB), though further evaluations are needed to dial in a few minor things.

Again thank you for your interest, I consider this a critically important issue and am happy to continue discussing it.

Andy

Dear @Myndex, I would like to know if there is any way to use the math behind more accurate models to come up with color palettes that would map to the range currently accepted by the WCAG 2.0 and 2.1 guidelines. A follow-up question connected to the colors is the frequency scale and sizing. Some designers use the CSS calc() function and vw (viewport width) units, together with rem units and line-height, to get so-called fluid typography. What would be the first and simplest step to approach frequency scaling using a similar approach applicable to CSS? If we could get to something sound, safe and simple, we could try to propose it as an option in frameworks like Bootstrap and Foundation.

Thank you for your effort, which is truly unmatched...

At long last there is a working JavaScript tool as a proof of concept for some of the new directions in perceptual contrast prediction.

I have a live contrast checker tool that demonstrates the work in progress. It is preliminary, and not the standard as yet, but feel free to bash around with it — you'll see there are fewer false fails, but more importantly, fewer false PASSES which is the more substantial problem we are working to solve.

Work In Progress SAPC Contrast Tool: https://www.myndex.com/SAPC/

The page includes some sample type specimens. NOTE: these are all STATIC (only the colors are dynamic), and there is not yet an indicator of whether a line of text is failing or passing. On the far left, you'll see a number such as L70: this means that line of text requires 70% contrast or higher, so it would potentially fail at a lower contrast.

This is not a normative tool, nor a standard — it is a work in progress pre-release beta.

Along with this, I also have a CVD simulator based on the Brettel model (generally considered an accurate representation of the most common color vision deficiencies) which is here: https://www.myndex.com/CVD/

Please feel free to ask me any questions.

Regards,

Andrew Somers Color Science Researcher

Dear @Myndex I would like to know if there is any way to use the math behind more accurate models to come up with color palettes that would map to the range currently being accepted by WCAG ....SNIP.... What would be the first and most simple step to try and approach frequency scaling using similar approach applicable to CSS.

Hi @pawelurbanski

I think there is a potential Venn diagram way of making a subset of colors that both takes advantage of the emerging tech, but also meets the current WCAG (by incorporating the false fails). I just posted a beta of a new tool for contrast: https://www.myndex.com/SAPC/

Your question regarding dynamic text size/weight is a little more difficult to answer, but it is something that is in the works here in my lab. On the SAPC link, you'll see some samples of text sizes and weights. I'll try and answer more completely a bit later, I have to run to a meeting right now.

Thank you for your effort, which is truly unmatched...

Thank you very much — when I started this thread almost a year ago now, I thought I'd be done in a couple months. LOL. Now I expect to be busy with this project for a few more years. In case you ever wondered how deep the perception rabbit hole is, I can tell you...

... It's really really deep (and I swear that cheshire cat is taunting me on purpose). What if I told you that half of what you see is made-up by your brain? 😳😎 The fact that perception is largely a function of your neurological system is one of the things that makes accurate prediction of contrast perception so incredibly difficult. But we're getting there!

Andy

Thanks for this. There is widespread confusion based on the existing WCAG recommendation. Typical authors don’t understand that it is a weak and inconsistent heuristic which mostly gives reasonable positive results (i.e. if the guideline says there is too little contrast, it is likely worth at least considering changing the color scheme) but produces many false negatives (i.e. the guideline says a color scheme is just fine, but it clearly has too little perceived contrast to be ideally legible).

A better heuristic would be very welcome.

Even an additional test as simple as “CIELAB L* difference should be at least 40” would make a big improvement I think.
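A minimal Python sketch of that extra test, assuming sRGB hex colors and a white point with Yn = 1. The helper names (`delta_l_star`, `passes_delta_l`) are hypothetical, and the 40 threshold is only the suggestion above, not part of WCAG or SAPC:

```python
def srgb_to_linear(c8: int) -> float:
    """Decode one 8-bit sRGB channel to linear light (piecewise sRGB curve)."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance Y of an sRGB hex color such as "#777777" (white is about 1.0)."""
    h = hex_color.lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def cielab_l_star(y: float) -> float:
    """CIE 1976 lightness L* (0..100) from Y, assuming the white point has Yn = 1."""
    delta = 6 / 29
    f = y ** (1 / 3) if y > delta ** 3 else y / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16


def delta_l_star(fg: str, bg: str) -> float:
    """Absolute L* difference between text and background colors."""
    return abs(cielab_l_star(relative_luminance(fg)) - cielab_l_star(relative_luminance(bg)))


def passes_delta_l(fg: str, bg: str, minimum: float = 40.0) -> bool:
    """Hypothetical check for the proposed rule: L* difference of at least `minimum`."""
    return delta_l_star(fg, bg) >= minimum


for fg, bg in [("#000000", "#ffffff"), ("#777777", "#ffffff"), ("#eeeeee", "#ffffff")]:
    print(f"{fg} on {bg}: dL* = {delta_l_star(fg, bg):.1f}, pass = {passes_delta_l(fg, bg)}")
```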

Hi @jrus

Thanks for this. There is widespread confusion based on the existing WCAG recommendation.

You're telling me!! There is very widespread misunderstanding of human visual perception in general. It is an abstract and very non-intuitive subject. Color is not real. Contrast is not real. They are perceptions and extremely context sensitive. This makes building a prediction model challenging.

Typical authors don’t understand that it is a weak and inconsistent ...SNIP... produces many false negatives (i.e. the guideline says a color scheme is just fine, but it clearly has too little perceived contrast to be ideally legible).

With a random set of colors, the current WCAG method produces about 50% false passes and 22% false fails compared to a perceptually uniform prediction model such as SAPC.

Even an additional test as simple as “CIELAB L* difference should be at least 40” would make a big improvement I think.

The beta tool link I posted (https://www.myndex.com/SAPC/) is similar to L* difference, but more influenced by some of Fairchild's work (RLAB), not to mention Hunt's model, CIECAM02, etc.

Play with the tool, and please send any questions or comments.

Thank You

Andy

I would note that some combinations (e.g., pure red text on a white background, white text on an orange background) that I have seen asserted as being false fails (by some people, not Andy) are actually quite problematic for certain not-uncommon visual disabilities. SAPC also returns low % for both of those combinations.

the guideline says a color scheme is just fine, but it clearly has too little perceived contrast to be ideally legible

@jrus can you provide some examples?

Look up at the top of the page, Andy provided an extensive collection of examples. The essential problem is that the WCAG heuristic is based on assuming that human lightness perception is logarithmic, but this is not an accurate model. I’m not sure who picked that model or why, but they should have consulted an expert on vision or colorimetry first.

For some purposes a logarithmic model can be useful because human vision can adapt to a wide range of scene brightnesses (if you give your eyes 30 minutes to adapt, you can see in a very dim room or out in direct sun), but at any particular level of adaptation, lightness perception is roughly similar to a square root relationship.
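To make the log-versus-square-root point concrete, here is a small Python sketch. It picks luminance pairs that all hit exactly 4.5:1 under the WCAG 2 formula (equal steps in log(Y + 0.05)) and measures them on a rough square-root lightness scale. That scale is only the approximation described above, not CIE L* or SAPC, and the helper names are made up for illustration:

```python
import math


def wcag_ratio(y1: float, y2: float) -> float:
    """WCAG 2.x contrast ratio from two relative luminances (order does not matter)."""
    return (max(y1, y2) + 0.05) / (min(y1, y2) + 0.05)


def darker_for_ratio(y_light: float, ratio: float) -> float:
    """Luminance of the darker color that hits `ratio` exactly against `y_light`."""
    return (y_light + 0.05) / ratio - 0.05


def sqrt_lightness_gap(y1: float, y2: float) -> float:
    """Difference on a rough square-root lightness scale (0 = black, 1 = white)."""
    return abs(math.sqrt(y1) - math.sqrt(y2))


pairs = []
for y_light in (1.0, 0.5, 0.3):
    y_dark = darker_for_ratio(y_light, 4.5)
    pairs.append((y_light, y_dark))
    # Every pair has the same WCAG ratio, i.e. the same step in log(Y + 0.05).
    print(f"Y {y_light:.3f} vs {y_dark:.3f}: ratio {wcag_ratio(y_light, y_dark):.2f}, "
          f"sqrt gap {sqrt_lightness_gap(y_light, y_dark):.3f}")
```

On the square-root scale the gap for these 4.5:1 pairs shrinks from roughly 0.57 to roughly 0.38 as the lighter color gets darker, which is the kind of inconsistency being described.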

Then there are additional adjustments that can be made based on which of the colors is in the background, etc. As Andy says, Mark Fairchild has done some good work on color appearance modeling, building on decades of work by other researchers. I highly recommend his book, Color Appearance Models.

Look up at the top of the page, Andy provided an extensive collection of examples.

I think you are talking about the color pairs with a 2.9:1 luminance ratio. I can live with them failing, as there is only one I would subjectively characterize as having good apparent contrast. Those samples still all rate < 80% with SAPC, so they are still fails.

I’m not sure who picked that model or why, but they should have consulted an expert on vision or colorimetry first.

See: http://www.w3.org/TR/WCAG20/#IEC-4WD. SAPC is better, but subject matter experts (SMEs) were consulted.

I am talking about the examples of color pairs which are listed as “passing” the WCAG test (contrast ratio 4.5), but are not particularly legible, especially at small text sizes for readers with poor vision.

Another example might be #c05500 on #000000 (for comparison, #c05500 on #ffffc2 is declared to fail, even though it is dramatically more legible).
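For anyone who wants to reproduce the WCAG 2 verdicts for this pair, here is a minimal Python sketch of the WCAG 2.x relative luminance and contrast ratio. The function names are my own; WCAG 2.0 lists 0.03928 as the linearization threshold, which gives identical results for 8-bit values:

```python
def srgb_to_linear(c8: int) -> float:
    """Decode one 8-bit sRGB channel to linear light."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB hex color."""
    h = hex_color.lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG 2.x ratio (L_lighter + 0.05) / (L_darker + 0.05); symmetric in fg and bg."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    return (max(l1, l2) + 0.05) / (min(l1, l2) + 0.05)


for bg in ("#000000", "#ffffc2"):
    ratio = contrast_ratio("#c05500", bg)
    verdict = "pass" if ratio >= 4.5 else "fail"
    print(f"#c05500 on {bg}: {ratio:.2f}:1 -> {verdict} at 4.5:1")
```

With these formulas #c05500 lands just above 4.5:1 on black and just below it on #ffffc2.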

I think if I had to make a very simple rule for text contrast accessibility, I would recommend something like:

CIELAB:

lightness difference ΔL* > 35 as a minimum

lightness difference ΔL* > 50 for typical body text and labels at normal size, or more where possible, especially for smaller or thinner fonts

chroma C* < 75 for any background color

chroma C* < 50 for best results

and a recommendation to try not to give both foreground and background high chroma.
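A rough Python sketch of that rule of thumb, assuming sRGB input, the standard sRGB-to-XYZ matrix, and CIELAB computed relative to sRGB's own D65 white. `jrus_rule` is a hypothetical name, and the "both colors high chroma" advice is left out because no number was proposed for it:

```python
import math

# sRGB (D65) linear RGB -> CIE XYZ matrix rows.
M = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)
# XYZ of sRGB white, so that pure white maps to a* = b* = 0.
WHITE = tuple(sum(row) for row in M)


def srgb_to_linear(c8: int) -> float:
    """Decode one 8-bit sRGB channel to linear light."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def hex_to_lab(hex_color: str) -> tuple:
    """CIELAB (L*, a*, b*) of an sRGB hex color."""
    h = hex_color.lstrip("#")
    rgb = [srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4)]
    x, y, z = (sum(m * c for m, c in zip(row, rgb)) for row in M)
    delta = 6 / 29

    def f(t: float) -> float:
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / WHITE[0]), f(y / WHITE[1]), f(z / WHITE[2])
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def jrus_rule(fg: str, bg: str) -> dict:
    """Hypothetical checker for the proposed thresholds (not WCAG, not SAPC)."""
    l_fg, _, _ = hex_to_lab(fg)
    l_bg, a_bg, b_bg = hex_to_lab(bg)
    dl = abs(l_fg - l_bg)
    c_bg = math.hypot(a_bg, b_bg)  # background chroma C*
    return {
        "delta_l": dl,
        "min_ok": dl > 35,
        "body_text_ok": dl > 50,
        "bg_chroma_ok": c_bg < 75,
        "bg_chroma_best": c_bg < 50,
    }


print(jrus_rule("#000000", "#ffffff"))
print(jrus_rule("#ffffff", "#ff0000"))
```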

But this is just off the top of my head, and I am not an expert in text legibility per se. It would be good to do some large-scale empirical testing on as wide a range of color schemes, devices, contexts, and individual readers as possible.

Hi @jrus

Thank you for your comments. CIELAB is only quasi-uniform, and simple ΔL* is a weak predictor of contrast, as we determined last year. CIECAM02 is better but more complicated and intended for a different use case than we need. SAPC is a better predictor of perceived contrast of TEXT for readability, and that is its primary purpose. You can look at the JavaScript in the tool I linked to above, which should make it clearer. We'll have some tutorials published soon.

Also, I think you are referring to some very early posts in this thread, and we are past those now. You seem to be repeating a lot of the things I've stated here and on several other threads, so I'm going to guess we're on a similar page; however, I have yet to publish the several papers I am working on that cover this in depth, and there are substantial developments that I have not yet made public.

In the meantime, please see my Perception Experiments page(s) for more of the state-of-the-art materials, at least preliminary items. I also have some things on my ResearchGate account, and if you search my username here you'll see a lot of posts that cover all of these issues.

The current SAPC (to be called APCA in the final release, I believe) is not yet using many of the extensions and modules that will expand it, partly because IP protections need to be completed first. One of the modules deals very directly with color/hue related issues.

It is not as simple as "this much L* difference" or "more/less chroma"; in fact, chroma contrast and luminance contrast are processed by SEPARATE channels in the visual cortex, and are largely independent. For accessibility, there are issues relating to CVD and chromatic aberration, but I cannot get into the details right now. Again, it is not as simple as saying "more/less than xx".

A key factor is spatial frequency. Again, the articles on my Perception page discuss this.
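As a rough illustration of why text size and weight map to spatial frequency, here is a Python sketch converting a stroke width in CSS px to cycles per degree of visual angle. Assumptions: the CSS reference pixel (1/96 inch seen from 28 inches, about 0.0213°, as defined in CSS Values and Units), and a hypothetical simplification that one stroke plus an equal gap makes one cycle. This is not how SAPC or its planned modules handle spatial frequency:

```python
import math

# Assumption: the CSS reference pixel, i.e. one pixel at 96 dpi viewed from 28 inches.
CSS_PX_INCHES = 1 / 96
REFERENCE_DISTANCE_INCHES = 28.0


def degrees_per_css_px(distance_in: float = REFERENCE_DISTANCE_INCHES) -> float:
    """Visual angle subtended by one CSS px at the given viewing distance."""
    return math.degrees(2 * math.atan(CSS_PX_INCHES / (2 * distance_in)))


def stroke_frequency_cpd(stroke_px: float,
                         distance_in: float = REFERENCE_DISTANCE_INCHES) -> float:
    """Rough fundamental frequency, in cycles/degree, of strokes `stroke_px` wide.

    Hypothetical simplification: one stroke plus an equal-width gap = one cycle.
    """
    period_deg = 2 * stroke_px * degrees_per_css_px(distance_in)
    return 1 / period_deg


for width in (1, 2, 4):
    print(f"{width}px stroke: {stroke_frequency_cpd(width):.1f} cycles/degree")
```

A 1px stroke comes out around 23 to 24 cycles per degree at the reference distance; doubling the width halves that.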

As for large scale empirical testing, again this is planned and the software is in development (PerceptEx — The Web Perception Experiment). I hope to have that running and collecting data this spring.

The new methods are part of 3.0, as they are a complete break from the current methods. As such, discussing the current methods is somewhat moot — those are not going to change. The change is part of the new generation, which is Silver/3.0. We already have a set of recommendations/levels — look for that soon.

Cheers,

Andrew Somers Color Science Researcher

Thanks @jrus, that is a good example, and SAPC rates at only 75.1%.

The false fail (SAPC 91.7) is less troubling to me because (1) it is failing against 4.5:1 (and not 3:1) and (2) it only takes a little tweaking (#ffffcd vs #ffffc2, also SAPC 91.7) to get a pass.


Hi @bruce-usab !

And just to add, some of these results from SAPC will be further refined with the color module, which is pending a few things before being made public; I'll say more about that below.

BUT ALSO please keep in mind that SAPC will show a DIFFERENT percentage depending on polarity! So if you reverse the background and text colors you will get a different result.

For the first example, the SAPC is actually even lower: BG=000000 Text=C05500 is an SAPC of -71.4%

The 75% figure is for text at #000000 on a background of #C05500.
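Since SAPC's equations aren't spelled out in this thread, here is only a toy Python model of why polarity matters. It applies different exponents to background and text luminance depending on which is lighter; the exponents are hypothetical and it will not reproduce any of the SAPC percentages above. The WCAG 2 ratio is included to show that it is symmetric:

```python
def wcag_ratio(y_text: float, y_bg: float) -> float:
    """WCAG 2.x ratio: symmetric, so swapping text and background changes nothing."""
    return (max(y_text, y_bg) + 0.05) / (min(y_text, y_bg) + 0.05)


# Hypothetical exponents, chosen only to illustrate polarity sensitivity.
DARK_ON_LIGHT = (0.56, 0.57)  # (background exponent, text exponent)
LIGHT_ON_DARK = (0.65, 0.62)


def toy_polarity_contrast(y_text: float, y_bg: float) -> float:
    """Toy score: positive for dark text on light, negative for light text on dark."""
    bg_exp, txt_exp = DARK_ON_LIGHT if y_bg >= y_text else LIGHT_ON_DARK
    return 100 * (y_bg ** bg_exp - y_text ** txt_exp)


for y_text, y_bg in [(0.2, 1.0), (1.0, 0.2)]:
    print(f"text Y={y_text}, bg Y={y_bg}: WCAG {wcag_ratio(y_text, y_bg):.2f}:1, "
          f"toy score {toy_polarity_contrast(y_text, y_bg):+.1f}")
```

Swapping the two colors leaves the WCAG ratio unchanged but flips the sign of the toy score and changes its magnitude, which is the behavior described for SAPC.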

As for a quick preview of the color module trims, estimating the trims manually, the first example would probably come out closer to -58.6% and 62.7%, depending on the polarity as above.

For the second example, with the color module's blue avoidance (under evaluation) enabled along with the red offset, it would be -84.9%/77.2% rather than 91.7%.

This has to do with blue being a negative contrast contributor for foveal imaging, and also the problems with chromatic aberration.

These are some of the "secret goodies" which I am super eager to demonstrate more fully in the near future. If you look at these colors with these revised percentages in mind you'll see how they align more closely with our perception. Moreover, they better predict several impairment issues, which of course is why we are all here.

OF NOTE: With the color module in place, we will no doubt be revisiting the actual percentage levels.

Thanks!! Andy

Testing out the module, I plugged in #3BA545 as a background color with white and black text:
Black text = 91.9% SAPC (L90)
White text = -75.5% SAPC (L70)

When I compare a sample of the L90 black text to a sample of the L90 white, the white appears as much or more clearly legible than the black text, even though it gets a significantly worse SAPC score.
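For comparison, here is what the current WCAG 2 ratio says about the same background, as a minimal Python sketch (function names are mine; the tie-point value is derived from the WCAG formula, not from SAPC):

```python
def srgb_to_linear(c8: int) -> float:
    """Decode one 8-bit sRGB channel to linear light."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG 2.x relative luminance of an sRGB hex color."""
    h = hex_color.lstrip("#")
    r, g, b = (srgb_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: str, b: str) -> float:
    """WCAG 2.x contrast ratio (symmetric in its arguments)."""
    la, lb = relative_luminance(a), relative_luminance(b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)


BG = "#3BA545"
vs_black = contrast_ratio("#000000", BG)
vs_white = contrast_ratio("#ffffff", BG)
# Background luminance at which black and white text would tie:
# (Y + 0.05) / 0.05 == 1.05 / (Y + 0.05)  =>  Y = sqrt(1.05 * 0.05) - 0.05
crossover_y = (1.05 * 0.05) ** 0.5 - 0.05
print(f"Y(bg) = {relative_luminance(BG):.3f}, tie point Y = {crossover_y:.3f}")
print(f"black text {vs_black:.2f}:1, white text {vs_white:.2f}:1")
```

Because black has Y = 0 and white has Y = 1, the two ratios always multiply to 21, so WCAG 2 must favor one polarity; for this green it rates black text well above 4.5:1 and white text below it.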

Hi Mark @mrlebay thank you for your questions.

First to mention, this is not the final release algorithm/math. This is beta, and a simplified version. The complete set of equations and additional features are still under development.

Also, the bulk of the early evaluations and tests were using greyscales. There is a set of color and spatial frequency adjustment modules for the purpose of addressing people with CVD, and for adjusting for certain other variations.

This initial version is a public beta, and please do bash at it and see what problems you may find. I am aware of some discrepancies, particularly with high spatial frequencies (small and very thin fonts). More below:

Testing out the module, I plugged in #3BA545 as a background color with white and black text:
Black text = 91.9% SAPC (L90)
White text = -75.5% SAPC (L70)

When I compare a sample of the L90 black text to a sample of the L90 white, the white appears as much or more clearly legible than the black text, even though it gets a significantly worse SAPC score.

Among other things, for this beta version, certain constants were chosen as "middle of the road" values, but will in a future revision be more sensitive to changes in spatial frequency and hue, and other factors. Also, these interim constants were tested closer to threshold, as that is more important for accommodating impairments. The constants will be replaced by variables in the future to more accurately predict contrast based on additional input parameters.

Also, something we are investigating is how different rasterizers handle light vs dark text. As you can see from the sample below (rasterized then zoomed up 800%) the dark text is rendered thinner than the light text. This changes the spatial frequency and "thicker" (lower freq) results in higher apparent contrast. But also the black is more blended with the BG, reducing the contrast further — note the vertical stroke of the letter p for instance, not even close to black.
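One mechanism that can produce this kind of asymmetry, sketched in Python under an assumption: the rasterizer blends partially covered edge pixels in linear light. Real rasterizers differ (gamma handling, hinting, stem darkening, subpixel rendering), so this is a simplified model, not a description of any particular browser:

```python
def srgb8_to_linear(v: int) -> float:
    """Decode an 8-bit sRGB grey value to linear light."""
    c = v / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb8(y: float) -> int:
    """Encode linear-light luminance (0..1) as an 8-bit sRGB grey value."""
    c = 12.92 * y if y <= 0.0031308 else 1.055 * y ** (1 / 2.4) - 0.055
    return round(255 * c)


def edge_pixel(text8: int, bg8: int, coverage: float) -> int:
    """Grey value of a pixel the glyph covers by `coverage`, blended in linear light."""
    y = coverage * srgb8_to_linear(text8) + (1 - coverage) * srgb8_to_linear(bg8)
    return linear_to_srgb8(y)


dark_on_light = edge_pixel(0, 255, 0.5)  # black stroke on white
light_on_dark = edge_pixel(255, 0, 0.5)  # white stroke on black
print("half-covered pixel, dark text:", dark_on_light, "(text is 0)")
print("half-covered pixel, light text:", light_on_dark, "(text is 255)")
```

Both half-covered pixels come out at the same grey, but that grey is far from black and fairly close to white, so dark strokes lose weight at their edges while light strokes gain it.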

This is one of the frequent problems with using very thin text for web content, and part of why accurately predicting perceived contrast and readability is a challenging task. And again, these anomalies are being addressed for future revisions.

And thank you for pointing this out. I'll mention in closing that hue/saturation contrast and luminance contrast are processed separately in the brain, and the combination of perceptions is not always intuitive. Our goal here is to ensure readability, but complete human perception models and prediction are shockingly complex. One of my favorite examples is this illusion:

The CSS values of the Yellow dots are identical, but they look significantly different due to context.

I hope that answers your question, and please feel free to ask more. I hope to have some updates and adjustments soon for better consistency at higher contrast levels.

Thank you,

Andrew Somers Color Science Research

Yes, it does. I'm playing around with a series of middle-of-the-road values for a project I'm working on. I'll test them on SAPC and share them here.

This whole thread has been a wonderful read. Thanks for all your posts @Myndex.


Hi Mark @mrlebay

Thank you very much!

Some data points I am collecting, as they affect contrast perception — if it's not much trouble, including the information would help with context:

1) User agent/browser, operating system, and color management settings.
2) Display make/model, whether it is calibrated, and the type of calibration.
3) ICC profile, if calibrated and using color management.
4) Ambient lighting conditions.

I discuss a "standard observer model" in one of my repositories here on GitHub. It is very preliminary, but intended to provide guidance on a reference environment for evaluations.

https://github.com/Myndex/SAPC

Thank you!!

Andy