That diffing would need to be either precisely defined or not exist at all, because node identity is observable by JS.

Open WebReflection opened 4 years ago

That diffing would need to be either precisely defined or not exist at all, because node identity is observable by JS.

@emilio like I've said, it's an implementation detail, the only concern for vendors is to not dispatch mutation events, or custom elements callbacks, if the parentNode during the process, is not different form the one present at the moment of the replace.

If it is, it's OK to dispatch all the things as usual, as their previous different container has the rights to know.

Like I've also mentioned, if this is not possible, this proposal is useless, and a big miss out from the standard, as you have to take into account minimum 1K of necessary user-land code to obtain the exact same result, vDOM or not, as it's been done for the last few years.

@emilio if needed, I've just created from scratch a diffing algorithm that fits in less than 100 LOC and it could be spec'd too.

The get function, in specs case, should instrument the engine to trigger callbacks and mutations when the passed value is -1, and ignore these when the passed value is 1 and the node was already present in the same parentNode.

Values like 0 and -0 can be ignored so ... anyone interested?

This kind of operation is only slow if you have lots of listeners, right? I'm pretty sure browsers have fast paths if you don't. Is that common, to have listeners but also not want them to fire at times?

I thought the main problem with perceived slowness was that folks triggered layout "accidentally" in a loop or some such.

@annevk apparently the entirety of the Web uses home made code to diff nodes in lists or any sort of layout. This would be like the most welcome helper of them all, if provided natively, but my latest library weights 450bytes once compressed, so I can live with that, but it'd be great if we could solve this super common need in the platform, imho.

I'm not sure how that addresses the questions posed. However, you might find #270 an interesting read.

@annevk you are right, I've answered something else ... the thing with listeners is that Custom Elements / components might do expensive operations in the disconnectedCallback or connectedCallback, and there's no way to know if these were triggered after a diffing, as the node maybe was just moved instead, so that actually a changedPosition callback could be useful instead, but that's a whole different story. Hopefully this answers better.

@annevk that proposal has gazillion comments, and I actually stopped understanding it at the second let textToken = changes.firstChild(token); line, as it's not clear where that token comes from, but if it's able to apply many changes at once in an optimized way, including inner DOM nodes manipulation, then it seems to address already this proposal too, so I believe we might close this one, and hope that one will end up working for the scenario mentioned in here too.

What do you think?

Yeah, using that issue as a tracker for all "diff" proposals seems reasonable to me. I don't really expect browsers to do anything here soon as it's rather difficult to come up with some kind of abstraction that would offer true measurable performance benefits and can also be easily adopted and implemented.

@annevk the measurable performance here is not so trivial, but at the same time not having this in core means every site needs to take into account extra JS to diff changes in the view.

That won't likely change neither, as the proposed API is not as easy as writing HTML via a tagged template literal, JSX, or whatsoever, but at least the code in charge of diffing can go.

Again, 450bytes for my latest library that does that is really not the issue of the modern Web, and yet if I could not use that and trust the browser instead, it'd be awesome, specially because I cannot grant through my tiny library that moved nodes won't trigger unnecessary callbacks or mutations, which is also something that API could take care of, as it has all the changes, and if a node never lost its parent container, I don't see any reason to trigger anything (but then maybe position mattered, so this might be side-effectish).

Feel free to close this if it's just a reference to the issue you'd like to bring there 👋

If you could quantify the cost somehow it might be interesting to see if it's valuable to have primitives for "perform this DOM operation", but don't invoke any of the callbacks. You get that for free if you don't have any callbacks, but indeed you cannot avoid some of them for certain use cases, but might still want to avoid calling them sometimes.

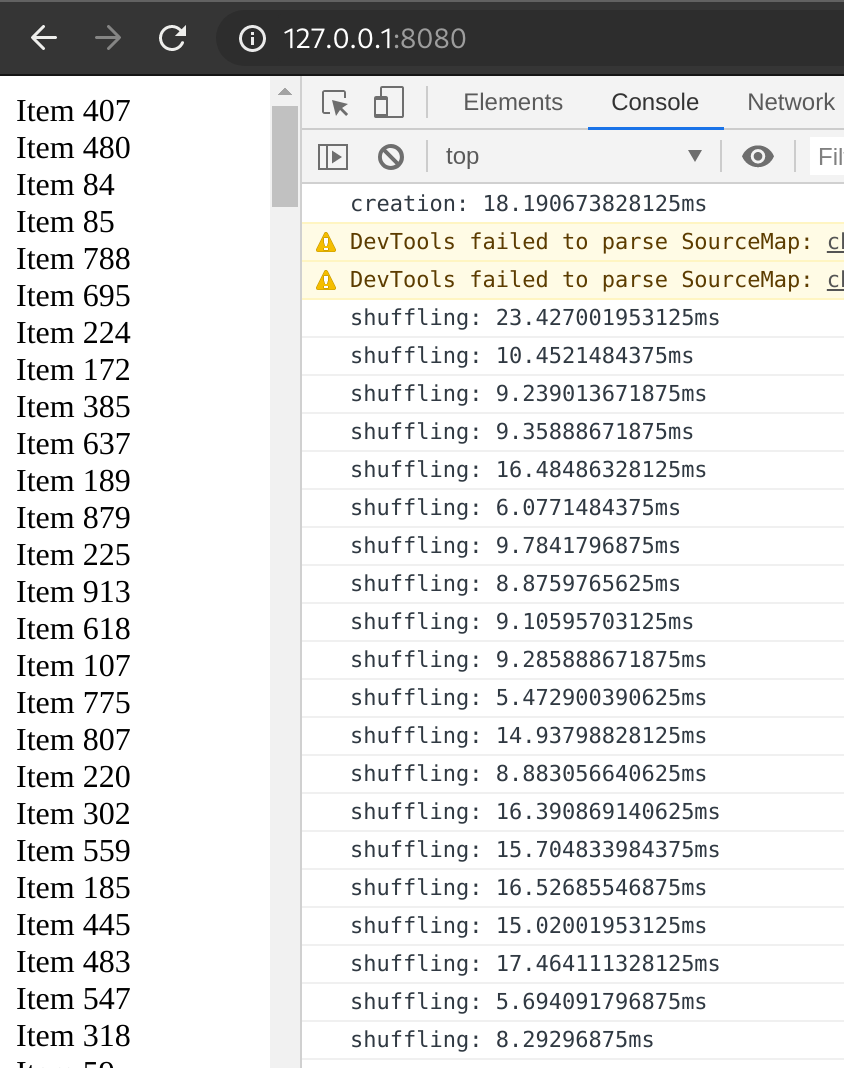

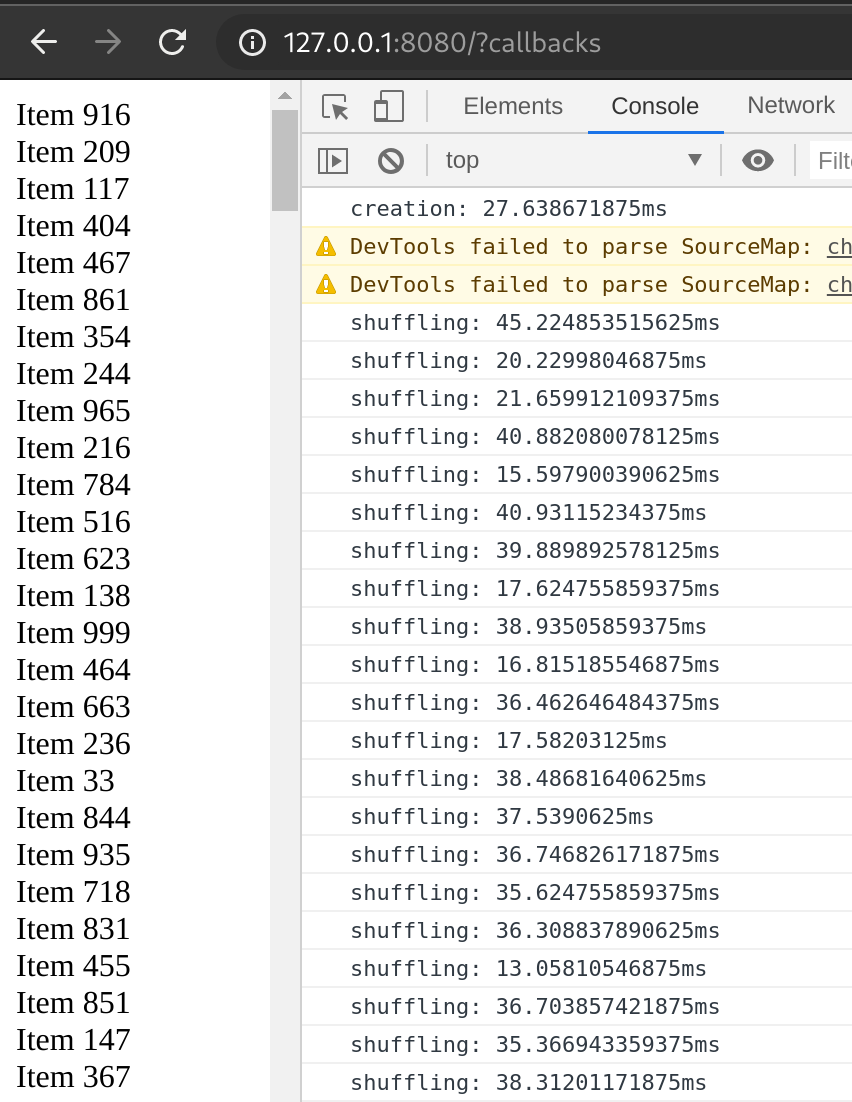

@annevk so this is a very basic test case you can test right away in your machine too:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Custom Elements Callbacks Cost</title>

<style>

custom-element {

display: block;

}

</style>

<script>

class NoCBElement extends HTMLElement {

get value() {

return this.textContent;

}

set value(value) {

this.textContent = value;

}

}

class CBElement extends NoCBElement {

connectedCallback() {

this.connected = (this.connected || 0) + 1;

this.setAttribute('connected', this.connected);

}

disconnectedCallback() {

this.disconnected = (this.disconnected || 0) + 1;

this.setAttribute('disconnected', this.disconnected);

}

}

customElements.define(

'custom-element',

/^\?callbacks?/.test(location.search) ?

CBElement : NoCBElement

);

this.onload = function () {

var container = document.body;

var items = [];

console.time('creation');

for (var i = 0; i < 1000; i++)

items.push(

container.appendChild(

create('custom-element', {value: 'Item ' + i})

)

);

console.timeEnd('creation');

setInterval(

function shuffle(randomly) {

var before = items.slice(0);

items.sort(randomly);

console.time('shuffling');

//* single pass

for (var i = 0; i < 1000; i++) {

before[i] = before[i].nextSibling;

container.insertBefore(items[i], before[i]);

}

//*/

/* multi pass

for (var i = 0; i < 1000; i++)

container.removeChild(before[i]);

for (var i = 0; i < 1000; i++)

container.appendChild(items[i]);

//*/

console.timeEnd('shuffling');

},

2000,

function randomly() {

return Math.random() - Math.random();

}

);

};

function create(name, props) {

return Object.assign(document.createElement(name), props);

}

</script>

</head>

</html>There is a commented version of the test that explicitly drops all nodes and append back, but I'd like to focus on the single pass case where nodes don't ever actually even leave their container, they are just moved around.

The connectedCallback and disconnectedCallback trigger each time this movement happens, which is undesired for the "shuffling" or general reordering purpose, as it's simple enough to eventually manually dispatch an event to their container after the shuffling happen, if knowing that such shuffling is important.

To trigger the callback version simply pass ?callback or ?callbacks to the URL, and check results out.

Of course the cost of each callback is also important, but the point is that one cannot possibly know what the connected and disconnected callbacks do, but most of the time do much more than simply updating an attribute (add events, clear events, add listeners, clear listeners, fetch content on connected, etcetera)

I hope this was useful.

I stumbled on this page while googling for replacing the elements of a range. Would this be a viable workaround, or does it have caveats needed to be considered?

const documentFragment = range.extractContents();

documentFragment.replaceChildren(newNodes);

range.insertNode(documentFragment);Edit: Oh I see this issue is about not triggering connectedCallbacks and disconnectedCallbacks. This wouldn't be a viable workaround.

Some Background

While many claims that the DOM is slow, I've been happily using it for about 20 years without any major performance issue, and in more than a challenging situation where performance matters the most.

However, most of the Web community, in the recent years, switched to this vDOM concept, unsatisfied by most common changes at once that should be reflected on the UI, and while I don't want to spam this proposal with all my libraries that never really needed a vDOM but competed pretty well anyway, I'd like to discuss the fact that I've been trying to use most common/known algorithms to produce the least amount of changes needed from UI A to become UI B.

I've tried the Levenshtein distance one, to realize it was working on a way too big matrix, switching to the famous E.Meyers's O(ND) diffing, to realize that I had to bail out after "50 snakes" or something, because too slow when thousand rows get replaced.

Last, but not least, I've recently discovered that a simplified version of the Hunt Szymanski algorithm works pretty damn well in every common DOM occasion, including the replacing thousand rows with new content.

However, the fact all this has to weight on user-land shoulders, when there is a clear opportunity to have it native, starts becoming an issue, specially because it's super hard to compete with non standard frameworks, including mine, so that I'd like to propose this addiction to the current Range API.

The Proposal

Among many useful methods, a

Rangeinstance can extract, delete, get content, but it's incapable of replacing such content in a single operation.The whole Web is full of fragments able to inject even thousand nodes in a reasonable speed, and at once, but there's no mechanism to ask the browser to replace many nodes with the same ease.

Sure, one could append a comment node after the last boundary of a fragment, delete the fragment content, and

insertBeforethat node, but this is more a hack than a solution, and it's also not optimizable right away, as the two operations are atomic, hence rightly independent.This

.replaceContents(...)method would like to propose, and analyze, the feasibility, and eventual performance benefits, of defining a range boundary, and replace through anArrayof nodes, all the range content at once, expecting some smart diffing provided behind the scene, in a world where diffing algorithms exist since about ever, and are mostly Open Source.The reason it should accept an

Array, instead of a fragment, is that we still miss persistent fragments on the platform, and a DOM node cannot simultaneously exist in two different containers.An

Arraycould contain literally any node that is either live or not on the document, enabling the diffing possibilities between current UI status and the new desired one from the developer.Details

When

range.replaceContents(entry)is invoked:entryis a generic DOM node, handle it as if it was an array with that single[entry]entryis anArray, replace all nodes in the range with those present in theArrayArraythat was already live on the document, and part of the very same container, avoid processing all common tasks such as notifying theMutationObserverof the container, or invokingdisconnectedCallbackand consecutivelyconnectedCallbackfor an operation that was meant to never trigger any of this, as the node was supposed to never leave its container, which is what every HTML/SVG user-land engine is trying to do these days by handArray, throw aTypeErrorImplementation Details

The proposal is kept simple on purpose, because usually optimizations/implementation details are not defined in specs, but my expectations is that browser engines can natively decide the best strategy to adopt in order to substitute the least amount of nodes in the document, so that behind the scene a 1000 rows replacement could result in "just two swapped rows" operation, and be blazing fast at that.

Regardless, the clause on how nodes that where there before should be handled is part of this proposal, because it's the most essential bit to make such proposal useful at all.

If that clause is ignored, or not implemented, nobody will use this proposal because inferior to what most modern frameworks can do already.

Conclusion

I think having such primitive on the Web would potentially be a game changer, and I/myself would instantly switch to such API as soon as implemented.

Best Regards