Thanks for the detailed report! I didn't even know about the existence of these alternative ways of invoking Python ... can you point me to where I can learn more about those? Sounds like a really nice way to avoid having to completely restart Dragon in order to reinitialize Python ...

Since I don't exactly know how those work, I'll instead share some generic information about how my system works in case this sparks any debugging ideas:

- I'm using the (x, y) coordinates from Tobii's API exactly as is. I don't add any offset to that. These get passed directly into the Mouse action with brackets for absolute position. The only exception is that when it is unable to get gaze information, it uses the center of the current window instead (sounds like you might have seen that sometimes when performing selections?).

- In the video you sent me separately, it looked like the problem was that the clicks were happening in the wrong place. I think the first debugging step is to figure out whether Tobii's coordinates are wrong or whether the Mouse action is doing the wrong thing.

- When performing selection, I take a screenshot both at the beginning of the utterance and the end of the utterance (unless the eyes have not moved much). I have noticed that it's natural to move the eyes quickly when performing selection, so you may need to dwell your eyes a bit at both ends of the utterance.

- What is the typical failure mode for the second and third methods you described? Is it like the video you sent me separately, where the cursor does move ... but to the wrong place?
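To help separate "Tobii's coordinates are wrong" from "the Mouse action is doing the wrong thing", here is a minimal sketch of the flow described above: use the gaze point as is, fall back to the window center when there is no gaze data, and print which path was taken. The helper names (`click_target`, `mouse_spec`, `describe`) and the `(left, top, width, height)` window tuple are hypothetical and only for illustration; this is not the actual code in the grammar.

```python
from typing import Optional, Tuple

Point = Tuple[int, int]
Rect = Tuple[int, int, int, int]  # hypothetical layout: (left, top, width, height)


def click_target(gaze: Optional[Point], window_rect: Rect) -> Tuple[Point, str]:
    """Return the screen point to use and a label saying where it came from."""
    if gaze is not None:
        # Gaze coordinates are used exactly as reported, with no offset.
        return gaze, "gaze"
    # No gaze data: fall back to the center of the current window.
    left, top, width, height = window_rect
    return (left + width // 2, top + height // 2), "window-center fallback"


def mouse_spec(point: Point) -> str:
    """Format a point as a Mouse spec string; square brackets mean absolute position."""
    x, y = point
    return "[{}, {}]".format(x, y)


def describe(gaze: Optional[Point], window_rect: Rect) -> str:
    """Print the source and spec so the two failure causes can be told apart."""
    point, source = click_target(gaze, window_rect)
    spec = mouse_spec(point)
    print("source=%s spec=%s" % (source, spec))
    return spec
```

If the printed spec matches where you were looking but the cursor lands elsewhere, the Mouse action is the suspect; if the spec itself is off (or always says "window-center fallback"), the problem is upstream in the gaze data.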

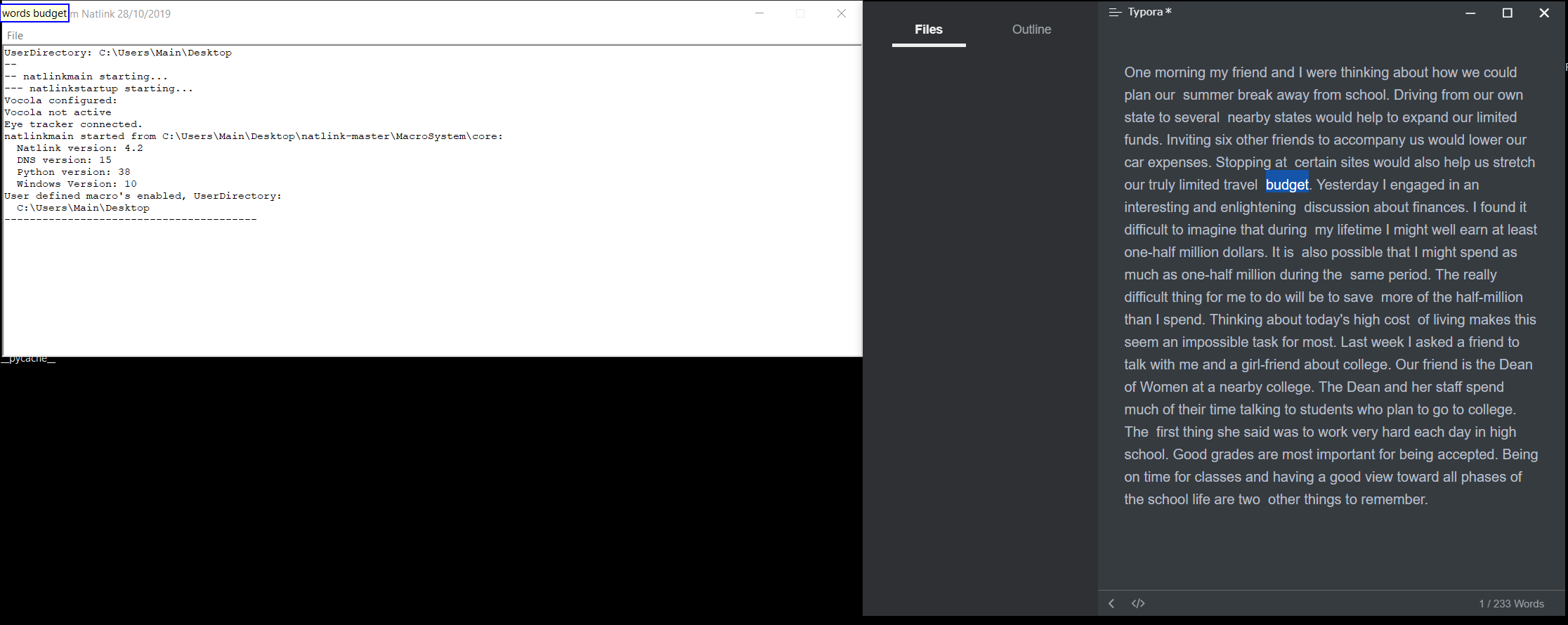

I'm testing this out on Python 3.8.2 32-bit.

I've tried several different ways to run this. The 2nd and 3rd methods do not function reliably. Is there anything further I can do to help troubleshoot?

Running the grammar through natlink with the traditional method works correctly.

1. `_gaze-ocr.py` (in-process method)
2. `python -m dragonfly load --engine natlink gaze-ocr.py --no-recobs-messages` (in-process method)
3. `python -m dragonfly load --engine natlink gaze-ocr.py --no-recobs-messages` (out-of-process method; grammars on its own thread)

Now what's interesting here is that several different behaviors appear.

The OCR seems to be working correctly: it is marked as a success and does indeed have a correct gaze location. However, no text is highlighted. Attached: ocr_data.zip (success).

On occasion, regardless of repeated tests, it fails to create a selection and `Execution failed: SelectTextAction()` is produced. Examining the OCR data, it does not reflect the gaze at the time of recognition (verified through Gaze Trace). In fact, no matter where I look (e.g. the 4 corners of the screen), the coordinates of the gaze snapshot seem to be the same location. This is despite a significant pause after dictation.
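One way to confirm the "same location no matter where I look" symptom could be a small check over a handful of logged gaze snapshots. This is only a sketch: `gaze_looks_stuck` and the list-of-`(x, y)` input are hypothetical, and how the snapshots get collected depends on the setup.

```python
def gaze_looks_stuck(snapshots, tolerance=0):
    """Return True if every snapshot is within `tolerance` pixels of the first one.

    `snapshots` is a list of (x, y) gaze points, e.g. one per test utterance
    taken while looking at different corners of the screen.
    """
    if len(snapshots) < 2:
        # A single sample cannot show whether the value ever changes.
        return False
    x0, y0 = snapshots[0]
    return all(
        abs(x - x0) <= tolerance and abs(y - y0) <= tolerance
        for x, y in snapshots
    )
```

Looking at the four corners in turn and getting `True` would suggest the gaze data is stale rather than the OCR or the selection step being at fault.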