These look like coordinates of five points.

See the comment above the line which you quoted:

standard 5 landmarks for FFHQ faces with 512 x 512

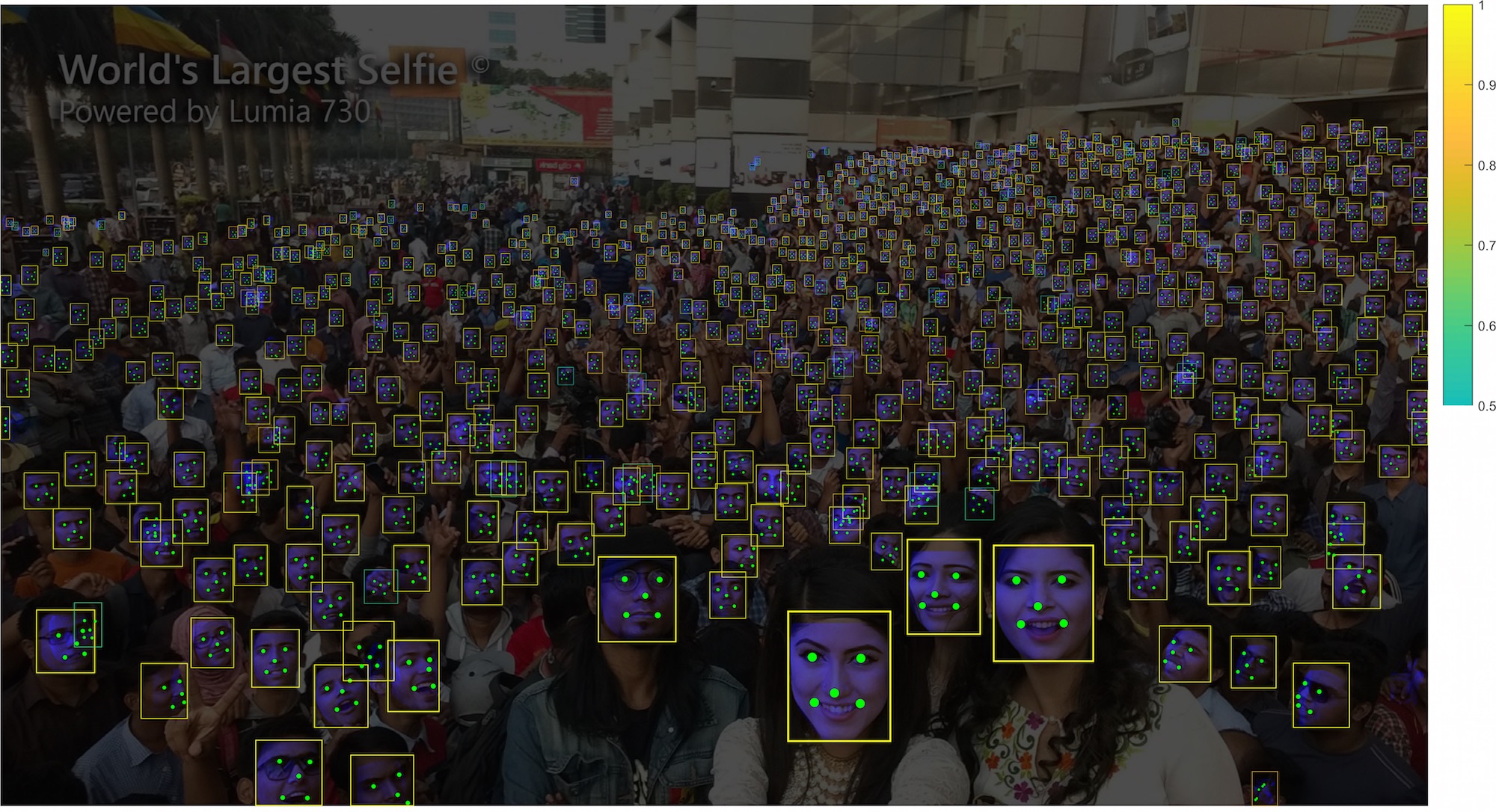

See the 5 colored dots on this image:
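A 5-point template like this is what a face gets aligned to. You estimate the similarity transform (rotation, uniform scale, translation) that maps the landmarks detected in a photo onto the template, then warp the photo with it. Below is a minimal NumPy sketch, assuming an (N, 2) array of detected landmarks in the same point order as the template. `estimate_similarity` is a hypothetical helper implementing Umeyama's least-squares method, not a function from this repository.

```python
import numpy as np


def estimate_similarity(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares similarity transform (Umeyama, 1991) mapping src onto dst.

    src, dst: arrays of shape (N, 2) with corresponding points in the same order.
    Returns a 2x3 matrix M such that dst ~= src @ M[:, :2].T + M[:, 2].
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    n = src.shape[0]

    # Centre both point sets on their means.
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)
    src_c = src - src_mean
    dst_c = dst - dst_mean

    # Cross-covariance between the centred sets, decomposed with an SVD.
    cov = dst_c.T @ src_c / n
    U, S, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.ones(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        d[-1] = -1.0

    R = U @ np.diag(d) @ Vt
    src_var = (src_c ** 2).sum() / n
    scale = (S * d).sum() / src_var
    t = dst_mean - scale * R @ src_mean

    # Pack scale * rotation and translation into an OpenCV-style 2x3 matrix.
    M = np.zeros((2, 3))
    M[:, :2] = scale * R
    M[:, 2] = t
    return M
```

With OpenCV installed, the aligned crop would then be `cv2.warpAffine(image, M, (512, 512))` with `M = estimate_similarity(detected_landmarks, template)`; `cv2.estimateAffinePartial2D` estimates the same kind of 4-degree-of-freedom transform if you prefer a library call. Because the transform only rotates, scales, and translates, nothing in this step is specific to FFHQ photos.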

Thanks, Woctezuma! I see the comment says it's for FFHQ. Can I use these with LFW?

I'm still learning... thank you.

That comment got me worried 🤣

For FFHQ, the dlib library is used, so face alignment is based on 68 landmarks. See: http://dlib.net/face_landmark_detection_ex.cpp.html

This example program shows how to find frontal human faces in an image and estimate their pose. The pose takes the form of 68 landmarks. These are points on the face such as the corners of the mouth, along the eyebrows, on the eyes, and so forth.

The face detector we use is made using the classic Histogram of Oriented Gradients (HOG) feature combined with a linear classifier, an image pyramid, and sliding window detection scheme. The pose estimator was created by using dlib's implementation of the paper: One Millisecond Face Alignment with an Ensemble of Regression Trees by Vahid Kazemi and Josephine Sullivan, CVPR 2014 and was trained on the iBUG 300-W face landmark dataset (see https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/):

C. Sagonas, E. Antonakos, G. Tzimiropoulos, S. Zafeiriou, M. Pantic. 300 faces In-the-wild challenge: Database and results. Image and Vision Computing (IMAVIS), Special Issue on Facial Landmark Localisation "In-The-Wild". 2016.

You can get the trained model file from: http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2.

So the use of 5 landmarks is not specific to FFHQ at all. I think I first saw it in RetinaFace.
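Since dlib returns 68 points while the template has 5, you need a mapping between the two before you can use one with the other. A common reduction, sketched here as an assumption rather than what this library does, averages the six points of each eye and takes the nose tip and the two mouth corners. The indices follow the 0-based iBUG 300-W layout used by `shape_predictor_68_face_landmarks.dat`. The output order (image-left eye, image-right eye, nose tip, image-left mouth corner, image-right mouth corner) is the usual RetinaFace order, but you should check it against the template you align to; `five_from_68` is a hypothetical helper name.

```python
import numpy as np

# 0-based indices in the iBUG 300-W 68-point layout.
IMAGE_LEFT_EYE = list(range(36, 42))   # subject's right eye
IMAGE_RIGHT_EYE = list(range(42, 48))  # subject's left eye
NOSE_TIP = 30
IMAGE_LEFT_MOUTH = 48                  # subject's right mouth corner
IMAGE_RIGHT_MOUTH = 54                 # subject's left mouth corner


def five_from_68(landmarks68: np.ndarray) -> np.ndarray:
    """Reduce a (68, 2) landmark array to 5 points: two eye centres,
    nose tip, and two mouth corners, in image left-to-right order."""
    pts = np.asarray(landmarks68, dtype=np.float64)
    if pts.shape != (68, 2):
        raise ValueError(f"expected shape (68, 2), got {pts.shape}")
    return np.stack([
        pts[IMAGE_LEFT_EYE].mean(axis=0),   # centre of the six eye-contour points
        pts[IMAGE_RIGHT_EYE].mean(axis=0),
        pts[NOSE_TIP],
        pts[IMAGE_LEFT_MOUTH],
        pts[IMAGE_RIGHT_MOUTH],
    ])
```

With dlib, the input array can be built from the predictor's output, e.g. `np.array([(shape.part(i).x, shape.part(i).y) for i in range(68)])`.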

Perfect! Thank you so much for answering all my questions!

How do I calculate these numbers?

I want to calculate them for LFW and other datasets. I'm very new, so I would appreciate it if you could show me how to calculate them. 🍵

Thank you so much for your amazing library!
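The thread does not say how the FFHQ template values were derived. One simple way to build a template of your own for LFW or another dataset, offered here as a hypothetical sketch, is to take 5-point landmarks for many faces (from RetinaFace, or from dlib reduced to 5 points), normalize each face so its eyes sit at fixed positions, average the results, and map the average into the output image. The helper names and the `eye_distance` and `eye_y` defaults are made-up choices that control how much margin surrounds the face; they are not the values behind the FFHQ template.

```python
import numpy as np


def normalize_by_eyes(pts: np.ndarray) -> np.ndarray:
    """Translate, rotate and scale a (5, 2) landmark set so the eye midpoint
    is at the origin, the eyes lie on the x-axis, and the inter-ocular
    distance is 1. Assumes rows 0 and 1 are the image-left and image-right eyes."""
    pts = np.asarray(pts, dtype=np.float64)
    left, right = pts[0], pts[1]
    centre = (left + right) / 2.0
    dx, dy = right - left
    dist = np.hypot(dx, dy)
    # Rotate by minus the eye-line angle so the eyes become horizontal.
    angle = np.arctan2(dy, dx)
    c, s = np.cos(-angle), np.sin(-angle)
    rot = np.array([[c, -s], [s, c]])
    return (pts - centre) @ rot.T / dist


def mean_template(shapes, size=512, eye_distance=0.25, eye_y=0.45):
    """Average eye-normalized landmarks and place them in a size x size image.

    eye_distance: inter-ocular distance as a fraction of size (hypothetical default).
    eye_y: height of the eye midpoint as a fraction of size (hypothetical default).
    """
    normed = np.stack([normalize_by_eyes(p) for p in shapes])
    mean = normed.mean(axis=0)
    # Scale back to pixels and centre the eye midpoint horizontally.
    return mean * (eye_distance * size) + np.array([size / 2.0, eye_y * size])
```

Because every face is normalized by its own eyes before averaging, differences in head size, position, and in-plane rotation across the dataset cancel out; only the relative layout of nose and mouth is averaged. A more thorough alternative is generalized Procrustes analysis, which aligns all five points rather than just the eyes.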