Hi,

I have also observed this issue with different images, and it is difficult to get rid of. What I did was to compute matches only between consecutive pictures. However, this only works if you know the pictures are in the correct order, and if such an order actually exists.
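As a rough illustration, restricting matching to consecutive pictures just means generating a shorter pair list than the exhaustive one. The sketch below is only an assumption about how you might build that list yourself: `consecutive_pairs` and its `window` parameter are hypothetical names, not part of the pipeline.

```python
def consecutive_pairs(n_images, window=1):
    """Return index pairs (i, j) with 0 < j - i <= window.

    Hypothetical helper: assumes images are already sorted in
    acquisition order, so neighbours in the list overlap.
    """
    pairs = []
    for i in range(n_images):
        for step in range(1, window + 1):
            j = i + step
            if j < n_images:
                pairs.append((i, j))
    return pairs


# Only match each picture with the next one.
pairs = consecutive_pairs(4)
```

Raising `window` to 2 or 3 adds a little redundancy while still skipping most unrelated pairs.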

If you cannot use this solution, here are some alternatives that might work:

- find a better line matching algorithm (personally I haven't found one, but there might be newer code available now)

- tune the thresholds inside the LBD matcher (e.g. only accept matches with better descriptor similarity)

- define and tune a threshold after the hybrid RANSAC in the calibration part. A contrario RANSAC gives you an NFA (number of false alarms) at the end, and this value is supposed to tell you whether the calibration worked well (-log NFA should be very high in good cases and very low otherwise)

- validate the calibration hypothesis by reprojecting 3D segment extremities. It is a bit complicated to explain here, but the idea is to compute the 3D position of a segment extremity from its position in one picture (pic. 1) and the position of the matched line in the other picture (pic. 2). From the 3D position you compute the projection onto pic. 2 and its distance to the corresponding segment (segment distance, not line distance). When the distance is low, you validate this segment match; otherwise you invalidate it. You perform this check on all the segment inliers, give a score to the calibration (e.g. #validated matches / #inlier matches), and validate or invalidate the image pair with a threshold on that score. Note that even in good cases this value might be low, but in bad cases it should be really close to 0.
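To make the last check more concrete, here is a minimal sketch of how it could look. It is not the code from the repository, and it rests on several assumptions: coordinates are normalized (pixels already multiplied by the inverse intrinsics), pic. 1 is the reference camera, a point `X` in the frame of pic. 1 maps to `R X + t` in the frame of pic. 2, and a match is validated only when both extremities pass. All function names and the `max_dist` threshold are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def mat_vec(R, v):
    return tuple(dot(row, v) for row in R)


def point_segment_distance(p, a, b):
    """Distance from 2D point p to the segment [a, b] (not the infinite line)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length2 = dx * dx + dy * dy
    if length2 == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    s = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length2
    s = max(0.0, min(1.0, s))  # clamp the foot point to the segment
    return math.hypot(p[0] - (a[0] + s * dx), p[1] - (a[1] + s * dy))


def reproject_extremity(x1, seg2, R, t, eps=1e-9):
    """Intersect the viewing ray of x1 (pic. 1) with the plane back-projected
    from the matched line (pic. 2), then project the 3D point into pic. 2.
    Returns None when the configuration is degenerate or behind a camera."""
    d = (x1[0], x1[1], 1.0)                      # ray direction in pic. 1
    line2 = cross((seg2[0][0], seg2[0][1], 1.0),
                  (seg2[1][0], seg2[1][1], 1.0))  # homogeneous line in pic. 2
    denom = dot(line2, mat_vec(R, d))
    if abs(denom) < eps:                          # ray parallel to the plane
        return None
    depth = -dot(line2, t) / denom
    if depth <= 0.0:                              # behind pic. 1
        return None
    X = tuple(depth * c for c in d)
    Y = tuple(r + ti for r, ti in zip(mat_vec(R, X), t))
    if Y[2] <= eps:                               # behind pic. 2
        return None
    return (Y[0] / Y[2], Y[1] / Y[2])


def validation_score(matches, R, t, max_dist):
    """#validated matches / #inlier matches for one image pair."""
    if not matches:
        return 0.0
    validated = 0
    for seg1, seg2 in matches:
        ok = True
        for x1 in seg1:  # assumption: both extremities must pass
            x2 = reproject_extremity(x1, seg2, R, t)
            if x2 is None or point_segment_distance(x2, seg2[0], seg2[1]) > max_dist:
                ok = False
                break
        validated += ok
    return validated / len(matches)
```

By construction the reprojected point always lies on the matched line, so only the segment distance can reject a match. This is why the check uses the segment distance rather than the line distance.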

I am sorry I cannot give you a good solution, but I think one of the biggest current difficulties of SfM with lines is in fact the line matching...

Best,

Yohann

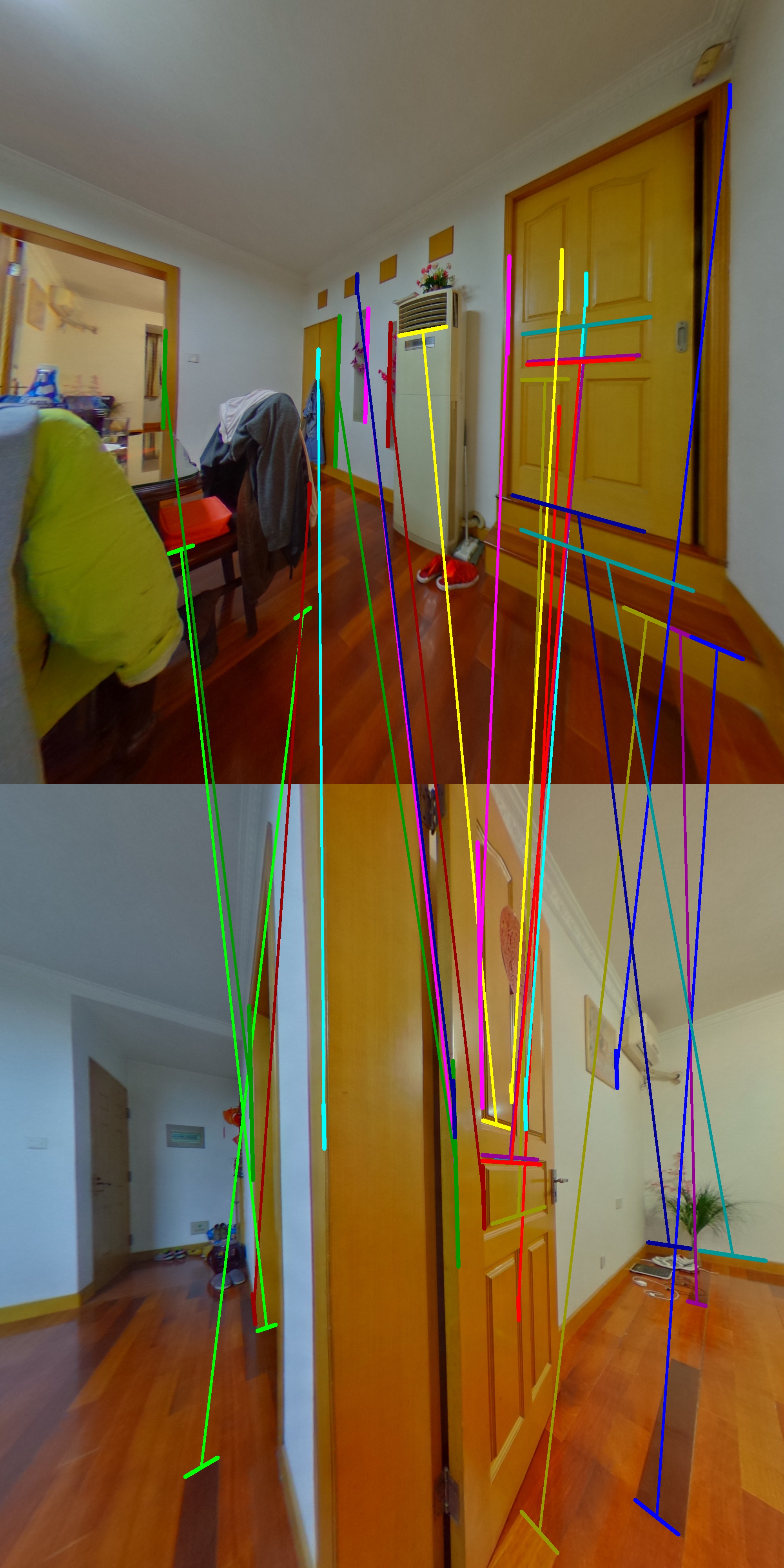

Hi, I've found the problem behind issue #4. The result of line matching is terrible: corresponding line pairs are unexpectedly found between two unrelated images.

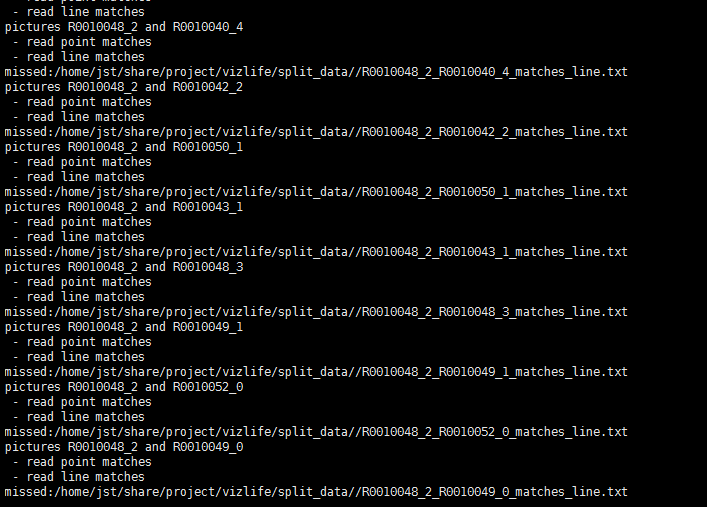

There are some warnings during the line matching stage, and line match files are missing during calibration.

Is the Line Band Descriptor not as robust as the SIFT point descriptor? Or is the threshold of the RANSAC stage in matching not correct?