I'd also add that, in case it's not apparent from the plots, both models are under-predicting, sometimes by as much as 20 years, and the error grows as age increases. I can plot the residuals to show the effect, but I think the plots above already show it clearly. Let me know if sharing more data would help.
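A residual plot like the one offered above can be backed by a per-age-bin summary. This is a minimal sketch, assuming residuals are defined as `predicted - real` so that negative values mean under-prediction. The function name `residuals_by_age_bin` and the 10-year bin width are hypothetical choices for illustration, and the numbers in the usage example are toy values, not results from either model.

```python
from collections import defaultdict
from statistics import mean


def residuals_by_age_bin(real_ages, predicted_ages, bin_width=10):
    """Mean signed residual (predicted - real) per real-age bin.

    Hypothetical helper: negative values mean the model under-predicts
    for people whose real age falls in that bin.
    """
    if len(real_ages) != len(predicted_ages):
        raise ValueError("real_ages and predicted_ages must have the same length")
    bins = defaultdict(list)
    for real, pred in zip(real_ages, predicted_ages):
        # Bin by the lower edge of the real-age interval, e.g. 45 -> 40.
        bin_start = (int(real) // bin_width) * bin_width
        bins[bin_start].append(pred - real)
    # Sort by bin so the trend across age is easy to read.
    return {start: mean(vals) for start, vals in sorted(bins.items())}


# Toy values for illustration only, not model outputs.
real = [15, 25, 45, 70, 75]
pred = [17, 24, 38, 55, 52]
print(residuals_by_age_bin(real, pred))
```

If the bins for older ages show increasingly negative means, that matches the "worse as age increases" pattern described above.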

Hi,

I've been doing some simple analysis of both pre-trained models, validating on the APPA-REAL dataset. Here's what I'm doing:

For each cropped and rotated face image (i.e. the files with the `_face.jpg` suffix):

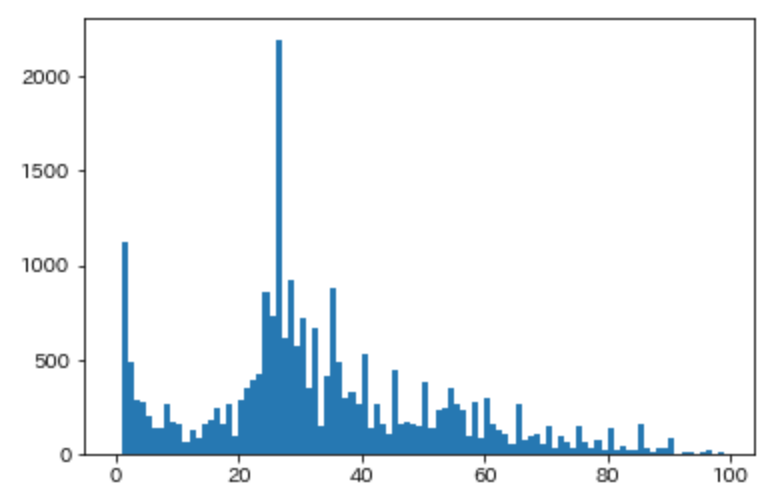
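For anyone trying to reproduce this, here is a minimal sketch of pairing each `_face.jpg` image with its ground truth. It assumes the APPA-REAL release layout: a `gt_avg_*.csv` file with `file_name`, `apparent_age_avg` and `real_age` columns, and each cropped face stored next to its original as `<file_name>_face.jpg`. The function `load_appa_real_pairs` is hypothetical, and the CSV row in the example is made up for illustration.

```python
import csv
import io
import os


def load_appa_real_pairs(csv_file, image_dir):
    """Return (face_image_path, real_age, apparent_age_avg) tuples.

    Assumes an APPA-REAL style ground-truth CSV and that every cropped,
    rotated face image is named '<file_name>_face.jpg'.
    """
    pairs = []
    for row in csv.DictReader(csv_file):
        face_path = os.path.join(image_dir, row["file_name"] + "_face.jpg")
        pairs.append((face_path, float(row["real_age"]), float(row["apparent_age_avg"])))
    return pairs


# Made-up CSV content for illustration only.
sample = io.StringIO(
    "file_name,num_ratings,apparent_age_avg,apparent_age_std,real_age\n"
    "000000.jpg,36,5.0,1.146,4\n"
)
print(load_appa_real_pairs(sample, "appa-real-release/valid"))
```

Keeping both targets in each tuple makes it easy to score the same predictions against `real_age` and against the average apparent age.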

Note that I'm using `real_age` as supplied by the APPA-REAL dataset, not the expected value of the apparent age. In total I've sampled 1978 images, and I see that:

I'm attaching some plots I've made. My goal is to find out whether I'm doing something incorrectly or whether the accuracy is simply a bit off. Would it be best to re-train on another dataset?
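One way to separate "doing something incorrectly" from "accuracy is off" is to report both the mean absolute error (MAE) and the mean signed error (bias) against each target. This is a sketch under the assumption that error is `prediction - target`, so a negative bias indicates systematic under-prediction. The helper `error_summary` is hypothetical and the arrays are toy values, not measurements.

```python
def error_summary(targets, predictions):
    """Return (MAE, mean signed error), with error = prediction - target."""
    if not targets or len(targets) != len(predictions):
        raise ValueError("need equal-length, non-empty sequences")
    errors = [p - t for p, t in zip(predictions, targets)]
    mae = sum(abs(e) for e in errors) / len(errors)
    bias = sum(errors) / len(errors)  # negative -> under-prediction on average
    return mae, bias


# Toy values for illustration only.
real_age = [20, 40, 60]
apparent_age = [22, 38, 55]
predicted = [21, 35, 50]
print("vs real_age:", error_summary(real_age, predicted))
print("vs apparent_age_avg:", error_summary(apparent_age, predicted))
```

A large negative bias with a comparable MAE points to a systematic offset rather than random noise, which can help decide whether re-training is worth it.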

Again, thank you @yu4u for this project; I'm assuming the fault is on my end. I'd love to get your feedback.