You are right: the output should be the concatenation of the last hidden state of the forward LSTM and the first hidden state of the reverse LSTM, otherwise backpropagation will be wrong

Open hwijeen opened 5 years ago

First of all, thanks for your great tutorial on PyTorch! It's a great resource for beginners. I have a question about the way you use the output of a bidirectional model.

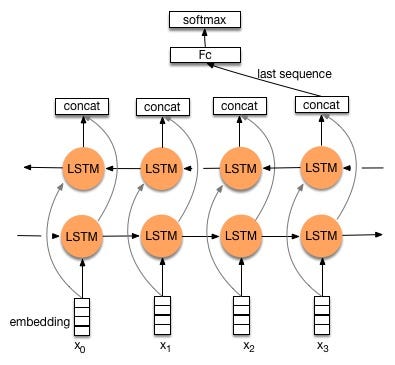

https://github.com/yunjey/pytorch-tutorial/blob/4896cefea1eb86cc96ff363c84767ca94cb559c6/tutorials/02-intermediate/bidirectional_recurrent_neural_network/main.py#L54-L58

In this code snippet, you take the output at the LAST time step for both the forward and the backward LSTM. I think the image illustrates what the code does. Please refer to this for an explanation of why your code corresponds to the image:

> Please note that if we pick the output at the last time step, the reverse RNN will have only seen the last input (x_3 in the picture). It'll hardly provide any predictive power. (source)
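The quoted claim can be checked empirically. The snippet below is a minimal sketch, not the tutorial's code: the sizes, the seed, and the variable names are assumptions chosen for illustration. It changes every input except the last one and checks whether the backward half of the output at the last time step moves.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
hidden_size = 4  # illustrative size, not taken from the tutorial
lstm = nn.LSTM(input_size=3, hidden_size=hidden_size,
               batch_first=True, bidirectional=True)

x = torch.randn(1, 5, 3)                      # (batch, seq_len, input_size)
x_changed = x.clone()
x_changed[:, :-1, :] = torch.randn(1, 4, 3)   # alter every step except the last

with torch.no_grad():
    out, _ = lstm(x)
    out_changed, _ = lstm(x_changed)

# With batch_first=True, out has shape (batch, seq_len, 2 * hidden_size):
# the first hidden_size features come from the forward direction,
# the remaining hidden_size features from the backward direction.
backward_unaffected = torch.allclose(
    out[:, -1, hidden_size:], out_changed[:, -1, hidden_size:], atol=1e-6)
forward_unaffected = torch.allclose(
    out[:, -1, :hidden_size], out_changed[:, -1, :hidden_size], atol=1e-6)

print("backward output at last step unchanged:", backward_unaffected)
print("forward output at last step unchanged:", forward_unaffected)
```

If the backward half stays the same while the forward half changes, the backward direction at the last time step depends only on the last input, which is what the quote describes.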

Is this what you intended? I think a more information-rich way of using the output of a bidirectional LSTM is to concatenate the last hidden state of the forward LSTM (at the final time step) with the first hidden state of the reverse LSTM (at time step 0), so that both hidden states will have seen the entire input.
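A minimal sketch of the proposed concatenation, assuming a single-layer `nn.LSTM` with `batch_first=True`; the sizes and variable names here are illustrative and not taken from the tutorial. It also compares the result with the final hidden states that PyTorch returns in `h_n`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, seq_len, input_size, hidden_size = 2, 5, 3, 4  # illustrative sizes
lstm = nn.LSTM(input_size, hidden_size, num_layers=1,
               batch_first=True, bidirectional=True)
x = torch.randn(batch_size, seq_len, input_size)

with torch.no_grad():
    out, (h_n, c_n) = lstm(x)   # out: (batch, seq_len, 2 * hidden_size)

# Forward direction: its final state sits at the last time step.
forward_final = out[:, -1, :hidden_size]
# Backward direction: its final state sits at the first time step.
backward_final = out[:, 0, hidden_size:]
features = torch.cat([forward_final, backward_final], dim=1)

# For the last layer, h_n[-2] is the forward final state and
# h_n[-1] is the backward final state.
features_from_h_n = torch.cat([h_n[-2], h_n[-1]], dim=1)
matches_h_n = torch.allclose(features, features_from_h_n, atol=1e-6)

print(features.shape, matches_h_n)
```

In a classifier, `features` would then be passed to the final linear layer in place of `out[:, -1, :]`. Note that with padded batches the forward final state is not at index `-1` for shorter sequences, so reading from `h_n` (with packed sequences) is usually the safer option.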

Thanks in advance!