Just created the issue to share the code

Closed: carlosedubarreto closed this issue 2 months ago.


Hello, when I load the mocap in Blender, there is no positional movement; the character just stands still in place. How can I solve this problem? Thank you.

Hello @bboyalong01, can you explain it in more detail? I couldn't understand the problem. If you have a video showing it, that would be even better.

https://github.com/user-attachments/assets/7430b19c-afbc-4916-9627-024f27cd4b15

https://github.com/user-attachments/assets/1b0ddda9-285a-4a4e-b10f-d1225f73caf5

Hello, here are 2 videos, one of which is rendered in Blender. The 3D coordinates of the pelvis in Blender never change. @carlosedubarreto
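One way to narrow down a "standing still" result is to check whether the exported data contains any root translation at all. The sketch below assumes the result has already been converted to NumPy and that the global root translation is an (N, 3) array stored under something like `smpl_params_global["transl"]`; treat that key name and layout as assumptions and adjust them to whatever your file actually contains.

```python
import numpy as np

def root_travel(transl, tol=1e-4):
    """Return (max distance from frame 0, moves) for a root translation track.

    transl: array-like of shape (N, 3), one root position per frame.
    The (N, 3) layout is an assumption; check your own exported file.
    """
    t = np.asarray(transl, dtype=float)
    if t.ndim != 2 or t.shape[1] != 3:
        raise ValueError(f"expected shape (N, 3), got {t.shape}")
    # Distance of each frame's root position from the first frame's position
    dist = np.linalg.norm(t - t[0], axis=1)
    max_dist = float(dist.max())
    return max_dist, max_dist > tol

# Hypothetical usage with a NumPy-only pickle; the key names are assumptions:
# import pickle
# with open("hmr4d_results.pkl", "rb") as f:
#     data = pickle.load(f)
# print(root_travel(data["smpl_params_global"]["transl"]))
```

If the distance is essentially zero, the data itself has no translation and the problem is before Blender; if it is clearly non-zero, the import step is the more likely suspect.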

Hello @bboyalong01, I didn't notice that problem in my tests.

I don't know what to suggest.

You can view my tests here https://bsky.app/profile/carlosedubarreto.bsky.social/post/3l4dqyd3sfm2u

Okay, thank you very much

Wow, thanks so much for your work @carlosedubarreto, I've been struggling with this for a few days! This issue should be kept open for others who are looking for a solution.

Hi @carlosedubarreto, there is a pyramid at the coordinate origin after running the script. Do you know what's happening here?

Here is my pkl file if you'd like to try it: hmr4d_results.pt.zip

Hello, my problem is that after running the script, there is a pyramid at the coordinate origin, and the person performs the actions at this pyramid-like origin without moving forward, backward, left, or right.

@carlosedubarreto @kexul

Hello @kexul, that's the character's bone; if I remember correctly, it's the root bone.

You can hide it in many ways. For example, click the eye icon in the Outliner (top right corner) for the object called Armature, and it should be hidden.

It is needed if you want to retarget the animation to another character; you need all the pyramids (bones) for that.

The bones won't be visible if you render the result, either.

If you look at my examples, posted in an answer above, you'll see that some of my videos show the pyramid and others don't. Don't worry about that.

@bboyalong01 I think I can imagine what is happening.

Did you replace the demo.py with the one I provided?

My guess is that if you added the commands to it manually instead, something might be wrong and it is not getting the translation data correctly.

But it's a hard guess, because I imagine that if you did it wrong, the script would not work at all.

To be sure, try replacing the demo file with the one I sent in this issue. That's my best guess.


Thanks for your detailed reply! It's good to know. Unfortunately I'm not able to view your tests; it seems Bluesky is blocked in my region.


Oh, sorry about that. Sadly my internet provider is down, so I can't send all the videos here, but I'll try to send one.

https://github.com/user-attachments/assets/3c725bad-330e-4c48-9f55-6be864e7c258


Thank you! ♥️♥️♥️

I used the demo.py file you provided, and later I also tested with the PKL file you provided. I'm not at my computer right now, so I'll check again whether it runs normally with the movement data. In your videos I can see movement, for example in the walking video, but in my test the character stands still at the origin with no movement. @carlosedubarreto

Oh man, sorry about that. But I don't think I'll be able to help with that; I have no further idea of what could be happening on your PC.

I used this command:

```
python tools/demo/demo.py --video=docs/example_video/tennis.mp4 -s
```

You are using this command too, right? I'll go back tonight and test again to find out why. @carlosedubarreto

@bboyalong01, yeah that's the one I'm using.


Okay, thank you very much


Hello, I found the reason: the FBX model I downloaded was the Unity version, and it should have been the Maya one. A beginner's mistake; thanks a lot! By the way, can this drive an SMPL-X model? That one has finger bones.

@bboyalong01 thanks for the info, I didn't even know that the Unity one would work at all.

About SMPL-X, I think it can't generate the finger data.


Hi @carlosedubarreto, I noticed that the palm is slightly different between the rendered video and the result imported into Blender. The one in the video is in a natural rest pose, while the imported one is fully stretched. Is there a quick fix? Thanks!

I think the difference between the Unity and Maya FBX models is the root bone; the other bones are the same.

I've made some changes to make it easier to run multiple people.

Unpacking this zip file in the GVHMR root folder should replace these two files:

tools\demo\demo.py

and

hmr4d\utils\preproc\tracker.py

The change also shows the number of people in the footage when you run the script.

This update also re-runs the processing even if you already ran the code. The default script skips processing if it finds a result in the output folder. To get multiple people, everything needs to run again; otherwise you'll get the same person every time, even when selecting another person.

I hope it makes sense ☺️
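The skip-if-a-result-exists behavior described above boils down to a simple guard. This is a hypothetical sketch, not the actual GVHMR code: the function name `should_process` and the `force` flag are illustrative only.

```python
from pathlib import Path

def should_process(result_path, force=False):
    """Decide whether to run the (slow) processing step again.

    force=False mimics the default behavior described above: skip when a
    result file already exists. force=True mimics the modified script:
    always re-run, so a different person can be selected.
    """
    return force or not Path(result_path).exists()
```

With `force=False`, a second run over the same output folder keeps returning the cached person, which is exactly why selecting another person seemed to have no effect.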

To select which person's animation you get, change the person setting in

tools\demo\demo.py

I left person=0 as the default, and when I want another person I change the number accordingly.

Important: Python indexing starts at 0, so for the first person use person=0, for the second person use person=1, and so on.
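A small sketch of how a 0-based `person` index can pick one tracked person and report how many were found. The layout here is hypothetical: `tracks` is assumed to map a track id to its per-frame detections, and ordering people by track length (longest first) is an assumption for illustration, not necessarily how tracker.py orders them.

```python
def pick_person(tracks, person=0):
    """Select one tracked person by 0-based index (hypothetical helper).

    tracks: dict mapping a track id to its list of per-frame detections.
    """
    # Longest tracks first (assumed ordering, for illustration only)
    ordered = sorted(tracks, key=lambda tid: len(tracks[tid]), reverse=True)
    print(f"People found in the footage: {len(ordered)}")
    if not 0 <= person < len(ordered):
        raise IndexError(
            f"person={person}, but only {len(ordered)} people were found "
            f"(valid values: 0 to {len(ordered) - 1})"
        )
    return ordered[person]
```

For example, with three people in the footage, `person=2` selects the third one and `person=3` raises an error instead of silently returning someone else.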

Hello @kexul, sorry I didn't notice your message; the last 2 weeks have been very crazy here.

About the hand difference between the video and the one imported into Blender:

I think the video rendered by the script uses SMPL-X, while the data loaded in Blender uses SMPL.

Actually, this shouldn't make much difference, since GVHMR doesn't seem to track the fingers.

My suggestion would be to take the animation in Blender, retarget it to another character that has finger bones (since SMPL doesn't), and animate the fingers the way you want.

I think it's better this way also because the bones of SMPL and SMPL-X characters are a pain to tweak manually, since the bone orientation is awkward. There is a way to import them into Blender so that the bones look better, but if you do that, importing the motion from GVHMR doesn't work correctly.

So that's why I suggest retargeting anyway.


Thank you! ❤️ ❤️ ❤️


Make sure you download the SMPL 1.0.2 version, which is the Maya body model.

Is it possible to somehow get bone names similar to Mixamo's? Or UE's?

It's very painful to retarget manually.

Hello @haruhix, I made an add-on called CEB 4D Humans that not only runs the code from the 4D Humans research but also does other things, like retargeting to the most popular rigs, such as Mixamo.

You don't have to install the VENV part to use the retargeting tool; just install the add-on. You can get it here:

https://github.com/carlosedubarreto/CEB_4d_Humans/releases/tag/1.12

In this short video I show how to retarget to a Mixamo rig using the add-on: https://youtu.be/VNo6e0PMRuA?t=94
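Renaming alone doesn't make two rigs compatible (rest poses and bone orientations still differ), which is why a retargeting tool is the better route. For the bone-name part of the question, though, a name map might look like the sketch below. The SMPL bone names (`m_avg_Pelvis` and so on) and the Mixamo names are assumptions; check them against your own armatures before relying on this.

```python
import re

# Assumed SMPL joint names (after the "m_avg_"/"f_avg_" prefix) mapped to
# standard Mixamo bone names. L_Hand/R_Hand and the root bone have no direct
# Mixamo counterpart, so they are intentionally left out.
SMPL_TO_MIXAMO = {
    "Pelvis": "Hips",
    "L_Hip": "LeftUpLeg", "R_Hip": "RightUpLeg",
    "Spine1": "Spine",
    "L_Knee": "LeftLeg", "R_Knee": "RightLeg",
    "Spine2": "Spine1",
    "L_Ankle": "LeftFoot", "R_Ankle": "RightFoot",
    "Spine3": "Spine2",
    "L_Foot": "LeftToeBase", "R_Foot": "RightToeBase",
    "Neck": "Neck",
    "L_Collar": "LeftShoulder", "R_Collar": "RightShoulder",
    "Head": "Head",
    "L_Shoulder": "LeftArm", "R_Shoulder": "RightArm",
    "L_Elbow": "LeftForeArm", "R_Elbow": "RightForeArm",
    "L_Wrist": "LeftHand", "R_Wrist": "RightHand",
}

def smpl_to_mixamo(bone_name, prefix="mixamorig:"):
    """Return the Mixamo-style name for an SMPL bone, or None if there is no match."""
    joint = re.sub(r"^[mf]_avg_", "", bone_name)  # drop the assumed model prefix
    target = SMPL_TO_MIXAMO.get(joint)
    return prefix + target if target else None

# Hypothetical use inside Blender (not run here; armature_obj is illustrative):
# for bone in armature_obj.data.bones:
#     new_name = smpl_to_mixamo(bone.name)
#     if new_name:
#         bone.name = new_name
```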

@carlosedubarreto Excuse me, Master carlosedubarreto. How do I run it on my own videos and export the result in pkl format? When running GVHMR on Windows 11, I get an error saying there's no pytorch3d. I've been trying to install pytorch3d for several days and still can't get it installed.

Hello @fxgame0003, lol, I'm not a master ☺️

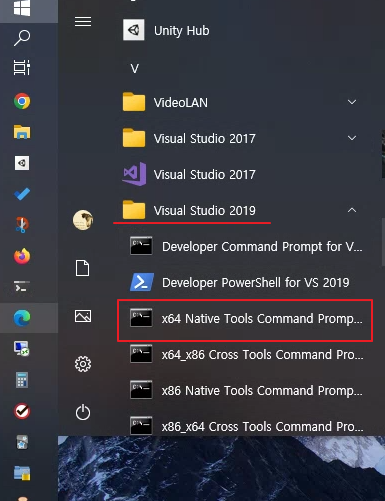

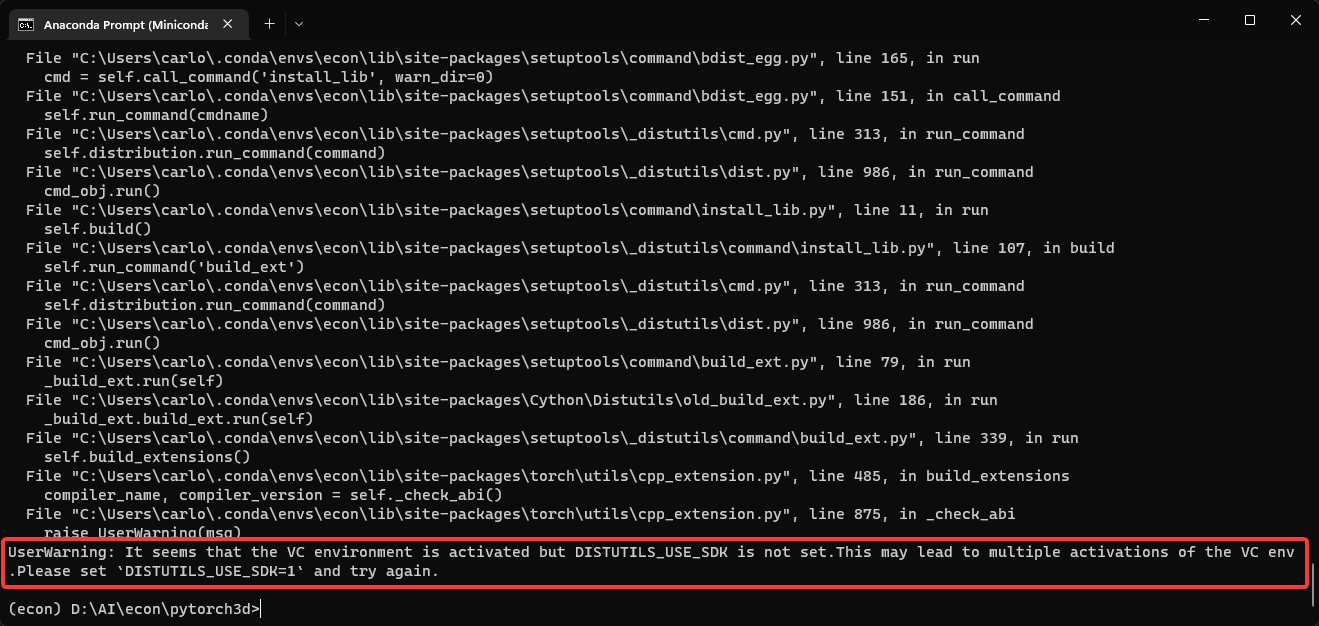

About pytorch3d, you can try the instructions below. Just make sure your GVHMR conda env is active. The images show "ECON" because I wrote these instructions for the ECON project, but I used almost the same steps to make it work with GVHMR.

Oh, make sure you installed CUDA 12.1 and added its bin folder to your system PATH; it will probably look like this

Also make sure that after installing CUDA 12.1, CUDA_PATH points to the 12.1 version; probably like this

In any case, to be sure everything works, restart your PC after installing CUDA 12.1 (not essential, but I think it makes things easier).
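Before compiling anything, it can help to confirm that the CUDA settings above are actually visible to your environment. This is a small sketch; it assumes the default Windows install layout, where the CUDA folder ends in the version (for example `...\CUDA\v12.1`), so adjust it if you installed CUDA elsewhere.

```python
import os

def check_cuda_env(expected="12.1", environ=None, sep=os.pathsep):
    """Report whether CUDA_PATH and PATH point at the expected CUDA version.

    Assumes the default layout where the CUDA folder name ends in v<version>.
    """
    env = os.environ if environ is None else environ
    tag = "v" + expected
    cuda_path = env.get("CUDA_PATH", "")
    path_entries = env.get("PATH", "").split(sep)
    # Is there a PATH entry like ...\CUDA\v12.1\bin ?
    bin_on_path = any(
        tag in entry and entry.rstrip("\\/").lower().endswith("bin")
        for entry in path_entries
    )
    return {
        "CUDA_PATH": cuda_path,
        "CUDA_PATH_matches": cuda_path.rstrip("\\/").endswith(tag),
        "bin_on_PATH": bin_on_path,
    }

if __name__ == "__main__":
    print(check_cuda_env())
```

Run it with `python` inside the activated conda env; if either check is False, fix the environment variables (and restart) before trying to build pytorch3d.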

Install these:

```
conda install -c fvcore -c iopath -c conda-forge fvcore iopath
```

I think you don't need to load the VS Native Tools command prompt

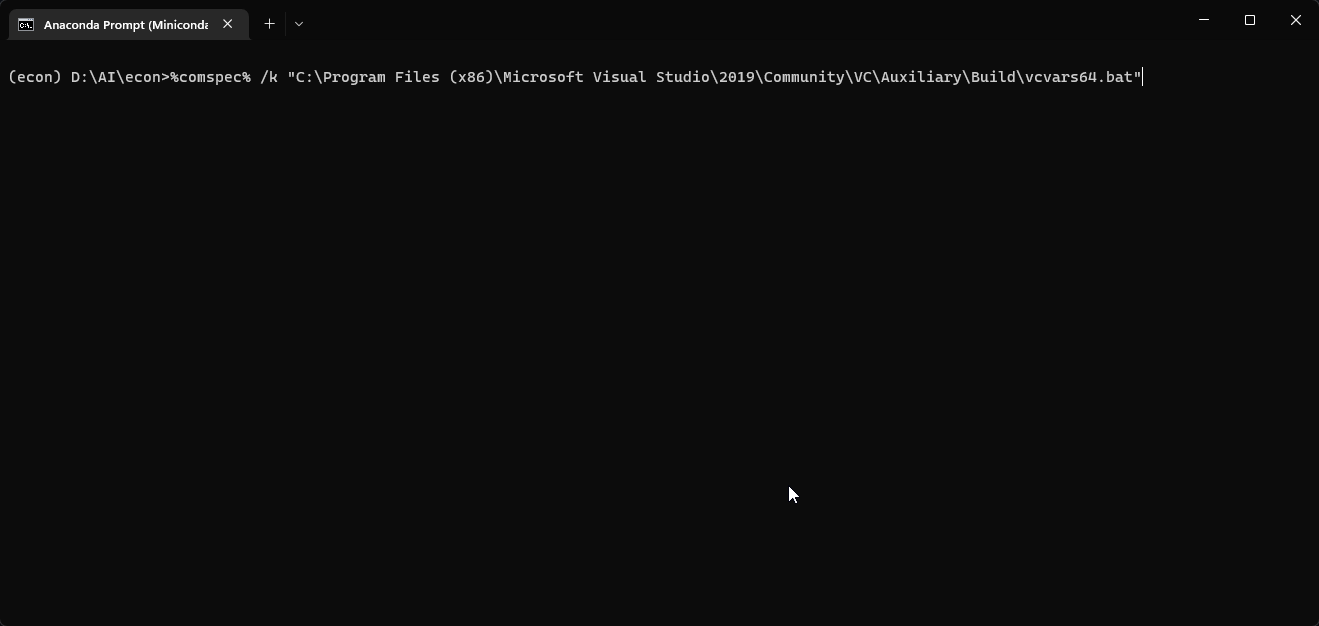

But in case you need to, it must be loaded after you activate the conda env (the ECON env in my notes, your GVHMR env here) with the previous packages already installed. To do that, run this command in the CMD prompt:

```
%comspec% /k "C:\Program Files (x86)\Microsoft Visual Studio\2019\Community\VC\Auxiliary\Build\vcvars64.bat"
```

Before you press Enter, it will look something like this

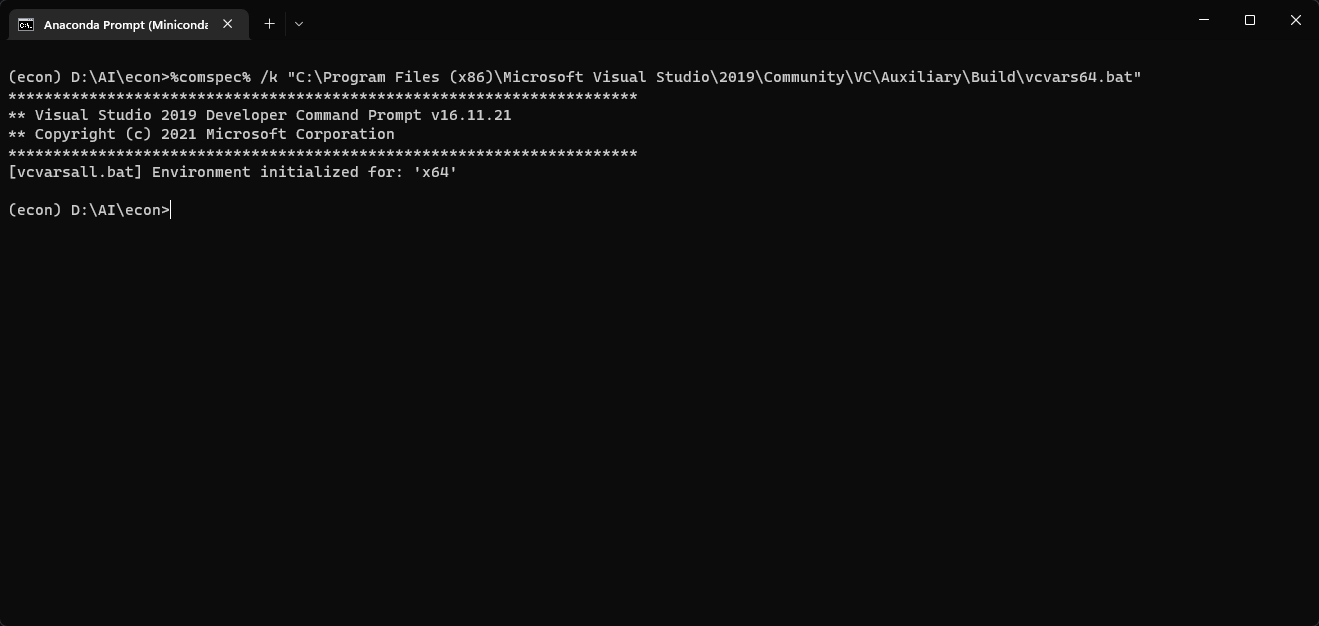

and after pressing enter, it might look like this

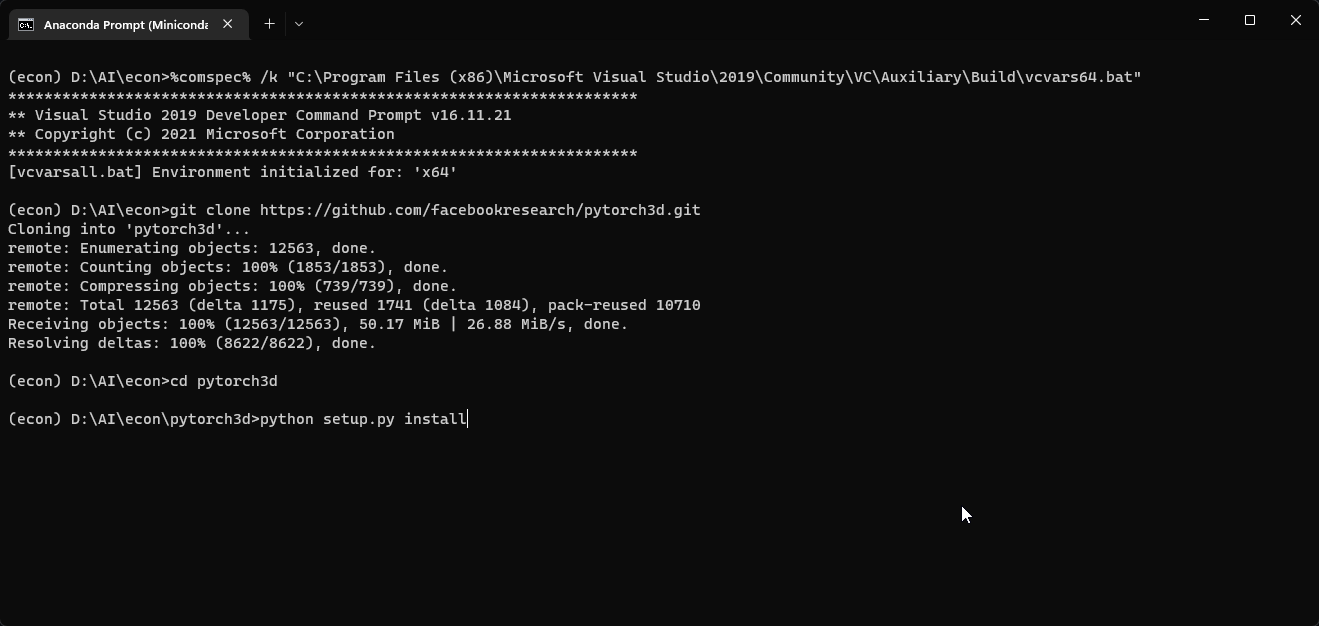

Then get the pytorch3d package using

```
git clone https://github.com/facebookresearch/pytorch3d.git
```

Enter the pytorch3d folder and run the install:

```
cd pytorch3d
python setup.py install
```

You will probably receive this error

You just have to run this command:

```
set DISTUTILS_USE_SDK=1
```

and run the install again:

```
python setup.py install
```

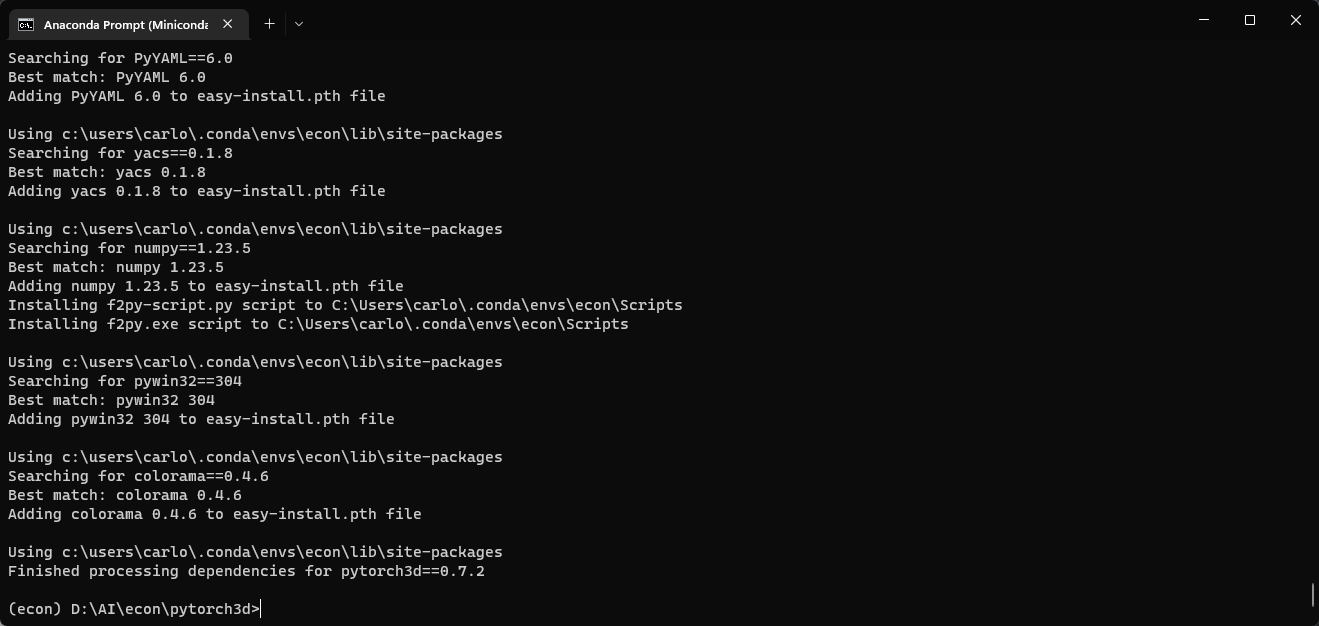

It will take A LOT OF TIME to finish and it will show something like this
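Once the build finishes, a quick way to see whether the packages from these steps are visible in the active env is a lookup like the sketch below. It only checks that each package can be found, without importing it; a compiled CUDA extension can still fail on a real `import pytorch3d`, so that import remains the final test.

```python
import importlib.util

def check_packages(names=("torch", "pytorch3d", "fvcore", "iopath")):
    """Map each package name to True if it can be found in the active environment.

    Only looks the package up; it does not import it, so a broken compiled
    extension can still fail later on a real import.
    """
    return {name: importlib.util.find_spec(name) is not None for name in names}

if __name__ == "__main__":
    for name, found in check_packages().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```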

Hello everyone, I'm a beginner and want to use my own video: run the code to generate the motion and import it into Blender. Could you share the whole process with me? Thank you.

@SoCool668, you can find more info in another issue, like this one: https://github.com/zju3dv/GVHMR/issues/23

We've been talking a lot on the CEB Studios Discord channel about the installation, but I think you can get the main part from the issue I linked above.

Massimina did a great job compiling the steps.

@carlosedubarreto I installed CUDA 12.1, set the environment variable location, and set DISTUTILS_USE_SDK=1, but it still reported an error. I'm a beginner and don't know much. Even though I couldn't install it successfully, I still appreciate your response.

@fxgame0003 wow, you did it very well.

Did you also install these?

```
conda install -c fvcore -c iopath -c conda-forge fvcore iopath
```

Sadly, compilation errors are terrible to troubleshoot. I know that when you have everything that's needed it works, but I can't help much with understanding the error that showed up.

Take a look at this Stack Overflow answer; I remember it helped me a lot to make it work: https://stackoverflow.com/a/74913303/9473295

I also noticed that you installed Visual Studio 2022, while I was able to install it using Visual Studio 2019. Honestly, I don't think that's the problem, but maybe it is. If nothing else works, I would suggest trying Visual Studio 2019.

@carlosedubarreto I spent a lot of time and finally completed the installation of PyTorch3D. The issue was indeed what you mentioned: it was caused by Visual Studio 2022, and I had to use Visual Studio 2019. Since I couldn't download Visual Studio 2019 from the official website, I had to find another way to get a large offline package (several tens of gigabytes) for local installation. My internet connection is unstable and frequently disconnects, but I eventually managed to install PyTorch3D. However, now I'm encountering another error when running GVHMR, and I'm not sure what the cause is.

@fxgame0003 There is a person called Mana Light who was having this same Extractor problem, and he/she wrote some steps that might help you:

https://discord.com/channels/992698789569773609/992698790412812349/1287445600345788479

Also, sorry: he/she said that he/she was not able to install it using VS2022 either.

Here is a screenshot of the text I linked, in case you cannot get into the Discord

And I would not suggest applying the fix crisper suggested, because as far as I know Mana Light didn't do it, and it worked for him/her.

If you can, get into the Discord: there was a huge discussion about installing GVHMR there, and many people were able to install and use it, even with these problems.

BTW, to get in the discord, use this link https://discord.gg/BRuu43Nv2J

@carlosedubarreto My GVHMR on the computer is still showing some errors, but it's usable. I also installed PyTorch3D using VS2019. Unfortunately, my network doesn't allow Discord. Thank you so much for your multiple responses. You are a very kind and responsible person, and your answers have been very helpful to me. I truly appreciate it.

@fxgame0003 my pleasure. I'm happy that you were able to make it work in some way, that is what matters 🦾🎉

Thank you for making the whole thing work for Blender! Do you think it's possible to somehow extract the camera coordinates from the inference script? Such that the camera movement can be recreated in Blender? I haven't had a detailed look at the script, so I thought I'd first ask you before even trying to accomplish something like that.

Hello @philgatt, I do believe it's possible, but I think for that you'll need the DPVO part (I didn't install it). The script I shared only deals with the SMPL character data, not the camera.

So I'm not really able to help much with the camera, sorry.

@carlosedubarreto Thank you, I'm gonna look into it!

@carlosedubarreto Just a quick follow up on this: I see you had some problems with DPVO in Windows (https://github.com/princeton-vl/DPVO/issues/46) Did you ever get it to work by any chance?

@philgatt , I was able to install DPVO when trying WHAM. I took some notes, I'll paste them below

The sad part is that some of the instructions are in Portuguese (my native language). I'm in a rush now and wasn't able to translate them, but hopefully they'll make sense. When I have more time I can translate them for you.

BTW, important note, those instructions were for WHAM, so you'll have to adapt the versions of CUDA and python packages accordingly

```
cd third-party/DPVO
wget https://gitlab.com/libeigen/eigen/-/archive/3.4.0/eigen-3.4.0.zip
unzip eigen-3.4.0.zip -d thirdparty && rm -rf eigen-3.4.0.zip
conda install pytorch-scatter=2.0.9 -c rusty1s
conda install cudatoolkit-dev=11.3.1 -c conda-forge
```

```
cd third-party/DPVO
```

Put eigen-3.4.0 in the thirdparty folder. Use CUDA 11.7 (test with 11.3 first to be sure).
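On Windows, `wget` and `unzip` are usually not available in CMD. A standard-library Python equivalent of the Eigen download step might look like the sketch below; the folder layout (`third-party/DPVO/thirdparty`) is taken from the commands above and may need adjusting for your checkout, and `fetch_eigen`/`extract_zip` are illustrative names.

```python
import urllib.request
import zipfile
from pathlib import Path

EIGEN_URL = "https://gitlab.com/libeigen/eigen/-/archive/3.4.0/eigen-3.4.0.zip"

def extract_zip(zip_path, dest):
    """Extract zip_path into dest (like `unzip file.zip -d dest`); return top-level names."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)
        return sorted({name.split("/")[0] for name in zf.namelist()})

def fetch_eigen(dpvo_dir="third-party/DPVO", url=EIGEN_URL):
    """Download Eigen, unpack it into <dpvo_dir>/thirdparty, then delete the zip."""
    dpvo_dir = Path(dpvo_dir)
    zip_path = dpvo_dir / "eigen-3.4.0.zip"
    urllib.request.urlretrieve(url, zip_path)  # like wget
    try:
        return extract_zip(zip_path, dpvo_dir / "thirdparty")
    finally:
        zip_path.unlink()  # like rm -rf eigen-3.4.0.zip

# Usage, from the project root (needs internet access):
# print(fetch_eigen())  # expected to list the eigen-3.4.0 folder
```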

```
conda install pytorch-scatter=2.0.9 -c rusty1s
```

Run the line below only if you haven't done the pytorch installation yet, because it was already done as part of that.

```
%comspec% /k "C:\Program Files (x86)\Microsoft Visual Studio\2019\Community\VC\Auxiliary\Build\vcvars64.bat"
```

```
set DISTUTILS_USE_SDK=1
pip install .
pip install torch_scatter
```

Try this solution:

In short, you need to replace long with int64_t in the file dpvo/altcorr/correlation_kernel.cu

I replaced it in these files:

correlation_kernel.cu

ba.cpp

ba_cuda.cu

In lietorch_gpu.cu, remove all the "const" keywords.
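If you prefer not to edit those files by hand, the two source changes can be scripted. This is a hedged sketch of blind text replacement, so review the result before compiling: the regexes are an assumption about what needs changing (they only touch a standalone `long`, leaving `unsigned long` and `long long` alone), and `patch_file` keeps a `.bak` copy of each original.

```python
import re
from pathlib import Path

# A standalone `long`, not part of `unsigned long` or `long long`
PLAIN_LONG = re.compile(r"(?<!unsigned )(?<!long )\blong\b(?!\s+long\b)")

def long_to_int64(source):
    """Replace the standalone C type `long` with `int64_t`."""
    return PLAIN_LONG.sub("int64_t", source)

def strip_const(source):
    """Remove every `const` qualifier (`constexpr` is not affected)."""
    return re.sub(r"\bconst\b\s*", "", source)

def patch_file(path, transform):
    """Apply transform to a file in place, keeping a .bak copy of the original."""
    path = Path(path)
    original = path.read_text(encoding="utf-8")
    path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
    path.write_text(transform(original), encoding="utf-8")

# Usage (adjust the paths to where these files live in your DPVO checkout):
# patch_file("dpvo/altcorr/correlation_kernel.cu", long_to_int64)
# patch_file("path/to/ba.cpp", long_to_int64)
# patch_file("path/to/ba_cuda.cu", long_to_int64)
# patch_file("path/to/lietorch_gpu.cu", strip_const)
```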


Thank you for your answer. Is there the URL of the CEB Studios Discord channel you mentioned? I will go there to learn more. Thank you.

@SoCool668 sure, the link for the discord is: https://discord.gg/BRuu43Nv2J

Hello. I was trying to make it work in blender and I was able to do it. Wanted to share the code with you all.

To make it work I had to add some lines of code to the demo.py file in the tools\demo folder, or you can unzip the file below and replace it: demo.zip

I had to add these lines so I could open the file in Blender without problems; otherwise I would have to install PyTorch in Blender, which would be a huge problem.
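The exact lines added to demo.py are not reproduced here. As a hedged sketch of the idea (saving the results as plain NumPy data so Blender can read them without PyTorch), something like the following would work, assuming the results are a nested dict of tensors like the contents of `hmr4d_results.pt`. `to_plain` and `save_for_blender` are hypothetical names, and tensors are detected by duck typing so this snippet does not import torch itself.

```python
import pickle

def to_plain(obj):
    """Recursively convert tensors inside dicts/lists/tuples to NumPy arrays.

    Anything with .detach/.cpu/.numpy (e.g. a torch.Tensor) is converted;
    other values are returned unchanged.
    """
    if isinstance(obj, dict):
        return {k: to_plain(v) for k, v in obj.items()}
    if isinstance(obj, list):
        return [to_plain(v) for v in obj]
    if isinstance(obj, tuple):
        return tuple(to_plain(v) for v in obj)
    if all(hasattr(obj, attr) for attr in ("detach", "cpu", "numpy")):
        return obj.detach().cpu().numpy()
    return obj

def save_for_blender(results, path):
    """Write a pickle that loads without PyTorch (NumPy is still required)."""
    with open(path, "wb") as f:
        pickle.dump(to_plain(results), f)
```

Blender's bundled Python ships with NumPy, so a pickle like this can be opened there with the standard `pickle` module.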

And to load in blender, you can use this blend file (you'll have to unzip it to get the blend file)

import_anim_GVHMR_b4.2_v2.blend.zip

To make it work, you need the SMPL FBX file, which you can download from the SMPL site (it's the SMPL_maya.zip file). Set the path to the SMPL FBX file and to the GVHMR result.pkl, as you can see in the example below.
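For anyone curious about what an importer like this has to do internally: SMPL stores each joint's rotation as an axis-angle vector (3 values per joint), while Blender pose bones are often keyed with quaternions in (w, x, y, z) order. This is not the code from the blend file, just a sketch of that conversion step; applying the result to bones still depends on each bone's rest orientation, which is the awkward part mentioned earlier in this thread.

```python
import math

def axis_angle_to_quat(rotvec):
    """Convert one axis-angle rotation (x, y, z) to a (w, x, y, z) quaternion.

    The vector's direction is the rotation axis and its length is the angle in radians.
    """
    x, y, z = rotvec
    angle = math.sqrt(x * x + y * y + z * z)
    if angle < 1e-8:
        return (1.0, 0.0, 0.0, 0.0)  # (near) zero rotation
    s = math.sin(angle / 2.0) / angle  # scales the axis to unit length times sin(angle/2)
    return (math.cos(angle / 2.0), x * s, y * s, z * s)

def pose_to_quats(pose):
    """Split a flat pose vector (3 values per joint) into one quaternion per joint."""
    if len(pose) % 3:
        raise ValueError("pose length must be a multiple of 3")
    return [axis_angle_to_quat(pose[i:i + 3]) for i in range(0, len(pose), 3)]
```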

Run the script and you should get the result. Have fun! PS: this blend file was made in Blender 4.2.