> [!NOTE]
> clickhouse-jdbc-bridge contains experimental code and is no longer supported. It may contain reliability issues and security vulnerabilities. Use it at your own risk.

ClickHouse JDBC Bridge

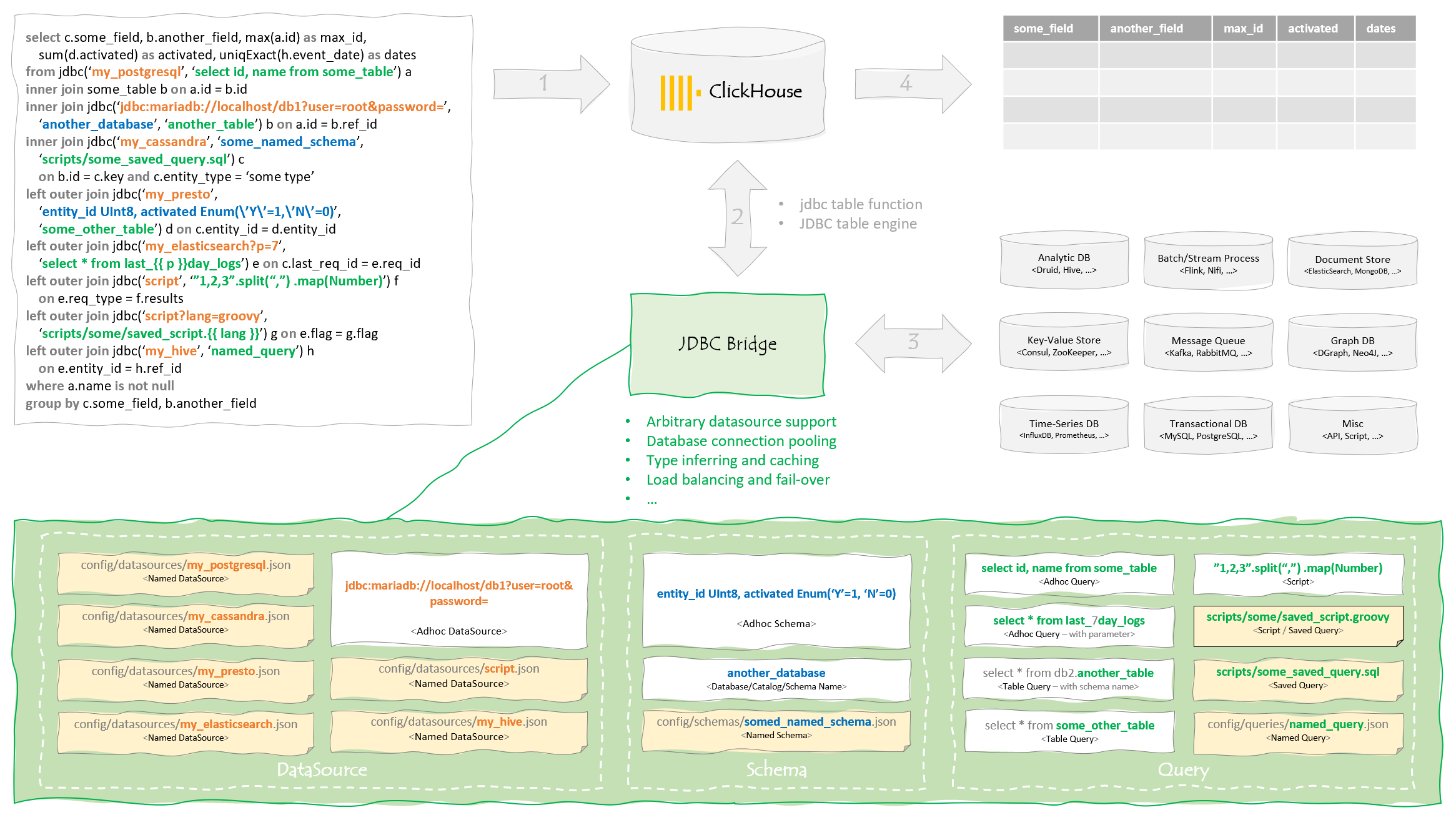

JDBC bridge for ClickHouse®. It acts as a stateless proxy that passes queries from ClickHouse to external datasources. With this extension, you can run distributed queries on ClickHouse across multiple datasources in real time, which simplifies building data pipelines for data warehousing, monitoring, integrity checks, and so on.

Overview

Known Issues / Limitations

- Connection issues like `jdbc-bridge is not running` or `connect timed out`: see the Performance section and this issue for details
- Complex data types like Array and Tuple are currently not supported; they're treated as String
- Pushdown is not supported, and a query may be executed twice because of type inference
- Mutation is not fully supported; only insertion works, and only in simple cases
- Scripting is experimental

Quick Start


Docker Compose

```bash
git clone https://github.com/ClickHouse/clickhouse-jdbc-bridge.git
cd clickhouse-jdbc-bridge/misc/quick-start
docker-compose up -d
...
docker-compose ps

Name                          Command                         State   Ports
--------------------------------------------------------------------------------------------------
quick-start_ch-server_1       /entrypoint.sh                  Up      8123/tcp, 9000/tcp, 9009/tcp
quick-start_db-mariadb10_1    docker-entrypoint.sh mysqld     Up      3306/tcp
quick-start_db-mysql5_1       docker-entrypoint.sh mysqld     Up      3306/tcp, 33060/tcp
quick-start_db-mysql8_1       docker-entrypoint.sh mysqld     Up      3306/tcp, 33060/tcp
quick-start_db-postgres13_1   docker-entrypoint.sh postgres   Up      5432/tcp
quick-start_jdbc-bridge_1     /sbin/my_init                   Up      9019/tcp

# issue the query below, and you'll see "ch-server 1" returned
docker-compose run ch-server clickhouse-client --query="select * from jdbc('self?datasource_column', 'select 1')"
```

Docker CLI

It's easier to get started using the all-in-one Docker image:

```bash
# build all-in-one docker image
git clone https://github.com/ClickHouse/clickhouse-jdbc-bridge.git
cd clickhouse-jdbc-bridge
docker build -t my/clickhouse-all-in-one -f all-in-one.Dockerfile .

# start container in background
docker run --rm -d --name ch-and-jdbc-bridge my/clickhouse-all-in-one

# enter container to add datasource and issue query
docker exec -it ch-and-jdbc-bridge bash

cp /etc/clickhouse-jdbc-bridge/config/datasources/datasource.json.example \
    /etc/clickhouse-jdbc-bridge/config/datasources/ch-server.json

# you're supposed to see "ch-server 1" returned from ClickHouse
clickhouse-client --query="select * from jdbc('self?datasource_column', 'select 1')"
```

Alternatively, if you prefer the hard way ;)

```bash
# create a network for ClickHouse and JDBC bridge, so that they can communicate with each other
docker network create ch-net --attachable

# start the two containers
docker run --rm -d --network ch-net --name jdbc-bridge --hostname jdbc-bridge clickhouse/jdbc-bridge
docker run --rm -d --network ch-net --name ch-server --hostname ch-server \
    --entrypoint /bin/bash clickhouse/clickhouse-server -c \
    "echo '<clickhouse><jdbc_bridge><host>jdbc-bridge</host><port>9019</port></jdbc_bridge></clickhouse>' \
        > /etc/clickhouse-server/config.d/jdbc_bridge_config.xml && /entrypoint.sh"

# add named datasource and query
docker exec -it jdbc-bridge cp /app/config/datasources/datasource.json.example \
    /app/config/datasources/ch-server.json
docker exec -it jdbc-bridge cp /app/config/queries/query.json.example \
    /app/config/queries/show-query-logs.json

# issue the query below, and you'll see "ch-server 1" returned from ClickHouse
docker exec -it ch-server clickhouse-client \
    --query="select * from jdbc('self?datasource_column', 'select 1')"
```

Debian/RPM Package

Besides Docker, you can download and install a released Debian/RPM package on an existing Linux system.

Debian/Ubuntu

```bash
apt update && apt install -y procps wget
wget https://github.com/ClickHouse/clickhouse-jdbc-bridge/releases/download/v2.1.0/clickhouse-jdbc-bridge_2.1.0-1_all.deb
apt install --no-install-recommends -f ./clickhouse-jdbc-bridge_2.1.0-1_all.deb
clickhouse-jdbc-bridge
```

CentOS/RHEL

```bash
yum install -y wget
wget https://github.com/ClickHouse/clickhouse-jdbc-bridge/releases/download/v2.1.0/clickhouse-jdbc-bridge-2.1.0-1.noarch.rpm
yum localinstall -y clickhouse-jdbc-bridge-2.1.0-1.noarch.rpm
clickhouse-jdbc-bridge
```

Java CLI

```bash
wget https://github.com/ClickHouse/clickhouse-jdbc-bridge/releases/download/v2.1.0/clickhouse-jdbc-bridge-2.1.0-shaded.jar
# add named datasource
wget -P config/datasources https://raw.githubusercontent.com/ClickHouse/clickhouse-jdbc-bridge/master/misc/quick-start/jdbc-bridge/config/datasources/ch-server.json
# start JDBC bridge, then issue the query below in ClickHouse for testing
# select * from jdbc('ch-server', 'select 1')
java -jar clickhouse-jdbc-bridge-2.1.0-shaded.jar
```

Usage

In most cases, you'll use the `jdbc` table function to query external datasources:

```sql
select * from jdbc('<datasource>', '<schema>', '<query>')
```

`schema` is optional, but the other arguments are mandatory. Be aware that the query is written in the native dialect of the given datasource. For example, `select * from some_table limit 10` may work in MariaDB but not in Microsoft SQL Server, which does not understand `limit`.

Assuming you started a test environment using docker-compose, refer to the examples below to get familiar with JDBC bridge.


Data Source

```sql
-- show datasources and usage
select * from jdbc('', 'show datasources')

-- access named datasource
select * from jdbc('ch-server', 'select 1')

-- ad hoc datasource is NOT recommended for security reasons
select * from jdbc('jdbc:clickhouse://localhost:8123/system?compress=false&ssl=false&user=default', 'select 1')
```

Schema

By default, any ad hoc query passed to JDBC bridge is executed twice: the first run infers the result types, and the second retrieves the results. Although metadata is cached (for up to 5 minutes by default), executing the same query twice can still be a problem. That's where schema comes into play.
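To make the caching behavior concrete, here is a minimal Java sketch of a time-bounded metadata cache. It assumes, purely for illustration, that inferred column types are keyed by the query text and expire after a fixed TTL; `MetadataCache` and its methods are hypothetical and are not the bridge's actual implementation.

```java
import java.time.Duration;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Hypothetical sketch, not the bridge's actual cache: remembers the column types
// inferred for a query and re-runs inference only after the entry has expired.
final class MetadataCache {
    private record Entry(List<String> columns, long expiresAtMillis) {}

    private final Map<String, Entry> entries = new HashMap<>();
    private final long ttlMillis;
    private final LongSupplier clockMillis; // injectable clock, e.g. System::currentTimeMillis
    private int inferenceRuns = 0;

    MetadataCache(Duration ttl, LongSupplier clockMillis) {
        this.ttlMillis = ttl.toMillis();
        this.clockMillis = clockMillis;
    }

    // Returns cached column types, or calls inferTypes (the extra "first run") on a miss or expiry.
    List<String> columnsFor(String query, Function<String, List<String>> inferTypes) {
        long now = clockMillis.getAsLong();
        Entry entry = entries.get(query);
        if (entry == null || now >= entry.expiresAtMillis()) {
            inferenceRuns++;
            entry = new Entry(inferTypes.apply(query), now + ttlMillis);
            entries.put(query, entry);
        }
        return entry.columns();
    }

    int inferenceRuns() {
        return inferenceRuns;
    }
}
```

With `new MetadataCache(Duration.ofMinutes(5), System::currentTimeMillis)`, repeated calls for the same query within five minutes trigger only one inference run. The map is not synchronized, so a real server would need a concurrent structure. Supplying a schema, as in the examples below, avoids the inference run altogether.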

```sql
-- inline schema
select * from jdbc('ch-server', 'num UInt8, str String', 'select 1 as num, ''2'' as str')
select * from jdbc('ch-server', 'num Nullable(Decimal(10,0)), str Nullable(FixedString(1)) DEFAULT ''x''', 'select 1 as num, ''2'' as str')

-- named schema
select * from jdbc('ch-server', 'query-log', 'show-query-logs')
```
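An inline schema is a comma-separated column list, but types such as `Decimal(10,0)` contain commas of their own. The sketch below shows one way to split such a list on top-level commas only, by tracking parenthesis depth and single-quoted default values. `SchemaSplitter` is a hypothetical helper; how JDBC bridge actually parses inline schemas is not described here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits an inline schema on top-level commas only, so that
// "Decimal(10,0)" and quoted defaults such as 'a,b' stay intact.
final class SchemaSplitter {
    static List<String> splitColumns(String schema) {
        List<String> columns = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        int depth = 0;
        boolean inQuote = false;
        for (int i = 0; i < schema.length(); i++) {
            char c = schema.charAt(i);
            if (c == '\'') {
                inQuote = !inQuote; // a doubled '' toggles twice, leaving the state unchanged
            } else if (!inQuote && c == '(') {
                depth++;
            } else if (!inQuote && c == ')') {
                depth--;
            } else if (!inQuote && depth == 0 && c == ',') {
                columns.add(current.toString().trim()); // end of one column definition
                current.setLength(0);
                continue;
            }
            current.append(c);
        }
        if (!current.toString().isBlank()) {
            columns.add(current.toString().trim());
        }
        return columns;
    }
}
```

For example, `splitColumns("num Nullable(Decimal(10,0)), str String")` yields two column definitions rather than three.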

Query

```sql
-- adhoc query
select * from jdbc('ch-server', 'system', 'select * from query_log where user != ''default''')
select * from jdbc('ch-server', 'select * from query_log where user != ''default''')
select * from jdbc('ch-server', 'select * from system.query_log where user != ''default''')

-- table query
select * from jdbc('ch-server', 'system', 'query_log')
select * from jdbc('ch-server', 'query_log')

-- saved query
select * from jdbc('ch-server', 'scripts/show-query-logs.sql')

-- named query
select * from jdbc('ch-server', 'show-query-logs')

-- scripting
select * from jdbc('script', '[1,2,3]')
select * from jdbc('script', 'js', '[1,2,3]')
select * from jdbc('script', 'scripts/one-two-three.js')
```

Query Parameters

```sql
select * from jdbc('ch-server?datasource_column&max_rows=1&fetch_size=1&one=1&two=2', 'select {{one}} union all select {{ two }}')
```

Query result:

```
┌─datasource─┬─1─┐
│ ch-server  │ 1 │
└────────────┴───┘
```
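In the example above, `{{one}}` and `{{ two }}` are filled from the `one` and `two` query parameters, with whitespace inside the braces tolerated. A minimal Java sketch of that kind of placeholder substitution follows. `QueryTemplate` is hypothetical and assumes plain text replacement without quoting or escaping, which the bridge may handle differently.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of {{name}} substitution; not the bridge's actual code.
final class QueryTemplate {
    // Matches {{name}} with optional whitespace inside the braces, e.g. {{ two }}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{\\{\\s*([A-Za-z0-9_]+)\\s*\\}\\}");

    static String render(String query, Map<String, String> params) {
        Matcher m = PLACEHOLDER.matcher(query);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            // Unknown placeholders are left untouched.
            String value = params.getOrDefault(m.group(1), m.group());
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Here `render("select {{one}} union all select {{ two }}", Map.of("one", "1", "two", "2"))` returns `select 1 union all select 2`. Because values are spliced in verbatim, anything derived from untrusted input should be validated first.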

JDBC Table

```sql
drop table if exists system.test;
create table system.test (
    a String,
    b UInt8
) engine=JDBC('ch-server', '', 'select user as a, is_initial_query as b from system.processes');
```

JDBC Dictionary

```sql
drop dictionary if exists system.dict_test;
create dictionary system.dict_test
(
    b UInt64 DEFAULT 0,
    a String
) primary key b
SOURCE(CLICKHOUSE(HOST 'localhost' PORT 9000 USER 'default' TABLE 'test' DB 'system'))
LIFETIME(MIN 82800 MAX 86400)
LAYOUT(FLAT());
```

Mutation

```sql
-- use query parameter
select * from jdbc('ch-server?mutation', 'drop table if exists system.test_table');
select * from jdbc('ch-server?mutation', 'create table system.test_table(a String, b UInt8) engine=Memory()');
select * from jdbc('ch-server?mutation', 'insert into system.test_table values(''a'', 1)');
select * from jdbc('ch-server?mutation', 'truncate table system.test_table');

-- use JDBC table engine
drop table if exists system.test_table;
create table system.test_table (
    a String,
    b UInt8
) engine=Memory();

drop table if exists system.jdbc_table;
create table system.jdbc_table (
    a String,
    b UInt8
) engine=JDBC('ch-server?batch_size=1000', 'system', 'test_table');

insert into system.jdbc_table(a, b) values('a', 1);

select * from system.test_table;
```

Query result:

```
┌─a─┬─b─┐
│ a │ 1 │
└───┴───┘
```

Monitoring

You can use Prometheus to monitor metrics exposed by JDBC bridge.

```bash
curl -v http://jdbc-bridge:9019/metrics
```

Configuration


JDBC Driver

By default, all JDBC drivers should be placed under the `drivers` directory. You can override that by customizing `driverUrls` in the datasource configuration file. For example:

```json
{
    "testdb": {
        "driverUrls": [
            "drivers/mariadb",
            "D:\\drivers\\mariadb",
            "/mnt/d/drivers/mariadb",
            "https://repo1.maven.org/maven2/org/mariadb/jdbc/mariadb-java-client/2.7.4/mariadb-java-client-2.7.4.jar"
        ],
        "driverClassName": "org.mariadb.jdbc.Driver",
        ...
    }
}
```

Named Data Source

By default, named datasources are defined in JSON configuration files under the `config/datasources` directory. You may check examples at misc/quick-start/jdbc-bridge/config/datasources. If you use a modern editor like VSCode, you may find it helpful to use the JSON schema for validation and smart autocomplete.

Saved Query

Saved queries and scripts are under the `scripts` directory by default, for example: show-query-logs.sql.

Named Query

Similar to named datasources, named queries are JSON configuration files under `config/queries`. You may refer to examples at misc/quick-start/jdbc-bridge/config/queries.

Logging

You can customize logging configuration in logging.properties.


Vert.x

If you're familiar with Vert.x, you can customize its configuration by changing `config/httpd.json` and `config/vertx.json`.

Query Parameters

All supported query parameters can be found here.

`datasource_column=true` can be simplified as `datasource_column`, for example:

```sql
select * from jdbc('ch-server?datasource_column=true', 'select 1')
select * from jdbc('ch-server?datasource_column', 'select 1')
```
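The shorthand means that a parameter given without a value is read as `true`. Below is a minimal Java sketch that splits a datasource reference such as `ch-server?datasource_column&max_rows=1` into a name and a parameter map under that rule. `DataSourceUri` is hypothetical: it performs no URL decoding and makes no attempt to distinguish ad hoc JDBC URLs, which carry their own `?` parameters.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical parser for "name?k1=v1&flag&k2=v2"; not the bridge's actual code.
final class DataSourceUri {
    final String name;
    final Map<String, String> params = new LinkedHashMap<>();

    private DataSourceUri(String name) {
        this.name = name;
    }

    static DataSourceUri parse(String uri) {
        int q = uri.indexOf('?');
        DataSourceUri result = new DataSourceUri(q < 0 ? uri : uri.substring(0, q));
        if (q >= 0) {
            for (String pair : uri.substring(q + 1).split("&")) {
                if (pair.isEmpty()) {
                    continue; // tolerate "&&" or a trailing "&"
                }
                int eq = pair.indexOf('=');
                if (eq < 0) {
                    result.params.put(pair, "true"); // bare flag, e.g. datasource_column
                } else {
                    result.params.put(pair.substring(0, eq), pair.substring(eq + 1));
                }
            }
        }
        return result;
    }
}
```

With this rule, `parse("ch-server?datasource_column=true")` and `parse("ch-server?datasource_column")` produce the same parameter map.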

Timeout

A couple of timeout settings you should be aware of:

- datasource timeout, for example: `max_statement_time` in MariaDB
- JDBC driver timeout, for example: `connectTimeout` and `socketTimeout` in MariaDB Connector/J
- JDBC bridge timeout, for example: `queryTimeout` in `config/server.json`, and `maxWorkerExecuteTime` in `config/vertx.json`
- ClickHouse timeout, like `max_execution_time`, `keep_alive_timeout`, and `http_receive_timeout`
- client timeout, for example: `socketTimeout` in the ClickHouse JDBC driver

Migration


Upgrade to 2.x

2.x is a complete rewrite and is not fully compatible with older versions. You'll have to redefine your datasources and update your queries accordingly.

Build

You can use Maven to build ClickHouse JDBC bridge, for example:

```bash
git clone https://github.com/ClickHouse/clickhouse-jdbc-bridge.git
cd clickhouse-jdbc-bridge

# compile and run unit tests
mvn -Prelease verify

# release shaded jar, rpm and debian packages
mvn -Prelease package
```

In order to build Docker images:

```bash
git clone https://github.com/ClickHouse/clickhouse-jdbc-bridge.git
cd clickhouse-jdbc-bridge
docker build -t my/clickhouse-jdbc-bridge .

# or if you want to build the all-in-one image
docker build --build-arg revision=20.9.3 -f all-in-one.Dockerfile -t my/clickhouse-all-in-one .
```

Develop

JDBC bridge is extensible. You may take ConfigDataSource and ScriptDataSource as examples to create your own extension.

An extension for JDBC bridge is basically a Java class with 3 optional parts:


Extension Name

By default, the extension class name is treated as the name of the extension. However, you can declare a static member in your extension class to override that, for instance:

```java
public static final String EXTENSION_NAME = "myExtension";
```
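The naming rule can be expressed with plain Java reflection. This sketch, built around a hypothetical `ExtensionNames` helper and two sample classes, returns `EXTENSION_NAME` when a public static `String` field with that name exists and otherwise falls back to the class name. It assumes the fully qualified name (`Class.getName()`), which may differ from the form JDBC bridge actually uses.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;

// Hypothetical resolver mirroring the documented rule; not the bridge's loader code.
final class ExtensionNames {
    static String nameOf(Class<?> extensionClass) {
        try {
            Field field = extensionClass.getField("EXTENSION_NAME"); // public fields only
            if (Modifier.isStatic(field.getModifiers()) && field.getType() == String.class) {
                Object value = field.get(null); // static field: no instance needed
                if (value != null) {
                    return (String) value;
                }
            }
        } catch (NoSuchFieldException | IllegalAccessException e) {
            // No usable override: fall back to the default below.
        }
        return extensionClass.getName();
    }
}

// Sample extensions for illustration.
final class RenamedExtension {
    public static final String EXTENSION_NAME = "myExtension";
}

final class PlainExtension {}
```

Here `nameOf(RenamedExtension.class)` returns `myExtension`, while `nameOf(PlainExtension.class)` returns the class name.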

Initialize Method

The initialize method is called exactly once, when your extension is loaded, for example:

```java
public static void initialize(ExtensionManager manager) { ... }
```
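"Exactly once" can be enforced by remembering which classes have already been loaded. In the hypothetical sketch below, `ExtensionInitializer` calls a public static one-argument `initialize` method the first time a class is seen and ignores later attempts. The sample's `Object` parameter stands in for the bridge's `ExtensionManager`, which is not reproduced here.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical once-only initializer; not the bridge's actual loader.
final class ExtensionInitializer {
    private final Set<Class<?>> loaded = ConcurrentHashMap.newKeySet();

    // Returns true on the first load of a class, false if it was already loaded.
    boolean load(Class<?> type, Object manager) {
        if (!loaded.add(type)) {
            return false; // already initialized: do not call initialize again
        }
        for (Method m : type.getMethods()) {
            if (m.getName().equals("initialize")
                    && Modifier.isStatic(m.getModifiers())
                    && m.getParameterCount() == 1
                    && m.getParameterTypes()[0].isInstance(manager)) {
                try {
                    m.invoke(null, manager);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("initialize failed for " + type.getName(), e);
                }
                break;
            }
        }
        return true;
    }
}

// Sample extension; Object stands in for the bridge's ExtensionManager.
final class CountingExtension {
    static int calls = 0;

    public static void initialize(Object manager) {
        calls++;
    }
}
```

Calling `load(CountingExtension.class, manager)` twice invokes `initialize` only on the first call.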

Instantiation Method

To create an instance of your extension, you should generally define a static method like the one below, so that JDBC bridge knows how to do so (besides walking through all possible constructors):

```java
public static MyExtension newInstance(Object... args) { ... }
```
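A loader can prefer such a factory method and only then fall back to constructors. The sketch below is a simplified, hypothetical `ExtensionFactory`: it invokes a static `newInstance(Object...)` if one exists and otherwise uses a public no-argument constructor, instead of walking through every constructor as described above.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

// Hypothetical, simplified instantiation logic; not the bridge's actual loader.
final class ExtensionFactory {
    static Object create(Class<?> type, Object... args) {
        try {
            try {
                Method factory = type.getMethod("newInstance", Object[].class);
                if (Modifier.isStatic(factory.getModifiers())) {
                    // Cast to Object so the whole array is passed as the single varargs parameter.
                    return factory.invoke(null, (Object) args);
                }
            } catch (NoSuchMethodException noFactory) {
                // No factory method: fall through to the constructor.
            }
            return type.getConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + type.getName(), e);
        }
    }
}

// Sample extensions for illustration.
final class FactoryExtension {
    final int argCount;

    private FactoryExtension(int argCount) {
        this.argCount = argCount;
    }

    public static FactoryExtension newInstance(Object... args) {
        return new FactoryExtension(args.length);
    }
}

final class ConstructorExtension {
    public ConstructorExtension() {}
}
```

A real loader would also need to match constructor parameters against the supplied arguments; this sketch leaves that out for brevity.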

Assuming your extension class is `com.mycompany.MyExtension`, you can load it into JDBC bridge as follows:

- put your extension package (e.g. `my-extension.jar`) and required dependencies under the `extensions` directory
- update `server.json` by adding your extension, for example:

  ```json
  ...
  "extensions": [
      ...
      {
          "class": "com.mycompany.MyExtension"
      }
  ]
  ...
  ```

Note: the order of extensions matters. The first `NamedDataSource` extension will be set as the default for all named datasources.
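Because order matters, choosing the default comes down to taking the first configured class of the right type. A hypothetical sketch follows; `BaseDataSource` and the sample classes are stand-ins for `NamedDataSource` and real extensions, not the bridge's types.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical: pick the first class, in configuration order, that is a subtype of `base`.
final class DefaultExtensionPicker {
    static <T> Optional<Class<? extends T>> firstOfType(List<Class<?>> ordered, Class<T> base) {
        for (Class<?> candidate : ordered) {
            if (base.isAssignableFrom(candidate)) {
                return Optional.of(candidate.asSubclass(base));
            }
        }
        return Optional.empty();
    }
}

// Stand-ins for illustration: BaseDataSource plays the role of NamedDataSource.
abstract class BaseDataSource {}

final class ConfigSource extends BaseDataSource {}

final class ScriptSource extends BaseDataSource {}

final class UnrelatedExtension {}
```

With the order `UnrelatedExtension, ScriptSource, ConfigSource`, `ScriptSource` becomes the default; swapping the last two entries would make `ConfigSource` the default instead.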

Performance

Below is a rough performance comparison to help you understand the overhead caused by JDBC bridge, as well as its stability. MariaDB, ClickHouse, and JDBC bridge run on separate KVMs. ApacheBench (ab) runs on another KVM to simulate 20 concurrent users issuing the same query 100,000 times after warm-up. Please refer to this to set up the test environment and run the tests yourself.

| Test Case | Time Spent (s) | Throughput (req/s) | Failed Requests | Min (ms) | Mean (ms) | Median (ms) | Max (ms) |
|---|---|---|---|---|---|---|---|
| clickhouse_ping | 801.367 | 124.79 | 0 | 1 | 160 | 4 | 1,075 |
| jdbc-bridge_ping | 804.017 | 124.38 | 0 | 1 | 161 | 10 | 3,066 |
| clickhouse_url(clickhouse) | 801.448 | 124.77 | 3 | 3 | 160 | 8 | 1,077 |
| clickhouse_url(jdbc-bridge) | 811.299 | 123.26 | 446 | 3 | 162 | 10 | 3,066 |
| clickhouse_constant-query | 797.775 | 125.35 | 0 | 1 | 159 | 4 | 1,077 |
| clickhouse_constant-query(mysql) | 1,598.426 | 62.56 | 0 | 7 | 320 | 18 | 2,049 |
| clickhouse_constant-query(remote) | 802.212 | 124.66 | 0 | 2 | 160 | 8 | 3,073 |
| clickhouse_constant-query(url) | 801.686 | 124.74 | 0 | 3 | 160 | 11 | 1,123 |
| clickhouse_constant-query(jdbc) | 925.087 | 108.10 | 5,813 | 14 | 185 | 75 | 4,091 |
| clickhouse(patched)_constant-query(jdbc) | 833.892 | 119.92 | 1,577 | 10 | 167 | 51 | 3,109 |
| clickhouse(patched)_constant-query(jdbc-dual) | 846.403 | 118.15 | 3,021 | 8 | 169 | 50 | 3,054 |
| clickhouse_10k-rows-query | 854.886 | 116.97 | 0 | 12 | 171 | 99 | 1,208 |
| clickhouse_10k-rows-query(mysql) | 1,657.425 | 60.33 | 0 | 28 | 331 | 123 | 2,228 |
| clickhouse_10k-rows-query(remote) | 854.610 | 117.01 | 0 | 12 | 171 | 99 | 1,201 |
| clickhouse_10k-rows-query(url) | 853.292 | 117.19 | 5 | 23 | 171 | 105 | 2,026 |
| clickhouse_10k-rows-query(jdbc) | 1,483.565 | 67.41 | 11,588 | 66 | 297 | 206 | 2,051 |
| clickhouse(patched)_10k-rows-query(jdbc) | 1,186.422 | 84.29 | 6,632 | 61 | 237 | 184 | 2,021 |
| clickhouse(patched)_10k-rows-query(jdbc-dual) | 1,080.676 | 92.53 | 4,195 | 65 | 216 | 180 | 2,013 |

Note: clickhouse(patched) is a version of ClickHouse server patched to disable the XDBC bridge health check. jdbc-dual, on the other hand, means two instances of JDBC bridge managed by Docker Swarm on the same KVM (due to limited resources).
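The Throughput column follows directly from the run length: 100,000 requests divided by the time spent. With a fixed number of concurrent users, Little's law also predicts the mean latency as concurrency * 1000 / throughput in milliseconds, which lines up closely with the Mean column. A small Java sketch of both calculations, with hypothetical method names:

```java
// Hypothetical helpers for reading the benchmark table.
final class AbStats {
    // Requests per second over the whole run.
    static double throughput(long requests, double secondsSpent) {
        return requests / secondsSpent;
    }

    // Little's law: mean time in system = users in system / throughput, in milliseconds.
    static double meanLatencyMillis(int concurrentUsers, double requestsPerSecond) {
        return concurrentUsers * 1000.0 / requestsPerSecond;
    }

    // Relative throughput loss of `candidate` versus `baseline`, as a fraction.
    static double throughputDrop(double baseline, double candidate) {
        return 1.0 - candidate / baseline;
    }
}
```

For example, `throughput(100_000, 797.775)` is about 125.35 req/s and `throughput(100_000, 925.087)` about 108.10 req/s, matching the two constant-query rows; with 20 users, `meanLatencyMillis` gives about 159.6 ms and 185.0 ms, close to the 159 ms and 185 ms in the table. By the same arithmetic, the jdbc constant-query run has roughly 13.8% lower throughput than querying ClickHouse directly.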

| Test Case | (Decoded) URL |
|---|---|
| clickhouse_ping | `http://ch-server:8123/ping` |
| jdbc-bridge_ping | `http://jdbc-bridge:9019/ping` |
| clickhouse_url(clickhouse) | `http://ch-server:8123/?query=select * from url('http://ch-server:8123/ping', CSV, 'results String')` |
| clickhouse_url(jdbc-bridge) | `http://ch-server:8123/?query=select * from url('http://jdbc-bridge:9019/ping', CSV, 'results String')` |
| clickhouse_constant-query | `http://ch-server:8123/?query=select 1` |
| clickhouse_constant-query(mysql) | `http://ch-server:8123/?query=select * from mysql('mariadb:3306', 'test', 'constant', 'root', 'root')` |
| clickhouse_constant-query(remote) | `http://ch-server:8123/?query=select * from remote('ch-server:9000', system.constant, 'default', '')` |
| clickhouse_constant-query(url) | `http://ch-server:8123/?query=select * from url('http://ch-server:8123/?query=select 1', CSV, 'results String')` |
| clickhouse_constant-query(jdbc) | `http://ch-server:8123/?query=select * from jdbc('mariadb', 'constant')` |
| clickhouse_10k-rows-query | `http://ch-server:8123/?query=select 1` |
| clickhouse_10k-rows-query(mysql) | `http://ch-server:8123/?query=select * from mysql('mariadb:3306', 'test', '10k_rows', 'root', 'root')` |
| clickhouse_10k-rows-query(remote) | `http://ch-server:8123/?query=select * from remote('ch-server:9000', system.10k_rows, 'default', '')` |
| clickhouse_10k-rows-query(url) | `http://ch-server:8123/?query=select * from url('http://ch-server:8123/?query=select * from 10k_rows', CSV, 'results String')` |
| clickhouse_10k-rows-query(jdbc) | `http://ch-server:8123/?query=select * from jdbc('mariadb', 'small-table')` |